The singleton cluster is created, and we have a single disk-based filesystem. To turn it into a NAS, we have to enable Cluster Export Services (CES), which manages the NFS and SMB exports and the highly available floating IP address. We'll pick an IP address in the same subnet as my regular network. You could also use a dedicated network for NAS access, but we won't bother with that now.
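Before assigning the floating address, it can help to sanity-check it. The POSIX shell function below is a minimal sketch that assumes a /24 netmask (as in this 192.168.178.x example network). It only compares the first three octets and rejects the node's own address. It cannot tell whether the address is already in use elsewhere on the network. The function name `ces_ip_ok` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the candidate CES IP is in the same /24
# network as the node IP and is not the node IP itself.
# Assumes a /24 netmask; it does NOT check whether the address is in use.
ces_ip_ok() {
  node_ip=$1
  ces_ip=$2
  # ${var%.*} drops the last octet, leaving the /24 network prefix
  [ "${node_ip%.*}" = "${ces_ip%.*}" ] && [ "$node_ip" != "$ces_ip" ]
}

# Example with the addresses used in this post
if ces_ip_ok 192.168.178.199 192.168.178.200; then
  echo "192.168.178.200 looks usable as the CES address"
else
  echo "pick another CES address" >&2
fi
```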

First, we create a directory in the filesystem for the CES configuration and status data and set it as the CES shared root. Then we designate our system as a CES node and assign a floating IP address:

```
# mkdir /nas1/ces
# mmchconfig cesSharedRoot=/nas1/ces
# mmchnode --ces-enable -N `hostname`
# mmces address add --ces-node `hostname` --ces-ip 192.168.178.200
```

```
# mmlscluster --ces

GPFS cluster information
========================
  GPFS cluster name:         scalenode1
  GPFS cluster id:           17518691519147820244

Cluster Export Services global parameters
-----------------------------------------
  Shared root directory:                /nas1/ces
  Enabled Services:                     None
  Log level:                            0
  Address distribution policy:          even-coverage

 Node  Daemon node name            IP address       CES IP address list
-----------------------------------------------------------------------
   1   scalenode1                  192.168.178.199  192.168.178.200
```

If there are no Active Directory (AD) servers in your environment, you can still use SMB (Windows file sharing), but you'll need to create users manually with the smbpasswd command. NFS relies on local user accounts anyway, so we can configure authentication as standalone, or, as Spectrum Scale calls it, "userdefined" (a.k.a. your problem).

Enable standalone authentication for CES as follows:

```
# mmuserauth service create --data-access-method file --type userdefined
```

With the CES definitions in place, we can enable and start NFS and SMB, and then check the cluster health:

```
# mmces service enable NFS
# mmces service enable SMB
# mmhealth cluster show
```

Next, let's set up the GUI. We start by enabling performance data collection:

```
# mmperfmon config generate --collectors=`hostname`
# mmchnode --perfmon -N `hostname`
```

Then we create a GUI user and start the GUI:

```
# /usr/lpp/mmfs/gui/cli/mkuser admin -g SecurityAdmin
# systemctl enable gpfsgui --now
```

After a minute (or two), the GUI is available at https://your-hostname.

In the GUI, we can create a fileset and export it using NFS and SMB. A fileset is a subdivision of the filesystem that is used to separate content and provides an easy way to manage file data with policies. Filesets can be dependent or independent: a dependent fileset shares the inode space (metadata) of its parent, while an independent fileset has its own inode space.

In the GUI, go to "Files", "Filesets" and create a dependent fileset called Documents:

The fileset is created and linked automatically. The progress dialog shows the commands that were run. Next, we can export the fileset.
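For reference, the GUI step can also be reproduced on the command line with mmcrfileset and mmlinkfileset. The sketch below only prints the commands so they can be reviewed first. It assumes the filesystem device is named nas1 (check with `mmlsmount all`), and `fileset_cmds` is a hypothetical helper name. Adding `--inode-space new` to mmcrfileset would create an independent fileset instead.

```shell
#!/bin/sh
# Hypothetical helper: print the commands that create and link a dependent
# fileset, mirroring the GUI step. Assumes the device name is nas1.
fileset_cmds() {
  fs=$1        # filesystem device name (assumed: nas1)
  name=$2      # fileset name, e.g. Documents
  junction=$3  # directory where the fileset appears in the namespace
  # Without --inode-space new, mmcrfileset creates a dependent fileset
  printf 'mmcrfileset %s %s\n' "$fs" "$name"
  # Linking attaches the fileset at the junction path
  printf 'mmlinkfileset %s %s -J %s\n' "$fs" "$name" "$junction"
}

fileset_cmds nas1 Documents /nas1/Documents
```

Once the output looks right, it can be executed on the Scale node with `fileset_cmds nas1 Documents /nas1/Documents | sh`.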

Sharing with SMB

For SMB access, we first need to add your user account to the local SMB database. Your user also needs to exist as a UNIX user on scalenode1.

```
# smbpasswd -a maarten
New SMB password:
Retype new SMB password:
Added user maarten.
```

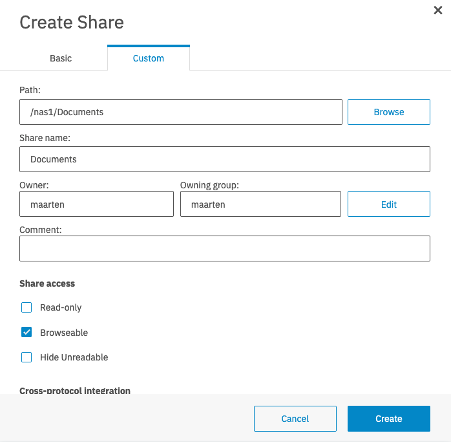
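If you prefer the command line to the GUI steps below, the SMB export can be created with mmsmb. This is a sketch that prints the commands for this example's share name (Documents) and path (/nas1/Documents). `smb_export_cmds` is a hypothetical helper name, and no extra share options are set.

```shell
#!/bin/sh
# Hypothetical helper: print the mmsmb commands that create an SMB export
# and list the exports afterwards. No additional share options are set.
smb_export_cmds() {
  share=$1       # share name that clients see, e.g. Documents
  share_path=$2  # directory in the Spectrum Scale filesystem
  printf 'mmsmb export add %s %s\n' "$share" "$share_path"
  # Show the configured exports to verify the result
  printf 'mmsmb export list\n'
}

smb_export_cmds Documents /nas1/Documents
```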

To create an SMB share, go to "Protocols", "SMB Exports", and hit "Create Export":

Hit "Create". You can check your exports with:

```
# mmsmb export list
# net conf list
```

Now you can access the share from Windows, Linux, and MacOS:

- Linux: `sudo mount -t cifs //192.168.178.200/Documents /mnt/GPFS_Documents -o port=445,user=maarten`
- Windows: `net use G: \\192.168.178.200\Documents /user:maarten`
- MacOS: `mount -t smbfs //maarten@192.168.178.200:445/Documents /Users/maarten/GPFS_Documents`

Sharing with NFS

To create an NFS share, go to "Protocols", "NFS Exports", and hit "Create Export":

In this example, I allow read/write access, including root access, to all systems in my subnet. For more security, select "Root Squash", set the anonymous UID and GID to -2, and enable "Privileged port".
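The same export can be sketched with mmnfs from the command line. The helper below is hypothetical and only prints the command. It assumes the client subnet is 192.168.178.0/24 and sets only the access type and squash mode. The anonymous UID/GID and privileged port settings are also client options, but check the mmnfs documentation for their exact attribute names rather than relying on this sketch.

```shell
#!/bin/sh
# Hypothetical helper: print an mmnfs command that exports a path read/write
# to a client network. Assumes the subnet 192.168.178.0/24 for this example.
# Squash defaults to no_root_squash (root access, as in the GUI example);
# pass root_squash for the more secure variant.
nfs_export_cmd() {
  export_path=$1
  clients=$2
  squash=${3:-no_root_squash}
  printf 'mmnfs export add %s --client "%s(Access_Type=RW,Squash=%s)"\n' \
    "$export_path" "$clients" "$squash"
}

nfs_export_cmd /nas1/Documents 192.168.178.0/24
nfs_export_cmd /nas1/Documents 192.168.178.0/24 root_squash
```

After running the printed command on the Scale node, `mmnfs export list` shows the result.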

With the export done, we can try to mount the NFS share. Because NFS identifies users by their numeric UNIX IDs, we need to set the correct ownership on the root directory of the export. The user and group IDs used on the client must match the ones used at the filesystem level, which takes a bit of coordination. Linux and MacOS work in similar ways; Windows needs a bit more work.

Linux/MacOS client:

Get your user ID (UID) and group ID (GID) on the Linux or MacOS command line:

```
% id
uid=501(maarten) gid=20(staff)
```

Set the ownership of the share on the Spectrum Scale system:

```
# chown 501:20 /nas1/Documents
```

You can also do this in the GUI, using the "custom" tab of the create fileset window, or by right-clicking an existing fileset and modifying it.
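Because the IDs come from the client but the chown runs on the Spectrum Scale node, a small helper on the client can print the exact command to run there. This is a sketch that assumes the export root is /nas1/Documents as in this example; `chown_cmd` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: run on the Linux/MacOS client; prints the chown
# command to run as root on the Spectrum Scale node, using this client
# user's numeric IDs. Assumes the export root /nas1/Documents.
chown_cmd() {
  export_root=$1
  uid=$(id -u)  # numeric user ID, e.g. 501
  gid=$(id -g)  # numeric primary group ID, e.g. 20
  printf 'chown %s:%s %s\n' "$uid" "$gid" "$export_root"
}

chown_cmd /nas1/Documents
```

For the user in this example, it prints `chown 501:20 /nas1/Documents`.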

Open a Terminal session, create a mount point, and mount the share:

```
% mkdir ~/NasDocuments
% mount -o nolock 192.168.178.200:/nas1/Documents /Users/maarten/NasDocuments
```

Windows client:

We need to enable the NFS client feature and tell it which UNIX user and group ID to use when accessing Spectrum Scale. You'll need the UID and GID that own the root of your export; this may be "0" (root) if you left the ownership at its default.

Open "Programs and Features", select "Turn Windows features on or off", enable "Services for NFS", and click OK.

Open the regedit tool, and go to: HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\ClientForNFS\CurrentVersion\Default

- Create a new DWORD (32-bit) Value inside the Default key named AnonymousUid and assign the UID as a decimal value.

- Create a new DWORD (32-bit) Value inside the Default key named AnonymousGid and assign the GID as a decimal value.

- Reboot your Windows system (you probably saw this coming).
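Instead of clicking through regedit, the two values can also be created with the Windows `reg add` command from an elevated Command Prompt, before the reboot. The shell sketch below prints those commands for a given UID and GID. The values 501 and 20 are taken from the MacOS example above and are assumptions for your setup; `nfs_registry_cmds` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print the two Windows "reg add" commands that create
# the AnonymousUid and AnonymousGid DWORD values for the NFS client.
# UID and GID are passed as decimal numbers (assumed example: 501 and 20).
nfs_registry_cmds() {
  uid=$1
  gid=$2
  # Single quotes keep the registry path's backslashes literal
  key='HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\ClientForNFS\CurrentVersion\Default'
  # /t REG_DWORD with a decimal /d value; /f overwrites without prompting
  printf 'reg add "%s" /v AnonymousUid /t REG_DWORD /d %s /f\n' "$key" "$uid"
  printf 'reg add "%s" /v AnonymousGid /t REG_DWORD /d %s /f\n' "$key" "$gid"
}

nfs_registry_cmds 501 20
```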

Now you can mount the NFS share from the Command Prompt:

```
mount -o nolock \\192.168.178.200\nas1\Documents Z:
```

The next blog post covers adding and replacing disks (NSDs): Part 3: Extending the NAS.
