In this article, we will show how to connect a PLC on the shop floor to end-user applications using webMethods.

The following components will be used:

- Prosys OPC UA Simulator to simulate a PLC

- webMethods Integration Server 11.1

- webMethods OPC connector

- webMethods Designer

- Kafka

- InfluxDB

- Telegraf

- Grafana

- Docker

- Nginx

1 - Prosys OPC-UA Simulator

First, request a download of the Prosys OPC UA Simulator and install it: https://prosysopc.com/products/opc-ua-simulation-server/

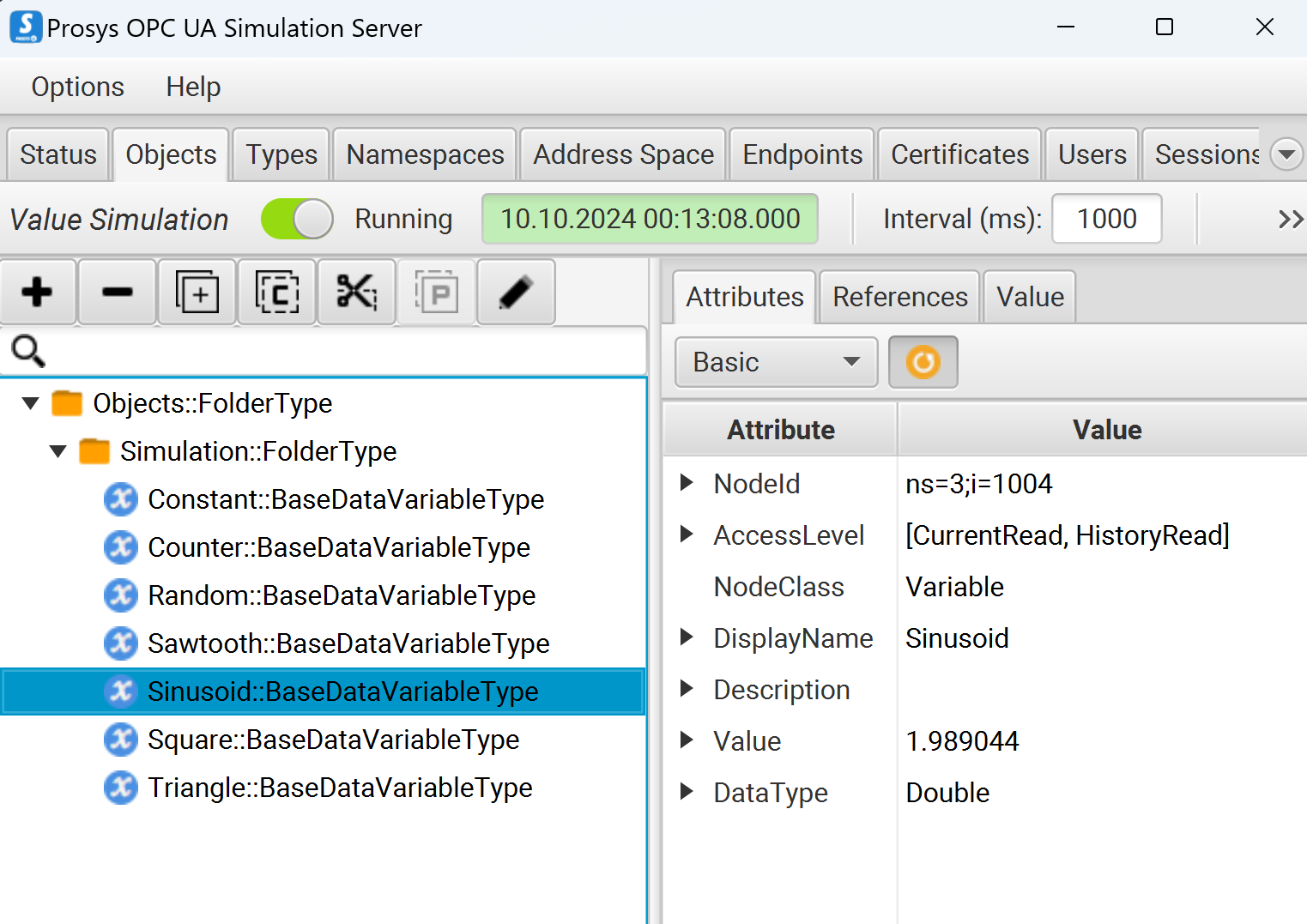

Once started, ensure the server status is green. Then go to the "Objects" tab and ensure that the Objects/Simulation/Sinusoid variable is updated every second.

2 - Install the Kafka + InfluxDB + Grafana stack

Use the following Docker Compose file:

    version: '3'

    services:
      zookeeper:
        container_name: zookeeper
        image: bitnami/zookeeper
        environment:
          ALLOW_ANONYMOUS_LOGIN: 'yes'
        ports:
          - "2181:2181"

      kafka:
        container_name: kafka
        image: bitnami/kafka:3.7.0
        depends_on:
          - zookeeper
        ports:
          - "29092:29092"
          - "9092:9092"
        environment:
          KAFKA_BROKER_ID: 1
          KAFKA_CFG_ZOOKEEPER_CONNECT: zookeeper:2181
          KAFKA_CFG_LISTENERS: INTERNAL://:9092,EXTERNAL://0.0.0.0:29092
          KAFKA_CFG_ADVERTISED_LISTENERS: INTERNAL://kafka:9092,EXTERNAL://localhost:29092
          KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP: INTERNAL:PLAINTEXT,EXTERNAL:PLAINTEXT
          KAFKA_CFG_INTER_BROKER_LISTENER_NAME: INTERNAL
          KAFKA_CFG_AUTO_CREATE_TOPICS_ENABLE: 'true'
          ALLOW_PLAINTEXT_LISTENER: 'yes'

      kafka-ui:
        container_name: kafkaui
        image: provectuslabs/kafka-ui:latest
        depends_on:
          - kafka
        ports:
          - 8080:8080
        environment:
          KAFKA_CLUSTERS_0_NAME: local
          KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS: kafka:9092
          KAFKA_CLUSTERS_0_ZOOKEEPER: zookeeper:2181

      grafana:
        image: grafana/grafana-enterprise
        container_name: grafana
        restart: always
        ports:
          - '3000:3000'
        depends_on:
          - telegraf
        environment:
          - GF_SECURITY_ADMIN_USER=admin
          - GF_SECURITY_ADMIN_PASSWORD=admin

      influxdb:
        image: docker.io/influxdb
        container_name: influxdb
        restart: always
        ports:
          - '8086:8086'
        env_file:
          - ./influxv2.env

      telegraf:
        image: telegraf
        container_name: telegraf
        restart: always
        depends_on:
          - influxdb
          - kafka-ui
        volumes:
          - ./telegraf.conf:/etc/telegraf/telegraf.conf:ro
        env_file:
          - ./influxv2.env

      nginx:
        image: nginx
        ports:
          - 8089:80
        volumes:
          - ./nginx.conf:/etc/nginx/conf.d/default.conf
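
The two Kafka listeners are easy to mix up, so here is a small Python sketch (not part of the stack) that parses the KAFKA_CFG_ADVERTISED_LISTENERS value from the Compose file and picks the bootstrap address for a given client location. The function names and the "inside the Compose network or not" flag are illustrative assumptions.

```python
"""Sketch: which Kafka bootstrap address a client should use."""

# Value copied from KAFKA_CFG_ADVERTISED_LISTENERS in the Compose file.
ADVERTISED_LISTENERS = "INTERNAL://kafka:9092,EXTERNAL://localhost:29092"


def parse_listeners(value: str) -> dict[str, str]:
    """Turn 'NAME://host:port,...' into {'NAME': 'host:port'}."""
    listeners = {}
    for entry in value.split(","):
        name, _, address = entry.strip().partition("://")
        listeners[name] = address
    return listeners


def bootstrap_for(client_in_compose_network: bool) -> str:
    """Containers use INTERNAL; processes on the Docker host use EXTERNAL."""
    listeners = parse_listeners(ADVERTISED_LISTENERS)
    return listeners["INTERNAL" if client_in_compose_network else "EXTERNAL"]


print(bootstrap_for(True))   # e.g. Telegraf and kafka-ui
print(bootstrap_for(False))  # e.g. an Integration Server running on the host
```

This is why Telegraf and kafka-ui are configured with kafka:9092, while a client running outside Docker connects on port 29092.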

The Telegraf configuration (telegraf.conf file), which makes Telegraf consume events from Kafka and write them to InfluxDB, looks like this:

    [agent]
      ## Default data collection interval for all inputs
      interval = "10s"

      ## Rounds collection interval to 'interval'
      ## ie, if interval="10s" then always collect on :00, :10, :20, etc.
      round_interval = true

      ## Telegraf will send metrics to outputs in batches of at most
      ## metric_batch_size metrics.
      ## This controls the size of writes that Telegraf sends to output plugins.
      metric_batch_size = 1000

      ## For failed writes, telegraf will cache metric_buffer_limit metrics for each
      ## output, and will flush this buffer on a successful write. Oldest metrics
      ## are dropped first when this buffer fills.
      ## This buffer only fills when writes fail to output plugin(s).
      metric_buffer_limit = 10000

      ## Collection jitter is used to jitter the collection by a random amount.
      ## Each plugin will sleep for a random time within jitter before collecting.
      ## This can be used to avoid many plugins querying things like sysfs at the
      ## same time, which can have a measurable effect on the system.
      collection_jitter = "0s"

      ## Default flushing interval for all outputs. Maximum flush_interval will be
      ## flush_interval + flush_jitter
      flush_interval = "10s"

      ## Jitter the flush interval by a random amount. This is primarily to avoid
      ## large write spikes for users running a large number of telegraf instances.
      ## ie, a jitter of 5s and interval 10s means flushes will happen every 10-15s
      flush_jitter = "0s"

      ## By default or when set to "0s", precision will be set to the same
      ## timestamp order as the collection interval, with the maximum being 1s.
      ## ie, when interval = "10s", precision will be "1s"
      ## when interval = "250ms", precision will be "1ms"
      ## Precision will NOT be used for service inputs. It is up to each individual
      ## service input to set the timestamp at the appropriate precision.
      ## Valid time units are "ns", "us" (or "µs"), "ms", "s".
      precision = ""

      ## Logging configuration:
      ## Run telegraf with debug log messages.
      debug = false

      ## Run telegraf in quiet mode (error log messages only).
      quiet = false

      ## Specify the log file name. The empty string means to log to stderr.
      logfile = ""

      ## Override default hostname, if empty use os.Hostname()
      hostname = ""

      ## If set to true, do not set the "host" tag in the telegraf agent.
      omit_hostname = false

    [[outputs.influxdb_v2]]
      ## The URLs of the InfluxDB cluster nodes.
      urls = ["http://influxdb:8086"]

      ## Token for authentication.
      token = "${DOCKER_INFLUXDB_INIT_ADMIN_TOKEN}"

      ## Organization is the name of the organization you wish to write to; must exist.
      organization = "${DOCKER_INFLUXDB_INIT_ORG}"

      ## Destination bucket to write into.
      bucket = "${DOCKER_INFLUXDB_INIT_BUCKET}"

      insecure_skip_verify = true

    [[inputs.kafka_consumer]]
      ## Kafka brokers.
      brokers = ["kafka:9092"]

      ## Topics to consume.
      topics = ["simulator"]

      max_message_len = 1000000
      data_format = "value"
      data_type = "string"

    [[processors.converter]]
      [processors.converter.fields]
        float = ["value"]

The important part is at the end, where we tell Telegraf to read the incoming values as strings and convert them to floats.

We do this because the OPC-UA server sends doubles, which are not supported by InfluxDB, so the Integration Server first converts them to strings before sending them to Kafka.

The IS could convert them to floats itself, but this way we have an example of how to convert data using Telegraf :)
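
To make the conversion concrete, here is a minimal Python sketch of what the last two Telegraf sections do conceptually. It is an illustration only, not Telegraf's implementation, and the function names are made up for this example.

```python
"""Sketch of the string-to-float step configured in telegraf.conf."""


def parse_value_message(payload: bytes) -> dict:
    """Mimic data_format = "value" with data_type = "string":
    the whole Kafka message becomes a single field named "value"."""
    return {"value": payload.decode("utf-8").strip()}


def convert_to_float(fields: dict, names=("value",)) -> dict:
    """Mimic [[processors.converter]] with float = ["value"]."""
    converted = dict(fields)
    for name in names:
        if name in converted:
            converted[name] = float(converted[name])
    return converted


# A Kafka message as the Integration Server would publish it (sample data).
fields = convert_to_float(parse_value_message(b"0.58778525"))
print(fields)
```

After this step, the field is numeric, so InfluxDB and Grafana can plot it as a time series.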

The Nginx configuration (nginx.conf file), which is used in our case so that Grafana can call webMethods without CORS issues, looks like this:

    server {
        proxy_set_header Host $http_host;

        location / {
            proxy_pass http://grafana:3000;
        }

        location /wm {
            proxy_pass http://172.17.0.1:5555/grafana;
        }
    }
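
As a rough illustration of how these two locations route requests, the following Python sketch reproduces nginx's prefix matching and proxy_pass path handling for this specific configuration. It is a simplified model, not a general nginx emulator; 172.17.0.1 is assumed to be the Docker bridge gateway, that is, the host where the Integration Server listens on port 5555.

```python
"""Sketch: how the two nginx locations rewrite request paths."""

# (location prefix, upstream base, URI part of proxy_pass or None)
LOCATIONS = [
    ("/wm", "http://172.17.0.1:5555", "/grafana"),
    ("/", "http://grafana:3000", None),
]


def upstream_url(path: str) -> str:
    """Pick the longest matching prefix location and build the upstream URL."""
    prefix, base, uri = max(
        (loc for loc in LOCATIONS if path.startswith(loc[0])),
        key=lambda loc: len(loc[0]),
    )
    if uri is None:
        # proxy_pass without a URI: the request path is forwarded unchanged.
        return base + path
    # proxy_pass with a URI: the matched prefix is replaced by that URI.
    return base + uri + path[len(prefix):]


print(upstream_url("/wm"))      # goes to the Integration Server
print(upstream_url("/d/demo"))  # goes to Grafana
```

Because both Grafana and the webMethods endpoint are served from the same origin (port 8089), the browser does not treat the form submission as a cross-origin request.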

Finally, the environment file used by InfluxDB and Telegraf (influxv2.env) looks like this:

    DOCKER_INFLUXDB_INIT_MODE=setup
    DOCKER_INFLUXDB_INIT_USERNAME=admin
    DOCKER_INFLUXDB_INIT_PASSWORD=ThisIsNotThePasswordYouAreLookingFor
    DOCKER_INFLUXDB_INIT_ORG=webMethods
    DOCKER_INFLUXDB_INIT_BUCKET=opcua
    DOCKER_INFLUXDB_INIT_ADMIN_TOKEN=influxdbtoken
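
The same file is loaded by both the influxdb and telegraf containers, which is how the ${...} placeholders in telegraf.conf get their values. The Python sketch below illustrates that substitution on a few of the variables; it only approximates what Telegraf does when it loads its configuration, and the helper names are made up.

```python
"""Sketch: expanding ${VAR} placeholders with values from influxv2.env."""
from string import Template

# A subset of influxv2.env, inlined so the example is self-contained.
ENV_FILE = """\
DOCKER_INFLUXDB_INIT_ORG=webMethods
DOCKER_INFLUXDB_INIT_BUCKET=opcua
DOCKER_INFLUXDB_INIT_ADMIN_TOKEN=influxdbtoken
"""


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            key, _, value = line.partition("=")
            env[key] = value
    return env


def expand(config_line: str, env: dict[str, str]) -> str:
    """Substitute ${NAME} references with the matching environment value."""
    return Template(config_line).substitute(env)


env = parse_env(ENV_FILE)
print(expand('bucket = "${DOCKER_INFLUXDB_INIT_BUCKET}"', env))
```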

3 - webMethods Integration Server

3.1 Installation

Next, download and install webMethods Integration Server 11.1 and the Update Manager. I won't go through the installation and update process, but make sure that the OPC connector is installed and up to date. The Prosys JAR files should be provided with the latest fix of the package.

3.2 Package creation

Once the Integration Server is up and running, open Designer and create a new package, with a folder in that package, that we'll use for our example. Then go to the administration page and start configuring the OPC adapter.

3.3 OPC Adapter configuration

Copy the TCP URL of the OPC UA simulator from the "Status" tab and paste it into the Server URI field when creating a new adapter connection. You should know what to put in the other fields if you're familiar with webMethods.

Next, click the LookUp button; this should automatically fill in the Endpoint URI.

Set the authentication mode to anonymous. In the end, the configuration should look like this:

Note that in my case I had to replace the hostname with the host IP, since my IS is running in WSL while the OPC-UA Simulator is running on the Windows host.
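
If you need to do the same hostname-to-IP substitution, this small Python sketch shows the idea. The endpoint URL and the IP address used here are hypothetical examples; use the URL shown in your simulator's "Status" tab and your own host IP.

```python
"""Sketch: swapping the hostname of an OPC UA endpoint URL for an IP."""
from urllib.parse import urlsplit, urlunsplit


def with_host(endpoint: str, new_host: str) -> str:
    """Return the endpoint with its host replaced, keeping port and path."""
    parts = urlsplit(endpoint)
    netloc = new_host if parts.port is None else f"{new_host}:{parts.port}"
    return urlunsplit(
        (parts.scheme, netloc, parts.path, parts.query, parts.fragment)
    )


# Hypothetical values for illustration only.
original = "opc.tcp://DESKTOP-PC:53530/OPCUA/SimulationServer"
print(with_host(original, "192.168.1.10"))
```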

Next, configure a listener. Nothing special is needed here; just ensure it is enabled in the admin page.

Go back to Designer and create a "Data Change Notification" adapter notification that uses this listener. In the "add items" tab, add a row, then select "Objects/Simulation/Sinusoid".

In the adapter settings, we'll use the "IS_LOCAL_CONNECTION" connection as we don't need an external broker for this simple demo.

Back on the admin page, ensure that the listener notification is enabled.

Next, create an adapter service that uses the "Write" template. In the "Extended Node Id" field, select "Objects/Simulation/Constant". Change the input field type to java.lang.String[].

Next, create a service that takes an object as input, converts it to a string, and passes that string as input to the previously configured adapter service. Don't forget to set the array index, as the adapter service expects an array.

Test your service to check that the constant value is indeed updated in the OPC UA Simulator.

Finally, create a REST resource with a POST method that will call your service, and, using the admin page, map this REST resource to an alias called "grafana" in "Settings/URL aliases".
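
Before wiring up Grafana, you may want to test the REST resource directly. The Python sketch below builds such a POST request with the standard library. It makes several assumptions: the IS listens on localhost:5555 (the port used in nginx.conf), the JSON field name "value" is hypothetical and must match whatever your service expects, and your IS may additionally require credentials, which are not shown here.

```python
"""Sketch: building a POST request for the "grafana" URL alias."""
import json
import urllib.request

# Assumption: IS reachable on the port used in nginx.conf, alias "grafana".
IS_URL = "http://localhost:5555/grafana"


def build_update_request(value: float, url: str = IS_URL) -> urllib.request.Request:
    """Create (but do not send) a JSON POST carrying the new constant."""
    # "value" is a hypothetical field name; adapt it to your service input.
    body = json.dumps({"value": value}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def send(request: urllib.request.Request) -> int:
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status


req = build_update_request(42)
print(req.get_method(), req.full_url, req.data)
```

Calling send(req) performs the actual request once the Integration Server is running.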

3.4 Streaming configuration

Now it is time to configure streaming in the IS admin.

Go to Streaming/Provider Settings and create a new configuration alias. Use "http://localhost:29092" as the provider URI and ensure that the client prefix is unique.

Save, and you should be able to enable the connection.

Create a new event specification. Just ensure the topic is "simulator" and that the value type is "string".

Back in Designer, create a new "webMethods Messaging Trigger" that listens to the document type created during the adapter notification creation.

Make it call a service that converts the values from the OPC-UA server (//data[]/value) to strings, using the objectToString service, and sends the result to Kafka using the "pub.streaming:send" service.
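
The logic of that service is simple enough to sketch in a few lines of Python. This is only an illustration of the conversion step: the notification document layout shown here is a guess based on the //data[]/value path, and the actual publishing to Kafka is left out.

```python
"""Sketch: turning the notification's data[]/value entries into strings."""


def values_as_strings(document: dict) -> list[str]:
    """Convert every data[]/value entry to its string form."""
    return [str(item["value"]) for item in document.get("data", [])]


# Hypothetical notification document with two data change entries.
sample = {"data": [{"value": 0.5}, {"value": -1.25}]}
print(values_as_strings(sample))
```

Each resulting string is what ends up as a message on the "simulator" topic, which is why Telegraf is configured with data_type = "string".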

Now, if you open the InfluxDB UI, you should be able to see data coming in: open http://localhost:8086 and go to "Data Explorer".
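
If you prefer to check from a script rather than the UI, the following Python sketch builds a Flux query against the InfluxDB v2 HTTP API using the organization, bucket, and token from influxv2.env. It only constructs the request; the 5-minute window and the filter on the "value" field are assumptions about what you want to see.

```python
"""Sketch: building a Flux query request for the InfluxDB v2 API."""
import urllib.parse
import urllib.request


def build_flux_query(bucket: str = "opcua", window: str = "-5m") -> str:
    """Return a Flux query selecting recent points of the "value" field."""
    return (
        f'from(bucket: "{bucket}")\n'
        f"  |> range(start: {window})\n"
        '  |> filter(fn: (r) => r._field == "value")'
    )


def build_query_request(
    token: str,
    org: str = "webMethods",
    base_url: str = "http://localhost:8086",
) -> urllib.request.Request:
    """Create (but do not send) a POST to /api/v2/query."""
    url = f"{base_url}/api/v2/query?" + urllib.parse.urlencode({"org": org})
    return urllib.request.Request(
        url,
        data=build_flux_query().encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Token {token}",
            "Content-Type": "application/vnd.flux",
            "Accept": "application/csv",
        },
    )


request = build_query_request("influxdbtoken")
print(request.full_url)
print(request.data.decode("utf-8"))
```

Passing the request to urllib.request.urlopen returns the matching points as CSV once data is flowing.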

4 - Grafana

Go to Grafana at http://localhost:8089 and install the "business forms" plugin.

Configure an InfluxDB data source with http://influxdb:8086 as the URL, webMethods as the organization, and opcua as the bucket, then copy and paste the token from the env file.

Create a new dashboard with a panel that displays the incoming data and refreshes automatically every 10s.

Add another panel using the Business Form Widget. Add a form element with "Number Slider" type. In the "Update Request" part, select POST as an "Update Action" and http://localhost:8089/wm as the URL.

Apply the change and check that moving the slider and submitting the change updates the constant in the OPC-UA Simulator.

Congratulations, you're done!

------------------------------

Cyril Poder

------------------------------