Last week, I had the chance to give a talk at the 2019 FOC Wide Business Intelligence Summit. My talk covered two themes: the future of data science as a convergence of humans and machines, and how to prepare your AI for the next wave of regulations by building trust and transparency into the ML lifecycle.

As AI spreads across industries and institutions such as health care, finance, technology, mobility, social media and the criminal justice system, the probability of unintended consequences and potential harms grows with it. In autonomous driving, we need clear rules on liability for accidents and torts, and on who or what is responsible for an incident. In health care, the concerns include liability for malpractice in cancer screening, how data is collected, and potential bias in treatment data sets. In the financial sector, we must avoid unintended bias in credit and lending decisions, and in their implications for health and other insurance products.

Regulation will likely emerge to address the societal concerns and harms brought about by AI. The law has evolved to deal with human mistakes, but AI is expected to make different kinds of mistakes. We need to account for both human-induced and AI-induced errors while still promoting the potential benefits of AI.

For example, an algorithm called COMPAS was used in the U.S. criminal justice system to score defendants' risk of reoffending, and it was later found to contain unintended bias. Rashida Richardson, director of policy research at the AI Now Institute in New York City, said: “In my experience, in conversations around AI, people will talk about it like it’s just a list of pros and cons, but that’s a moment of power and privilege to be able to determine that it’s okay that some folks are disproportionally harmed.”

Another example, reported by Reuters: Amazon worked on building an artificial intelligence tool to help with hiring, but the plans backfired when the company discovered the system discriminated against women. Joy Buolamwini of the MIT Media Lab said: “We have entered the age of automation overconfident, yet underprepared. If we fail to make ethical and inclusive artificial intelligence, we risk losing gains made in civil rights and gender equity under the guise of machine neutrality.”

We need to recognize that the choices we make in building new technologies can have direct social ramifications. The key solution is education: Cornell, Harvard, MIT, Stanford and the University of Texas are beginning to require computer science majors to take courses on ethics and morality in computer science.

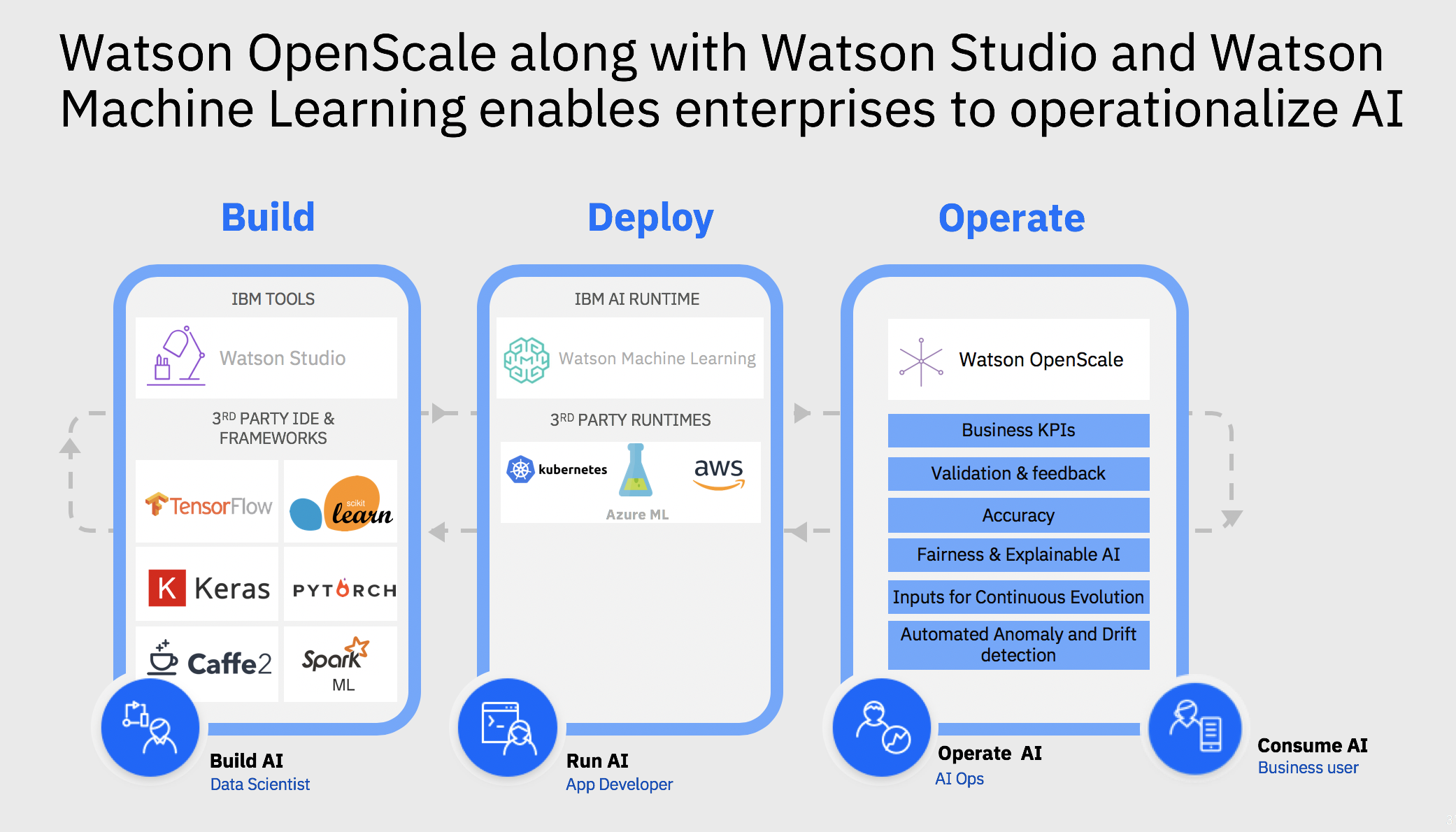

I see IBM as a pioneer in tackling this problem, working toward machine learning algorithms whose decisions come with trust and transparency. IBM has spent significant resources on finding more diverse data sets for model training. It has also open-sourced "AI Fairness 360", a toolkit that helps with bias detection and is a good place to start getting a feel for how to overcome bias. "Watson OpenScale" adds model explainability, which helps us understand why an algorithm came up with a particular decision. "Watson OpenScale" has many other features as well. It works with models that have already been operationalized in production to track and measure the impact of AI on business outcomes, drive fair outcomes, explain decisions in order to comply with regulations and govern AI, and adapt AI to changing business situations.
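To give a feel for what "bias detection" means in practice, here is a minimal sketch of two group-fairness metrics that AI Fairness 360 reports: disparate impact and statistical parity difference. This is plain Python, not the AIF360 API. The function names and the hiring data are hypothetical and serve only as illustration. The sketch assumes binary outcomes (1 = favorable) and a single protected attribute.

```python
from typing import Hashable, Sequence


def selection_rate(outcomes: Sequence[int], groups: Sequence[Hashable], group: Hashable) -> float:
    """Fraction of favorable outcomes (label 1) among members of `group`."""
    members = [o for o, g in zip(outcomes, groups) if g == group]
    if not members:
        raise ValueError(f"no records for group {group!r}")
    return sum(members) / len(members)


def disparate_impact(outcomes, groups, unprivileged, privileged) -> float:
    """Ratio of selection rates, unprivileged / privileged (1.0 means parity)."""
    privileged_rate = selection_rate(outcomes, groups, privileged)
    if privileged_rate == 0:
        # The ratio is undefined when the privileged group never gets a favorable outcome.
        raise ValueError("privileged group has no favorable outcomes")
    return selection_rate(outcomes, groups, unprivileged) / privileged_rate


def statistical_parity_difference(outcomes, groups, unprivileged, privileged) -> float:
    """Difference of selection rates, unprivileged - privileged (0.0 means parity)."""
    return selection_rate(outcomes, groups, unprivileged) - selection_rate(outcomes, groups, privileged)


if __name__ == "__main__":
    # Hypothetical screening decisions: 1 = advanced to interview, 0 = rejected.
    groups = ["F"] * 10 + ["M"] * 10
    outcomes = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0] + [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]

    di = disparate_impact(outcomes, groups, unprivileged="F", privileged="M")
    spd = statistical_parity_difference(outcomes, groups, unprivileged="F", privileged="M")
    print(f"disparate impact: {di:.2f}")
    print(f"statistical parity difference: {spd:.2f}")
    # Common "four-fifths" rule of thumb: a ratio below 0.8 is treated as a warning sign.
    if di < 0.8:
        print("possible adverse impact against group F")
```

With this made-up data, 3 of 10 women and 6 of 10 men advance. The sketch therefore prints a disparate impact of 0.50 and a statistical parity difference of -0.30, which would be flagged under the four-fifths rule of thumb. AIF360 computes these and many other metrics on its own dataset classes, and it also offers algorithms to mitigate the bias it finds.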

https://github.com/IBM/AIF360

http://aif360.mybluemix.net/community

http://aif360.mybluemix.net/

#GlobalAIandDataScience #GlobalDataScience