IBM® watsonx.ai is a next-generation enterprise studio for AI builders, bringing new generative AI capabilities powered by foundation models, in addition to machine learning capabilities. With watsonx.ai, businesses can effectively train, deploy, validate, and govern AI models with confidence and at scale across their enterprise.

There are several main components in watsonx.ai:

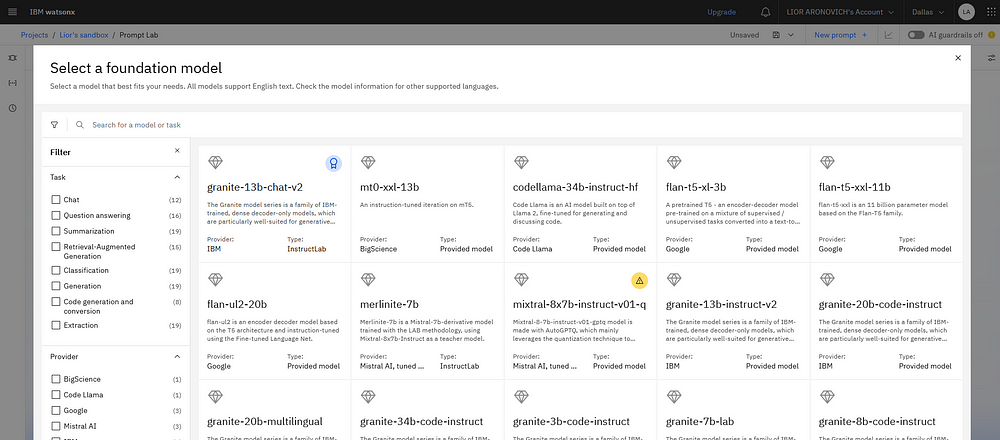

Foundation models: Clients have access to IBM-trained foundation models, IBM-selected open-source models, and third-party models of different sizes, architectures, and specializations. The foundation models in watsonx.ai support a variety of AI tasks, including text generation, classification, summarization, question answering, extraction, translation, and code generation. You can filter and select models based on their supported AI tasks, provider, and model type.

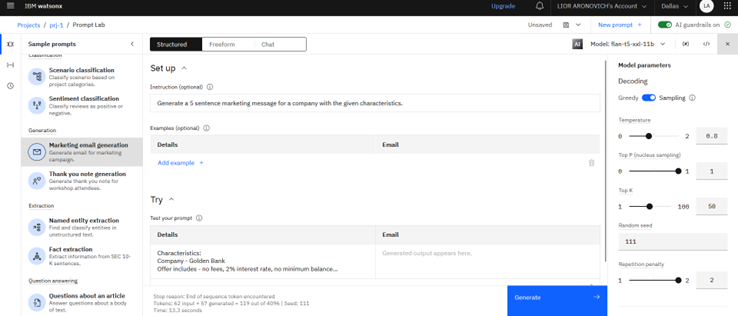

Prompt Lab: Enables AI builders to work with foundation models and build prompts using prompt engineering. Prompt Lab provides templates for building prompts for various AI tasks. The templates include prompt structures as well as settings for inference parameters.

Prompt Lab provides three prompt-building modes: structured (with sections for setup instructions, examples, and actions), free-form, and chat. You can use different models for your prompts and specify settings for inference parameters. You can save, manage, and reuse prompts, with or without history, and as notebooks. You can also define and use dynamic prompt variables, and apply automatic detection of HAP (hateful, abusive, or profane content) and PII (personally identifiable information) to the input and output text. For more information, see the Prompt Lab documentation.
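To make the structured mode and prompt variables described above more concrete, here is a minimal sketch in plain Python. It does not call any watsonx.ai API. The function name `build_structured_prompt`, the `Input:`/`Output:` labels, and the `$variable` placeholder syntax (from Python's `string.Template`) are assumptions chosen for illustration and may differ from what Prompt Lab uses.

```python
from string import Template


def build_structured_prompt(instruction, examples, input_template, variables):
    """Assemble an instruction, few-shot examples, and the input to complete.

    Hypothetical helper for illustration only; not part of any IBM SDK.
    """
    lines = [instruction.strip(), ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # Fill the dynamic variables; substitute() raises KeyError if one is missing.
    filled_input = Template(input_template).substitute(variables)
    lines.append(f"Input: {filled_input}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_structured_prompt(
    instruction="Classify the sentiment of each review as Positive or Negative.",
    examples=[
        ("The setup was quick and painless.", "Positive"),
        ("Support never answered my ticket.", "Negative"),
    ],
    input_template="The $product stopped working after $days days.",
    variables={"product": "router", "days": 3},
)
print(prompt)
```

Keeping the instruction, examples, and variable values separate means the same prompt can be reused with different inputs by changing only the `variables` mapping.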

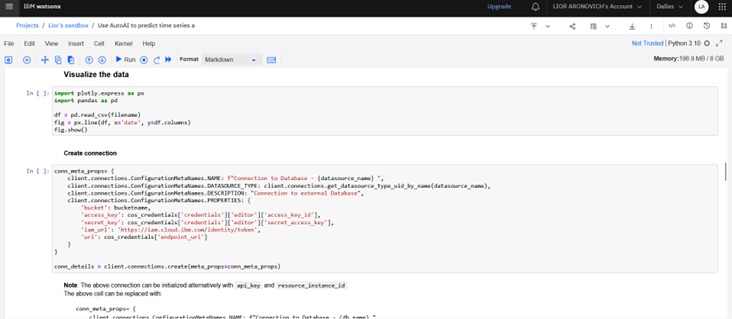

Work with notebooks and IDEs: You can work interactively with notebooks, with access to assets and connections available in your projects, as well as access to the inference APIs of watsonx.ai. You can define and schedule jobs to run notebooks, as well as use notebooks as components inside workflow pipelines.

You can also work in IDEs such as VS Code and JupyterLab to develop, run, and test code. The IDE connects to a Python runtime environment inside the secure scope of a project, so you can run code in that context with access to the assets available in your project.

Tuning Studio: Enables you to prompt-tune foundation models for better efficiency and accuracy, without retraining the underlying foundation model or updating its weights. Prompt tuning adapts a model to the content and format of your data by adding tuning information to the base model (in the form of a prefix added to the prompt). Prompt tuning is an efficient and low-cost method for adapting a foundation model to new downstream tasks. Once the model is tuned, it can be deployed and used via Prompt Lab or via the API. For more information, see the Tuning Studio documentation.
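The idea of prompt tuning can be sketched with a toy example. This is not how Tuning Studio is implemented: the "model" below is a stand-in that scores the mean of its input vectors with a fixed weight vector, and all names and numbers are hypothetical. What it shows is the division of labor: the base weights stay frozen, and gradient descent updates only a small set of prefix vectors that are prepended to the embedded prompt.

```python
DIM = 4

# Frozen "base model" (toy assumption): a fixed weight vector that scores
# the mean of the input vectors. It is never updated during tuning.
frozen_weights = [0.5, -0.3, 0.8, 0.1]

# Embedded prompt tokens (fixed input) and a trainable prefix of two vectors.
prompt_vectors = [
    [0.2, 0.1, -0.4, 0.3],
    [0.0, 0.5, 0.1, -0.2],
    [-0.3, 0.2, 0.2, 0.1],
]
prefix = [[0.0] * DIM for _ in range(2)]
target = 1.0  # desired model output for this prompt


def score(vectors):
    """Dot product of the frozen weights with the mean input vector."""
    mean = [sum(column) / len(vectors) for column in zip(*vectors)]
    return sum(w * m for w, m in zip(frozen_weights, mean))


def loss(prefix_vectors):
    """Squared error of the model output when the prefix is prepended."""
    return (score(prefix_vectors + prompt_vectors) - target) ** 2


weights_before = list(frozen_weights)
initial_loss = loss(prefix)

learning_rate = 0.5
n = len(prefix) + len(prompt_vectors)
for _ in range(200):
    error = score(prefix + prompt_vectors) - target
    for vector in prefix:
        for j in range(DIM):
            # d(loss)/d(prefix[i][j]) = 2 * error * frozen_weights[j] / n
            vector[j] -= learning_rate * 2 * error * frozen_weights[j] / n

final_loss = loss(prefix)
print(f"loss before tuning: {initial_loss:.4f}, after tuning: {final_loss:.2e}")
print("base weights unchanged:", frozen_weights == weights_before)
```

Because only the prefix is trained, the artifact produced by tuning is small compared with the base model, which is why this approach is cheaper than retraining or fine-tuning all weights.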

Data Science and MLOps: Tools, pipelines, and runtimes that support building ML models automatically and automate the full lifecycle from development to deployment. Pipelines automate the processes for building, training, and deploying models, supporting a wide range of data sources, automated building blocks, and model monitoring. Visual tools let you develop applications using visual data science. AutoAI automates data preparation, model development, feature engineering, and hyperparameter optimization. Synthetic Data Generator lets you generate synthetic tabular data based on your existing data or custom data schemas.