In Part 1 of this series, we went through the steps required to take our DayTrader application from our on-premises environment and get it running on Open Liberty in OpenShift. At the time, we were only interested in getting the application running in a development context. Now let's restore some of the qualities of service the application relied on and bring the deployment closer to a pre-production level of functionality.

What We Have Already Done

So far, we have a single instance of DayTrader running on Open Liberty in OpenShift. We achieved this using the configuration generation feature of the binary scanner to generate Open Liberty configuration for the JDBC, JMS, and security resources we knew were needed just to get DayTrader to function.

We then gathered all the configuration and binaries, wrote a Dockerfile to produce an application image based on the Open Liberty image with all features included, and used the Open Liberty Operator to deploy that image by creating an OpenLibertyApplication custom resource. With this, we were able to use DayTrader just fine: the application can still reach all of its required on-premises services, such as DB2 and MQ.

That's where we left it. The basics are working, but there's still more we need to bring over to recreate the full deployment we had on-premises.

What We Need To Do

Operational Considerations

When we moved from WebSphere Network Deployment (ND) to Open Liberty, we weren't able to bring over certain qualities of service provided by the ND deployment, because Open Liberty is designed to be lightweight and composable. Instead of providing those features internally, Open Liberty integrates with external providers of these qualities of service.

To get an overview of what we're missing and what to do about it, we can use the Migration Toolkit for Application Binaries, just as we did to generate configuration. This time, we're going to look at a specific section of the inventory report called Operational Considerations. Let's generate an inventory report the same way we generated our configuration:

```console
[root@onprem-appserver-a1 was]# java -jar binaryAppScanner.jar /was/WAS90/profiles/appDmgr/config/cells/appCell/applications/DayTrader3.ear/DayTrader3.ear --inventory --output=blog
Scanning files..........................
The report was saved to the following file: /was/blog/DayTrader3.ear_InventoryReport.html
```

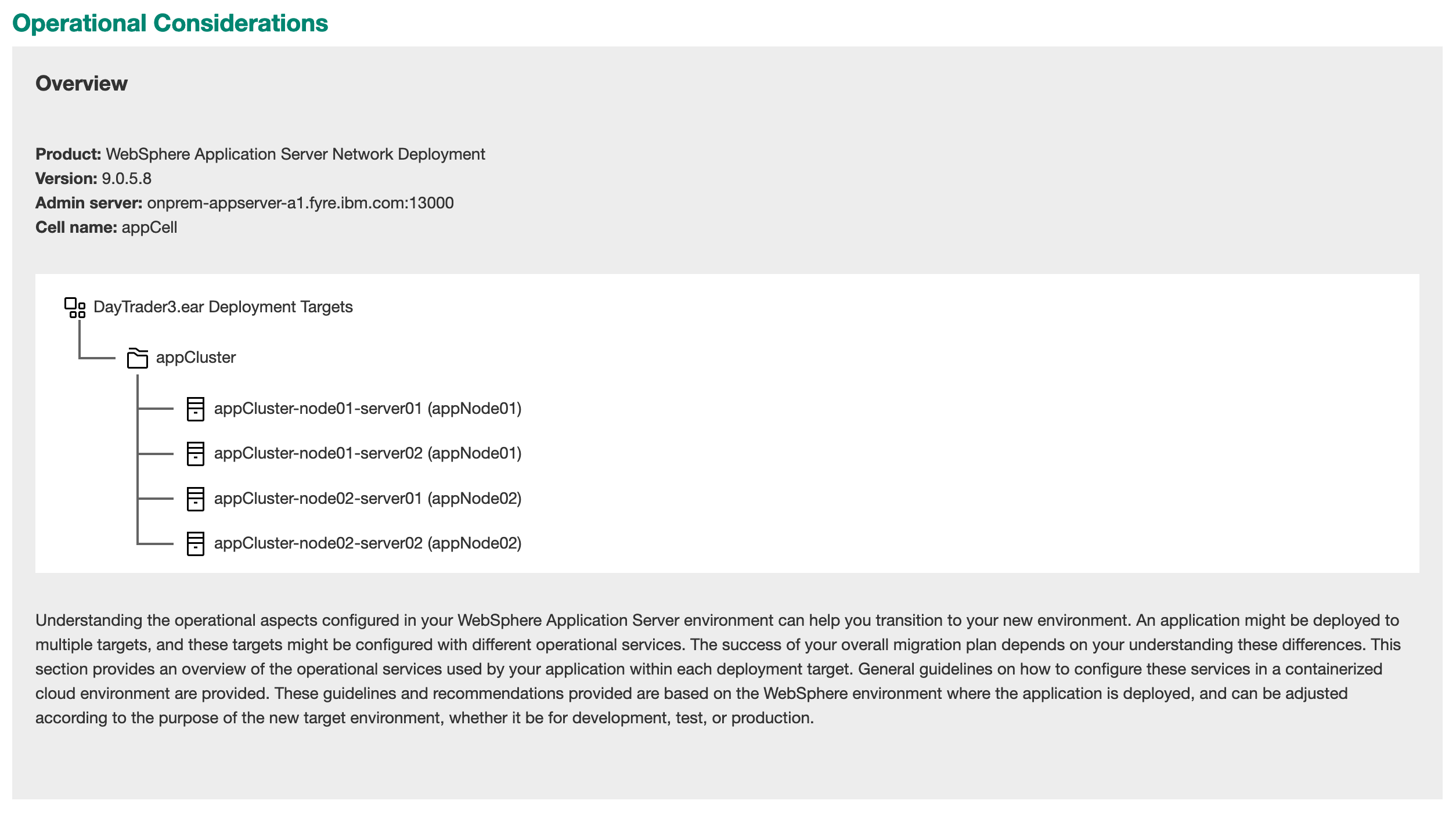

The Operational Considerations section starts with an overview showing basic information about the environment (WebSphere version, hostname, cell name) and an overview of the deployment topology. We can see that DayTrader was deployed to one target, a cluster, and that cluster had 4 member servers.

Next, it discusses each identified quality of service in the deployment. For each area, we get a description of the quality of service being used by the application, and some suggestions on how to achieve that quality of service on Open Liberty.

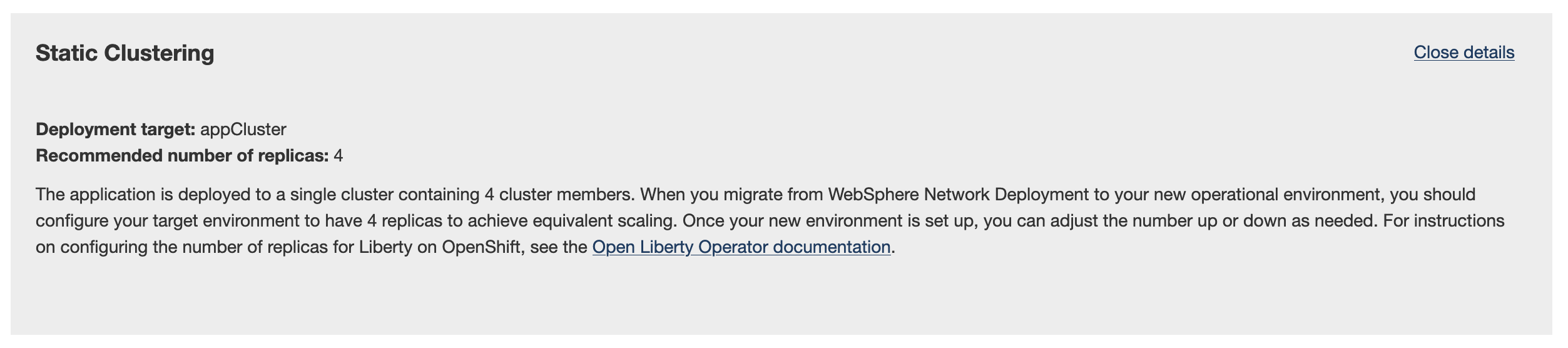

The first section is Static Clustering. It tells us that our previous cluster had 4 members, so we should start our performance tuning at 4 replicas and investigate from there. Since the on-premises cluster existed to provide high availability, we'll take the recommendation and deploy 4 replicas.

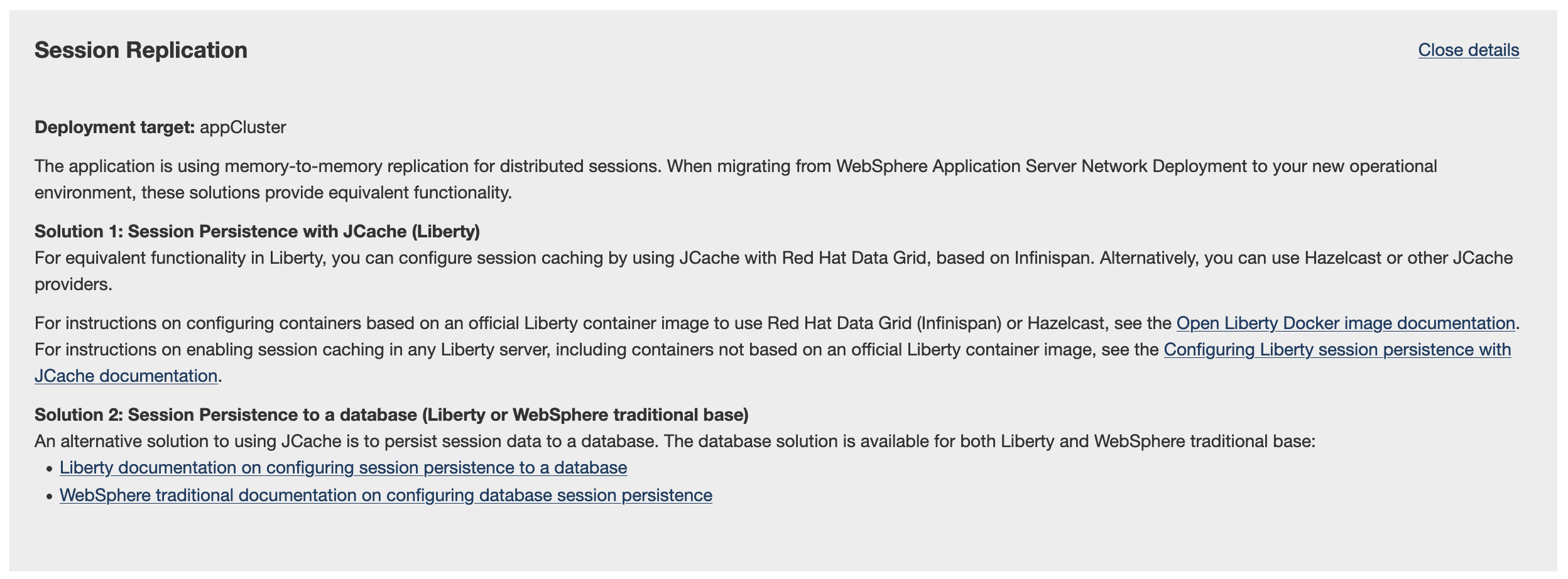

The second section is Session Replication. In our previous deployment, we used memory-to-memory session replication among the cluster members to keep sessions alive even when a user's requests reach a different server partway through a session. Here we have multiple options to consider: we can set up a JCache-compliant persistence provider, or we can set up a relational database to persist session data. We'll use a JCache provider for our session persistence. This will require setting up a caching provider within the OpenShift cluster and updating the Dockerfile to enable the JCache integration.

Optimization

When we created the development container image for our application, we based it on the official Open Liberty image tag full-java11-openj9-ubi. This means that every available Open Liberty feature is stored in the image, even the ones we'll never use. That makes the image faster to build, which we like when we're changing the app often to test it, but it also makes the image larger, so it's slower to download and start.

For our pre-production deployment, we'll switch to using the Open Liberty image tag kernel-slim-java11-openj9-ubi. We'll have to update our Dockerfile to install the features we need for DayTrader, but the end result will be a smaller, more efficient image that can restart and scale up much faster.

Reconfiguration

Our development deployment continues to rely on external services hosted on-premises, such as DB2 and MQ. In our pre-production environment, we'd like our MQ server to be much closer to the application itself, so we'll reconfigure the deployment to talk to the cluster's MQ queue manager instead of the current on-premises one. Since the generated configuration externalized the connection details for all resources, we won't have to change any Open Liberty configuration files or the Dockerfile. We'll just set the new values as environment variables in a ConfigMap and reference it from the OpenLibertyApplication.

Summary

That's a lot to take care of. Let's boil it down. We'll:

- set up a JCache provider in the cluster, and update the Dockerfile to enable JCache integration;

- update the Dockerfile to install only the Open Liberty features we need for DayTrader;

- create a ConfigMap with the connection details of our OpenShift-deployed MQ queue manager; and

- update the OpenLibertyApplication to specify the correct number of replicas, and to reference the ConfigMap we'll create to talk to our cluster-deployed MQ queue manager.

Getting Started

Since there are a few steps that need to be done via the command-line, we'll start by logging in to oc and then to the OpenShift internal image registry via docker.

```console
$ oc login --token=sha256~<your-token> --server=https://api.migr4.cp.fyre.ibm.com:6443
$ docker login -u $(oc whoami) -p $(oc whoami -t) default-route-openshift-image-registry.apps.migr4.cp.fyre.ibm.com
```

With both of these successful, we need to create a new project in OpenShift and then install the Open Liberty Operator into that project. In the OpenShift web console, we'll click Projects, then New Project, and name it "daytrader-preprod". Next, follow the instructions in Part 1 for installing the Open Liberty Operator, making sure to choose the "daytrader-preprod" namespace to deploy into, not "daytrader".

JCache Session Persistence With Infinispan

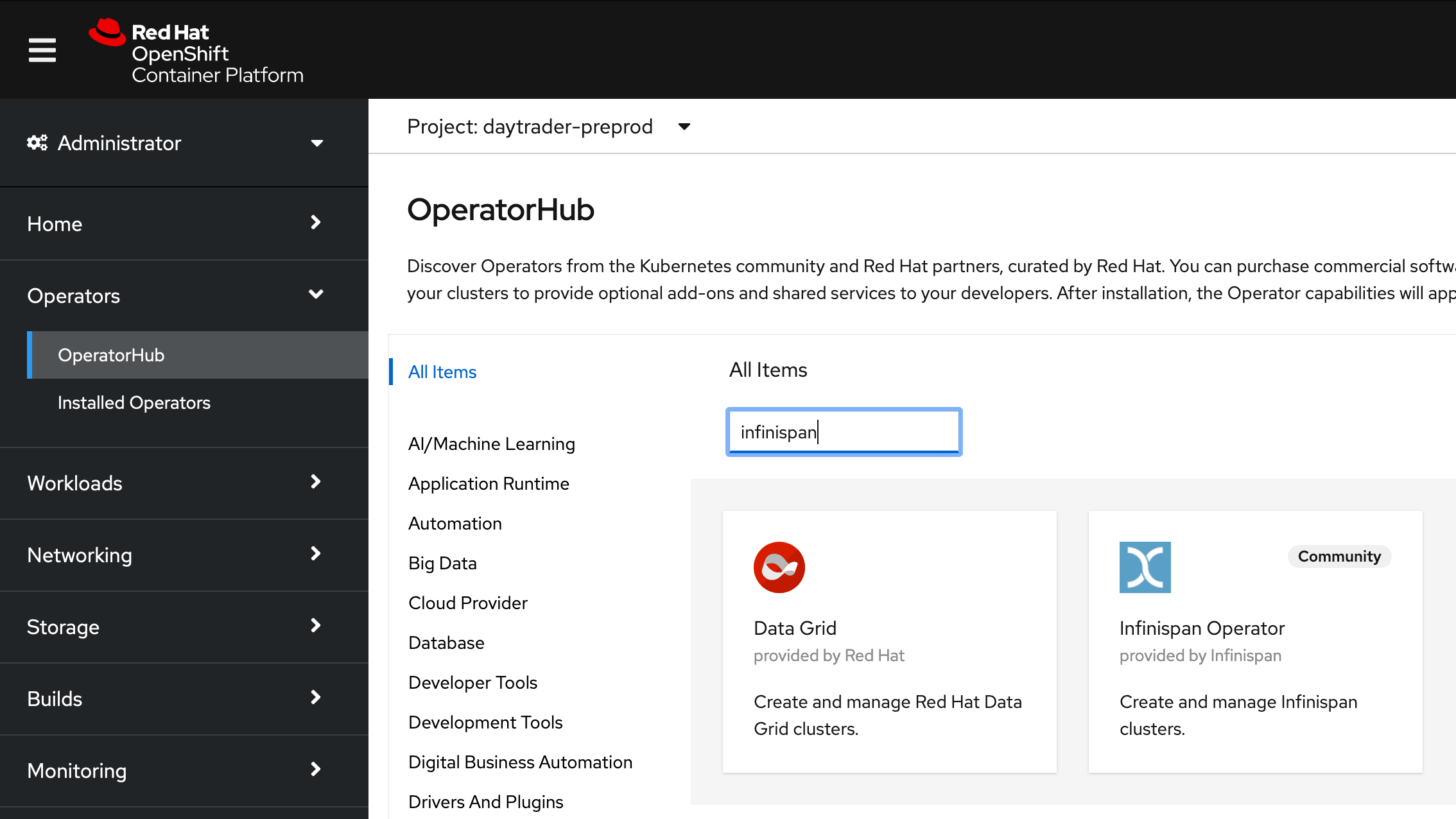

We've chosen to provide session persistence for DayTrader using a JCache-compatible provider. Infinispan is an open-source in-memory data grid with full JCache support. Infinispan is available through OperatorHub, just like Open Liberty.

Deploying Infinispan

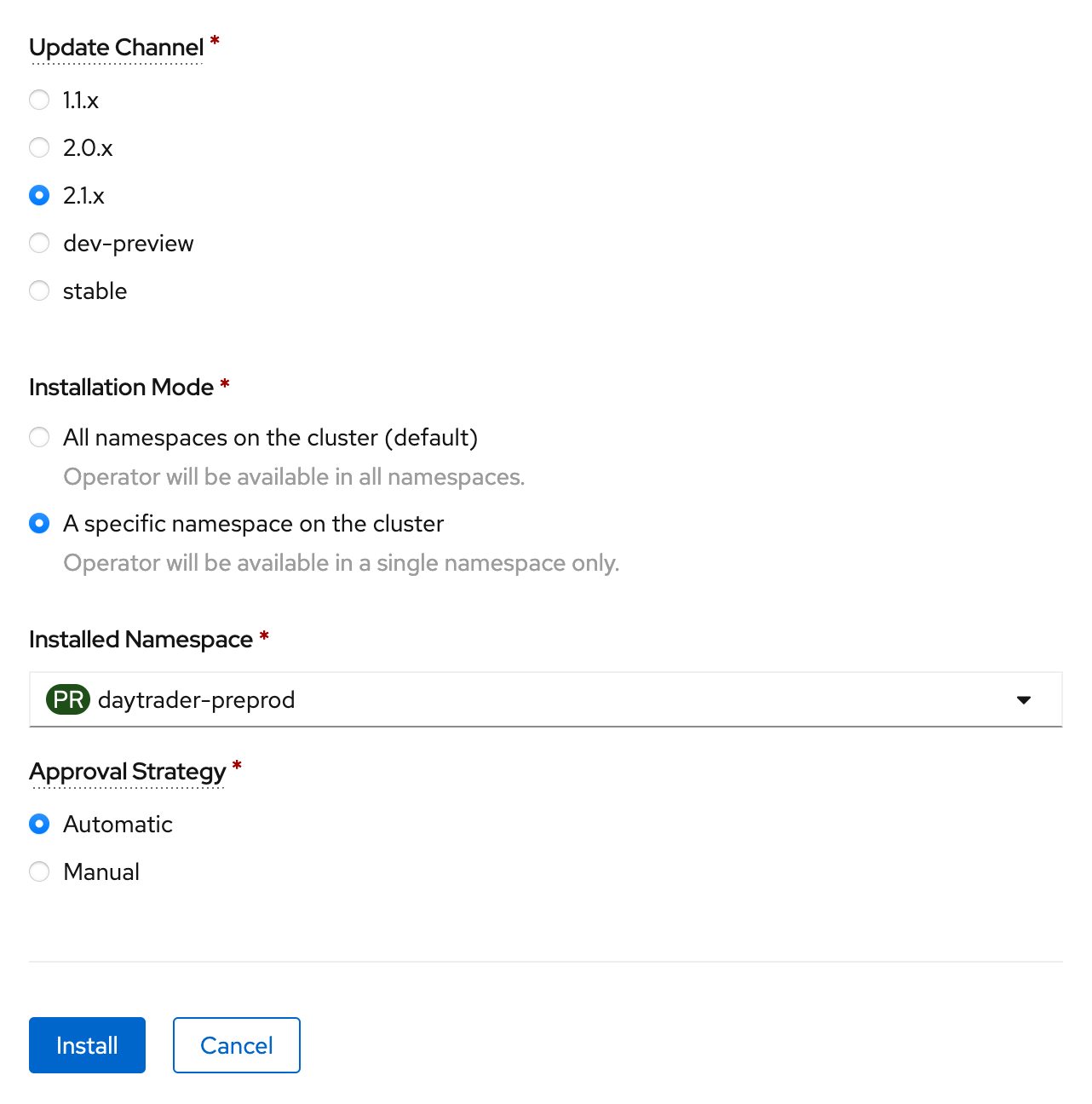

In the left navigation panel, click Operators, then OperatorHub. In the search box under All Items, type "Infinispan". Choose the Infinispan Operator, not the Red Hat Data Grid Operator.

Click Install, then choose the "daytrader-preprod" namespace to deploy into. Leave all other settings default and click Install.

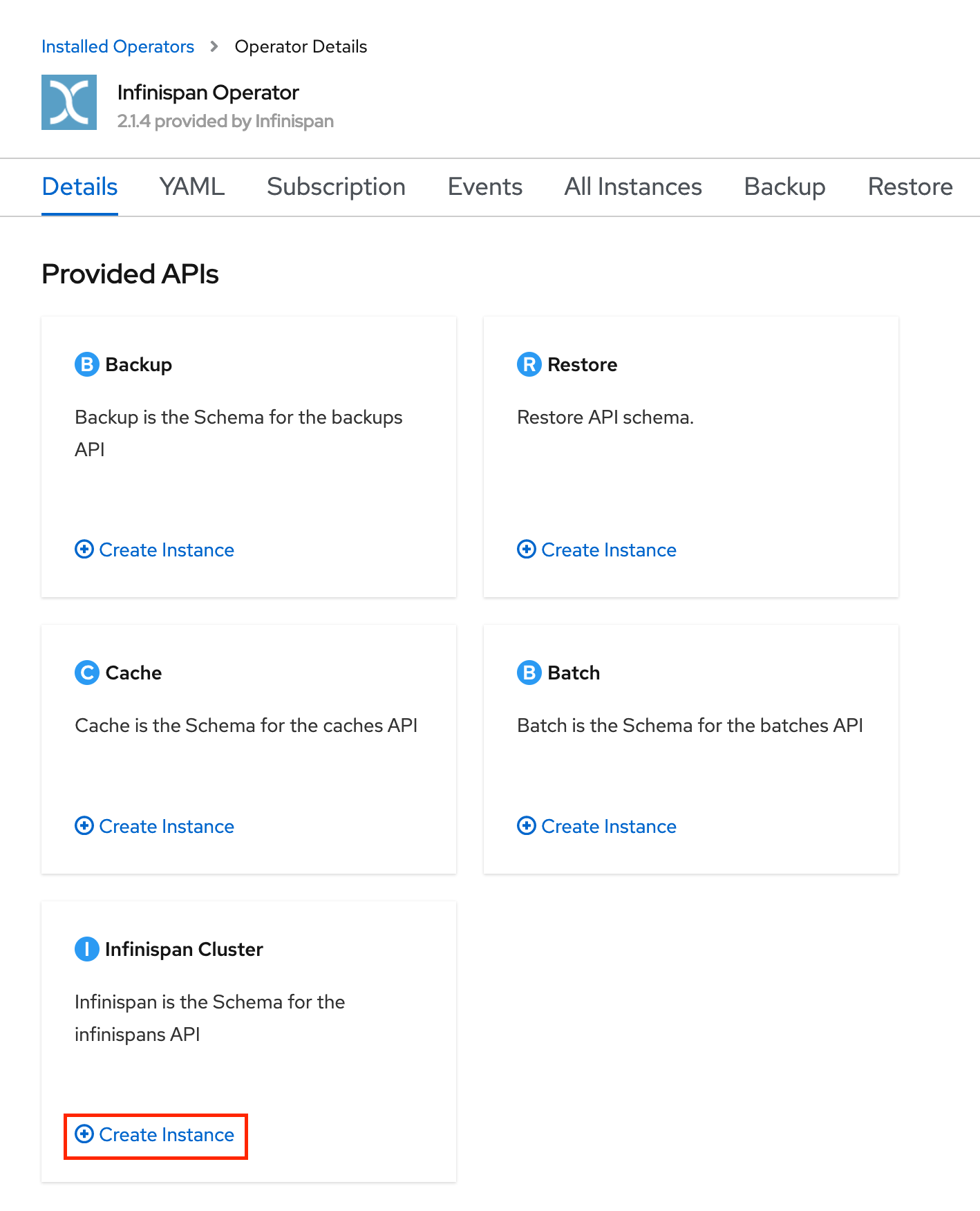

Then click Installed Operators, then Infinispan Operator. On the page for the operator, in the card for Infinispan Cluster, click Create Instance.

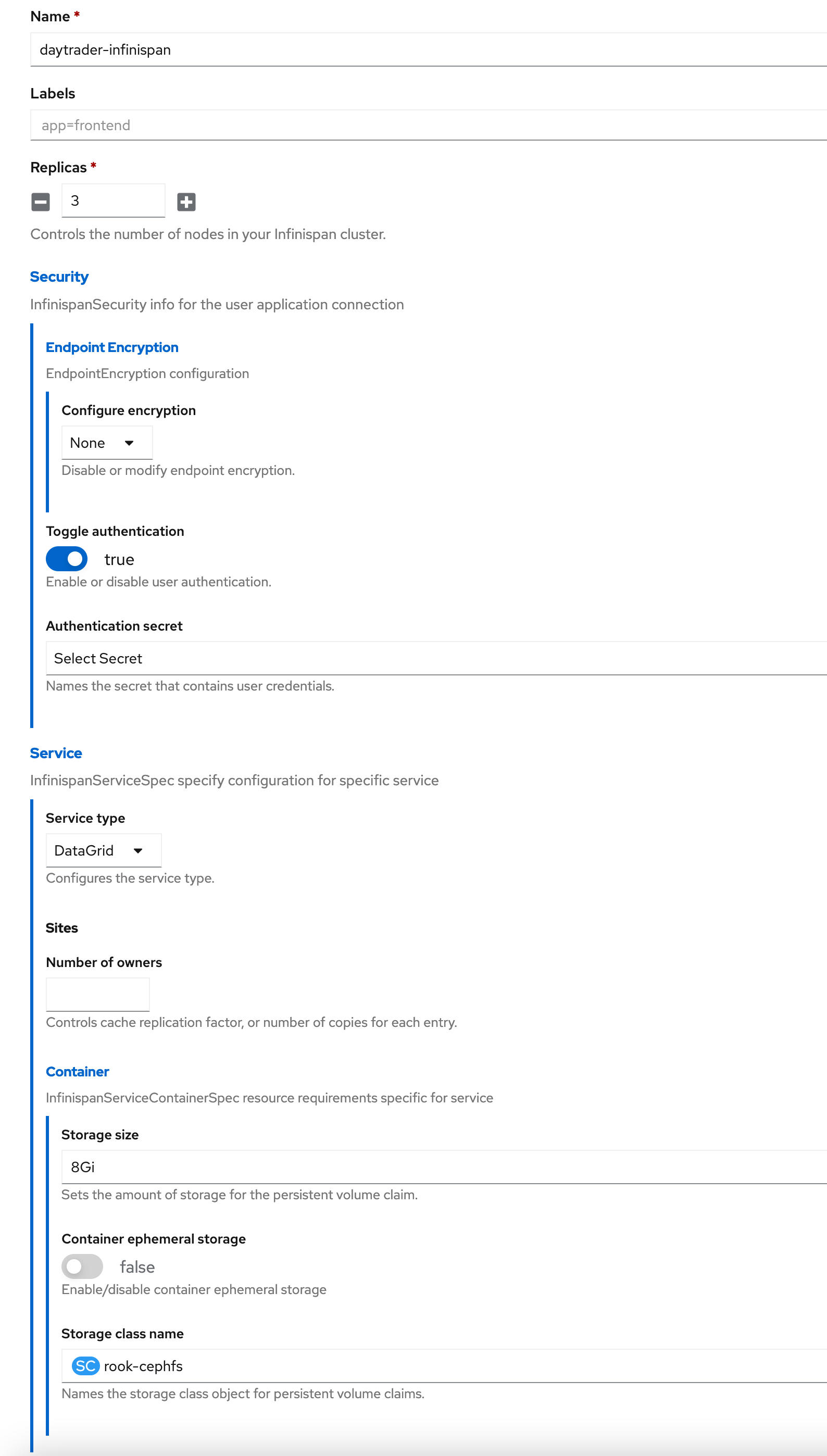

There are many settings you can change here. Make sure to set a name such as daytrader-infinispan; the application image will refer to the Infinispan service by this name. Expand Service, then Container, and set the Storage size if you want more than 1GB of storage. If your cluster has no default storage class, set the Storage class name to a valid one. You can also set the number of replicas for your Infinispan cluster; I chose 3 replicas because I have 3 worker nodes in my cluster. Currently, to work with Open Liberty, you'll also need to disable encryption by setting Configure encryption to None under Security, Endpoint Encryption. The end result should look similar to this image:

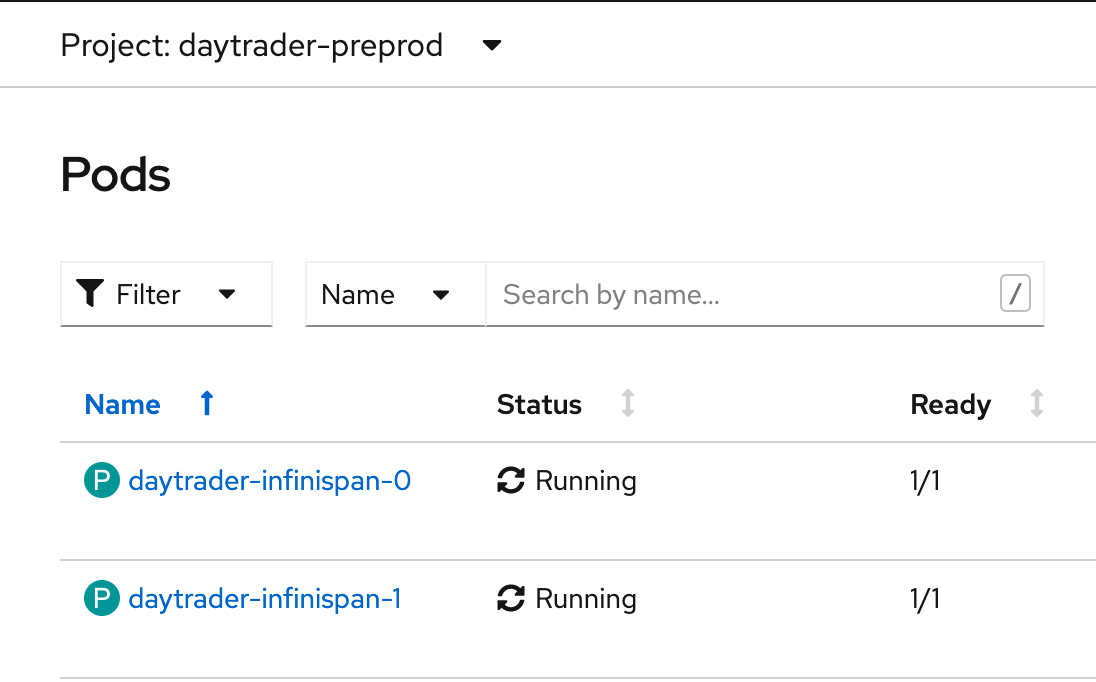

Click Create at the bottom of the page. After a few minutes, your Infinispan pods should be up and running, and your cluster is ready to cache your application's sessions.

Updating The Dockerfile

When we last left off, we had a relatively simple Dockerfile:

```dockerfile
FROM openliberty/open-liberty:full-java11-openj9-ubi
COPY --chown=1001:0 *.jar /opt/ol/wlp/usr/shared/resources/
COPY --chown=1001:0 *.rar /opt/ol/wlp/usr/shared/resources/
COPY --chown=1001:0 *.p12 /opt/ol/wlp/output/defaultServer/resources/security/
COPY --chown=1001:0 DayTrader3.ear_server_sensitiveData.xml /config/
COPY --chown=1001:0 DayTrader3.ear_server.xml /config/server.xml
COPY --chown=1001:0 DayTrader3.ear /config/apps/
RUN configure.sh
```

In order to enable integration between Open Liberty and Infinispan, we'll need to add some more steps to this Dockerfile. The Open Liberty image contains helper scripts for Infinispan and Hazelcast integration; we'll use the Infinispan scripts:

```dockerfile
### Infinispan Session Caching ###
FROM openliberty/open-liberty:kernel-slim-java11-openj9-ubi AS infinispan-client

# Install Infinispan client jars
USER root
RUN infinispan-client-setup.sh
USER 1001

FROM openliberty/open-liberty:full-java11-openj9-ubi

# Copy Infinispan client jars to Open Liberty shared resources
COPY --chown=1001:0 --from=infinispan-client /opt/ol/wlp/usr/shared/resources/infinispan /opt/ol/wlp/usr/shared/resources/infinispan
# Must match the name given to the Infinispan cluster
ENV INFINISPAN_SERVICE_NAME=daytrader-infinispan

COPY --chown=1001:0 *.jar /opt/ol/wlp/usr/shared/resources/
COPY --chown=1001:0 *.rar /opt/ol/wlp/usr/shared/resources/
COPY --chown=1001:0 *.p12 /opt/ol/wlp/output/defaultServer/resources/security/
COPY --chown=1001:0 DayTrader3.ear_server_sensitiveData.xml /config/
COPY --chown=1001:0 DayTrader3.ear_server.xml /config/server.xml
COPY --chown=1001:0 DayTrader3.ear /config/apps/
RUN configure.sh
```

The Dockerfile now begins with a separate build stage that runs a built-in script, infinispan-client-setup.sh, to fetch the Infinispan client dependencies. Then, in the main build stage, in addition to everything the Dockerfile used to do, it copies those dependencies into place and sets INFINISPAN_SERVICE_NAME to the name we gave the Infinispan cluster when we created it. With all this in place, when configure.sh runs, the Infinispan integration will be set up and DayTrader will use it.

Build, tag, and push the image. Be sure to use daytrader-preprod instead of daytrader as the project name in the image tag. Alternatively, you can have OpenShift build the image for you (make sure your terminal is in the directory containing your Dockerfile and other artifacts):

```console
$ oc project daytrader-preprod
$ oc new-build --name=daytrader-preprod --binary --strategy=docker
$ oc start-build daytrader-preprod --from-dir=.
```

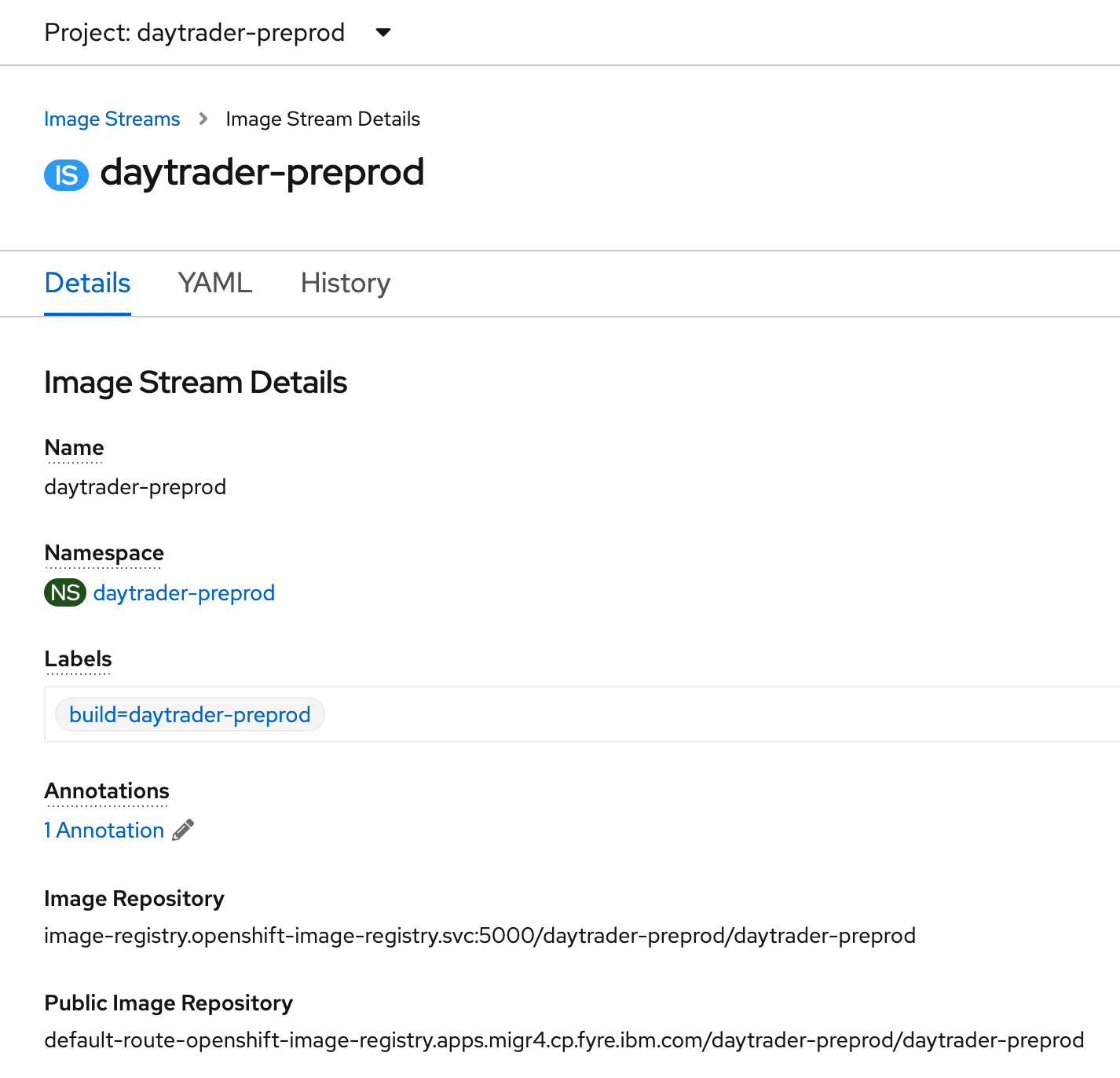

Either way, when the build or push succeeds, you should have a daytrader-preprod image stream.

Let's get DayTrader deployed now, and we'll keep redeploying it after each new change we make. At this point, we need to make changes to our OpenLibertyApplication's olapp.yaml file from before, to specify the new name and image stream and to specify the volume mount for the credentials to connect to Infinispan (which were automatically generated by Infinispan; we don't need to create them manually):

```yaml
apiVersion: openliberty.io/v1beta1
kind: OpenLibertyApplication
metadata:
  name: daytrader-preprod
spec:
  applicationImage: daytrader-preprod:latest
  service:
    type: ClusterIP
    port: 9443
  expose: true
  route:
    termination: passthrough
  env:
    - name: WLP_LOGGING_CONSOLE_FORMAT
      value: dev
  volumes:
    - name: infinispan-secret-volume
      secret:
        secretName: daytrader-infinispan-generated-secret
  volumeMounts:
    - name: infinispan-secret-volume
      readOnly: true
      mountPath: "/platform/bindings/infinispan/secret"
```

Then we can apply it to deploy DayTrader into the pre-production environment (make sure the current project is daytrader-preprod):

```console
$ oc project daytrader-preprod
$ oc apply -f olapp.yaml
```

When the application comes up, the pod logs include messages like the following, which confirm that Infinispan is being used:

```text
[INFO ] SESN8502I: The session manager found a persistent storage location; it will use session persistence mode=JCACHE
...
[INFO ] ISPN004021: Infinispan version: Infinispan 'Turia' 10.1.8.Final
[INFO ] ISPN004021: Infinispan version: Infinispan 'Turia' 10.1.8.Final
```
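If you want to script this check, for example as a smoke test after each deployment, here is a minimal Python sketch. It assumes you already have the log text as a string (for instance, captured from `oc logs` for one of the DayTrader pods). Only the message IDs SESN8502I and ISPN004021 and the `mode=JCACHE` text come from the log excerpt above; the function name and the shape of its result are our own.

```python
import re

# Message IDs taken from the pod log excerpt above.
SESSION_MSG = "SESN8502I"
INFINISPAN_MSG = "ISPN004021"


def check_session_persistence(log_text: str) -> dict:
    """Summarize whether a pod log shows JCache-backed session persistence.

    Returns a dict with:
      - "session_store_found": True if SESN8502I was logged
      - "mode": the persistence mode reported by SESN8502I (e.g. "JCACHE"), or None
      - "infinispan_client_started": True if ISPN004021 was logged
    """
    mode_match = re.search(rf"\b{SESSION_MSG}:.*?mode=(\w+)", log_text)
    return {
        "session_store_found": re.search(rf"\b{SESSION_MSG}:", log_text) is not None,
        "mode": mode_match.group(1) if mode_match else None,
        "infinispan_client_started": re.search(rf"\b{INFINISPAN_MSG}:", log_text) is not None,
    }


if __name__ == "__main__":
    # Sample lines copied from the log excerpt above; in practice you would
    # pass in the captured output of `oc logs` for a DayTrader pod.
    sample = (
        "[INFO ] SESN8502I: The session manager found a persistent storage location; "
        "it will use session persistence mode=JCACHE\n"
        "[INFO ] ISPN004021: Infinispan version: Infinispan 'Turia' 10.1.8.Final\n"
    )
    print(check_session_persistence(sample))
```

A deployment script could fail fast whenever `mode` is anything other than `JCACHE`, which would catch an image built without the Infinispan client jars.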

Optimizing The Container Image

The other update we need to make to our application image will make it smaller and allow it to start faster. Right now, our image contains all existing Open Liberty features; however, we are only using the set of features required by our server.xml:

```xml
<featureManager>
    <!--The following features are available in all editions of Liberty.-->
    <feature>appSecurity-2.0</feature>
    <feature>beanValidation-1.1</feature>
    <feature>cdi-1.2</feature>
    <feature>concurrent-1.0</feature>
    <feature>ejbLite-3.2</feature>
    <feature>jdbc-4.1</feature>
    <feature>jndi-1.0</feature>
    <feature>jpa-2.1</feature>
    <feature>jsf-2.2</feature>
    <feature>jsonp-1.0</feature>
    <feature>jsp-2.3</feature>
    <feature>ldapRegistry-3.0</feature>
    <feature>servlet-3.1</feature>
    <feature>websocket-1.1</feature>
    <!--The following features are available in all editions of Liberty, except for Liberty Core.-->
    <feature>ejbPersistentTimer-3.2</feature>
    <feature>ejbRemote-3.2</feature>
    <feature>jms-2.0</feature>
    <feature>mdb-3.2</feature>
</featureManager>
```

Instead, we can start from an Open Liberty image that doesn't contain any features at all. We'll copy in our server.xml first and then run a script, features.sh, which looks at our server.xml and downloads the features it requires. This adds some time to each image build, but it reduces the time it takes for the image to be pulled and to start up. That doesn't help developers much when they're changing the image frequently. Once an image is deployed in a more locked-down environment, though, it changes much less often while it may need to scale up or restart much more often, so the time savings add up.
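features.sh does the real work here. To preview what it will have to install, the following minimal Python sketch lists the features a server.xml declares. It is not how features.sh is implemented: it reads only the document you give it, does not follow `<include>` elements, and does not resolve feature dependencies. The function name is hypothetical.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET


def declared_features(server_xml: str) -> list[str]:
    """List the feature names declared in <featureManager> blocks of a server.xml string.

    Simplification: only the given document is read; <include> elements and
    feature dependencies are ignored.
    """
    root = ET.fromstring(server_xml)  # XML comments are dropped by the default parser
    features = []
    for manager in root.iter("featureManager"):
        for feature in manager.findall("feature"):
            if feature.text and feature.text.strip():
                features.append(feature.text.strip())
    return features


if __name__ == "__main__":
    # A trimmed-down excerpt of the generated server.xml shown above.
    sample = """<server>
      <featureManager>
        <!--The following features are available in all editions of Liberty.-->
        <feature>jdbc-4.1</feature>
        <feature>jpa-2.1</feature>
        <!--The following features are available in all editions of Liberty, except for Liberty Core.-->
        <feature>jms-2.0</feature>
        <feature>mdb-3.2</feature>
      </featureManager>
    </server>"""
    print(declared_features(sample))  # ['jdbc-4.1', 'jpa-2.1', 'jms-2.0', 'mdb-3.2']
```

This is also why the updated Dockerfile copies server.xml before running features.sh: the feature list has to be in place before anything can be installed from it.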

Once more we'll update our Dockerfile to change the base image tag and to invoke features.sh when appropriate:

```dockerfile
### Infinispan Session Caching ###
FROM openliberty/open-liberty:kernel-slim-java11-openj9-ubi AS infinispan-client

# Install Infinispan client jars
USER root
RUN infinispan-client-setup.sh
USER 1001

FROM openliberty/open-liberty:kernel-slim-java11-openj9-ubi

# Copy Infinispan client jars to Open Liberty shared resources
COPY --chown=1001:0 --from=infinispan-client /opt/ol/wlp/usr/shared/resources/infinispan /opt/ol/wlp/usr/shared/resources/infinispan
ENV INFINISPAN_SERVICE_NAME=daytrader-infinispan

# Copy server.xml first so features.sh can install the features it lists
COPY --chown=1001:0 DayTrader3.ear_server.xml /config/server.xml
RUN features.sh

COPY --chown=1001:0 *.jar /opt/ol/wlp/usr/shared/resources/
COPY --chown=1001:0 *.rar /opt/ol/wlp/usr/shared/resources/
COPY --chown=1001:0 *.p12 /opt/ol/wlp/output/defaultServer/resources/security/
COPY --chown=1001:0 DayTrader3.ear_server_sensitiveData.xml /config/
COPY --chown=1001:0 DayTrader3.ear /config/apps/
RUN configure.sh
```

We build and push this image with the same tag as before (or use the OpenShift build). Since the OpenLibertyApplication specifies an image stream, our deployment will automatically be updated with the new image. This will be the last update we need to make to the image itself.

Connecting To A Different Queue Manager

Let's say our operations team has provided an MQ instance they want us to use for pre-production and production only. This instance already exists within the OpenShift cluster; all we need to do is connect to it. At the same time, we don't want to rebuild our image to include the connection details, since development environments shouldn't use this queue manager.

This scenario shows why "immutable images" matter: the same image is deployed unchanged to every environment. Settings such as the hostnames and ports of external services are not hardcoded in the image; instead, each environment the image is deployed to can override them.

When the binary scanner creates configuration, it extracts the values of commonly changed configuration items into variables. Those variables have the original values if not otherwise set, but can be overridden on an environment-by-environment basis. We'll use this to reconfigure all MQ connection information to point to our new queue manager by creating a ConfigMap with the new values and specifying it in the OpenLibertyApplication.
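To make the override mechanism concrete, here is a minimal Python model of it: given a server.xml and a set of environment variables, it reports the value each variable ends up with. It rests on a simplifying assumption (only an environment variable with exactly the same name, or else the `defaultValue`, is considered), so it is an illustration rather than Open Liberty's actual resolution logic, which has more variable sources and precedence rules. The function name is hypothetical.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from collections.abc import Mapping


def resolve_variables(server_xml: str, environ: Mapping[str, str]) -> dict[str, str]:
    """Resolve <variable name=... defaultValue=...> declarations against an environment.

    Simplified model: an environment variable with exactly the same name wins;
    otherwise the defaultValue is used.
    """
    resolved = {}
    for var in ET.fromstring(server_xml).iter("variable"):
        name = var.get("name")
        default = var.get("defaultValue")
        if name is None or default is None:
            continue  # only defaultValue-style declarations are modeled
        resolved[name] = environ.get(name, default)
    return resolved


if __name__ == "__main__":
    server_xml = """<server>
      <variable name="TradeBrokerMQQCF_host_1" defaultValue="virmire.rtp.raleigh.ibm.com"/>
      <variable name="TradeBrokerMQQCF_queueManager_1" defaultValue="mqtest"/>
    </server>"""

    # Development: nothing is set, so the defaults baked into the image apply.
    print(resolve_variables(server_xml, {}))
    # Pre-production: the same server.xml, with one value supplied by the environment.
    print(resolve_variables(server_xml, {"TradeBrokerMQQCF_host_1": "mq-test-ibm-mq.mq.svc"}))
```

In a running container you would pass `os.environ`; here the environments are plain dictionaries so that the two cases can be compared side by side.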

Here are the variable declarations related to MQ:

```xml
<variable name="TradeBrokerMQMDB_channel_1" defaultValue="DEV.APP.SVRCONN"/>
<variable name="TradeBrokerMQMDB_hostName_1" defaultValue="virmire.rtp.raleigh.ibm.com"/>
<variable name="TradeBrokerMQMDB_port_1" defaultValue="1414"/>
<variable name="TradeBrokerMQMDB_queueManager_1" defaultValue="mqtest"/>
<variable name="TradeBrokerMQQCF_channel_1" defaultValue="DEV.APP.SVRCONN"/>
<variable name="TradeBrokerMQQCF_host_1" defaultValue="virmire.rtp.raleigh.ibm.com"/>
<variable name="TradeBrokerMQQCF_queueManager_1" defaultValue="mqtest"/>
<variable name="TradeBrokerMQQueue_baseQueueName_1" defaultValue="TRADE.BROKER.QUEUE"/>
<variable name="TradeStreamerMQMDB_channel_1" defaultValue="DEV.APP.SVRCONN"/>
<variable name="TradeStreamerMQMDB_hostName_1" defaultValue="virmire.rtp.raleigh.ibm.com"/>
<variable name="TradeStreamerMQMDB_port_1" defaultValue="1414"/>
<variable name="TradeStreamerMQMDB_queueManager_1" defaultValue="mqtest"/>
<variable name="TradeStreamerMQTCF_channel_1" defaultValue="DEV.APP.SVRCONN"/>
<variable name="TradeStreamerMQTCF_clientID_1" defaultValue="mqtest"/>
<variable name="TradeStreamerMQTCF_host_1" defaultValue="virmire.rtp.raleigh.ibm.com"/>
<variable name="TradeStreamerMQTCF_queueManager_1" defaultValue="mqtest"/>
<variable name="TradeStreamerMQTopic_baseTopicName_1" defaultValue="TradeStreamerTopic"/>
```

We'll create a ConfigMap that overrides the host name variables (both the hostName and host variants) so that they point to the queue manager in the cluster. The other values can keep their defaults:

```yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: daytrader-preprod-mq
  namespace: daytrader-preprod
data:
  TradeBrokerMQMDB_hostName_1: mq-test-ibm-mq.mq.svc
  TradeBrokerMQQCF_host_1: mq-test-ibm-mq.mq.svc
  TradeStreamerMQMDB_hostName_1: mq-test-ibm-mq.mq.svc
  TradeStreamerMQTCF_host_1: mq-test-ibm-mq.mq.svc
```
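Because the generated variable names are not uniform (the MDB variables use hostName, while the connection factory variables use host), a misspelled ConfigMap key is easy to miss: it simply becomes an environment variable that no configuration refers to, and the on-premises default stays in effect. Before applying the ConfigMap, a quick check like this minimal Python sketch can compare its keys against the variables declared in server.xml. The function name is hypothetical, and the sketch takes the ConfigMap's data section as a plain dictionary rather than parsing the YAML file.

```python
from __future__ import annotations

import difflib
import xml.etree.ElementTree as ET


def unmatched_overrides(server_xml: str, configmap_data: dict[str, str]) -> list[str]:
    """Report ConfigMap keys that don't match any <variable> declared in server.xml."""
    declared = [v.get("name") for v in ET.fromstring(server_xml).iter("variable") if v.get("name")]
    problems = []
    for key in configmap_data:
        if key in declared:
            continue
        # Suggest the closest declared name, if any, to catch typos such as hostName vs host.
        close = difflib.get_close_matches(key, declared, n=1)
        hint = f" (did you mean {close[0]}?)" if close else ""
        problems.append(f"{key}: no matching variable in server.xml{hint}")
    return problems


if __name__ == "__main__":
    server_xml = """<server>
      <variable name="TradeBrokerMQMDB_hostName_1" defaultValue="virmire.rtp.raleigh.ibm.com"/>
      <variable name="TradeBrokerMQQCF_host_1" defaultValue="virmire.rtp.raleigh.ibm.com"/>
    </server>"""
    # Mirrors the ConfigMap's data section, with a deliberate typo in the second key.
    data = {
        "TradeBrokerMQMDB_hostName_1": "mq-test-ibm-mq.mq.svc",
        "TradeBrokerMQQCF_hostName_1": "mq-test-ibm-mq.mq.svc",
    }
    for problem in unmatched_overrides(server_xml, data):
        print(problem)
```

With the deliberate typo, the sketch reports that `TradeBrokerMQQCF_hostName_1` has no matching variable and suggests `TradeBrokerMQQCF_host_1`; with the ConfigMap as written above, it reports nothing.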

We'll apply it:

```console
$ oc apply -f mq-config.yaml
```

And then we'll specify that our OpenLibertyApplication should turn those values into environment variables in the pod using envFrom. Open Liberty will pick up those variables, and use their values to override the defaults set in server.xml, and the server will be configured to use the new queue manager without changing the container image itself.

```yaml
apiVersion: openliberty.io/v1beta1
kind: OpenLibertyApplication
metadata:
  name: daytrader-preprod
spec:
  applicationImage: daytrader-preprod:latest
  service:
    type: ClusterIP
    port: 9443
  expose: true
  route:
    termination: passthrough
  env:
    - name: WLP_LOGGING_CONSOLE_FORMAT
      value: dev
  envFrom:
    - configMapRef:
        name: daytrader-preprod-mq
  volumes:
    - name: infinispan-secret-volume
      secret:
        secretName: daytrader-infinispan-generated-secret
  volumeMounts:
    - name: infinispan-secret-volume
      readOnly: true
      mountPath: "/platform/bindings/infinispan/secret"
```

We'll apply this version of the OpenLibertyApplication, and now DayTrader is using MQ in the cluster.

```console
$ oc apply -f olapp.yaml
```

When the new pod starts up, the messaging endpoints should still activate:

```text
[INFO ] J2CA8801I: The message endpoint for activation specification eis/TradeBrokerMDB and message driven bean application DayTrader3#daytrader-ee7-ejb.jar#DTBroker3MDB is activated.
[INFO ] J2CA8801I: The message endpoint for activation specification eis/TradeStreamerMDB and message driven bean application DayTrader3#daytrader-ee7-ejb.jar#DTStreamer3MDB is activated.
```

Enabling High Availability

Finally, we'll make one more update to the OpenLibertyApplication to enable high availability for DayTrader. The recommendation was to enable 4 replicas, because DayTrader was deployed to a cluster with 4 cluster members. Let's do that now:

```yaml
apiVersion: openliberty.io/v1beta1
kind: OpenLibertyApplication
metadata:
  name: daytrader-preprod
spec:
  applicationImage: daytrader-preprod:latest
  replicas: 4
  service:
    type: ClusterIP
    port: 9443
  expose: true
  route:
    termination: passthrough
  env:
    - name: WLP_LOGGING_CONSOLE_FORMAT
      value: dev
  envFrom:
    - configMapRef:
        name: daytrader-preprod-mq
  volumes:
    - name: infinispan-secret-volume
      secret:
        secretName: daytrader-infinispan-generated-secret
  volumeMounts:
    - name: infinispan-secret-volume
      readOnly: true
      mountPath: "/platform/bindings/infinispan/secret"
```

This number of replicas is just a starting point. The performance characteristics of DayTrader on WebSphere differ enough from DayTrader on Open Liberty in OpenShift that the number of replicas required to achieve the same throughput could be different. That can only be determined through careful performance analysis, however, and an equivalent number of replicas is a good place to start.
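Once that analysis produces numbers, the sizing arithmetic itself is simple. As a hedged sketch, assuming you have measured the sustainable throughput of a single DayTrader pod and know the peak load you need to serve, the replica count is the target divided by the per-pod rate, rounded up, with a floor kept for availability. All names and numbers below are hypothetical placeholders for your own load-test results.

```python
import math


def replicas_needed(target_rps: float, per_pod_rps: float,
                    headroom: float = 0.0, min_replicas: int = 1) -> int:
    """Smallest replica count whose combined measured throughput covers the target.

    target_rps:   peak request rate the deployment must handle
    per_pod_rps:  sustainable rate measured for a single pod under load
    headroom:     extra capacity fraction, e.g. 0.25 for 25% spare
    min_replicas: floor kept for availability, regardless of load
    """
    if per_pod_rps <= 0:
        raise ValueError("per_pod_rps must be positive")
    needed = math.ceil(target_rps * (1 + headroom) / per_pod_rps)
    return max(min_replicas, needed)


if __name__ == "__main__":
    # Hypothetical numbers: replace them with measurements from your own load tests.
    print(replicas_needed(target_rps=1000, per_pod_rps=250))                 # 4
    print(replicas_needed(target_rps=1000, per_pod_rps=300))                 # 4 (3.33 rounded up)
    print(replicas_needed(target_rps=100, per_pod_rps=250, min_replicas=2))  # 2
```

The `min_replicas` floor reflects why the on-premises cluster existed in the first place: even at low load, a single pod would give up high availability.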

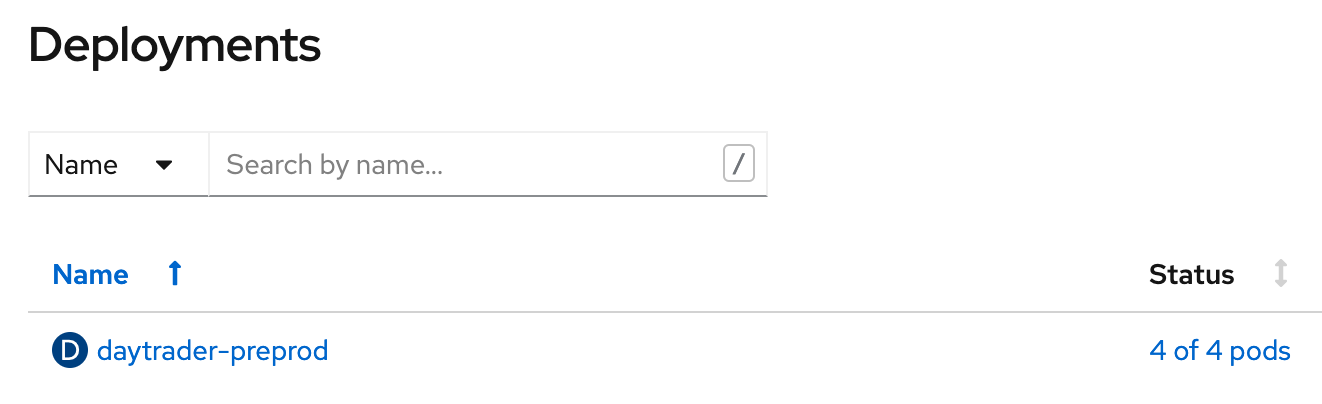

We'll apply the final version of the OpenLibertyApplication, and now DayTrader has 4 pods:

```console
$ oc apply -f olapp.yaml
```

OpenShift automatically load-balances requests to the DayTrader route among these 4 pods, so there's no need to configure a web server or reverse proxy. This also means the session persistence we set up earlier can take full effect: if one of these pods goes down, its requests are routed to another pod, which picks up the session from Infinispan.
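To see why the external session store matters once requests are spread across pods, here is a toy Python model. It is not how Open Liberty or Infinispan work internally: a per-user counter kept in a pod's own memory is lost when that pod goes down, whereas one kept in a shared store survives, and the next pod continues from it. The function name is hypothetical, a plain dictionary stands in for Infinispan, and there is no networking or real load balancer.

```python
def counter_after_failover(use_shared_store: bool) -> int:
    """Toy model: a session counter survives a pod failure only if it lives outside the pod.

    A plain dict stands in for the external cache; there is no networking,
    serialization, or real load balancer here.
    """
    shared_store: dict = {}
    pod_memory = {f"pod-{i}": {} for i in range(4)}  # each pod's in-memory sessions

    def handle(pod: str, session_id: str) -> int:
        sessions = shared_store if use_shared_store else pod_memory[pod]
        sessions[session_id] = sessions.get(session_id, 0) + 1
        return sessions[session_id]

    for _ in range(3):              # three requests happen to land on pod-0
        handle("pod-0", "user-1")
    del pod_memory["pod-0"]         # pod-0 goes down and its memory is lost
    return handle("pod-1", "user-1")  # the next request is routed to pod-1


if __name__ == "__main__":
    print(counter_after_failover(use_shared_store=False))  # 1: the session started over
    print(counter_after_failover(use_shared_store=True))   # 4: the session carried over
```

WebSphere's memory-to-memory replication solved the same problem by copying sessions between cluster members; the shared-store model here corresponds to the JCache approach chosen in this article.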

Summing It Up

This is just the beginning of what it means to "modernize" an application like DayTrader. From here, there is still a lot you can do: setting up logging and monitoring, setting up a DevOps deployment pipeline, updating DayTrader's code to take advantage of more recent Java/Jakarta EE functionality, and even refactoring DayTrader into microservices. It all depends on what you need to do, and what you'll get the most value out of.

Now, it's up to you. Give these tools and procedures a try on your own applications. See how much easier it is to deploy, maintain, and refactor your code when you have a modern, cloud-native environment to run it on. Definitely leave a comment with your experiences modernizing applications and let us know: what can we make even easier?

See the IBM Developer learning path Modernizing applications to use WebSphere Liberty to discover all the application modernization tools available with WebSphere Hybrid Edition. Also, check out the other articles in this app modernization blog series.