Java Garbage Collection (GC) fundamentals

As discussed in my prior post about how important GC is to Java performance, typical running Java programs create and release Java objects more-or-less continuously. The automated memory management system for dealing with these object allocations and abandonments is referred to as Garbage Collection (GC). This post discusses the basics of how GC works and the various types of GC available in IBM Java, with a focus on performance considerations.

Note: The descriptions herein are high-level and general - actual implementation specifics often vary from the general concept to optimize performance. The post contains IBM Java terminology, but the concepts are broadly applicable to other Java implementations.

Object Lifecycle – Allocation to Garbage Collection

When a running Java thread requests a new object, using the 'new <class-name>' operation, an instance of that Class is created on the Java heap, and a reference to the new object is returned to the requesting thread for its use. When the thread no longer needs the object, the reference is released, either explicitly by setting the reference to 'null' or to point to another object, or implicitly by allowing the reference to go out-of-scope; for example, objects created inside a loop go out-of-scope when thread execution leaves that loop. When there are no live references to a given object, that object becomes "garbage" which is eligible for collection by the GC system.

GCs are typically triggered by a failed attempt to allocate an object, implying free space is exhausted in the allocation area of the heap. The GC system keeps track of "free memory" spaces in the Java heap so that it knows where a newly created object can be placed. When a GC occurs, the heap spaces that garbage objects lived in are returned to the heap free memory list, so new objects can be allocated in those memory areas.

Usually for at least some portion of the GC work, all the application threads in the JVM are paused. This 'stop-the-world' (STW) pause allows the GC system to do things like relocate objects in the heap memory, without causing errors in app threads which contain object address info. For apps which involve any sort of user interaction, long GC pauses can cause unacceptable delays at the user interface. Much GC design effort focuses on minimizing the duration of STW GC pauses, to minimize the delay experienced by users due to GC.

Basic GC Operations

A Java GC cycle has three main operations:

- mark - GC threads start at the GC roots and walk the entire object reference graph, marking as "live" all memory spaces holding objects found during the traversal

- sweep - memory spaces not marked live are returned to the heap free memory list

- compact - object are rearranged to create large contiguous memory areas for future allocations

The mark and sweep operations are always done, in some form, for a GC to accomplish the task of freeing memory for object allocation. Compaction may take a long time, as it involves copying objects to a new location and updating any references to the moved objects. Therefore, compaction might be done only when the heap area has become excessively fragmented (few large contiguous open memory chunks), or it might be done incrementally to limit the length of the individual STW pauses.

Java Heap Management Models

Java object allocation follows one of two basic models:

- The entire heap memory is managed as a single area, with allocations made anywhere in the heap - the optthruput and optavgpause GC policies follow this single-area model

- The heap memory is segmented into two or more areas based on object age: the gencon and balanced GC policies use this 'generational' model

(Note: metronome GC policy is out-of-scope for this post)

The single-area model is very simple, but has the downside that every GC is a 'global GC' which operates on the entire heap; this is an issue because STW pause time for global GCs is roughly proportional to the size of the heap. For large heaps using optthruput GC policy, STW pause times may get quite long. The optavgpause policy does some GC work in parallel with the app threads before pausing, but still has STW pause proportional to the heap size. The diagrams below show a stylized view of app and GC thread activity in STW and concurrent modes.

Note: "STW pause proportional to heap size" really means "... to the amount of live data in the heap", not the total heap size. Most GC work is spent to find the live objects; GC starts with the "GC roots" (off-heap references to heap objects), and traverses the live reference graph from the GC roots to find all live objects in the heap. So for example, if an app maintains only 1G of live objects, a STW GC pause for that app will be similar duration whether the total heap size is 2G or 10G.

Generational GC

Generational GC policies are designed to reduce the frequency of global GCs, and the associated long STW pauses. In a generational GC design, new objects are allocated in an area called the 'nursery', while long-lived objects are kept in an area called the 'tenure' space. When the nursery space fills up, a GC needs only clean the smaller nursery space, not the entire heap, so the GC pause is shorter.

Nursery GC pauses are also much shorter than total-heap GC pauses because for typical Java applications, most newly allocated Java objects will be short-lived; this concept is referred to as the "generational hypothesis". This means that few objects in the nursery will be live when a nursery GC occurs, greatly reducing the work required to traverse the object graph for the nursery space.

Gencon GC policy

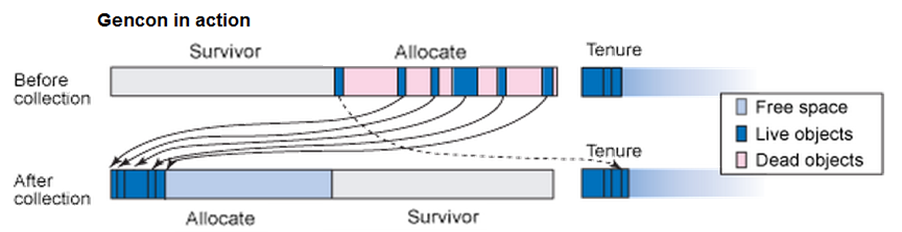

Under the gencon policy, nursery GCs use a 'copy collect' approach. A portion of the nursery space is reserved as a 'survivor area' separate from the 'allocation area'. During a nursery GC, live objects from the allocation area are copied into the survivor area. After live objects are copied over, the survivor area becomes the new allocation area, and a portion of the old allocation area is reserved as the survivor area for the next nursery GC.

Copy collect technique simplifies GC management of free memory spaces available to allocate new objects. After the nursery GC completes, the surviving objects are compactly placed at the beginning of the new allocation area, and the rest of the new allocation area is a contiguous free memory space. When the GC ends and app threads resume running, the nursery's large contiguous free memory area also makes runtime object allocation more efficient, as it is easy to find space for object placement.

Objects which survive more than some number of nursery collections are 'promoted' to the tenure space, because a corollary of the generational hypothesis is that objects which survive several nursery GC cycles will probably be long-lived. Work is required on each nursery GC to find and copy live objects in the allocate space to the survivor space, so long-lived objects are moved to tenure to reduce the nursery GC work.

The number of nursery GC cycles required for promotion, called the 'tenureAge', is determined by heuristics calculated by the GC system. For workloads that follow the generational hypothesis, the tenureAge will be high, with most objects dying in the nursery and only a few surviving long enough to make it into the tenure area. The diagram below shows a logical representation of a nursery GC copy collect operation.

After the JVM runs for some time, the tenure area will become full, with a combination of live objects and objects that have died after promotion; at this point a global GC is required. Because most objects are dying in the nursery, generational policies require relatively few global GCs – in a well-tuned system, it is common to see one global GC every 100-1000 nursery GCs. Also, since the tenure heap has fewer allocations of only long-lived objects, it is less prone to fragmentation, so compactions are rarely necessary, reducing average global GC duration.

If the tenure heap were entirely filled with live objects, the GC system would raise a "heap OutOfMemory (OOM)" error; in this state, JVM operation will be severely degraded and it may terminate, due to lack of memory space to work in. The general rule-of-thumb for tenure heap sizing is that after a global GC completes, there should be at least 30% free space in the tenure heap (not total heap - nursery area is not part of this calculation). As the tenure heap live data load gets higher than 70%, it becomes harder to find places in the tenure area for promoted objects and global GCs become more frequent; both of these conditions impact performance.

Note that just prior to the global GC, the tenure heap will appear to be full (or nearly), with very low "free memory". This does NOT mean that the heap is about to OOM, however. Before the global GC, the tenure heap is filled with some combination of live objects and garbage, and we do not know how much of the tenure heap fill is garbage until the global GC runs. Only after the global GC completes can tenure heap fill be meaningfully evaluated.

Balanced GC Policy

The gencon GC policy manages the heap in two generations, nursery and tenure. When the long-lived object set becomes large, the work required to complete a GC on the tenure area grows, and global GC STW pauses may become too long.

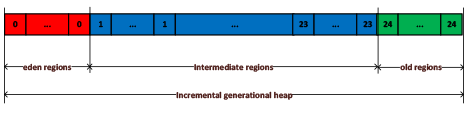

The balanced GC policy was developed to accommodate large live datasets while limiting STW pause duration, by dividing the heap into many smaller "regions” which are managed individually. The diagram below shows a conceptual view of the Java heap managed by balanced GC policy; the area for new object allocation, called ‘nursery’ in gencon, is called ‘eden’ in balanced GC.

Note: This is a logical view, which is unrealistically tidy; in physical memory, regions for each generation may be located anywhere across the Java heap address space.

To briefly summarize how balanced GC policy works:

- Balanced is 'many generational' vs only two generations in traditional gencon

- The total heap is divided into N regions where N >> the generation count

- Each occupied region (containing live data) is part of a generation

- Partial GCs (PGC) are triggered by eden generation fill threshold

- PGC operates on eden and selected regions - selection is based on heuristics about region fill, fragmentation, etc

- PGC copy collects selected regions from gen-N to gen-N+1

- PGC performs classunloading and defragmentation for selected regions (not globally)

- A set of survivor regions (empty or mostly empty) is maintained

- As useable survivor regions become scarce, incremental global mark/sweep is performed

With these techniques, balanced GC policy greatly reduces the worst-case STW pause times compared to gencon tenure space collections. There is however a trade-off - STW pauses for balanced 'partial GC' are typically somewhat longer than for gencon 'nursery GC', because of the extra work done in the partial GC. Ongoing development effort will further improve balanced GC policy performance, with the aim of matching gencon performance even for small heaps.

Concurrent GC Operation

Several techniques described above strive to reduce STW pause time by reducing the total work done in a single GC operation. Another way to reduce STW pause time is to do as much GC work as possible outside the STW pause window, running “concurrently” with the application threads. Concurrent work is the default mode for gencon global GCs and for balanced GCs, and is optionally available for gencon nursery GCs for IBM JDKs released since mid-2019.

From a performance perspective, concurrent GC involves a straightforward trade-off: The STW pause is shorter, but the concurrent GC work competes with application threads for CPU, so the max throughput of the application is reduced during the GC concurrent work period. A more subtle performance trade-off arises because doing the GC work concurrently is less efficient than doing the same work in STW mode, because of overhead required to ensure correctness, as discussed below.

A couple of basic functional issues arise when app threads and GC threads are concurrently working in the same area of the Java heap:

- App threads will be creating and changing references to objects, while the GC threads are building views of all live objects – if GC were unaware of changes made by the app threads while GC is working, subsequent GC work might make invalid changes to the object graph.

- Some GC operations involve moving objects around in the memory space, while app threads hold object references pointing to particular addresses – if an app thread used an old invalid address to access an object moved by GC, it would cause program errors.

There are well-established techniques for overcoming these issues, involving Object Access (read/write) Barriers and extra updates to the usual GC bookkeeping techniques, to ensure the correctness of the heap state for the running application threads. However, the Object Access Barriers and extra bookkeeping steps increase the execution path length for both application threads and GC threads. Thus performing a given GC operation concurrently generally requires more CPU and longer execution time than doing the same work in a STW pause.

Concurrent GC overhead is particularly evident for concurrent nursery GC, during which app threads are taxed with the task of moving nursery objects they access from the allocate to survivor area. This extra work may typically cause a reduction in max throughput of 10-20% compared to non-concurrent nursery GC operation, in return for an order-of-magnitude reduction in nursery GC STW pause time. The system administrator needs to understand the relative priority of max throughput and minimal GC STW pauses, to decide whether concurrent nursery GC is desirable for their workloads. As with all performance issues, we recommend A-B testing in a controlled environment to understand the tradeoffs.

Conclusion

Thus concludes my way-too-long yet still very high-level, oversimplified review of Java GC fundamentals, with performance-related commentary. With the basics established, in future posts I will move on to discuss how to understand GC performance in your application environments and how to diagnose GC-related problems. Below are links to docs providing further detail about how IBM Java GC works.

More detailed overview of IBM Java GC

Discussion of GC tradeoffs and tuning

Concurrent nursery GC Overview

#websphere-performance

#performance

#java-performance