-

Deploy OpenShift Container Platform 4.2 cluster

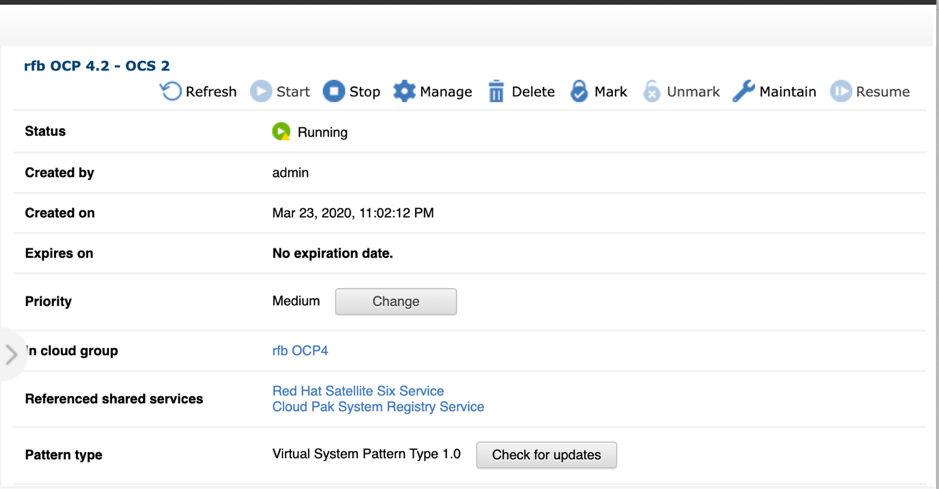

Deploy an OpenShift Container Platform 4.2 cluster with a minimum of 3 worker nodes using the OpenShift pattern in Cloud Pak System. At least 3 worker nodes are required to deploy an OpenShift Container Storage cluster.

Refer to the following blog for deploying Red Hat OpenShift Container Platform 4.2 on IBM Cloud Pak System 2.3.2.0:

https://developer.ibm.com/recipes/tutorials/deploying-red-hat-openshift-4-on-ibm-cloud-pak-system-2-3-2-0/

-

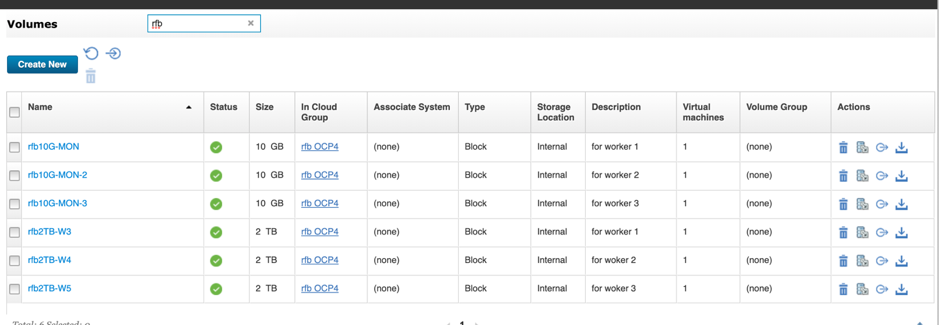

Create block volumes

Create three 2 TB and three 10 GB block volumes in the cloud group where the OpenShift Container Platform cluster is deployed. These volumes are used as storage for the OpenShift Container Storage cluster nodes.

For example, volumes created in the ‘rfbOCP4’ cloud group:

-

Resolve IP Address of worker nodes

SSH to the primary helper node and find the IP address of each worker node. These IP addresses are required for configuring the YAML files and for tainting worker nodes for exclusive OpenShift Container Storage use.

For example:

-bash-4.2# oc get node -o wide | grep worker | awk '{print $1" "$6}'

worker1.cps-r81-9-46-123-47.rtp.raleigh.ibm.com 9.46.123.41

worker2.cps-r81-9-46-123-47.rtp.raleigh.ibm.com 9.46.123.44

worker3.cps-r81-9-46-123-47.rtp.raleigh.ibm.com 9.46.123.40

worker4.cps-r81-9-46-123-47.rtp.raleigh.ibm.com 9.46.123.43

worker5.cps-r81-9-46-123-47.rtp.raleigh.ibm.com 9.46.123.42
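The lookup above can also be wrapped in a small filter. This is a minimal sketch, not part of the original procedure: the function name `worker_ips` is hypothetical, and it assumes the default `oc get node -o wide` column order (NAME, STATUS, ROLES, AGE, VERSION, INTERNAL-IP, ...). It matches on the ROLES column rather than on the host name.

```shell
# Print "<node-name> <internal-ip>" for every node whose ROLES column
# contains "worker". Reads `oc get node -o wide` output on stdin.
# Assumption: default column order, so ROLES is field 3 and INTERNAL-IP is field 6.
worker_ips() {
  awk 'NR > 1 && $3 ~ /worker/ { print $1, $6 }'
}

# Usage on the helper node (not executed here):
#   oc get node -o wide | worker_ips
```

Filtering on the ROLES column avoids picking up non-worker nodes that happen to have "worker" somewhere else in the row.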

-

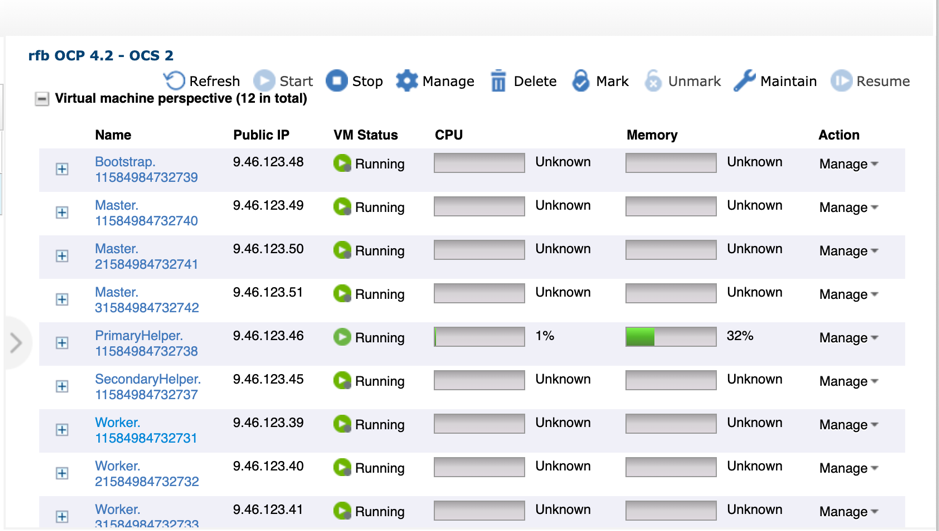

Attach volumes for OSD pods

Determine which workers to use for the OpenShift Container Storage deployment and attach one of the 2 TB volumes created in Step 2 to each of them. These block volumes are used for the OpenShift Container Storage OSD pods (Ceph data).

For example:

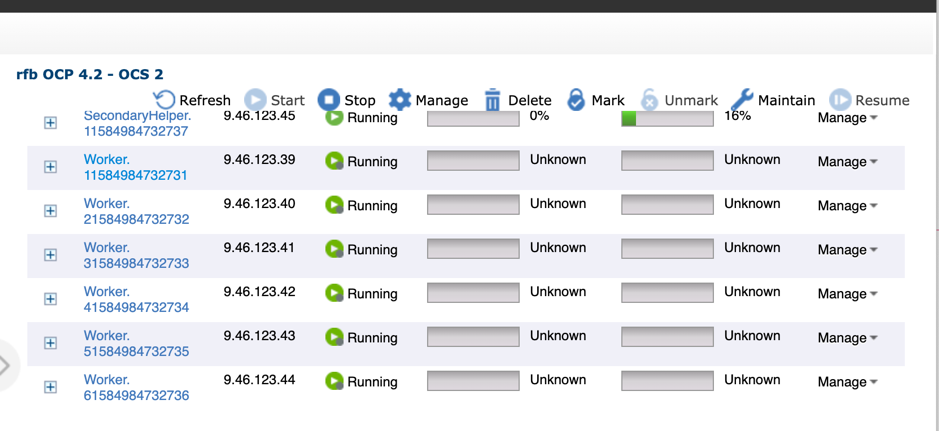

Worker virtual machines in the OpenShift Container Platform instance UI

-

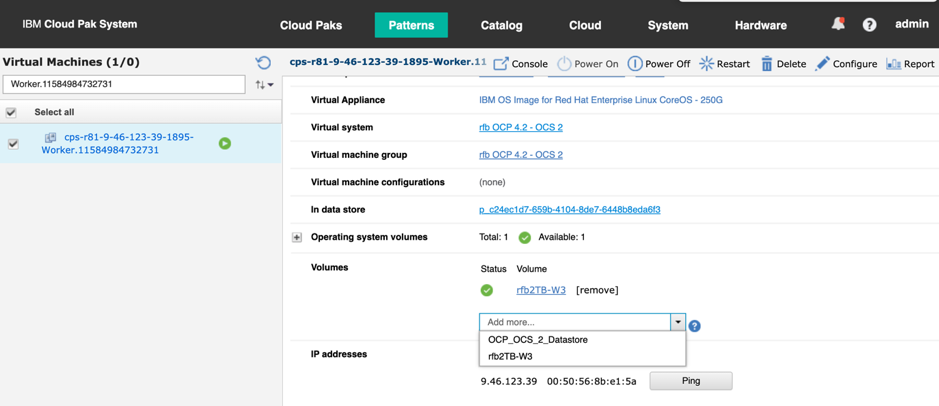

Attach volume to selected worker virtual machine

Click the selected worker virtual machine and attach a volume to it from the list of volumes in the ‘Volumes’ section.

-

Attach volumes for MON pods

Attach the 10 GB volumes created in Step 2 to the workers selected for the OpenShift Container Storage deployment. These volumes are used for the OpenShift Container Storage MON pods (Ceph monitor).

-

Verify volume attachment

Run the following bash command to verify whether the volumes are attached correctly to all the selected workers:

-bash-4.2# for i in {1..3} ; do ssh -i /core_rsa core@worker${i} lsblk | grep -E "^(sdb|sdc)" ; done

sdb 8:16 0 2T 0 disk

sdc 8:32 0 10G 0 disk

sdb 8:16 0 2T 0 disk

sdc 8:32 0 10G 0 disk

sdb 8:16 0 2T 0 disk

sdc 8:32 0 10G 0 disk

Note: On the worker node, the 2 TB volume is sdb and the 10 GB volume is sdc.
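The visual check above can be turned into a pass/fail test. The following is a sketch under stated assumptions: the function name `disks_ok` is hypothetical, and it expects the default `lsblk` columns (NAME, MAJ:MIN, RM, SIZE, RO, TYPE, MOUNTPOINT) with the 2T disk on sdb and the 10G disk on sdc, as in the output shown.

```shell
# Succeed only if the lsblk output on stdin shows sdb as a 2T disk
# and sdc as a 10G disk.
# Assumption: default lsblk columns, so SIZE is field 4 and TYPE is field 6.
disks_ok() {
  awk '
    $1 == "sdb" && $4 == "2T"  && $6 == "disk" { osd = 1 }
    $1 == "sdc" && $4 == "10G" && $6 == "disk" { mon = 1 }
    END { exit !(osd && mon) }
  '
}

# Usage on the helper node (not executed here):
#   ssh -i /core_rsa core@worker1 lsblk | disks_ok && echo "worker1 OK"
```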

-

Install the Local Storage operator:

- Run the following command to create local storage project:

-bash-4.2# oc new-project local-storage

- Run the following steps to install the Local Storage operator from the OpenShift Container Platform GUI:

1. Log in to the OpenShift Container Platform Web Console.

2. Go to Operators > OperatorHub.

3. Enter Local Storage in the filter box to search for the Local Storage Operator.

4. Click Install.

5. On the Create Operator Subscription page, select the specific namespace on the cluster. Select local-storage from the drop-down list.

6. Adjust the values for Update Channel and Approval Strategy to the desired values.

7. Click Subscribe. After it is completed, the local-storage operator gets listed in Installed Operators section of web console.

8. Wait for the operator pod to be up and running:

-bash-4.2# oc get pod -n local-storage

NAME READY STATUS RESTARTS AGE

local-storage-operator-ccbb59b45-nn7ww 1/1 Running 0 57s

-

Prepare LocalVolume YAML file for MON PODs

For Filesystem volumes, prepare a LocalVolume YAML file to create the resource for the MON PODs. Replace the worker names in the values section with the names of the workers selected for the OpenShift Container Storage deployment.

For example:

-bash-4.2# cat local-storage-filesystem.yaml

    apiVersion: "local.storage.openshift.io/v1"
    kind: "LocalVolume"
    metadata:
      name: "local-disks-fs"
      namespace: "local-storage"
    spec:
      nodeSelector:
        nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
              - worker1.cps-r81-9-46-123-47.rtp.raleigh.ibm.com
              - worker2.cps-r81-9-46-123-47.rtp.raleigh.ibm.com
              - worker3.cps-r81-9-46-123-47.rtp.raleigh.ibm.com
      storageClassDevices:
        - storageClassName: "local-sc"
          volumeMode: Filesystem
          devicePaths:
            - /dev/sdc
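Because only the worker names, storage class, volume mode, and device path change between LocalVolume files, the file can be generated instead of edited by hand. This is a sketch only: `gen_localvolume` is a hypothetical helper, not part of the Local Storage operator, and it simply prints the same structure as the example above.

```shell
# Usage: gen_localvolume NAME STORAGE_CLASS VOLUME_MODE DEVICE WORKER...
# Prints a LocalVolume manifest for the given workers on stdout.
gen_localvolume() {
  name=$1; sc=$2; mode=$3; dev=$4
  shift 4
  cat <<EOF
apiVersion: "local.storage.openshift.io/v1"
kind: "LocalVolume"
metadata:
  name: "$name"
  namespace: "local-storage"
spec:
  nodeSelector:
    nodeSelectorTerms:
    - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
EOF
  # One list entry per worker host name passed on the command line.
  for w in "$@"; do
    printf '          - %s\n' "$w"
  done
  cat <<EOF
  storageClassDevices:
    - storageClassName: "$sc"
      volumeMode: $mode
      devicePaths:
        - $dev
EOF
}

# Usage (not executed here):
#   gen_localvolume local-disks-fs local-sc Filesystem /dev/sdc \
#     worker1.example worker2.example worker3.example > local-storage-filesystem.yaml
```

The same helper covers the block-mode file by passing `Block` and `/dev/sdb` instead.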

-

Create resource for MON PODs

Run the following command to create resource for MON PODs:

-bash-4.2# oc create -f local-storage-filesystem.yaml

-

Verify Storage Classes and Persistent Volumes (PVs)

Run the following commands to check that the StorageClass and PVs exist:

-bash-4.2# oc get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

local-pv-64f226af 10Gi RWO Delete Available local-sc 11s

local-pv-d34c3fd1 10Gi RWO Delete Available local-sc 11m

local-pv-f9975f98 10Gi RWO Delete Available local-sc 4

-bash-4.2# oc get sc

NAME PROVISIONER AGE

local-sc kubernetes.io/no-provisioner 109s

-

Prepare LocalVolume YAML file for OSD PODs

Prepare a LocalVolume YAML file to create the resource for the OSD PODs. Block type is required for these volumes. Replace the worker names in the values section with the names of the workers selected for the OpenShift Container Storage deployment.

For example:

-bash-4.2# cat local-storage-block.yaml

    apiVersion: "local.storage.openshift.io/v1"
    kind: "LocalVolume"
    metadata:
      name: "local-disks"
      namespace: "local-storage"
    spec:
      nodeSelector:
        nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
              - worker1.cps-r81-9-46-123-47.rtp.raleigh.ibm.com
              - worker2.cps-r81-9-46-123-47.rtp.raleigh.ibm.com
              - worker3.cps-r81-9-46-123-47.rtp.raleigh.ibm.com
      storageClassDevices:
        - storageClassName: "localblock-sc"
          volumeMode: Block
          devicePaths:
            - /dev/sdb

-

Create resource for OSD PODs

Run the following command to create a resource for OSD PODs:

-bash-4.2# oc create -f local-storage-block.yaml

-

Verify Storage Classes and Persistent Volumes (PVs)

Run the following commands to check that the StorageClass and PVs exist:

-bash-4.2# oc get pv | grep localblock-sc | awk {'print $1" "$2 " " $7'}

local-pv-46028b05 2Ti localblock-sc

local-pv-764f220a 2Ti localblock-sc

local-pv-833c2983 2Ti localblock-sc

-bash-4.2# oc get sc

NAME PROVISIONER AGE

local-sc kubernetes.io/no-provisioner 6m36s

localblock-sc kubernetes.io/no-provisioner 25s
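To confirm that each storage class has the expected number of PVs before continuing, the `oc get pv` output can be counted. This is a minimal sketch: `count_available_pvs` is a hypothetical helper, and it scans every field of each row instead of relying on a fixed column, because the CLAIM column is empty for unbound PVs and shifts the later fields.

```shell
# Usage: oc get pv | count_available_pvs STORAGE_CLASS
# Prints how many rows on stdin are Available and belong to STORAGE_CLASS.
count_available_pvs() {
  awk -v sc="$1" '
    {
      avail = 0; match_sc = 0
      for (i = 1; i <= NF; i++) {
        if ($i == "Available") avail = 1
        if ($i == sc) match_sc = 1
      }
      if (avail && match_sc) n++
    }
    END { print n + 0 }
  '
}

# Usage (not executed here); three PVs per class are expected in this setup:
#   oc get pv | count_available_pvs localblock-sc
```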

-

Deploy OpenShift Container Storage 4.2 operator

- Run the following command to create the openshift-storage namespace:

-bash-4.2# oc create -f ocs-namespace.yaml

-bash-4.2# cat ocs-namespace.yaml

    apiVersion: v1
    kind: Namespace
    metadata:
      name: openshift-storage
      labels:
        openshift.io/cluster-monitoring: "true"

- Add labels to the 3 worker nodes so that they can be used for the OpenShift Container Storage cluster:

-bash-4.2# oc label node <Name of first worker node selected for OpenShift Storage Deployment> "cluster.ocs.openshift.io/openshift-storage=" --overwrite

-bash-4.2# oc label node <Name of first worker node selected for OpenShift Storage Deployment> "topology.rook.io/rack=rack0" --overwrite

-bash-4.2# oc label node <Name of second worker node selected for OpenShift Storage Deployment> "cluster.ocs.openshift.io/openshift-storage=" --overwrite

-bash-4.2# oc label node <Name of second worker node selected for OpenShift Storage Deployment> "topology.rook.io/rack=rack1" --overwrite

-bash-4.2# oc label node <Name of third worker node selected for OpenShift Storage Deployment> "cluster.ocs.openshift.io/openshift-storage=" --overwrite

-bash-4.2# oc label node <Name of third worker node selected for OpenShift Storage Deployment> "topology.rook.io/rack=rack2" --overwrite

For example:

-bash-4.2# oc label node worker1.cps-r81-9-46-123-47.rtp.raleigh.ibm.com "cluster.ocs.openshift.io/openshift-storage=" --overwrite

-bash-4.2# oc label node worker1.cps-r81-9-46-123-47.rtp.raleigh.ibm.com "topology.rook.io/rack=rack0" --overwrite

-bash-4.2# oc label node worker2.cps-r81-9-46-123-47.rtp.raleigh.ibm.com "cluster.ocs.openshift.io/openshift-storage=" --overwrite

-bash-4.2# oc label node worker2.cps-r81-9-46-123-47.rtp.raleigh.ibm.com "topology.rook.io/rack=rack1" --overwrite

-bash-4.2# oc label node worker3.cps-r81-9-46-123-47.rtp.raleigh.ibm.com "cluster.ocs.openshift.io/openshift-storage=" --overwrite

-bash-4.2# oc label node worker3.cps-r81-9-46-123-47.rtp.raleigh.ibm.com "topology.rook.io/rack=rack2" --overwrite
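The six label commands follow a fixed pattern: every selected worker gets the storage label, and each one gets its own rack label (rack0, rack1, rack2). The sketch below prints those commands for review rather than running them; `label_cmds` is a hypothetical helper name.

```shell
# Usage: label_cmds WORKER...
# Prints the two `oc label` commands for each worker, assigning rack0, rack1, ...
# in the order the workers are given. Nothing is executed against the cluster.
label_cmds() {
  rack=0
  for node in "$@"; do
    printf 'oc label node %s "cluster.ocs.openshift.io/openshift-storage=" --overwrite\n' "$node"
    printf 'oc label node %s "topology.rook.io/rack=rack%d" --overwrite\n' "$node" "$rack"
    rack=$((rack + 1))
  done
}

# Usage (not executed here): review the output, then pipe it to a shell.
#   label_cmds worker1.example worker2.example worker3.example
```

Printing first and executing second keeps a typo in a node name from silently labeling the wrong node.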

- Install OpenShift Container Storage 4.2 operator from OpenShift Container Platform GUI.

1. Log in to the OpenShift Container Platform Web Console.

2. Go to Operators > OperatorHub.

3. Enter OpenShift Container Storage into the filter box to locate the OpenShift Container Storage Operator.

4. Click Install.

5. On the Create Operator Subscription page, select a specific namespace on the cluster. Select openshift-storage from the drop-down list.

6. Adjust the values for Update Channel (4.2 stable) and Approval Strategy to the desired values.

7. Click Subscribe. After it is completed, OpenShift Container Storage operator gets listed in the Installed Operators section of web console.

8. Make sure that 3 pods that are related to OpenShift Container Storage operator are in good condition:

-bash-4.2# oc get pod -n openshift-storage

NAME READY STATUS RESTARTS AGE

noobaa-operator-779fbc86c7-8zqj2 1/1 Running 0 46s

ocs-operator-79d555ffb-b9srx 1/1 Running 0 46s

rook-ceph-operator-7b85794f6f-2dpjx 1/1 Running 0 46s

-

Create Storage cluster of OpenShift Container Storage

Run the following command to create Storage cluster of OpenShift Container Storage:

-bash-4.2# oc create -f ocs-cluster-service.yaml

Content of ocs-cluster-service.yaml:

-bash-4.2# cat ocs-cluster-service.yaml

    apiVersion: ocs.openshift.io/v1
    kind: StorageCluster
    metadata:
      name: ocs-storagecluster
      namespace: openshift-storage
    spec:
      manageNodes: false
      monPVCTemplate:
        spec:
          accessModes:
          - ReadWriteOnce
          resources:
            requests:
              storage: 10Gi
          storageClassName: 'local-sc'
          volumeMode: Filesystem
      storageDeviceSets:
      - count: 1
        dataPVCTemplate:
          spec:
            accessModes:
            - ReadWriteOnce
            resources:
              requests:
                storage: 2Ti
            storageClassName: 'localblock-sc'
            volumeMode: Block
        name: ocs-deviceset
        placement: {}
        portable: true
        replica: 3
        resources: {}

The storage classes ‘local-sc’ and ‘localblock-sc’ used here were created in Steps 9 and 11.

-

Verify Local Volume Persistent Volumes (PVs)

Run the following command to check whether the local volume PVs are bound:

For example:

-bash-4.2# oc get pv |grep local | awk {'print $1" " $2 " " $5 " " $6'}

local-pv-46028b05 2Ti Bound openshift-storage/ocs-deviceset-0-0-6vg6n

local-pv-64f226af 10Gi Bound openshift-storage/rook-ceph-mon-a

local-pv-764f220a 2Ti Bound openshift-storage/ocs-deviceset-1-0-pwsr9

local-pv-833c2983 2Ti Bound openshift-storage/ocs-deviceset-2-0-crz8q

local-pv-d34c3fd1 10Gi Bound openshift-storage/rook-ceph-mon-c

local-pv-f9975f98 10Gi Bound openshift-storage/rook-ceph-mon-b

If all local volume PVs are bound, the OpenShift Container Storage 4.2 operator and storage cluster are ready to use.
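The "all PVs are bound" condition can be checked mechanically. The following is a sketch under stated assumptions: `all_local_pvs_bound` is a hypothetical helper, the PV names start with `local-pv-` as in the output above, and the default expected count of 6 reflects this setup (three MON and three OSD volumes).

```shell
# Usage: oc get pv | all_local_pvs_bound [EXPECTED_COUNT]
# Succeeds only if at least EXPECTED_COUNT local-pv-* rows exist on stdin
# and every one of them contains the status "Bound".
all_local_pvs_bound() {
  awk -v want="${1:-6}" '
    $1 ~ /^local-pv-/ {
      total++
      for (i = 2; i <= NF; i++) if ($i == "Bound") { bound++; break }
    }
    END { exit !(total >= want && bound == total) }
  '
}

# Usage (not executed here):
#   oc get pv | all_local_pvs_bound && echo "storage cluster volumes are bound"
```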

-

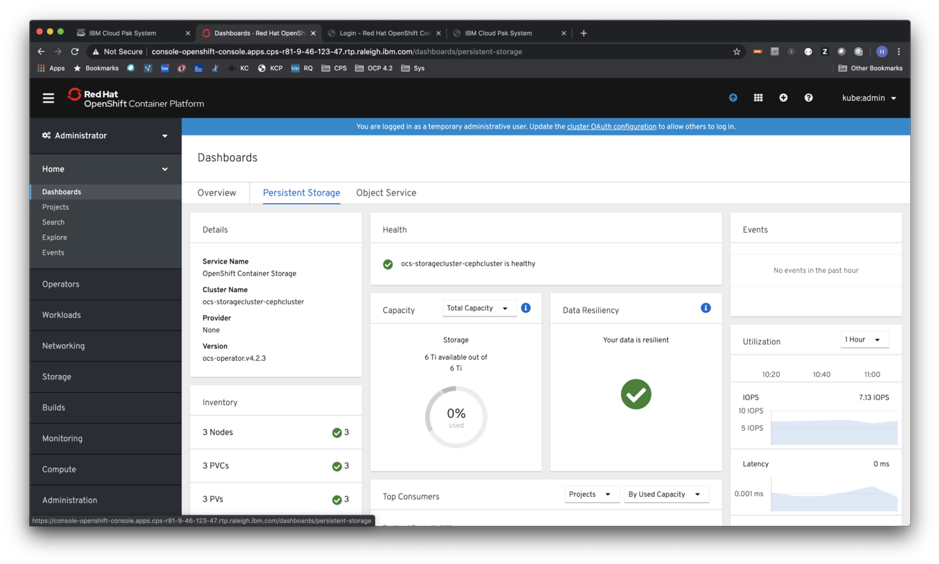

Verify Persistent Storage and Object storage in OpenShift Cluster dashboard

-

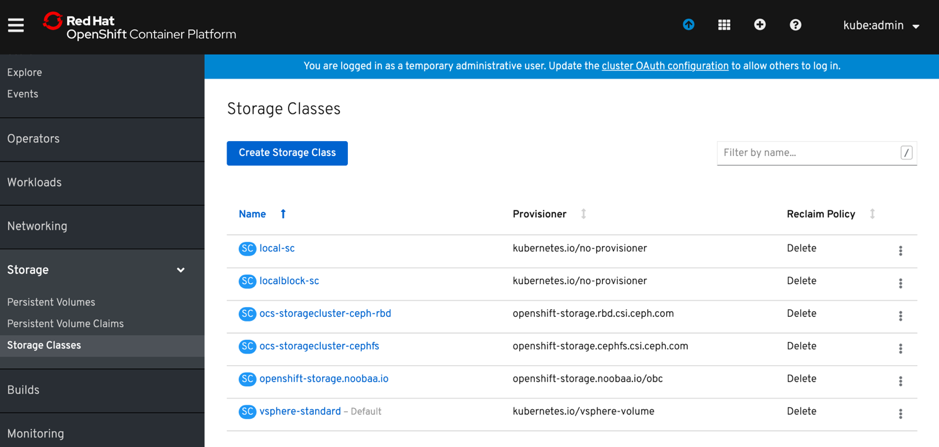

Verify storage classes

To enable user provisioning of storage, OpenShift Container Storage provides storage classes that are ready to use when OpenShift Container Storage is deployed.

-

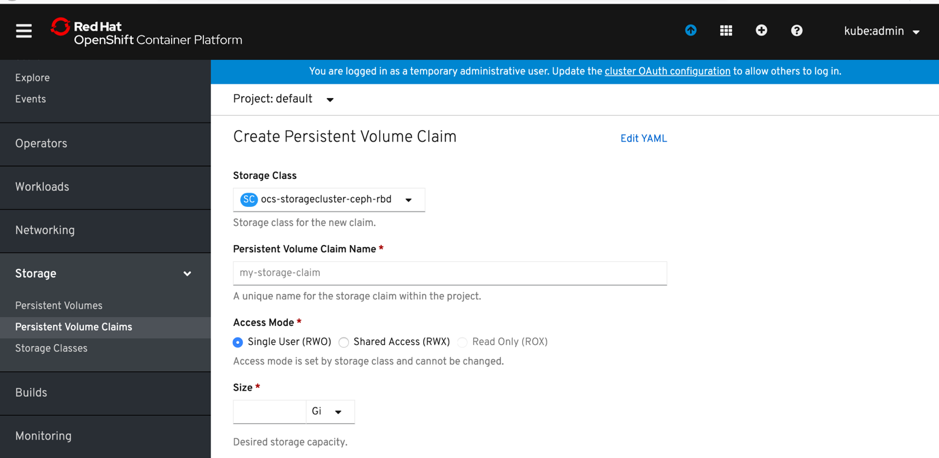

Create Persistent Volume Claim (PVC)

Users and/or developers can request dynamically provisioned storage by including a storage class in the PersistentVolumeClaim requests for their applications.
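As an illustration of such a request, the sketch below prints a minimal PersistentVolumeClaim manifest. `gen_pvc` is a hypothetical helper, and the claim name, size, and storage class are placeholders: list the classes actually available on your cluster with `oc get sc` and pass one of those names.

```shell
# Usage: gen_pvc CLAIM_NAME STORAGE_CLASS SIZE
# Prints a ReadWriteOnce PersistentVolumeClaim manifest on stdout.
gen_pvc() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: $3
  storageClassName: $2
EOF
}

# Usage (not executed here); the storage class name is an assumption,
# check `oc get sc` for the names on your cluster:
#   gen_pvc my-app-data ocs-storagecluster-ceph-rbd 5Gi | oc create -f -
```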

-

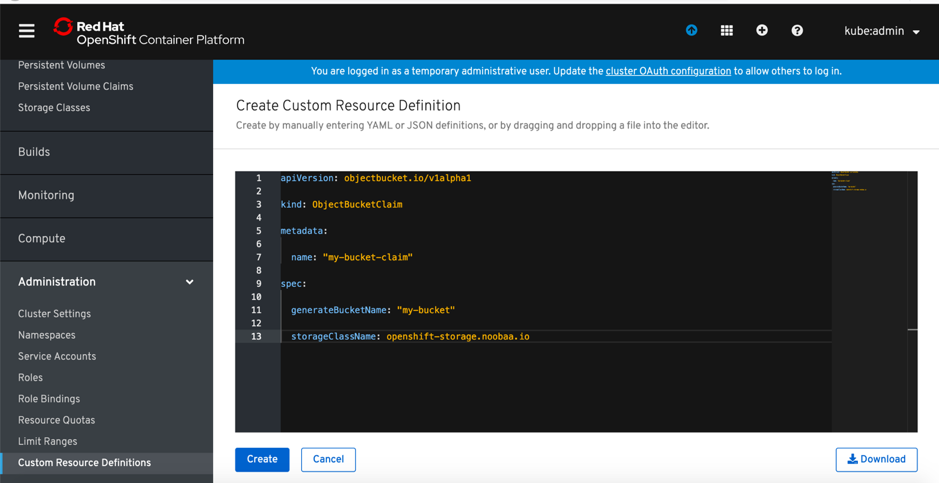

Create Object Bucket Claim (OBC)

In addition to block and file-based storage classes, OpenShift Container Storage introduces native object storage services for Kubernetes. To provide support for object buckets, OpenShift Container Storage introduces the Object Bucket Claim (OBC) and Object Bucket (OB) concepts, which take inspiration from Persistent Volume Claims (PVC) and Persistent Volumes (PV).

Click Administration > Custom Resource Definitions > Create Custom Resource Definition in the OpenShift Container Platform UI and paste the following YAML content into the given box to create an Object Bucket Claim (OBC):

    apiVersion: objectbucket.io/v1alpha1
    kind: ObjectBucketClaim
    metadata:
      name: "my-bucket-claim"
    spec:
      generateBucketName: "my-bucket"
      storageClassName: openshift-storage.noobaa.io

After OBC is created, the following resources get created:

- An ObjectBucket (OB), which contains the bucket endpoint information, a reference to the OBC, and a reference to the storage class.

- A ConfigMap in the same namespace as the OBC, which contains the endpoint that applications use to connect to and consume the object interface.

- A Secret in the same namespace as the OBC, which contains the key pairs needed to access the bucket.
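The ConfigMap and Secret listed above can be read from the command line. This is a sketch under assumptions that are not stated in the text: that both objects share the OBC's name, and that they use the keys `BUCKET_HOST`, `BUCKET_NAME`, and `AWS_ACCESS_KEY_ID`. Verify the actual names and keys with `oc get configmap` and `oc get secret` before relying on it. `obc_info` is a hypothetical helper, and it deliberately does not print the secret access key.

```shell
# Usage: obc_info CLAIM_NAME NAMESPACE
# Prints the bucket endpoint, bucket name, and access key ID for an OBC.
# Assumption: the ConfigMap and Secret are named after the claim and use
# the BUCKET_HOST, BUCKET_NAME and AWS_ACCESS_KEY_ID keys.
obc_info() {
  claim=$1; ns=$2
  host=$(oc get configmap "$claim" -n "$ns" -o jsonpath='{.data.BUCKET_HOST}')
  bucket=$(oc get configmap "$claim" -n "$ns" -o jsonpath='{.data.BUCKET_NAME}')
  # Secret values are stored base64-encoded, so decode before printing.
  key_id=$(oc get secret "$claim" -n "$ns" -o jsonpath='{.data.AWS_ACCESS_KEY_ID}' | base64 -d)
  printf 'endpoint=%s bucket=%s access_key_id=%s\n' "$host" "$bucket" "$key_id"
}

# Usage (not executed here):
#   obc_info my-bucket-claim <namespace of the OBC>
```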

-

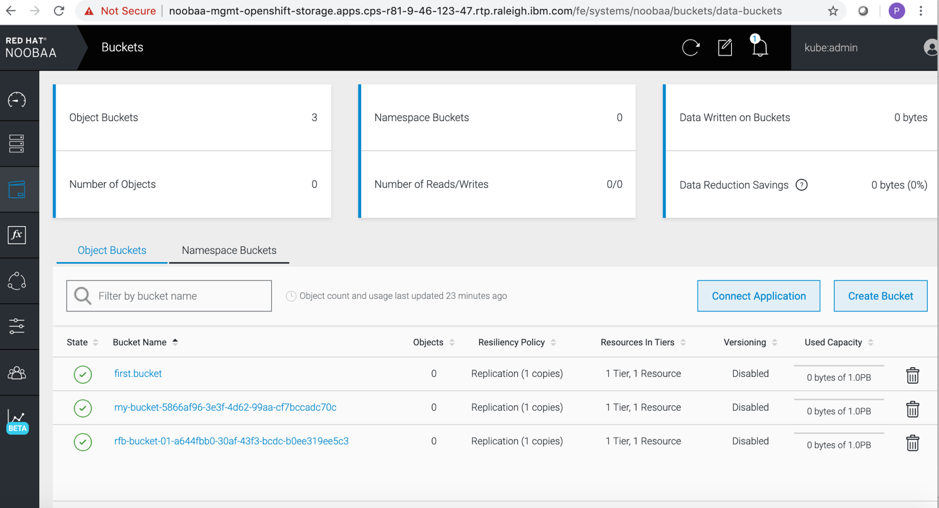

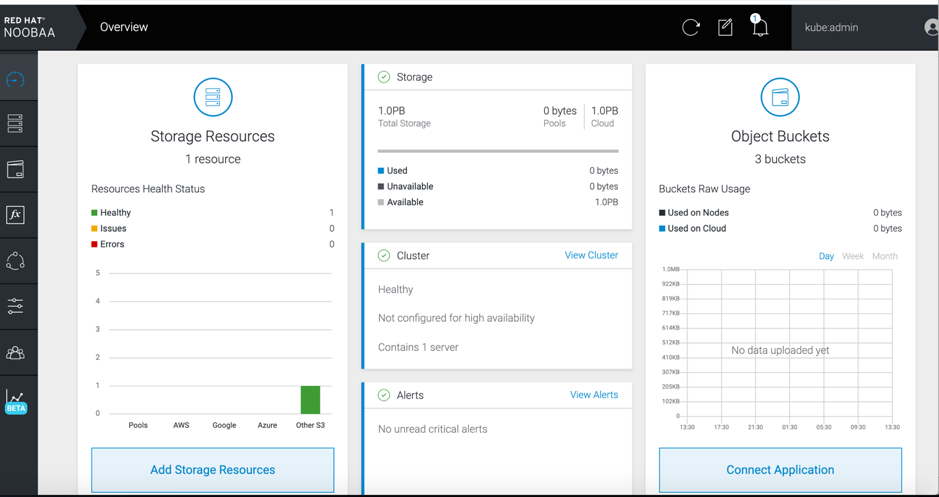

Verify ObjectBucket (OB) on NooBaa operator console

You can also check ObjectBuckets (OB) on the NooBaa operator console:

Click Dashboard > Object Service. In the Details section, click the ‘noobaa’ link shown as System Name, which redirects to the NooBaa console.

Object Service:

NooBaa Console Link:

NooBaa console:

Object Buckets: