How to set up Ambari & HDP with GPFS HDFS Transparency

[Lab Environment]

- 3 VM nodes running RHEL 7.6

- Each node has 16 GB of memory, a 100 GB root disk, and a second 100 GB disk for the GPFS NSD

- HDP 3.1.5.0

- Ambari 2.7.5

- Spectrum Scale 5.1.0

- Spectrum_Scale_Management_Pack_MPACK_for_Ambari-2.7.0.9-noarch-Linux.tgz

[Reference]

IBM Spectrum Scale BDA documentation, overall installation guide:

https://www.ibm.com/docs/en/spectrum-scale-bda?topic=3x-installation

Ambari installation guide from Cloudera:

https://docs.cloudera.com/HDPDocuments/Ambari-2.7.5.0/bk_ambari-installation/content/ch_Installing_Ambari.html

Guide to configuring HDP and installing all related services (Ambari Install Wizard):

https://docs.cloudera.com/HDPDocuments/Ambari-2.7.5.0/bk_ambari-installation/content/launching_the_ambari_install_wizard.html

MPack installation guide:

https://www.ibm.com/docs/en/spectrum-scale-bda?topic=installation-install-mpack-package

[Overall steps]

- Set up an HTTP-based yum repository for Ambari/HDP/GPFS

- Install Ambari

- Set up the HDP repository and install all HDP services

- With the environment ready, install the GPFS MPack to replace community HDFS with HDFS Transparency

[Step 1. Set up an HTTP yum repository for Ambari/HDP/GPFS]

a) Install an HTTP server (Apache httpd):

yum install -y httpd

b) Download the Ambari/HDP/GPFS packages and place them under the default HTTP server document root, /var/www/html:

[root@hadoop-transparency1 ~]# ll /var/www/html/

total 4

drwxrwxrwx. 6 root root 4096 Jun 26 09:52 ambari

drwxrwxrwx. 7 root root 4096 Jun 26 21:25 gpfs_rpms

drwxrwxrwx. 3 root root 4096 Jun 26 21:24 hdfs_rpms

drwxrwxrwx. 32 root root 4096 Jun 9 09:23 HDP-3.1.5.0-97

drwxrwxrwx. 5 root root 4096 Jun 9 22:40 HDP-UTILS-1.1.0.22

drwxrwxrwx. 3 root root 4096 Jun 22 00:52 libtirpc-devel

drwxrwxrwx. 2 root root 4096 Jun 9 22:38 repos
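yum can only use a folder as a baseurl if it contains repository metadata (repodata/repomd.xml). The HDP and Ambari tarballs normally ship with it, but folders assembled by hand, such as gpfs_rpms or hdfs_rpms, need createrepo. A minimal sketch, assuming the layout above under /var/www/html; the function name list_dirs_without_repodata is hypothetical:

```shell
# Print each top-level folder under a web root that has no yum metadata
# (repodata/repomd.xml) yet. Defaults to Apache's /var/www/html.
list_dirs_without_repodata() {
    root=${1:-/var/www/html}
    for dir in "$root"/*/; do
        [ -d "$dir" ] || continue          # the glob matched nothing
        dir=${dir%/}                       # drop the trailing slash
        [ -f "$dir/repodata/repomd.xml" ] || echo "$dir"
    done
}

# Usage (createrepo comes from the createrepo package):
#   yum install -y createrepo
#   for d in $(list_dirs_without_repodata); do createrepo "$d"; done
```

Review the printed list before running createrepo: not every folder here is necessarily a package repository, and a repository whose metadata sits below the top level will also be listed.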

c) Start the httpd service, and enable it so that it comes back after a reboot:

systemctl start httpd

systemctl enable httpd

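d) Switch SELinux to permissive mode to avoid permission issues (setenforce 0 does not fully disable SELinux, and the setting is lost on reboot):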

setenforce 0
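setenforce 0 only lasts until the next reboot. A minimal sketch for making permissive mode persistent, assuming the standard /etc/selinux/config layout; the helper name make_selinux_permissive is hypothetical, and the path is a parameter so it can be tried on a copy first:

```shell
# Persist SELinux permissive mode (setenforce 0 alone is lost on reboot).
# Rewrites the SELINUX= line only; SELINUXTYPE= is left untouched.
make_selinux_permissive() {
    conf=${1:-/etc/selinux/config}
    sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$conf"
}

# Usage:
#   setenforce 0                 # takes effect immediately
#   make_selinux_permissive      # survives a reboot
#   getenforce                   # should print Permissive
```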

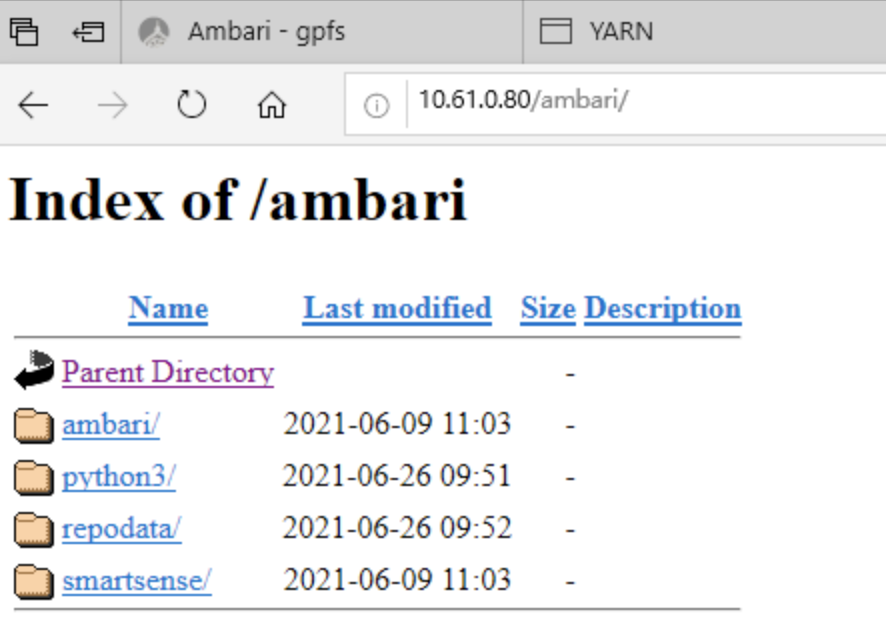

e) Verify if it can be reached via browser properly:

f) Configure yum on all 3 nodes, for example in a .repo file under /etc/yum.repos.d/:

[HDP-UTILS-1.1.0.22-repo-1]

name=HDP-UTILS-1.1.0.22-repo-1

baseurl=http://10.61.0.80/HDP-UTILS-1.1.0.22

enabled=1

gpgcheck=0

[HDP-3.1-repo-1]

name=HDP-3.1-repo-1

baseurl=http://10.61.0.80/HDP-3.1.5.0-97

enabled=1

gpgcheck=0

[ambari-2.7.5.0]

baseurl=http://10.61.0.80/ambari

name=ambari2.7.5.0

enabled=1

gpgcheck=0

[GPFS]

name=gpfs-4.2.0

baseurl=http://10.61.0.80/gpfs_rpms

enabled=1

gpgcheck=0
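Typing the same definitions by hand on three nodes is error-prone. A sketch that writes the four repositories above into one .repo file; the function name write_local_repo and the file name local-hdp.repo are hypothetical, and the GPFS repository is labeled gpfs-5.1.0 here to match the Spectrum Scale release in this lab:

```shell
# Write the four local repositories into a yum .repo file.
# BASE_URL is the HTTP server from step 1 (http://10.61.0.80 in this lab).
write_local_repo() {
    repo_file=$1
    base_url=${2:-http://10.61.0.80}
    cat > "$repo_file" <<EOF
[HDP-UTILS-1.1.0.22-repo-1]
name=HDP-UTILS-1.1.0.22-repo-1
baseurl=$base_url/HDP-UTILS-1.1.0.22
enabled=1
gpgcheck=0

[HDP-3.1-repo-1]
name=HDP-3.1-repo-1
baseurl=$base_url/HDP-3.1.5.0-97
enabled=1
gpgcheck=0

[ambari-2.7.5.0]
name=ambari2.7.5.0
baseurl=$base_url/ambari
enabled=1
gpgcheck=0

[GPFS]
name=gpfs-5.1.0
baseurl=$base_url/gpfs_rpms
enabled=1
gpgcheck=0
EOF
}

# Usage: write the file on one node, then copy it to the others.
#   write_local_repo /etc/yum.repos.d/local-hdp.repo
#   for n in ambari2 ambari3; do scp /etc/yum.repos.d/local-hdp.repo "$n":/etc/yum.repos.d/; done
#   yum clean all && yum repolist
```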

Check the result with yum repolist:

[root@ambari1 ~]# yum repolist

Loaded plugins: langpacks, product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

Repository media is listed more than once in the configuration

repo id repo name status

!GPFS gpfs-4.2.0 22

!HDP-3.1-repo-1 HDP-3.1-repo-1 201

!HDP-UTILS-1.1.0.22-repo-1 HDP-UTILS-1.1.0.22-repo-1 16

!ambari-2.7.5.0 ambari2.7.5.0 17

!media ftp 5,152

repolist: 5,625

[Step 2. Install Ambari]

yum install ambari-server

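After the package is installed, run ambari-server setup to configure the JDK and the Ambari database (see the Cloudera Ambari installation guide), then start the server: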

[root@ambari1 ~]# ambari-server start

Using python /usr/bin/python

Starting ambari-server

Ambari Server running with administrator privileges.

Organizing resource files at /var/lib/ambari-server/resources...

Ambari database consistency check started...

Server PID at: /var/run/ambari-server/ambari-server.pid

Server out at: /var/log/ambari-server/ambari-server.out

Server log at: /var/log/ambari-server/ambari-server.log

Waiting for server start......................

Server started listening on 8080


Ambari Server 'start' completed successfully.

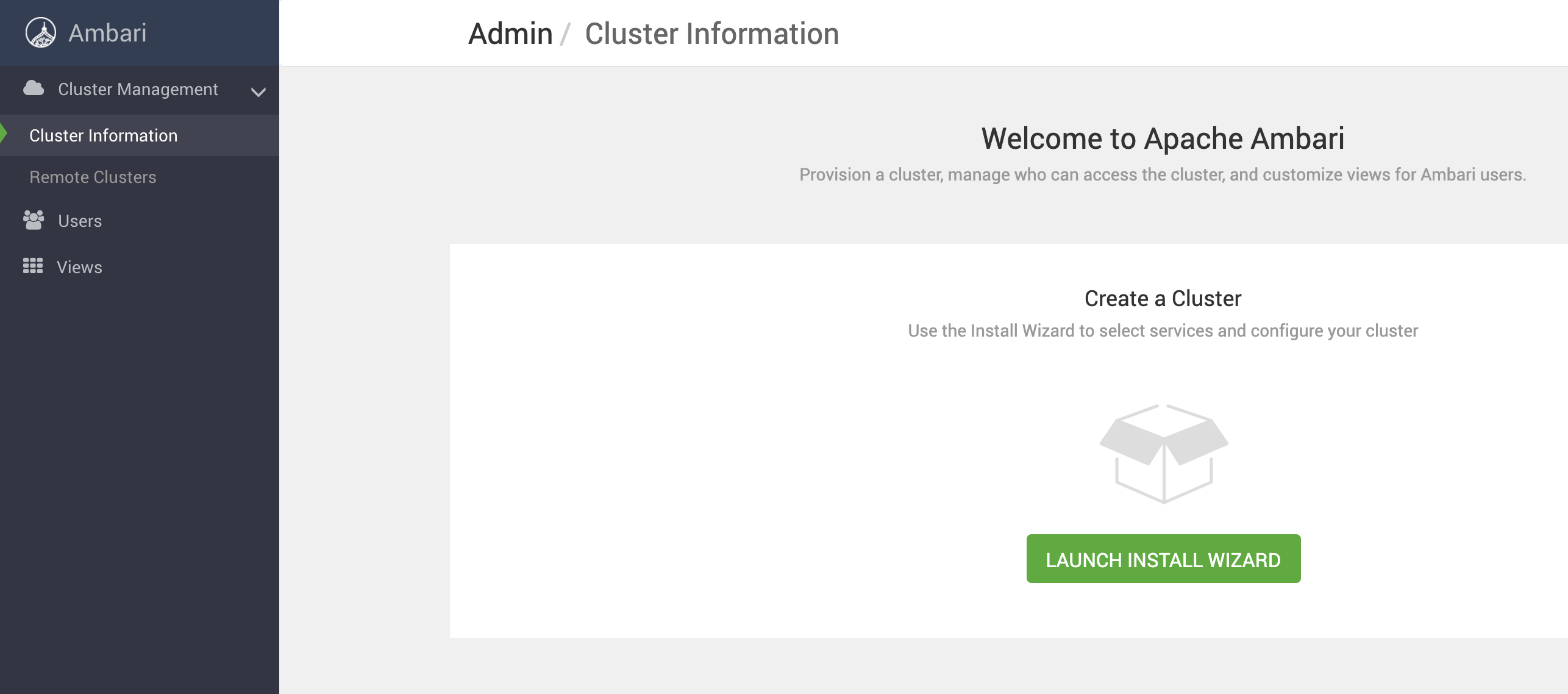

Access the Ambari web UI (port 8080, for example http://ambari1:8080) and launch the Install Wizard to install HDP:

[Step 3. Setup HDP repo and install all services from HDP]

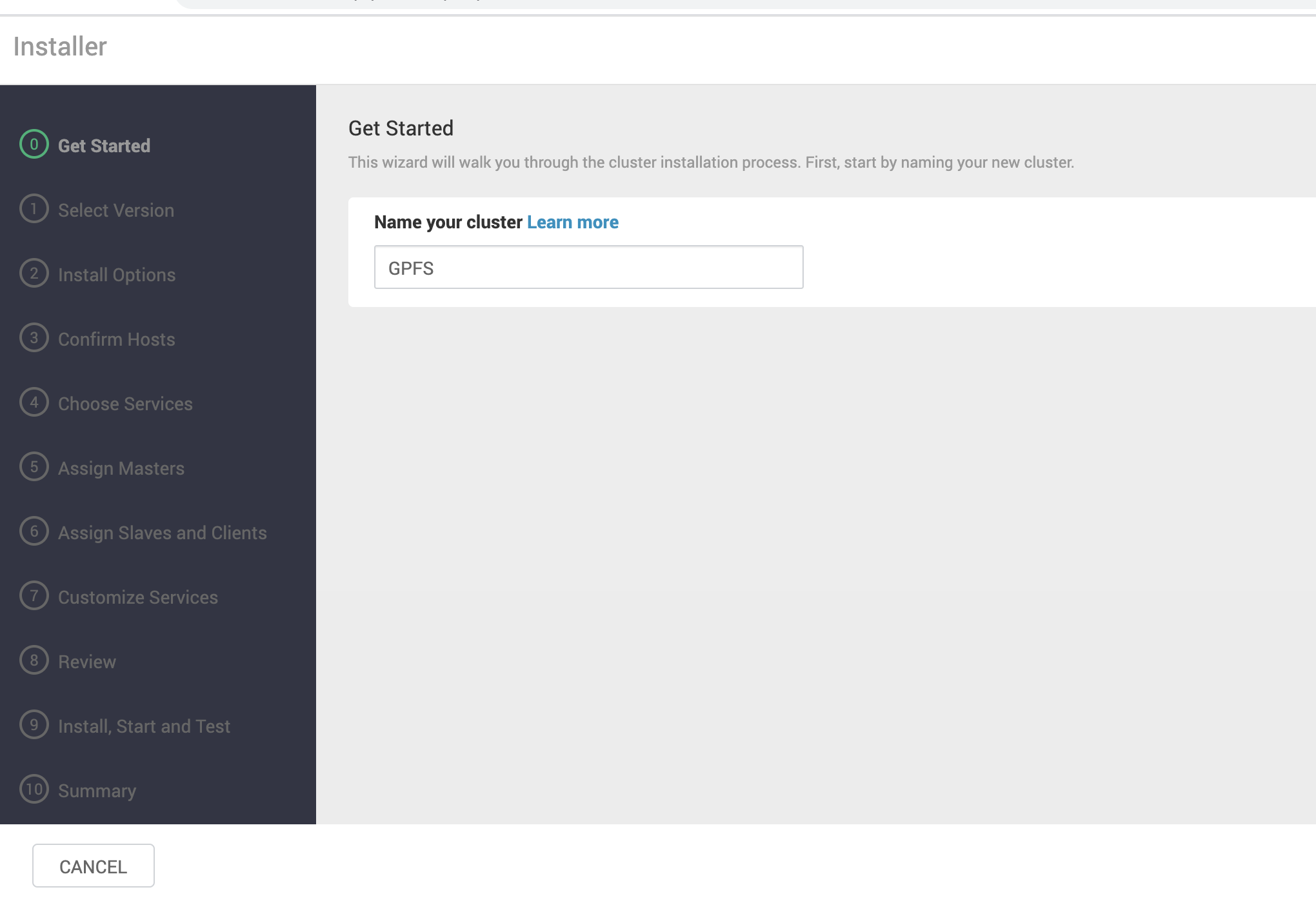

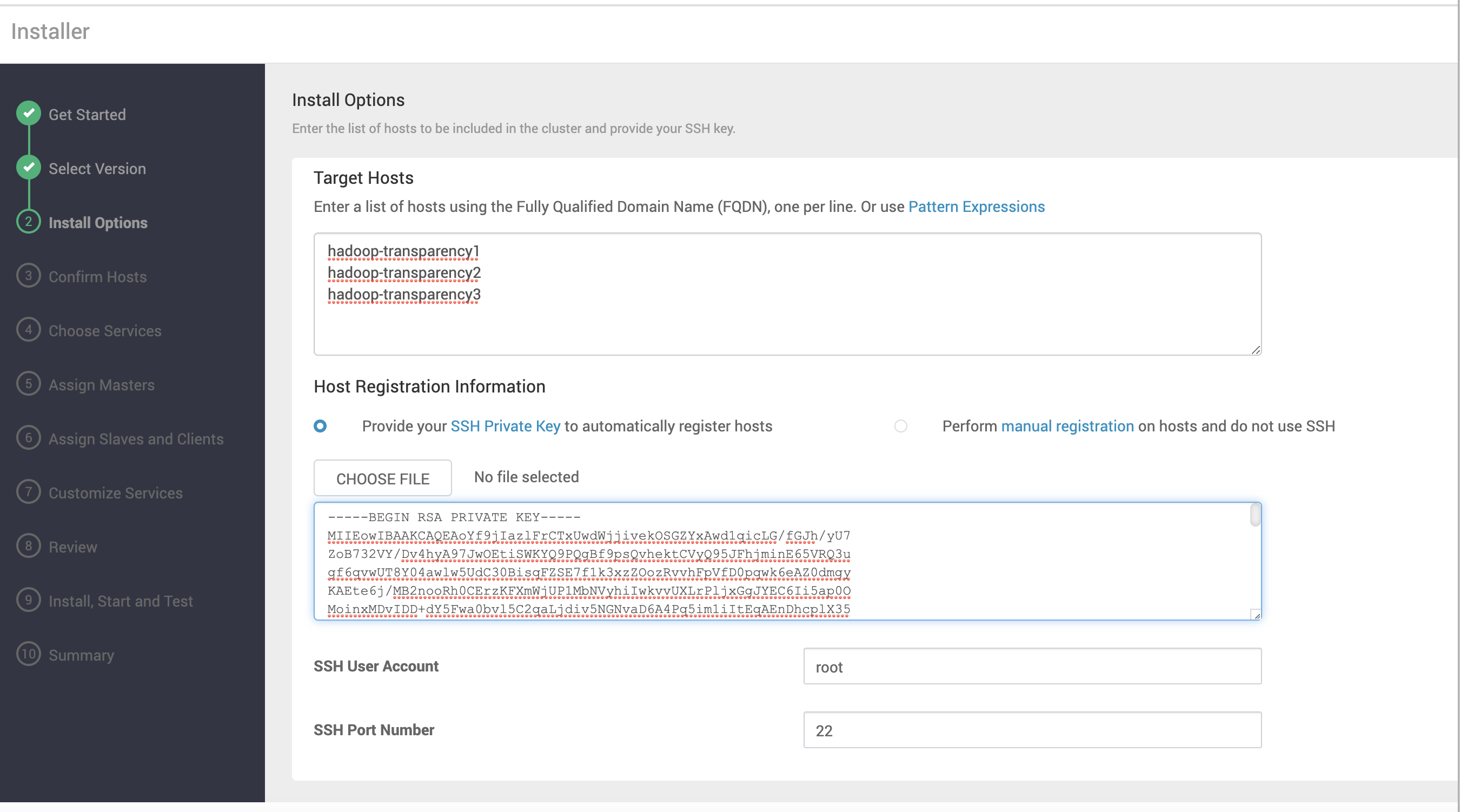

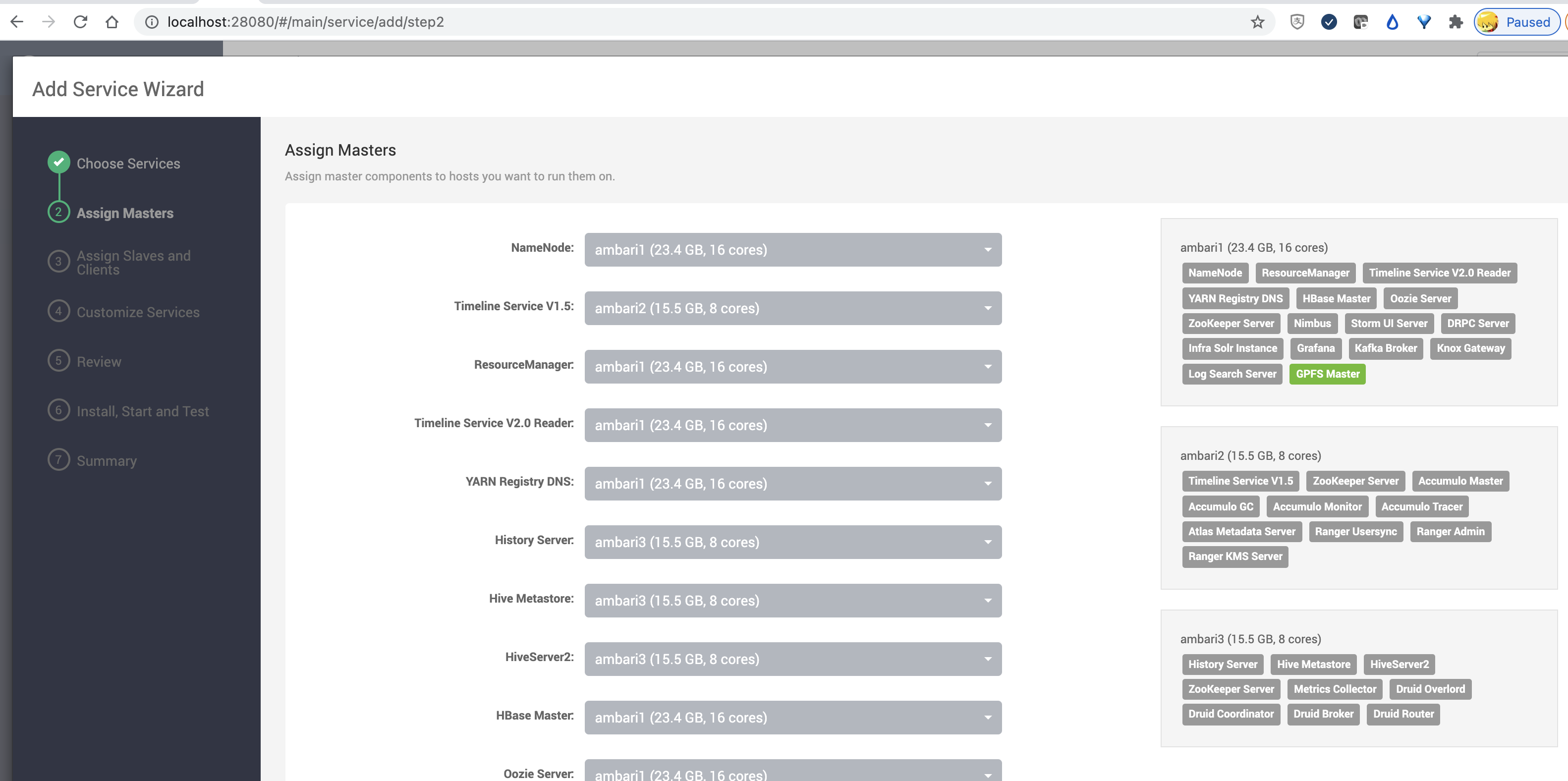

Follow the installation wizard to set up your own cluster:

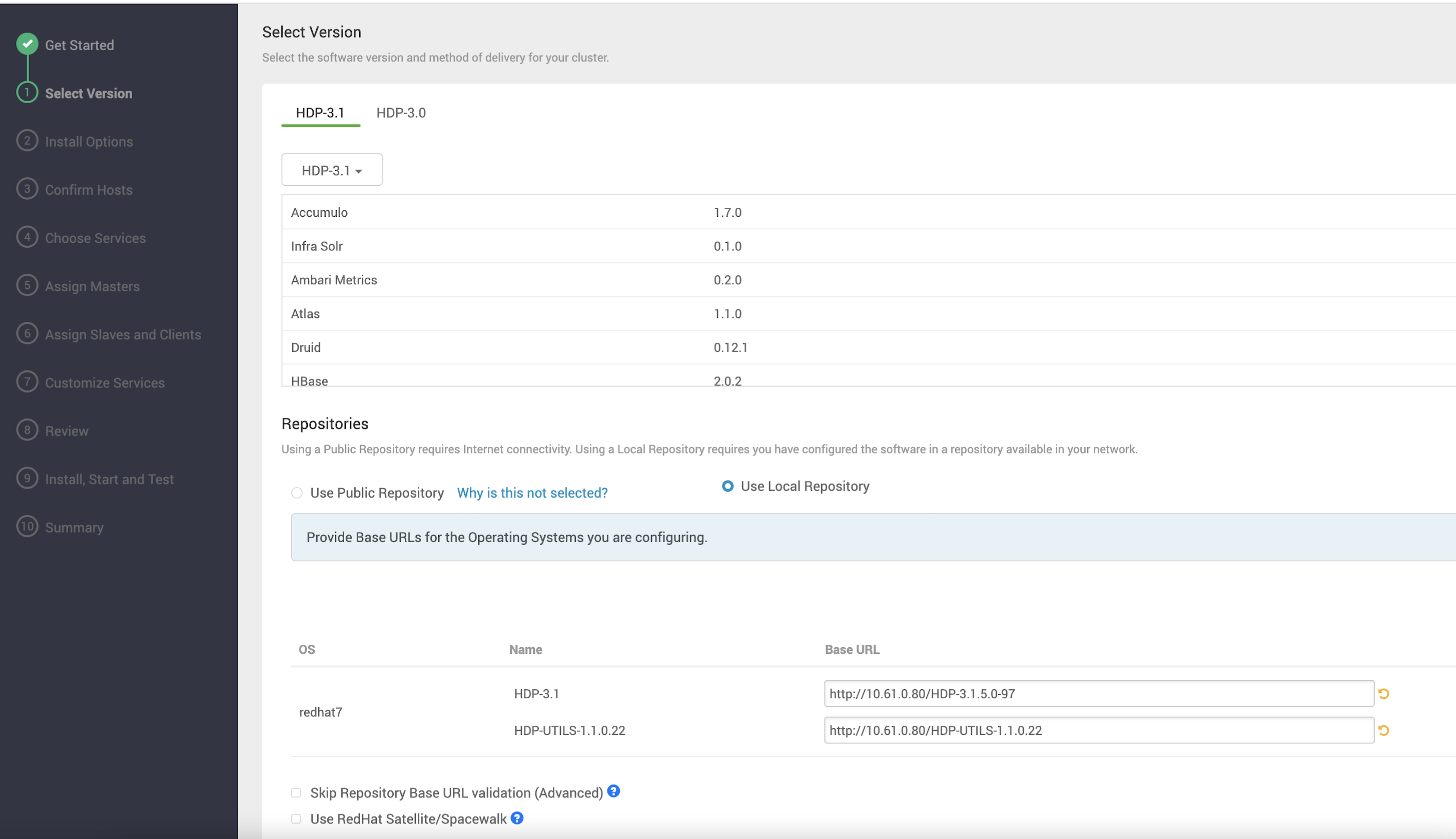

Specify the HDP and HDP-UTILS repositories prepared in Step 1; all service installation RPMs are fetched from there.

For example:

http://10.61.0.80/HDP-3.1.5.0-97

http://10.61.0.80/HDP-UTILS-1.1.0.22
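Before entering these URLs in the wizard, you can confirm from the command line that each one actually serves yum metadata. A sketch using curl, assuming each repository from Step 1 has repodata/repomd.xml at its top level; check_repo_urls is a hypothetical helper:

```shell
# Fetch <url>/repodata/repomd.xml for every URL given; print OK or FAIL
# per URL and return non-zero if any of them fails.
check_repo_urls() {
    rc=0
    for url in "$@"; do
        if curl -sf -o /dev/null "${url%/}/repodata/repomd.xml"; then
            echo "OK   $url"
        else
            echo "FAIL $url"
            rc=1
        fi
    done
    return $rc
}

# Usage:
#   check_repo_urls http://10.61.0.80/HDP-3.1.5.0-97 http://10.61.0.80/HDP-UTILS-1.1.0.22
```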

Provide the SSH private key for all target hosts:

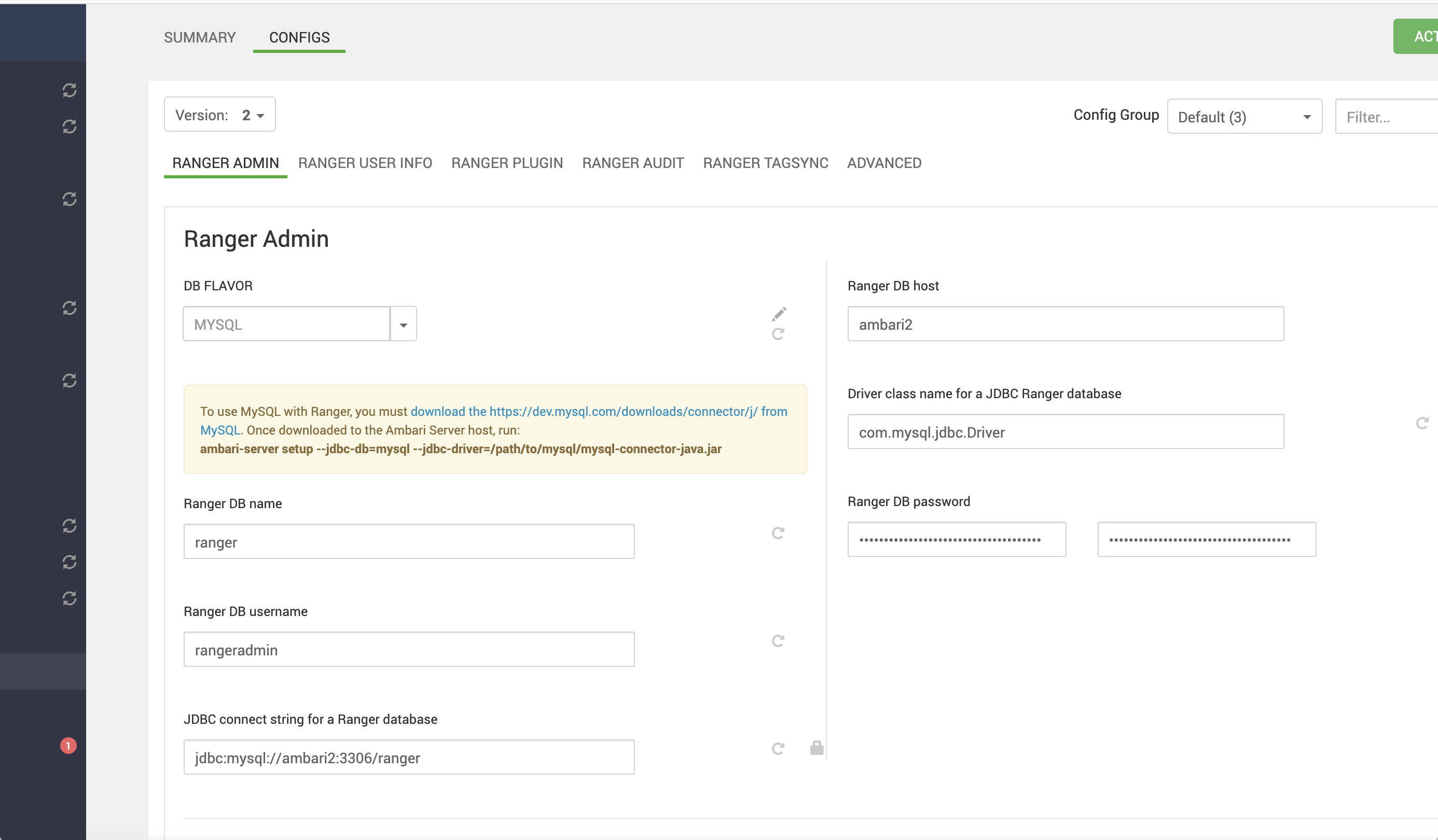

Choose the services to install. Nothing special is needed, except for Ranger and Ranger KMS (the security modules): for these you need to install MySQL (MariaDB here), pre-create a DBA user, and let Ambari use that user for all database operations.

The steps are as follows:

1) Install MariaDB:

yum install mariadb-server mariadb

2) Create the DBA account for Ranger and Ranger KMS:

https://docs.cloudera.com/HDPDocuments/HDP3/HDP-3.1.4/installing-ranger/content/configure_mysql_db_for_ranger.html

3) Install the MySQL JDBC driver and register it with Ambari:

yum install mysql-connector-java

ambari-server setup --jdbc-db=mysql --jdbc-driver=/usr/share/java/mysql-connector-java.jar

4) Configure the database settings in Ambari:

*Ambari creates the Ranger database and its database user rangeradmin:

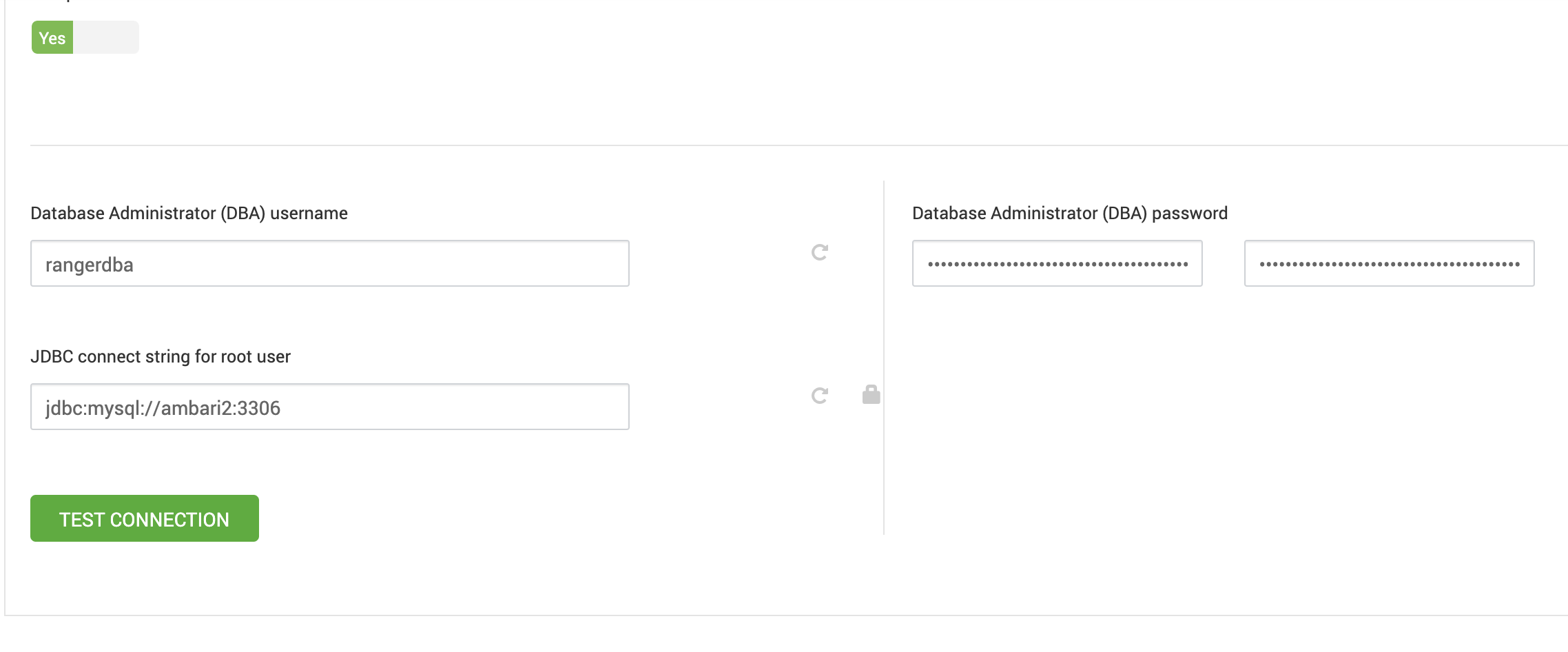

*The following fields set which account performs the database creation: enter the rangerdba user created above and click Test Connection. Do the same for Ranger KMS:

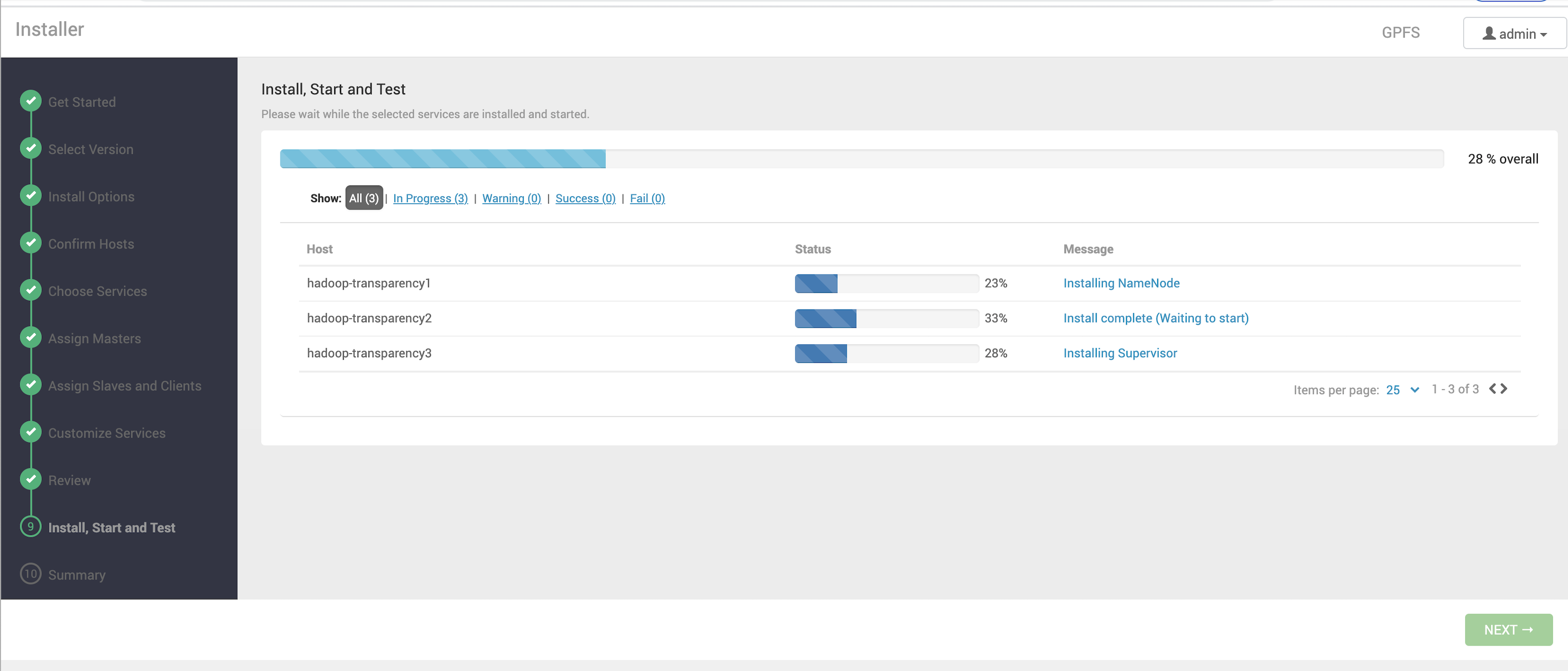
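For step 2, the Cloudera page linked above creates the rangerdba account with a few SQL statements. A sketch that prints equivalent statements for piping into the mysql client; it is modeled on that page rather than copied from it, the helper name ranger_dba_sql is hypothetical, and the password is a placeholder you should replace:

```shell
# Print SQL that creates a DBA account for Ranger / Ranger KMS.
# The password is not escaped, so it must not contain single quotes.
ranger_dba_sql() {
    if [ $# -lt 2 ] || [ -z "$2" ]; then
        echo "usage: ranger_dba_sql USER PASSWORD" >&2
        return 1
    fi
    user=$1
    pass=$2
    cat <<EOF
CREATE USER '$user'@'localhost' IDENTIFIED BY '$pass';
GRANT ALL PRIVILEGES ON *.* TO '$user'@'localhost' WITH GRANT OPTION;
CREATE USER '$user'@'%' IDENTIFIED BY '$pass';
GRANT ALL PRIVILEGES ON *.* TO '$user'@'%' WITH GRANT OPTION;
FLUSH PRIVILEGES;
EOF
}

# Usage on the MariaDB host ('ChangeMe' is a placeholder password):
#   systemctl start mariadb && systemctl enable mariadb
#   ranger_dba_sql rangerdba 'ChangeMe' | mysql -u root -p
```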

Start the installation and wait for it to finish:

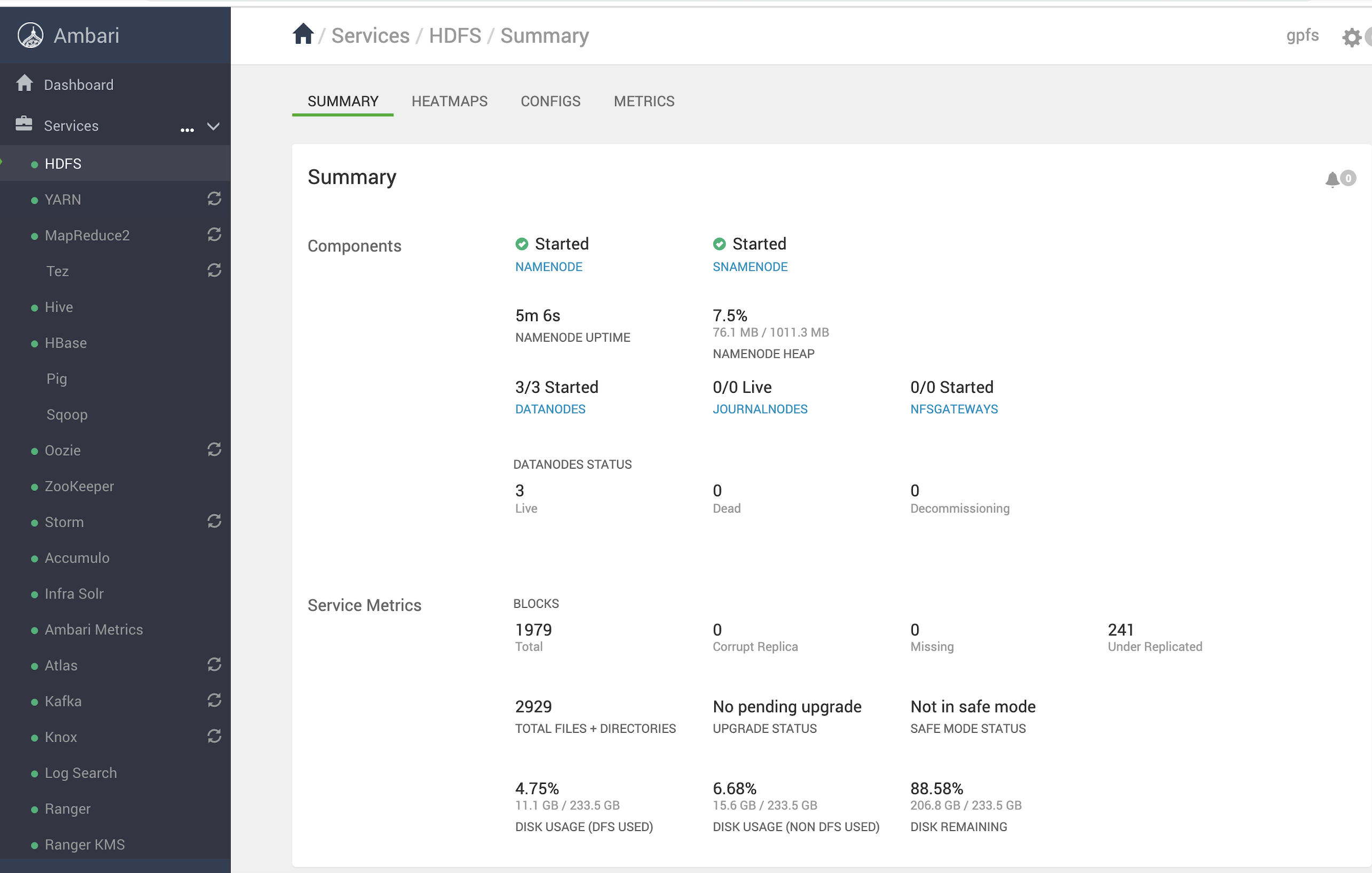

If the installation succeeds, all services turn green:

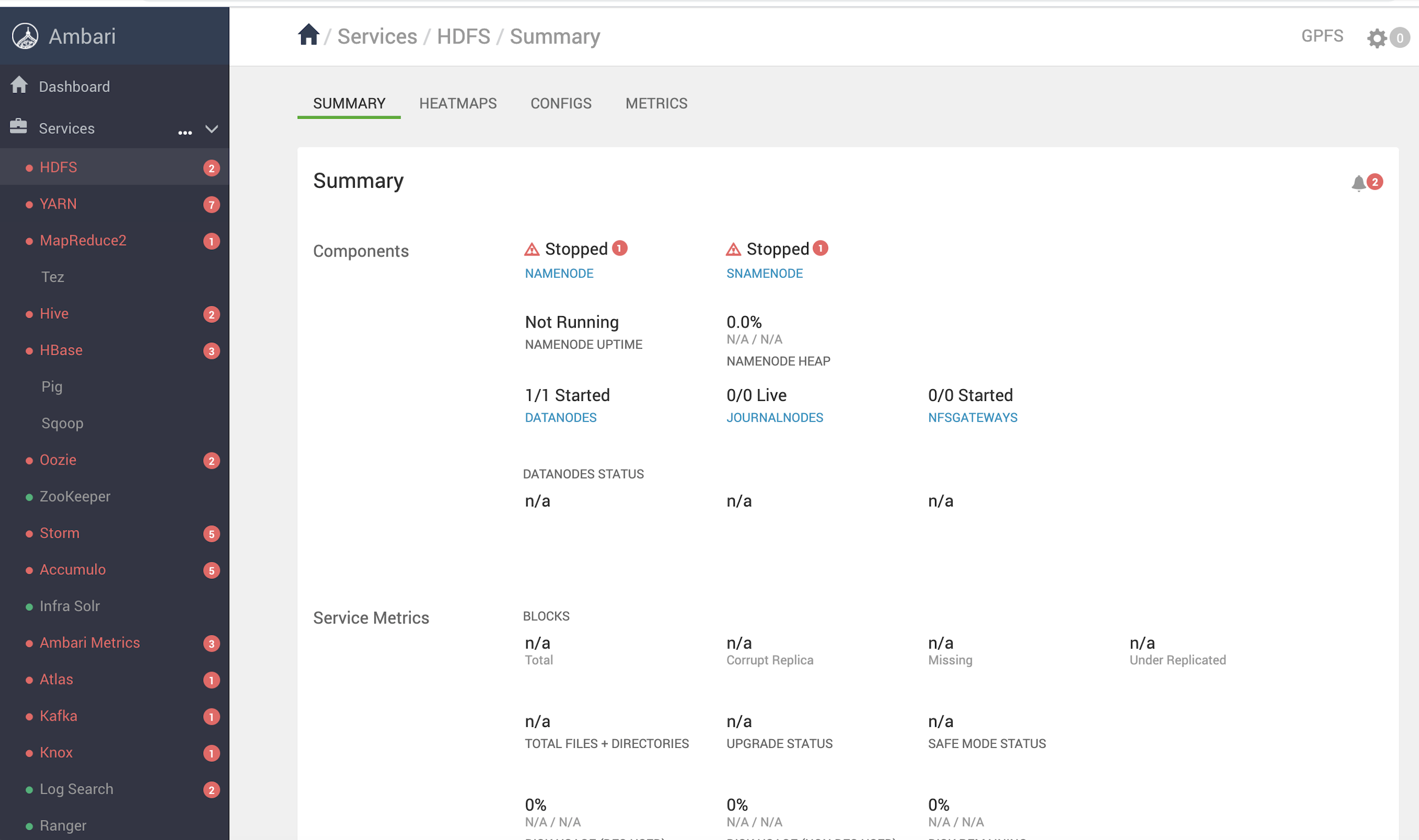

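If not, the failed services are shown in red. Click … and start all services; if a service still fails to start, check its logs under /var/log/{service} on the host where that component runs (the Ambari server's own log is /var/log/ambari-server/ambari-server.log).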

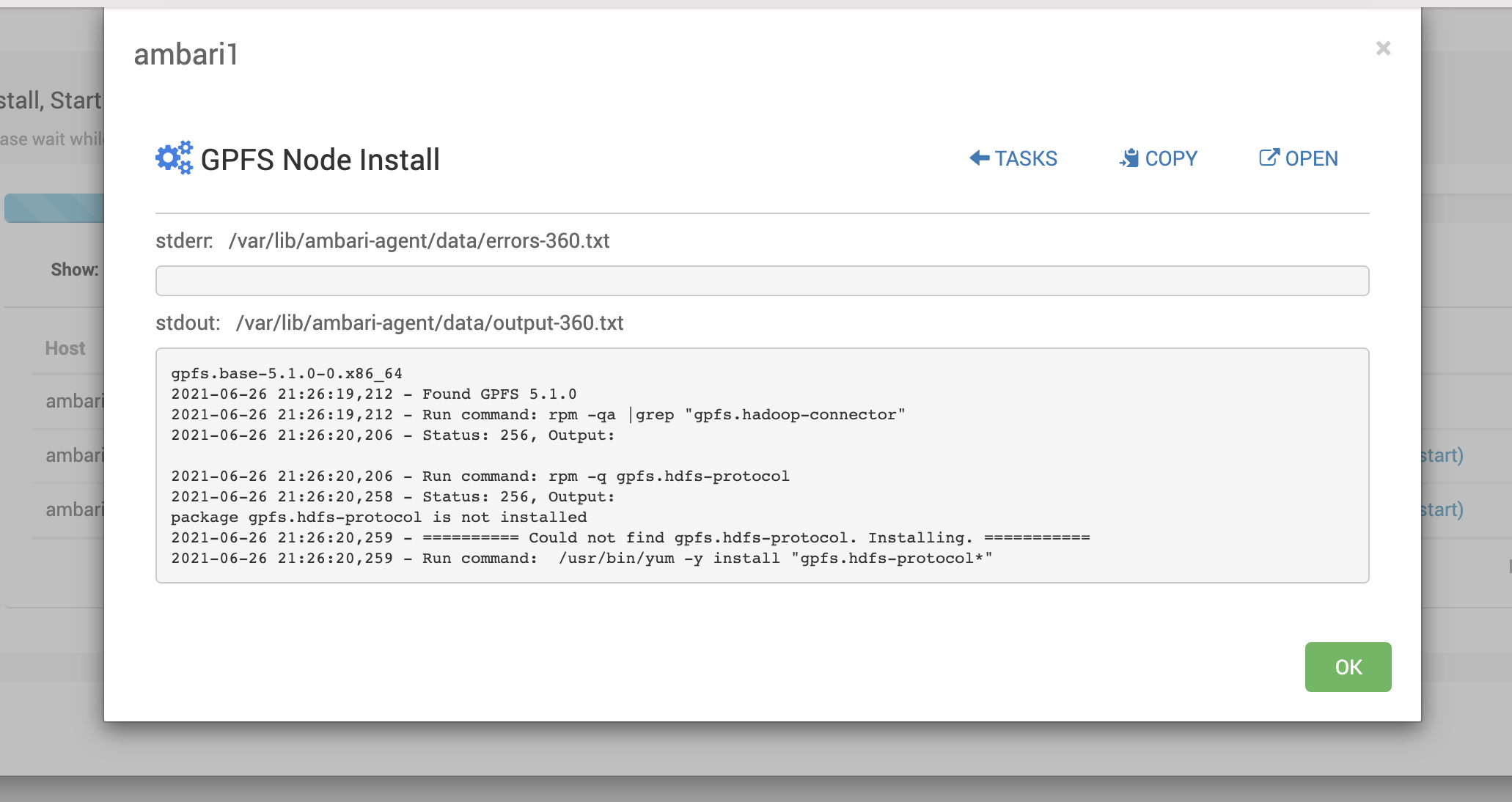
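When a service stays red, the newest files in its log directory are a reasonable place to start. A sketch that lists them, assuming logs live under /var/log/<service> (for example /var/log/hive) on the host running the component; the helper recent_logs and its LOG_ROOT override are hypothetical:

```shell
# List the newest *.log / *.out files under $LOG_ROOT/<service>, newest first.
# LOG_ROOT defaults to /var/log and can be overridden, e.g. for testing.
recent_logs() {
    service=$1
    count=${2:-5}
    logdir=${LOG_ROOT:-/var/log}/$service
    if [ ! -d "$logdir" ]; then
        echo "no log directory: $logdir" >&2
        return 1
    fi
    # find also picks up files in subdirectories; ls -t sorts by mtime
    find "$logdir" -type f \( -name '*.log' -o -name '*.out' \) -exec ls -t {} + |
        head -n "$count"
}

# Usage (on the host where the failed component runs):
#   recent_logs hive          # then: tail -n 100 <file>
```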

[Step 4. With the environment ready, install the GPFS MPack to replace community HDFS with HDFS Transparency]

The Ambari + HDP environment is now ready, but HDFS is still the community version (storing data on local disks rather than on a GPFS file system). Install the Spectrum Scale MPack to switch it to HDFS Transparency on GPFS.

0) Before installing the GPFS MPack, install a regular GPFS (5.1) cluster on the Ambari cluster nodes and create a file system, named hadoop in this example:

[root@ambari1 ~]# mmlscluster

GPFS cluster information

========================

GPFS cluster name: hadoop.ambari1

GPFS cluster id: 16688944068525815190

GPFS UID domain: hadoop.ambari1

Remote shell command: /usr/bin/ssh

Remote file copy command: /usr/bin/scp

Repository type: CCR

Node Daemon node name IP address Admin node name Designation

------------------------------------------------------------------

1 ambari1 10.61.0.90 ambari1 quorum

2 ambari2 10.61.0.91 ambari2

3 ambari3 10.61.0.92 ambari3

[root@ambari1 ~]# mmlsdisk hadoop

disk driver sector failure holds holds storage

name type size group metadata data status availability pool

------------ -------- ------ ----------- -------- ----- ------------- ------------ ------------

nsd_a nsd 512 1001 Yes Yes ready up system

nsd_b nsd 512 1001 Yes Yes ready up system

nsd_c nsd 512 1001 Yes Yes ready up system

The BDA documentation requires ESS and recommends a remote mount, but because this is a test environment, a file system built on local NSDs is sufficient.

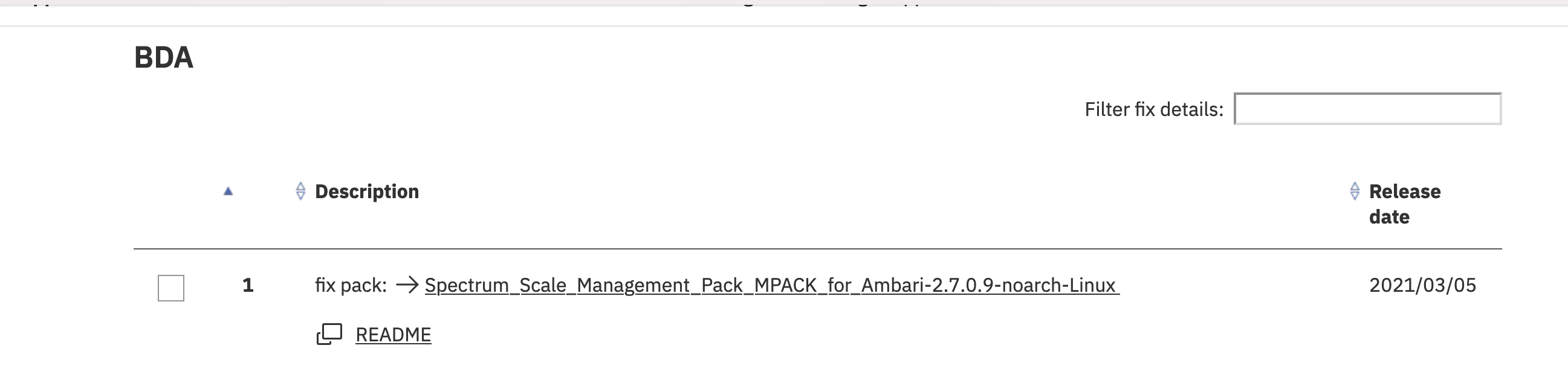
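Step 0 assumes the GPFS cluster and the hadoop file system already exist. One way to reproduce the layout reported by mmlsdisk (one NSD per node, nsd_a to nsd_c, failure group 1001, system pool) is an NSD stanza file passed to mmcrnsd and mmcrfs. A sketch under these assumptions: the device name /dev/sdb for the second 100G disk, the /tmp file paths, and the helper make_nsd_stanza are all hypothetical; check the Spectrum Scale command reference for the options your release expects:

```shell
# Print one NSD stanza per node: nsd_a, nsd_b, ... served by that node,
# failure group 1001, dataAndMetadata, system pool (as in mmlsdisk above).
# DEVICE is the second 100G disk on every node, e.g. /dev/sdb (assumption).
make_nsd_stanza() {
    device=$1; shift
    letters="a b c d e f g h "              # enough names for up to 8 nodes
    for node in "$@"; do
        if [ -z "$letters" ]; then
            echo "too many nodes (max 8)" >&2
            return 1
        fi
        letter=${letters%% *}               # first remaining letter
        letters=${letters#* }               # remove it from the list
        echo "%nsd: device=$device nsd=nsd_$letter servers=$node usage=dataAndMetadata failureGroup=1001 pool=system"
    done
}

# Possible sequence on ambari1 (paths under /tmp are assumptions):
#   printf 'ambari1:quorum\nambari2\nambari3\n' > /tmp/nodes.list
#   mmcrcluster -N /tmp/nodes.list -C hadoop -r /usr/bin/ssh -R /usr/bin/scp
#   mmchlicense server --accept -N all
#   mmbuildgpl                      # on every node: builds the portability layer
#   mmstartup -a
#   make_nsd_stanza /dev/sdb ambari1 ambari2 ambari3 > /tmp/nsd.stanza
#   mmcrnsd -F /tmp/nsd.stanza
#   mmcrfs hadoop -F /tmp/nsd.stanza -T /hadoop -A yes
#   mmmount hadoop -a
```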

a) Download the MPack from IBM Fix Central, where version 2.7.0.9 is available:

https://www.ibm.com/support/fixcentral/swg/selectFixes?parent=Software%20defined%20storage&product=ibm/StorageSoftware/IBM+Spectrum+Scale&release=5.0.5&platform=All&function=all#BDA

b) Extract and install the MPack on the Ambari server host:

https://www.ibm.com/docs/en/spectrum-scale-bda?topic=installation-install-mpack-package

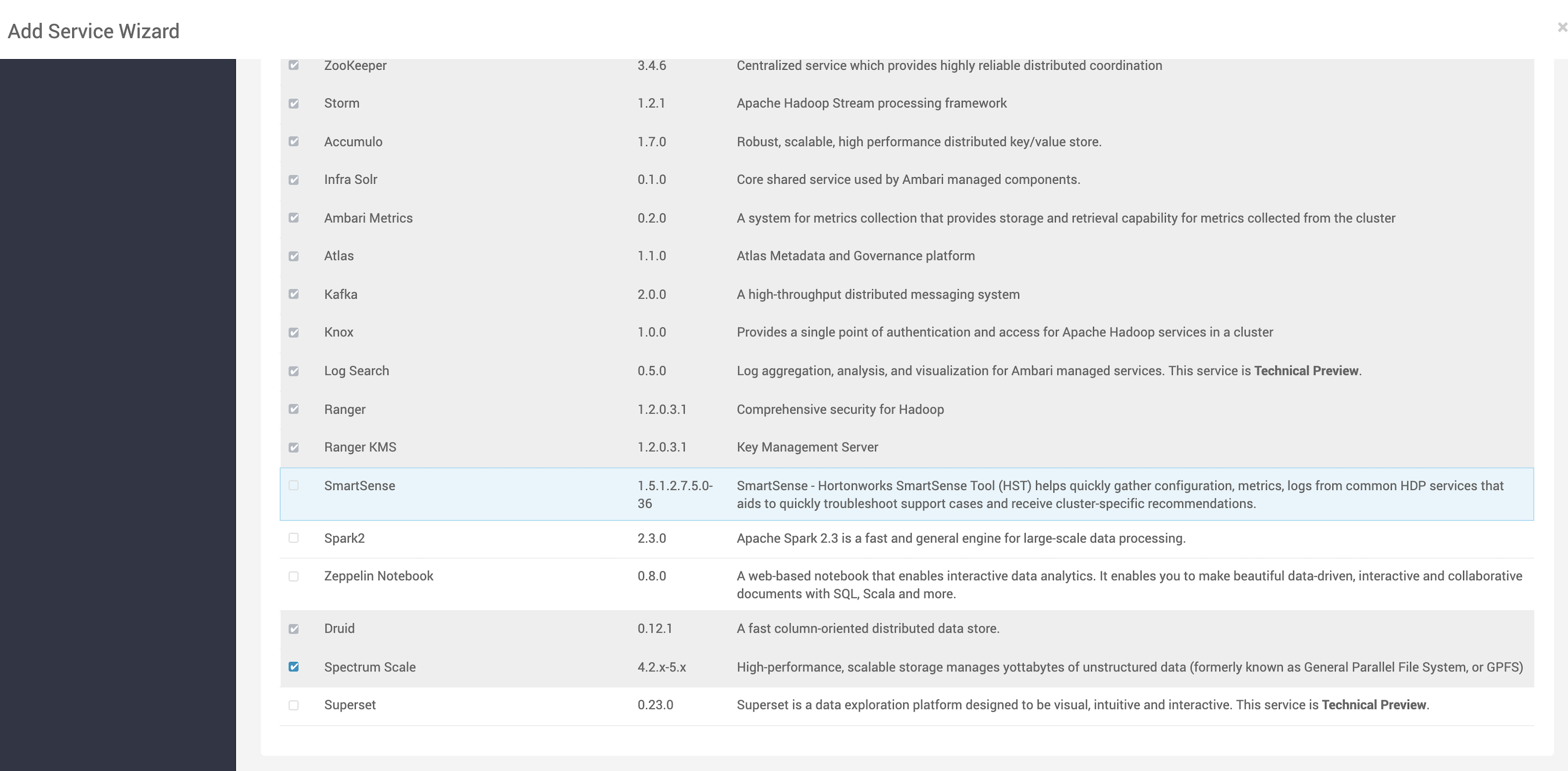

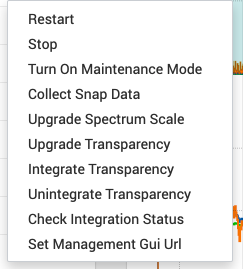

c) Once the MPack is installed, add the new service named "Spectrum Scale".

Choose the master node. The master node runs operations such as collecting snaps, upgrading HDFS Transparency, and checking status; normally it is the same node as the Ambari server.

Set the Ambari server credentials and the GPFS repository URL:

*Tip: The GPFS repository URL should also include the hdfs_rpms folder (run createrepo in that folder and serve it over HTTP). The MPack fetches the HDFS protocol (Transparency) RPM from there and installs it on the GPFS nodes.

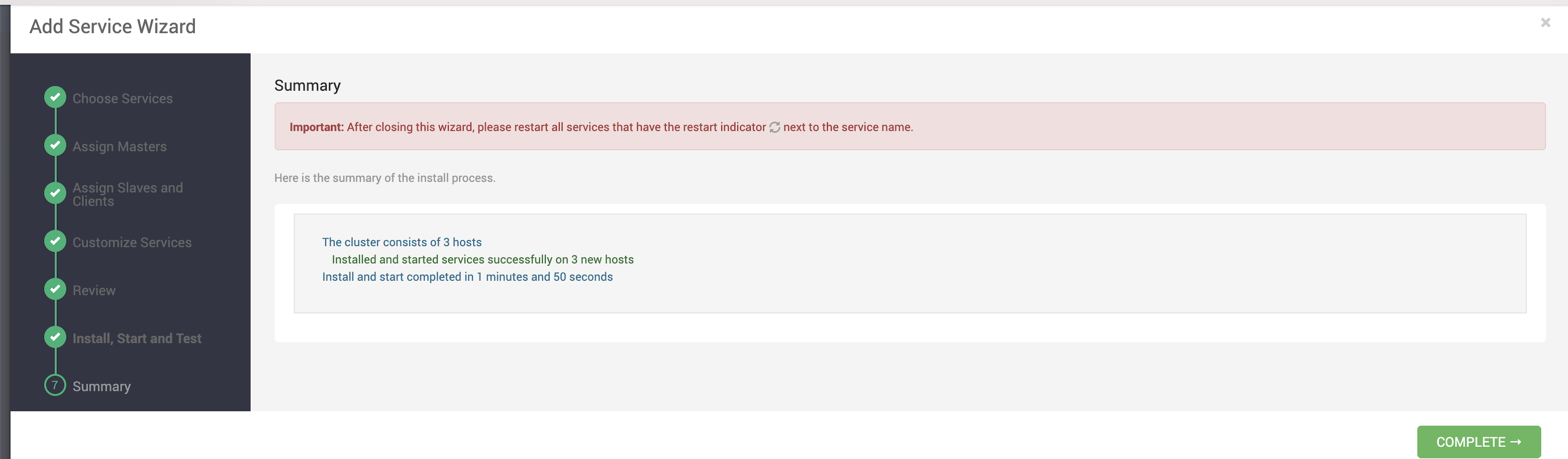

Finish:

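All done. Now verify that the native NameNode/DataNode services have been replaced by the HDFS Transparency services (their arguments include -Dhadoop.home.dir=/usr/lpp/mmfs/hadoop) and that the GPFS file system is mounted: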

[root@ambari1 ~]# ps -ef| grep -e namenode -e datanode

root 3275 1 0 Jun27 ? 00:06:07 /home/jdk1.8.0_271//bin/java -Dproc_datanode -Dhdp.version= -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -server -XX:ParallelGCThreads=4 -XX:+UseConcMarkSweepGC -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-datanode/bin/kill-data-node" -XX:ErrorFile=/var/log/hadoop/root/hs_err_pid%p.log -XX:NewSize=200m -XX:MaxNewSize=200m -Xloggc:/var/log/hadoop/root/gc.log-202106272013 -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -Xms1024m -Xmx1024m -Dhadoop.security.logger=INFO,DRFAS -Dhdfs.audit.logger=INFO,DRFAAUDIT -XX:CMSInitiatingOccupancyFraction=70 -XX:+UseCMSInitiatingOccupancyOnly -Djava.library.path=:/usr/hdp/3.1.5.0-97/hadoop/lib/native/Linux-amd64-64:/usr/lpp/mmfs/hadoop/lib/native -Dhadoop.log.dir=/var/log/hadoop/root -Dhadoop.log.file=hadoop-root-datanode-ambari1.log -Dhadoop.home.dir=/usr/lpp/mmfs/hadoop -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,RFA -Dhadoop.policy.file=hadoop-policy.xml org.apache.hadoop.hdfs.server.datanode.DataNode

root 6353 1 1 Jun27 ? 00:20:52 /home/jdk1.8.0_271//bin/java -Dproc_namenode -Dhdp.version= -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhdfs.audit.logger=INFO,NullAppender -server -XX:ParallelGCThreads=8 -XX:+UseConcMarkSweepGC -XX:ErrorFile=/var/log/hadoop/root/hs_err_pid%p.log -XX:NewSize=128m -XX:MaxNewSize=128m -Xloggc:/var/log/hadoop/root/gc.log-202106272014 -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -XX:CMSInitiatingOccupancyFraction=70 -XX:+UseCMSInitiatingOccupancyOnly -Xms1024m -Xmx1024m -Dhadoop.security.logger=INFO,DRFAS -Dhdfs.audit.logger=INFO,DRFAAUDIT -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -Dorg.mortbay.jetty.Request.maxFormContentSize=-1 -Djava.library.path=:/usr/hdp/3.1.5.0-97/hadoop/lib/native/Linux-amd64-64:/usr/lpp/mmfs/hadoop/lib/native -Dhadoop.log.dir=/var/log/hadoop/root -Dhadoop.log.file=hadoop-root-namenode-ambari1.log -Dhadoop.home.dir=/usr/lpp/mmfs/hadoop -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,RFA -Dhadoop.policy.file=hadoop-policy.xml org.apache.hadoop.hdfs.server.namenode.NameNode

root 32233 25238 0 23:03 pts/1 00:00:00 grep --color=auto -e namenode -e datanode

[root@ambari1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

…

hadoop 300G 6.4G 294G 3% /hadoop
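Reading the ps output by eye works for two processes; a sketch that does the same check mechanically, keying on the -Dhadoop.home.dir=/usr/lpp/mmfs/hadoop argument visible above. The helper name transparency_daemons is hypothetical, and the marker is taken from this lab's output rather than from IBM documentation:

```shell
# Classify NameNode/DataNode processes in `ps -ef` output read from stdin.
# A process counts as HDFS Transparency when its arguments contain
# -Dhadoop.home.dir=/usr/lpp/mmfs/hadoop; otherwise it is reported as native.
transparency_daemons() {
    while IFS= read -r line; do
        case $line in
            *-Dproc_namenode*) role=namenode ;;
            *-Dproc_datanode*) role=datanode ;;
            *) continue ;;                       # not an HDFS daemon (e.g. grep)
        esac
        case $line in
            *-Dhadoop.home.dir=/usr/lpp/mmfs/hadoop*) echo "$role: transparency" ;;
            *) echo "$role: native" ;;
        esac
    done
}

# Usage:
#   ps -ef | transparency_daemons
```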

[Other tips]

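- Set JAVA_HOME in /etc/profile so that all service users can reach the JDK. The JDK directory itself must also be readable by those users: on RHEL, /root is not accessible to non-root users, so a JDK under /root (as below) only works while the services run as root, as they do in this lab.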

export JAVA_HOME="/root/jdk1.8.0_271/"

export PATH=$PATH:/usr/lpp/mmfs/bin/

export PATH=$JAVA_HOME/bin:$PATH
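Rerunning these exports on every node, or twice on the same node, leaves duplicate lines in /etc/profile. A small sketch that appends a line only when it is missing; append_once is a hypothetical helper and the target file is a parameter:

```shell
# Append LINE to FILE unless FILE already contains exactly that line.
append_once() {
    file=$1
    line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage: the single quotes keep $PATH and $JAVA_HOME literal in the file.
#   for l in 'export JAVA_HOME="/root/jdk1.8.0_271/"' \
#            'export PATH=$PATH:/usr/lpp/mmfs/bin/' \
#            'export PATH=$JAVA_HOME/bin:$PATH'; do
#       append_once /etc/profile "$l"
#   done
```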

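- HiveServer2 failed to start because port 10002 (its Web UI port) had not been released by a previous process; the log shows java.net.BindException: Address already in use: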

2021-06-25T04:14:50,702 INFO [main]: server.HiveServer2 (HiveServer2.java:init(319)) - Starting Web UI on port 10002

2021-06-25T00:03:27,767 INFO [main]: server.HiveServer2 (HiveServer2.java:addServerInstanceToZooKeeper(551)) - Created a znode on ZooKeeper for HiveServer2 uri: ambari3:10000

2021-06-25T00:03:27,767 INFO [main]: metadata.HiveUtils (:()) - Adding metastore authorization provider: org.apache.hadoop.hive.ql.security.authorization.StorageBasedAuthorizationProvider

2021-06-25T00:03:27,768 INFO [main]: imps.CuratorFrameworkImpl (:()) - Starting

2021-06-25T00:03:27,768 INFO [main]: zookeeper.ZooKeeper (:()) - Initiating client connection, connectString=ambari1:2181,ambari3:2181,ambari2:2181 sessionTimeout=1200000 watcher=org.apache.curator.ConnectionState@17134190

2021-06-25T00:03:27,771 INFO [main-SendThread(ambari2:2181)]: zookeeper.ClientCnxn (:()) - Opening socket connection to server ambari2/10.61.0.91:2181. Will not attempt to authenticate using SASL (unknown error)

2021-06-25T00:03:27,772 INFO [main-SendThread(ambari2:2181)]: zookeeper.ClientCnxn (:()) - Socket connection established, initiating session, client: /10.61.0.92:35674, server: ambari2/10.61.0.91:2181

2021-06-25T00:03:27,775 INFO [main-SendThread(ambari2:2181)]: zookeeper.ClientCnxn (:()) - Session establishment complete on server ambari2/10.61.0.91:2181, sessionid = 0x27a414d81d60065, negotiated timeout = 60000

2021-06-25T00:03:27,775 INFO [main-EventThread]: state.ConnectionStateManager (:()) - State change: CONNECTED

2021-06-25T00:03:27,781 INFO [main]: server.HiveServer2 (HiveServer2.java:startPrivilegeSynchronizer(1048)) - Find 1 policy to synchronize, start PrivilegeSynchronizer

2021-06-25T00:03:27,935 INFO [HiveMaterializedViewsRegistry-0]: metadata.HiveMaterializedViewsRegistry (:()) - Materialized views registry has been initialized

2021-06-25T00:03:27,937 INFO [main]: server.Server (:()) - jetty-9.3.25.v20180904, build timestamp: 2018-09-04T17:11:46-04:00, git hash: 3ce520221d0240229c862b122d2b06c12a625732

2021-06-25T00:03:27,937 INFO [PrivilegeSynchronizer]: authorization.PrivilegeSynchronizer (:()) - Start synchronize privilege org.apache.hadoop.hive.ql.security.authorization.HDFSPermissionPolicyProvider

2021-06-25T00:03:27,982 INFO [main]: http.CrossOriginFilter (:()) - Allowed Methods: GET,POST,DELETE,HEAD

2021-06-25T00:03:27,982 INFO [main]: http.CrossOriginFilter (:()) - Allowed Headers: X-Requested-With,Content-Type,Accept,Origin,X-Requested-By,x-requested-by

2021-06-25T00:03:27,982 INFO [main]: http.CrossOriginFilter (:()) - Allowed Origins: *

2021-06-25T00:03:27,982 INFO [main]: http.CrossOriginFilter (:()) - Allow All Origins: true

2021-06-25T00:03:27,982 INFO [main]: http.CrossOriginFilter (:()) - Max Age: 1800

2021-06-25T00:03:27,983 INFO [main]: handler.ContextHandler (:()) - Started o.e.j.w.WebAppContext@4a660b34{/,file:///tmp/jetty-0.0.0.0-10002-hiveserver2-_-any-8092909205933695034.dir/webapp/,AVAILABLE}{jar:file:/usr/hdp/3.1.5.0-97/hive/lib/hive-service-3.1.0.3.1.5.0-97.jar!/hive-webapps/hiveserver2}

2021-06-25T00:03:27,983 INFO [main]: handler.ContextHandler (:()) - Started o.e.j.s.ServletContextHandler@362a561e{/static,jar:file:/usr/hdp/3.1.5.0-97/hive/lib/hive-service-3.1.0.3.1.5.0-97.jar!/hive-webapps/static,AVAILABLE}

2021-06-25T00:03:27,983 INFO [main]: handler.ContextHandler (:()) - Started o.e.j.s.ServletContextHandler@2df3545d{/logs,file:///var/log/hive/,AVAILABLE}

2021-06-25T00:03:27,984 ERROR [main]: server.HiveServer2 (HiveServer2.java:start(744)) - Error starting Web UI:

java.net.BindException: Address already in use

at sun.nio.ch.Net.bind0(Native Method) ~[?:1.8.0_271]

at sun.nio.ch.Net.bind(Net.java:444) ~[?:1.8.0_271]

at sun.nio.ch.Net.bind(Net.java:436) ~[?:1.8.0_271]

at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:225) ~[?:1.8.0_271]

at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74) ~[?:1.8.0_271]

at org.eclipse.jetty.server.ServerConnector.openAcceptChannel(ServerConnector.java:351) ~[jetty-runner-9.3.25.v20180904.jar:9.3.25.v20180904]

at org.eclipse.jetty.server.ServerConnector.open(ServerConnector.java:319) ~[jetty-runner-9.3.25.v20180904.jar:9.3.25.v20180904]

at org.eclipse.jetty.server.AbstractNetworkConnector.doStart(AbstractNetworkConnector.java:80) ~[jetty-runner-9.3.25.v20180904.jar:9.3.25.v20180904]

at org.eclipse.jetty.server.ServerConnector.doStart(ServerConnector.java:235) ~[jetty-runner-9.3.25.v20180904.jar:9.3.25.v20180904]

at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:68) ~[jetty-runner-9.3.25.v20180904.jar:9.3.25.v20180904]

at org.eclipse.jetty.server.Server.doStart(Server.java:406) ~[jetty-runner-9.3.25.v20180904.jar:9.3.25.v20180904]

at org.eclipse.jetty.util.component.AbstractLifeCycle.start(AbstractLifeCycle.java:68) ~[jetty-runner-9.3.25.v20180904.jar:9.3.25.v20180904]

at org.apache.hive.http.HttpServer.start(HttpServer.java:254) ~[hive-common-3.1.0.3.1.5.0-97.jar:3.1.0.3.1.5.0-97]

at org.apache.hive.service.server.HiveServer2.start(HiveServer2.java:741) [hive-service-3.1.0.3.1.5.0-97.jar:3.1.0.3.1.5.0-97]

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:1078) [hive-service-3.1.0.3.1.5.0-97.jar:3.1.0.3.1.5.0-97]

at org.apache.hive.service.server.HiveServer2.access$1700(HiveServer2.java:136) [hive-service-3.1.0.3.1.5.0-97.jar:3.1.0.3.1.5.0-97]

at org.apache.hive.service.server.HiveServer2$StartOptionExecutor.execute(HiveServer2.java:1346) [hive-service-3.1.0.3.1.5.0-97.jar:3.1.0.3.1.5.0-97]

at org.apache.hive.service.server.HiveServer2.main(HiveServer2.java:1190) [hive-service-3.1.0.3.1.5.0-97.jar:3.1.0.3.1.5.0-97]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_271]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_271]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_271]