Spectrum Scale is a complex product for something that is "just" a filesystem, especially when you only run it on a single server. It's designed for many servers, multiple sites, and multiple filesystems. We're keeping it simple in this blog series on home NAS: just one server and one filesystem. Still, it's a good idea to have a little background on how Spectrum Scale works and how you can tune it. Let's go!

The Spectrum Scale daemon (mmfsd) does ALL the work. We have some extra services around it, like mmsysmon (health), gpfsgui, ssh, and the SMB daemon, but core file system and cluster services are provided by the multi-threaded GPFS daemon over a single TCP/IP port, 1191. The cluster and filesystem configuration is stored in the /var/mmfs/gen/mmsdrfs file, with additional information stored in the Central Cluster Repository and other directories under /var/mmfs/. You should change settings using the mmch* commands only:

- mmchconfig: Change cluster settings, for the whole cluster, per node, or per node class

- mmchnode: Change node designations like manager, quorum, etc.

- mmchfs: Change filesystem settings like quota and default replication

- mmchattr: Change individual file attributes like compression and replication

If you want to know what's happening in the cluster, that's where the mmdiag command comes in. A few helpful options are:

- mmdiag --waiters : shows mmfsd threads that are waiting on something, possibly due to slow IO.

- mmdiag --config : shows ALL the cluster settings for this node.

- mmdiag --stats : shows cache utilisation

The GPFS filesystem cache is not like the regular Linux page cache, as GPFS IO is processed in user space. You specify a fixed amount of memory for cache usage, called the pagepool, which stores cached file data pages. Separate from this are the number of files cached (maxFilesToCache) and the number of file stats that may be cached (maxStatCache). For a full description, see: https://www.ibm.com/docs/en/spectrum-scale/5.1.1?topic=configuring-parameters-performance-tuning-optimization

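We'll set a few cluster-wide parameters to do basic tuning. Without the -i option, mmchconfig changes like these generally take effect only after GPFS is restarted on the node: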

# mmchconfig pagepool=1G

# mmchconfig maxFilesToCache=10000

# mmchconfig maxStatCache=20000
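If you'd rather derive the pagepool from the amount of RAM in the box than pick a fixed number, a small helper can do the arithmetic. This is only a sketch: the function name `suggest_pagepool_mib` is made up for this post, and the 25% share is an arbitrary illustrative choice, not an IBM recommendation.

```shell
#!/bin/sh
# Hypothetical helper: suggest a pagepool size in MiB as a share of RAM.
# Arguments: total memory in KiB, percentage of RAM to give to the pagepool.
# The percentage is an assumption you have to choose yourself.
suggest_pagepool_mib() {
    total_kib=$1
    percent=$2
    echo $(( total_kib * percent / 100 / 1024 ))
}

# Example: an 8 GiB machine (8388608 KiB) with an assumed 25% share.
echo "pagepool=$(suggest_pagepool_mib 8388608 25)M"
```

On a real Linux node you could feed it the MemTotal value from /proc/meminfo, which is reported in KiB, and pass the result to mmchconfig.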

If you want to see which files are in file cache, use the mmcachectl command:

# mmcachectl show --show-filename

FSname Fileset Inode SnapID FileType NumOpen NumDirect Size Cached Cached FileName

------------------------------------------------------------------------------------------------------------------------------

nas1 0 4040 0 directory 0 0 3968 0 FD /nas1/ces/ces/connections

nas1 1 66097 0 file 0 0 2614817792 939524096 F /nas1/Documents/SpectrumArchive_1.3.0.5_TrialVM.ova
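The Size and Cached columns in that listing tell you how much of each file currently sits in the pagepool. A minimal sketch to turn them into a percentage is below. The column positions (8 for Size, 9 for cached bytes, 11 for the file name) are read off the sample rows above and are an assumption; other releases may print different columns, and file names containing spaces would need extra handling. The function name `cached_share` is made up for this post.

```shell
#!/bin/sh
# Print the cached share of each regular file, reading output in the
# format of `mmcachectl show --show-filename` on stdin.
# Assumed columns: $5 = FileType, $8 = Size, $9 = Cached bytes, $11 = FileName.
cached_share() {
    awk '$5 == "file" && $8 > 0 { printf "%.1f%% %s\n", $9 / $8 * 100, $11 }'
}

# Sample rows copied from the output above.
cached_share <<'EOF'
nas1 0 4040 0 directory 0 0 3968 0 FD /nas1/ces/ces/connections
nas1 1 66097 0 file 0 0 2614817792 939524096 F /nas1/Documents/SpectrumArchive_1.3.0.5_TrialVM.ova
EOF
```

On a live system the same function can be fed directly with `mmcachectl show --show-filename | cached_share`; the header and separator lines are skipped because their fifth field is not "file".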

Each filesystem has its own settings, and most of them control functionality rather than performance. A few parameters do influence performance:

- Block size: cannot be changed after the filesystem is created; data is written to each disk in a pool with this as the maximum IO size.

- Replication: the maximum setting cannot be changed afterwards, but the default values can. Set the default to two, and every write is done twice.

- write-cache-threshold: if you have fast SSDs for the system pool, small writes (up to 64k) are stored in the recovery log, which also needs to be large enough. Look up HAWC (highly available write cache) in the manual for more information.

Not a performance-related parameter, but important when managing a GPFS file system, is the number of inodes (file/directory objects) that are defined and pre-allocated. Unlike most filesystems, GPFS has a maximum setting for inodes per filesystem and per fileset. You can change this value, and also pre-allocate inodes so new files can be created faster. You can see the current usage with the mmdf command:

# mmdf nas1 | tail -6

Inode Information

-----------------

Total number of used inodes in all Inode spaces: 4075

Total number of free inodes in all Inode spaces: 564245

Total number of allocated inodes in all Inode spaces: 568320

Total of Maximum number of inodes in all Inode spaces: 568320
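These counters are easy to turn into a single "how much room is left" number. The sketch below reads mmdf-style output on stdin and prints the percentage of allocated inodes that are still free. It assumes the line labels look exactly like the ones above; the function name `inode_free_pct` is made up for this post.

```shell
#!/bin/sh
# Report the percentage of allocated inodes that are still free,
# based on the "Inode Information" lines printed by mmdf.
inode_free_pct() {
    awk -F': *' '
        /Total number of free inodes/      { free = $2 }
        /Total number of allocated inodes/ { alloc = $2 }
        END { if (alloc > 0) printf "%.1f\n", free / alloc * 100 }
    '
}

# Sample lines copied from the mmdf output above.
inode_free_pct <<'EOF'
Total number of used inodes in all Inode spaces: 4075
Total number of free inodes in all Inode spaces: 564245
Total number of allocated inodes in all Inode spaces: 568320
Total of Maximum number of inodes in all Inode spaces: 568320
EOF
```

On the NAS itself you would pipe the real command into it, for example `mmdf nas1 | inode_free_pct`, and compare the result against whatever threshold you're comfortable with.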

It's important to keep some inodes free, so if the number of free inodes runs low, you can change the values with mmchfs:

# mmchfs nas1 --inode-limit=2M:1M

# mmlsfileset nas1 -L

Filesets in file system 'nas1':

Name Id RootInode ParentId Created InodeSpace MaxInodes AllocInodes Comment

root 0 3 -- Mon Apr 12 18:03:44 2021 0 2097152 1049600 root fileset

Documents 1 4042 0 Tue Apr 13 09:06:55 2021 0 0 0

WinShare 2 4044 0 Tue Apr 13 09:20:40 2021 0 0 0

For independent filesets, setting inodes works in a similar way: you set a maximum number of inodes and a pre-allocated number of inodes, and these live in an inode space separate from the main filesystem. Dependent filesets always use inodes from the main inode space. In this example I'll create a new independent fileset and then increase only the maximum inodes, as we still have plenty of pre-allocated inodes:

# mmcrfileset nas1 new-fileset --inode-space new

# mmlinkfileset nas1 new-fileset -J /nas1/new-fileset

Fileset new-fileset linked at /nas1/new-fileset

# mmlsfileset nas1 new-fileset -L

Filesets in file system 'nas1':

Name Id RootInode ParentId Created InodeSpace MaxInodes AllocInodes Comment

new-fileset 5 786435 0 Mon Jul 5 15:01:01 2021 6 100352 100352

# mmchfileset nas1 new-fileset --inode-limit 200K

Set maxInodes for inode space 6 to 204800

Fileset new-fileset changed.

# mmlsfileset nas1 new-fileset -L

Filesets in file system 'nas1':

Name Id RootInode ParentId Created InodeSpace MaxInodes AllocInodes Comment

new-fileset 5 786435 0 Mon Jul 5 15:01:01 2021 6 204800 113664
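Judging by the output above, the K and M suffixes are binary multiples: 200K became 204800 and 2M became 2097152. A tiny helper (the name `expand_inodes` is made up for this post) shows the arithmetic, which is handy when you compare what you typed with what mmlsfileset reports:

```shell
#!/bin/sh
# Expand a K or M suffix into a plain number using binary multiples
# (K = 1024, M = 1024 * 1024), matching the values reported above.
expand_inodes() {
    case $1 in
        *K) echo $(( ${1%K} * 1024 )) ;;
        *M) echo $(( ${1%M} * 1024 * 1024 )) ;;
        *)  echo "$1" ;;
    esac
}

expand_inodes 200K   # the value passed to mmchfileset above
expand_inodes 2M     # the maximum passed to mmchfs above
```

The AllocInodes column does not always match exactly: asking for 1M (1048576) pre-allocated inodes shows up as 1049600 in the listing, so GPFS appears to round the allocation up.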

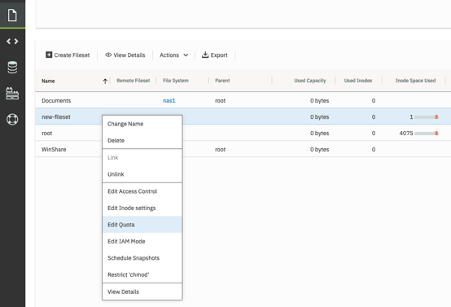

You can of course also do this in the GUI, which is perhaps a little easier, and a lot easier when setting the maximum usage for an independent fileset. Open the web GUI, go to Files, Filesets in the left bar, right-click new-fileset and select "Edit Quota".

You will be greeted by a warning message saying: "Quota is Disabled for the File System nas1"

As this is true, let's enable Quota and fileset specific "Disk Free" information:

# mmchfs nas1 -Q yes

# mmchfs nas1 --filesetdf

Reload the web page to refresh the information, and try again:

The GUI said OK, so all is well, right? Let's check that on the command line:

# mmlsquota -j new-fileset nas1

Block Limits | File Limits

Filesystem type KB quota limit in_doubt grace | files quota limit in_doubt grace Remarks

nas1 FILESET no limits

nas1: Quota management has been enabled but quota accounting information is outdated. Run mmcheckquota to correct and update quota information.

# mmcheckquota nas1

nas1: Start quota check

1 % complete on Mon Jul 5 15:32:49

...

100 % complete on Mon Jul 5 15:33:09 2021

Finished scanning the inodes for nas1.

Merging results from scan.

mmcheckquota: Command completed.

# mmlsquota -j new-fileset --block-size=auto nas1

Block Limits | File Limits

Filesystem type blocks quota limit in_doubt grace | files quota limit in_doubt grace Remarks

nas1 FILESET 0 500G 500G 0 none | 1 0 0 0 none

# mmlsfileset nas1 new-fileset -d --block-size=auto

Collecting fileset usage information ...

Filesets in file system 'nas1':

Name Status Path Data

new-fileset Linked /nas1/new-fileset 4M
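If you enable quotas from a script, the "accounting information is outdated" message shown earlier is the thing to watch for. Here is a minimal sketch that scans mmlsquota output for that text and tells you whether mmcheckquota still needs to run. The function name `quota_check_needed` is made up for this post, and matching on the message text is an assumption: the wording may differ between releases, and the message may be printed on stderr, in which case you'd add `2>&1` when piping.

```shell
#!/bin/sh
# Return success (0) when the text on stdin contains the
# "quota accounting information is outdated" hint from mmlsquota.
quota_check_needed() {
    grep -q 'quota accounting information is outdated'
}

# Sample message copied from the mmlsquota output above.
if quota_check_needed <<'EOF'
nas1: Quota management has been enabled but quota accounting information is outdated. Run mmcheckquota to correct and update quota information.
EOF
then
    echo "run: mmcheckquota nas1"
else
    echo "quota accounting is current"
fi
```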

When exporting the new fileset with SMB and mounting it on my Mac, the df output shows the difference between a dependent fileset and a quota-enabled independent fileset:

root@scalenode1:~# mmsmb export add new-fileset /nas1/new-fileset/

mmsmb export add: The SMB export was created successfully.

maarten@MacBookPro ~ % df -h -T smbfs

//maarten@192.168.178.199/Documents 1.1Ti 18Gi 1.1Ti 2% 18620414 1208225792 2% /Volumes/Documents

//maarten@192.168.178.199/new-fileset 500Gi 0Bi 500Gi 0% 18446744073709551614 524288000 3518437222305% /Volumes/new-fileset

For some reason the inode information is a little off and looks rather strange: the reported 18446744073709551614 used inodes is 2^64 - 2, which suggests a small negative value being displayed as an unsigned 64-bit number. Anyway, this concludes the filesystem tuning and quota part of the blog, and indeed the blog itself. There are many more subjects that can be discussed, but for building a home NAS I think this should give you a good start. Comment below if you have a subject you really think should be included and I'll consider it. Thanks for reading, and enjoy Spectrum Scale!
