IBM Storage Ceph Object Storage Attribute-based Access Control (ABAC) Authorization

Introduction

Safeguarding confidential data is more important than ever. With the adoption of cloud technology, efficient access control mechanisms are essential to comply with industry regulations on data. Because of its cloud-native nature, object storage is the most popular access method in the modern analytics tool landscape, and analytical datasets inherently need to be shared among different teams. IBM Storage Ceph, in conjunction with STS, IAM, and Attribute-Based Access Control (ABAC) authorization, helps us achieve this objective.

Let's briefly introduce STS and IAM Roles.

STS

Ceph provides the Security Token Service (STS) feature. STS is a set of APIs that return a temporary set of S3 access and secret keys. IBM Storage Ceph supports a subset of the AWS Security Token Service (STS) APIs. Users first authenticate against STS and, upon success, receive a short-lived S3 access key and secret key that they can use in subsequent requests.

IAM Roles

Identity and Access Management (IAM) is a powerful tool for controlling access to your resources. With IAM, you can apply various access control techniques, such as user- and resource-based policies. Additionally, you can use Attribute-Based Access Control (ABAC) by using the Security Token Service (STS) to pass attributes to IAM policies. This gives you more granular control over who can access your resources, based on custom attributes that can come, for example, from your IdP schema.

S3 Tags

The IBM Storage Ceph S3 implementation allows users to assign tags, which are arbitrary key-value pairs, to resources such as objects, buckets, and roles. Tags give users the flexibility to categorize resources as they see fit. Once resources are tagged, attribute-based access control (ABAC) can use those tags to control access to them.

Chapter 7 of the IBM Storage Ceph Solutions Guide Redbook covers these features in more depth.

Introducing S3 IAM with ABAC Authorization

The conventional authorization scheme in IBM Storage Ceph Object Storage with IAM roles provides Role-Based Access Control (RBAC). With RBAC, roles are defined based on service or job functions (implemented as IAM roles) and granted the necessary privileges. However, when new resources are added, the privileges assigned to principals may need to be modified, which becomes challenging to manage at scale.

An alternative way to authorize access to resources is to assign tags to both principals and resources and then grant permissions based on the combination of these tags. For instance, all principals tagged as belonging to Project Orange can access resources tagged as belonging to Project Orange, while principals tagged as belonging to Project Blue can access only resources tagged as belonging to Project Blue. This authorization model, known as attribute-based access control (ABAC), can result in fewer and smaller permission policies that rarely need updating, even as new principals and resources are added to the accounts. In practice, a combination of ABAC and RBAC can be used to achieve the desired access control policies.
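The following minimal Python sketch makes the contrast concrete. The resource names, the Project tag values, and both functions are hypothetical illustrations, not Ceph APIs. The RBAC check looks up an explicit per-role list of resources, while the ABAC check applies one rule that compares the principal's Project tag with the resource's.

```python
from typing import Dict, Set


def rbac_allows(role_grants: Dict[str, Set[str]], role: str, resource: str) -> bool:
    """RBAC: a role may access only the resources explicitly granted to it."""
    return resource in role_grants.get(role, set())


def abac_allows(resource_tags: Dict[str, str], principal_project: str, resource: str) -> bool:
    """ABAC: one rule for everyone; the principal's tag must equal the resource's tag."""
    return resource_tags.get(resource) == principal_project


# Hypothetical resources, each tagged with the project it belongs to.
resource_tags = {"sales-data": "Orange", "web-logs": "Orange", "reports": "Blue"}
# Hypothetical RBAC grants that enumerate resources per role.
role_grants = {"orange-team": {"sales-data", "web-logs"}, "blue-team": {"reports"}}

# A new Orange resource is added: with ABAC, tagging it is enough ...
resource_tags["archive"] = "Orange"
print(abac_allows(resource_tags, "Orange", "archive"))     # True
print(abac_allows(resource_tags, "Blue", "archive"))       # False
# ... with RBAC, the role must be edited before it can reach the new resource.
print(rbac_allows(role_grants, "orange-team", "archive"))  # False
```

Under ABAC, the new resource only needed a tag. Under RBAC, the grant table itself would have to change.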

How IBM Storage Ceph Object implements ABAC

You still have principals and resources with identity-based and resource-based permission policies. However, instead of listing many specifics in the principal and resource fields, an ABAC permission policy uses wildcards in those fields and implements the actual logic with conditions. There are five primary tag keys to use in policy conditions:

- aws:ResourceTag: controls access based on the values of tags attached to the resource being accessed.

- aws:RequestTag: controls the tag values that can be assigned to or removed from resources.

- aws:TagKeys: controls access based on the tag keys specified in a request. This is a multi-valued condition key.

- aws:PrincipalTag: controls access based on the values of tags attached to the principal making the API request.

- s3:ResourceTag: compares tags on the S3 resource (bucket or object) with those in the role's permission policy.

To make this easier to understand, consider the following JSON policy statement on an IAM role:

```json
{
  "Effect": "Allow",
  "Action": ["s3:*"],
  "Resource": ["*"],
  "Condition": {"StringEquals": {"s3:ResourceTag/Department": "Orange"}}
}
```

This statement allows the principal assuming the role to perform any S3 action on any bucket or object, as long as that bucket or object has a tag named “Department” whose value is “Orange”.
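The following Python sketch mimics how this condition is evaluated. It is a deliberate simplification that handles only StringEquals with s3:ResourceTag keys and single string values. The function name condition_matches is hypothetical and not part of Ceph.

```python
import json

# The policy statement from the text, with a fixed tag value.
statement = json.loads("""
{
  "Effect": "Allow",
  "Action": ["s3:*"],
  "Resource": ["*"],
  "Condition": {"StringEquals": {"s3:ResourceTag/Department": "Orange"}}
}
""")

PREFIX = "s3:ResourceTag/"


def condition_matches(condition: dict, resource_tags: dict) -> bool:
    """Simplified check: every StringEquals entry must name an s3:ResourceTag key
    whose value on the resource equals the policy's string value."""
    for key, expected in condition.get("StringEquals", {}).items():
        if not key.startswith(PREFIX):
            return False  # other condition keys are out of scope for this sketch
        if resource_tags.get(key[len(PREFIX):]) != expected:
            return False  # a missing or different tag value fails the condition
    return True


print(condition_matches(statement["Condition"], {"Department": "Orange"}))  # True
print(condition_matches(statement["Condition"], {"Department": "Blue"}))    # False
print(condition_matches(statement["Condition"], {}))                        # False
```

An untagged resource fails the condition just like a resource with the wrong value.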

However, to leverage the full power of ABAC, we must introduce policy variables in the condition statements. Let's examine the following JSON policy statement:

```json
{
  "Effect": "Allow",
  "Action": ["s3:*"],
  "Resource": ["*"],
  "Condition": {"StringEquals": {"s3:ResourceTag/Department": "${aws:PrincipalTag/Department}"}}
}
```

Now, a principal that assumes the role with a “Department” tag of “Orange” can access only the buckets/objects whose “Department” tag is also “Orange”, and a principal tagged “Blue” only those tagged “Blue”. This avoids the need to create permission policies that know about every tag value in use and dramatically reduces the number of roles that platform administrators need to maintain.
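The following minimal Python sketch shows how the policy variable is resolved before the StringEquals comparison. It assumes a flat request context keyed by condition-key name, which is a simplification. The names resolve and string_equals are hypothetical and are not RGW internals.

```python
import re
from typing import Dict, Optional

# Matches policy variables such as ${aws:PrincipalTag/Department}.
POLICY_VARIABLE = re.compile(r"\$\{([^}]+)\}")


def resolve(value: str, request_context: Dict[str, str]) -> Optional[str]:
    """Substitute ${key} variables from the request context; None if any key is missing."""
    keys = POLICY_VARIABLE.findall(value)
    if any(key not in request_context for key in keys):
        return None
    return POLICY_VARIABLE.sub(lambda m: request_context[m.group(1)], value)


def string_equals(condition_key: str, policy_value: str, request_context: Dict[str, str]) -> bool:
    """StringEquals with variable substitution; a missing key never matches."""
    expected = resolve(policy_value, request_context)
    actual = request_context.get(condition_key)
    return expected is not None and actual == expected


policy_value = "${aws:PrincipalTag/Department}"
orange_user_orange_bucket = {"aws:PrincipalTag/Department": "Orange",
                             "s3:ResourceTag/Department": "Orange"}
blue_user_orange_bucket = {"aws:PrincipalTag/Department": "Blue",
                           "s3:ResourceTag/Department": "Orange"}

print(string_equals("s3:ResourceTag/Department", policy_value, orange_user_orange_bucket))  # True
print(string_equals("s3:ResourceTag/Department", policy_value, blue_user_orange_bucket))    # False
```

The same policy string serves every department. Only the tags in the request context change.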

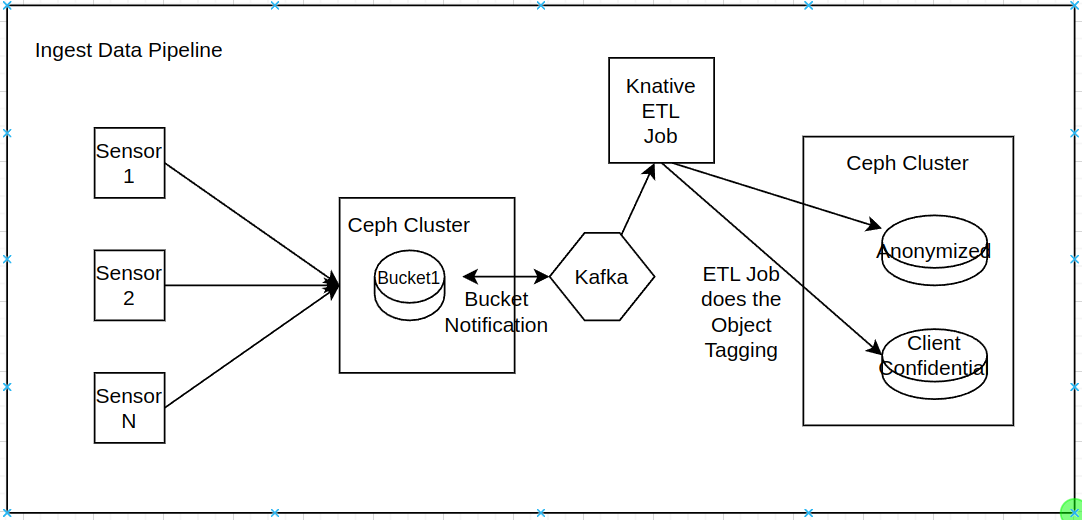

ABAC has its challenges, such as maintaining the correct tags on each bucket/object and controlling who can add or remove tags, because tags are the key to accessing the data. One approach is to have the ETL process that ingests data into IBM Storage Ceph tag the objects as it stores them in the bucket.

Hands-on Example

We will walk you through a simple, step-by-step example of using ABAC to simplify the authorization scheme in a data collaboration project. For this guide, we have a running IBM Storage Ceph cluster integrated with a Red Hat Single Sign-On (RHSSO) deployment through OIDC; RHSSO is federated with a Red Hat Identity Management server that provides the enterprise LDAP users. All the steps to set up the environment and follow this example are available in Chapter 7 of the IBM Storage Ceph Redbook.

In this example, a data pipeline ingests data from edge sites. The data goes through an ETL process at a central site, where it is anonymized, aggregated, and converted to Parquet format. The ETL process tags each object as it is ingested into the IBM Storage Ceph S3 buckets.

The data is stored in two buckets:

- The `anonymized` bucket holds anonymized data: all customer/sensitive data is removed from the Parquet files. Objects in this bucket get the “Green” security clearance tag.

- The `confidential` bucket contains sensitive customer data. Only specific departments can access particular files, and the ETL process sets an “Orange” or “Red” security clearance tag, depending on how confidential each file is.
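The following Python sketch shows one way the ETL tagging step could work. It maps a file to a Secclearance value and builds an aws s3api put-object command for it. The put-object --tagging option expects tags encoded as URL query parameters (for example, Secclearance=Green). The classification rule clearance_for and the helper upload_command are hypothetical. A real pipeline would derive confidentiality from the data itself.

```python
import shlex
from urllib.parse import urlencode


def clearance_for(bucket: str, highly_sensitive: bool) -> str:
    """Hypothetical rule: anonymized data is Green; confidential data is Orange or Red."""
    if bucket == "anonymized":
        return "Green"
    return "Red" if highly_sensitive else "Orange"


def upload_command(bucket: str, key: str, path: str, clearance: str) -> str:
    """Build an upload command that tags the object in the same request that writes it."""
    tagging = urlencode({"Secclearance": clearance})  # e.g. "Secclearance=Green"
    return shlex.join([
        "aws", "s3api", "put-object",
        "--bucket", bucket, "--key", key, "--body", path,
        "--tagging", tagging,
    ])


print(upload_command("confidential", "data_low_confidential.parquet",
                     "data_low_confidential.parquet",
                     clearance_for("confidential", highly_sensitive=False)))
```

When the object is tagged in the same request that writes it, it never exists untagged. This matters because an untagged object matches none of the tag conditions used in the role policy.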

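From the data analyst point of view, we have three different groups in this example, each represented by one user:

- External data analysts (“daexternal”), with Green security clearance

- Sales data analysts (“dasales”), with Orange security clearance

- Business data analysts (“dabusiness”), with Red security clearance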

The first step is for the application integrator to create the buckets that the ETL process writes into once it finishes. We create the two buckets:

```
# aws --profile abacadmin s3 mb s3://anonymized
# aws --profile abacadmin s3 mb s3://confidential
```

Now, we create an IAM role with the following trust policy. Two things are essential to notice. First, the policy must include the "sts:TagSession" action so that the tags in the token can be passed to the session. Second, the condition checks that the tags in the incoming request match the tag attached to the role: aws:RequestTag is the incoming tag in the JWT (access token), and iam:ResourceTag is the tag attached to the role being assumed.

```
# cat role-abac.json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["sts:AssumeRoleWithWebIdentity", "sts:TagSession"],
      "Principal": {"Federated": ["arn:aws:iam:::oidc-provider/keycloak-sso.apps.ocp.stg.local/auth/realms/ceph"]},
      "Condition": {"StringEquals": {"aws:RequestTag/Department": "${iam:ResourceTag/Department}"}}
    }
  ]
}
```

We create the role:

```
# radosgw-admin role create --role-name analyticrole --assume-role-policy-doc="$(jq -rc . /root/role-abac.json)"
```
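`jq -rc .` prints the policy as compact, single-line JSON, which is then passed as one argument. Quoting the command substitution keeps the shell from word-splitting or glob-expanding it. The Python sketch below performs the same compaction with json.dumps. It also adds a sanity check on the condition key, because a misspelled tag key in a trust policy never matches and fails silently. The check and the helper names are illustrative additions, not a Ceph feature.

```python
import json

# Trust policy from role-abac.json, built as a Python dict.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": ["sts:AssumeRoleWithWebIdentity", "sts:TagSession"],
        "Principal": {"Federated": [
            "arn:aws:iam:::oidc-provider/keycloak-sso.apps.ocp.stg.local/auth/realms/ceph"]},
        "Condition": {"StringEquals": {
            "aws:RequestTag/Department": "${iam:ResourceTag/Department}"}},
    }],
}


def compact(doc: dict) -> str:
    """Single-line JSON without spaces, similar to `jq -rc .`."""
    return json.dumps(doc, separators=(",", ":"))


def condition_keys(doc: dict) -> set:
    """Collect every condition key used across the policy's statements."""
    keys = set()
    for statement in doc["Statement"]:
        for operator_block in statement.get("Condition", {}).values():
            keys.update(operator_block)
    return keys


# Catch typos such as "aws:RequestTag/Deparment" before uploading the policy.
if "aws:RequestTag/Department" not in condition_keys(trust_policy):
    raise ValueError("trust policy does not test the Department request tag")

print(compact(trust_policy))
```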

Now, we tag the role with Department=Analytics so that only users who belong to the Analytics department can assume it:

```
# aws --no-verify-ssl --profile abacadmin --endpoint https://172.16.28.158:8443 iam tag-role --role-name analyticrole --tags Key=Department,Value=Analytics
# aws --no-verify-ssl --profile abacadmin --endpoint https://172.16.28.158:8443 iam list-role-tags --role-name analyticrole
{
    "Tags": [
        {
            "Key": "Department",
            "Value": "Analytics"
        }
    ]
}
```
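With the trust policy and the role tag in place, the following Python sketch models the assume-role check. It compares the Department value from the token's principal tags with the Department tag on the role. It is a simplification: the request must carry exactly one value equal to the role's, and how Ceph treats a tag with several values is not modeled. The function name and the "Finance" department are hypothetical.

```python
from typing import Dict, List


def can_assume(role_tags: Dict[str, str], token_tags: Dict[str, List[str]],
               tag_key: str = "Department") -> bool:
    """Sketch of the trust-policy condition
    aws:RequestTag/<key> == ${iam:ResourceTag/<key>}.

    token_tags mirrors the "principal_tags" claim, where each tag maps to a list.
    Simplification: the request must carry exactly one value, equal to the role's.
    """
    role_value = role_tags.get(tag_key)
    return role_value is not None and token_tags.get(tag_key) == [role_value]


role_tags = {"Department": "Analytics"}  # as set with `iam tag-role`
print(can_assume(role_tags, {"Department": ["Analytics"], "Secclearance": ["Green"]}))  # True
print(can_assume(role_tags, {"Department": ["Finance"], "Secclearance": ["Red"]}))      # False
print(can_assume({}, {"Department": ["Analytics"]}))  # False: an untagged role never matches
```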

The next step is to attach a permission policy to the role. With the following condition, we allow a limited set of S3 actions on any bucket or object whose Secclearance tag matches the Secclearance principal tag in the incoming STS request. For example, a user with the Green tag who assumes the role can access only resources that also have the Green tag:

```
# cat principal_tag.json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket",
        "s3:Get*",
        "s3:PutObject",
        "s3:DeleteObject",
        "s3:AbortMultipartUpload",
        "s3:ListMultipartUploadParts"
      ],
      "Resource": [
        "arn:aws:s3:::anonymized",
        "arn:aws:s3:::anonymized/*",
        "arn:aws:s3:::confidential",
        "arn:aws:s3:::confidential/*"
      ],
      "Condition": {"StringEquals": {"s3:ResourceTag/Secclearance": "${aws:PrincipalTag/Secclearance}"}}
    }
  ]
}
```

Our policy also limits the scope of resources and actions that this IAM role can access, even when the tags match the condition. When working with ABAC, tags are the key to allowing or denying access to our S3 resources, so we must tightly control which users can put or delete tags on S3 resources; otherwise, we create a serious security hole.
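The following Python sketch illustrates this scoping. A request is allowed only when the action, the resource ARN, and the Secclearance condition all match the policy above. It is an assumption-laden simplification. It ignores explicit Deny statements, other policies, and how RGW maps each API call to an action name. It treats action names case-insensitively, as AWS does. It uses fnmatch, whose handling of `[` differs from IAM wildcards, which does not matter for these ARNs. The helper names are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Dict, List

# Action and Resource lists copied from principal_tag.json.
ACTIONS = ["s3:ListBucket", "s3:Get*", "s3:PutObject", "s3:DeleteObject",
           "s3:AbortMultipartUpload", "s3:ListMultipartUploadParts"]
RESOURCES = ["arn:aws:s3:::anonymized", "arn:aws:s3:::anonymized/*",
             "arn:aws:s3:::confidential", "arn:aws:s3:::confidential/*"]


def matches_any(value: str, patterns: List[str], ignore_case: bool = False) -> bool:
    """Wildcard match where '*' spans any characters, including '/'."""
    if ignore_case:
        value, patterns = value.lower(), [p.lower() for p in patterns]
    return any(fnmatchcase(value, pattern) for pattern in patterns)


def is_allowed(action: str, resource_arn: str,
               resource_tags: Dict[str, str], principal_tags: Dict[str, str]) -> bool:
    """All three checks must pass: action, resource, and the Secclearance condition."""
    if not matches_any(action, ACTIONS, ignore_case=True):
        return False
    if not matches_any(resource_arn, RESOURCES):
        return False
    clearance = principal_tags.get("Secclearance")
    return clearance is not None and resource_tags.get("Secclearance") == clearance


green_user = {"Secclearance": "Green"}
green_object = {"Secclearance": "Green"}
obj = "arn:aws:s3:::anonymized/data_anon.parquet"
print(is_allowed("s3:GetObject", obj, green_object, green_user))                    # True
print(is_allowed("s3:PutObjectTagging", obj, green_object, green_user))             # False
print(is_allowed("s3:GetObject", "arn:aws:s3:::other/x", green_object, green_user))  # False
```

s3:PutObjectTagging is not in the action list. This matches the point above: the analytics role itself cannot retag data to widen its own access.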

Using the radosgw-admin command, we can now create a new “access-with-tags” policy for the “analyticrole” role:

```
# radosgw-admin role policy put --role-name=analyticrole --policy-name=access-with-tags --policy-doc="$(jq -rc . /root/principal_tag.json)"
Permission policy attached successfully
```

Once the policy is attached, we can use the IAM API to get the details of the role policy we just created:

```
# aws --profile abacadmin iam get-role-policy --role-name analyticrole --policy-name access-with-tags
{
    "RoleName": "analyticrole",
    "PolicyName": "access-with-tags",
    "PolicyDocument": {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "s3:ListBucket",
                    "s3:Get*",
                    "s3:PutObject",
                    "s3:DeleteObject",
                    "s3:AbortMultipartUpload",
                    "s3:ListMultipartUploadParts"
                ],
                "Resource": [
                    "arn:aws:s3:::anonymized",
                    "arn:aws:s3:::anonymized/*",
                    "arn:aws:s3:::confidential",
                    "arn:aws:s3:::confidential/*"
                ],
                "Condition": {
                    "StringEquals": {
                        "s3:ResourceTag/Secclearance": "${aws:PrincipalTag/Secclearance}"
                    }
                }
            }
        ]
    }
}
```

IMPORTANT: For this role policy to grant access, our buckets and objects must be tagged.
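The role policy grants access only when the Secclearance tags match. An object that the ETL process forgot to tag is therefore unreachable through this role, and a periodic audit can catch such objects. The Python sketch below parses get-object-tagging output and lists objects that are missing the tag. The helper names and the late_upload.parquet object are hypothetical. Collecting the tag sets, for example by running get-object-tagging for each key, is left out.

```python
import json
from typing import Dict, List


def tags_from_cli(output: str) -> Dict[str, str]:
    """Convert `aws s3api get-object-tagging` JSON output into a {key: value} dict."""
    return {tag["Key"]: tag["Value"] for tag in json.loads(output).get("TagSet", [])}


def missing_tag(objects: Dict[str, Dict[str, str]], key: str = "Secclearance") -> List[str]:
    """Names of objects that lack the tag the role policy depends on."""
    return sorted(name for name, tags in objects.items() if key not in tags)


objects = {
    "data_anon.parquet": tags_from_cli('{"TagSet": [{"Key": "Secclearance", "Value": "Green"}]}'),
    "late_upload.parquet": tags_from_cli('{"TagSet": []}'),  # hypothetical untagged object
}
print(missing_tag(objects))  # ['late_upload.parquet']
```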

With these two steps, all the required authorization is in place on the Ceph side. Next, our analytics users need the “Department” and “Secclearance” attributes configured at the IdP level.

In our case, RHSSO is federated with LDAP as the IdP backend. When users are created in LDAP, we specify their LDAP attributes; once a user authenticates against RHSSO, these attributes are forwarded into the JWT SSO token.

We won’t cover user creation here, but we have created three users: “daexternal”, “dasales”, and “dabusiness”. Each user has custom LDAP attributes that get forwarded into the SSO token, and we can confirm they are part of the JWT when we authenticate as that user. All three users belong to the same department (Analytics); this attribute/tag allows them to assume the “analyticrole” role we created, and the Secclearance attribute gives them access to the S3 datasets depending on the tags set by the ETL jobs.

We use the check_token.sh script to inspect the contents of each user's JWT:

```
# bash ./check_token.sh daexternal passw0rd | jq .\"https://aws.amazon.com/tags\"
[
  {
    "principal_tags": {
      "Department": [
        "Analytics"
      ],
      "Secclearance": [
        "Green"
      ]
    }
  }
]

# bash ./check_token.sh dasales passw0rd | jq .\"https://aws.amazon.com/tags\"
[
  {
    "principal_tags": {
      "Department": [
        "Analytics"
      ],
      "Secclearance": [
        "Orange"
      ]
    }
  }
]

# bash ./check_token.sh dabusiness passw0rd | jq .\"https://aws.amazon.com/tags\"
[
  {
    "principal_tags": {
      "Department": [
        "Analytics"
      ],
      "Secclearance": [
        "Red"
      ]
    }
  }
]
```
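The contents of check_token.sh are not shown here. The following Python sketch covers only the inspection part: it decodes the JWT payload and returns the `https://aws.amazon.com/tags` claim. It does NOT verify the token signature, so use it only for inspection. The function names and the demo token are hypothetical. The demo builds an unsigned token locally instead of calling RHSSO.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment; JWTs strip the '=' padding, so restore it first."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def aws_tags_claim(jwt: str) -> list:
    """Return the https://aws.amazon.com/tags claim. The signature is NOT verified."""
    _header, payload, _signature = jwt.split(".")
    return json.loads(b64url_decode(payload)).get("https://aws.amazon.com/tags", [])


def b64url_encode(data: dict) -> str:
    """Helper for the demo below: build an unpadded base64url token segment."""
    return base64.urlsafe_b64encode(json.dumps(data).encode()).rstrip(b"=").decode()


claims = {"https://aws.amazon.com/tags": [{"principal_tags": {
    "Department": ["Analytics"], "Secclearance": ["Green"]}}]}
token = b64url_encode({"alg": "none"}) + "." + b64url_encode(claims) + ".signature"
print(aws_tags_claim(token))
```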

Before we test access to the different buckets/objects, let’s check, as the application integrator “abacadmin”, that the ETL jobs are creating the Parquet objects with tags.

First, check that the bucket has the correct tag:

```
# aws --profile abacadmin s3api get-bucket-tagging --bucket anonymized
{
    "TagSet": [
        {
            "Key": "Secclearance",
            "Value": "Green"
        }
    ]
}
```

Then let’s check one of the objects:

```
# aws --profile abacadmin s3api get-object-tagging --bucket anonymized --key data_anon.parquet
{
    "TagSet": [
        {
            "Key": "Secclearance",
            "Value": "Green"
        }
    ]
}
```

Both have the Green security clearance tag, which is correct for the anonymized bucket.

TIP: If you need to tag objects or buckets manually, you can use commands like these:

```
# aws --profile abacadmin s3api put-bucket-tagging --bucket bucket_name --tagging 'TagSet=[{Key=Department,Value=EXAMPLE}]'
# aws --profile abacadmin s3api put-object-tagging --bucket bucket_name --key object_name --tagging 'TagSet=[{Key=Department,Value=EXAMPLE}]'
```
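The AWS CLI also accepts JSON for the --tagging parameter, and JSON is harder to mistype than shorthand syntax. The following Python sketch generates such a command with proper shell quoting. The helper name is hypothetical. Keep in mind that put-bucket-tagging and put-object-tagging replace the resource's entire tag set, so include every tag the resource should keep.

```python
import json
import shlex
from typing import Dict


def put_object_tagging_cmd(bucket: str, key: str, tags: Dict[str, str],
                           profile: str = "abacadmin") -> str:
    """Build a put-object-tagging command with a JSON TagSet, safely shell-quoted."""
    tagging = json.dumps({"TagSet": [{"Key": k, "Value": v} for k, v in tags.items()]})
    return shlex.join(["aws", "--profile", profile, "s3api", "put-object-tagging",
                       "--bucket", bucket, "--key", key, "--tagging", tagging])


# The full tag set to keep on the object (the call replaces any existing tags).
print(put_object_tagging_cmd("confidential", "data_low_confidential.parquet",
                             {"Secclearance": "Orange"}))
```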

We now have everything in place for a first test with the “daexternal” user, who should have access to the anonymized bucket. To assume the role, we use a helper bash script, test-assume-role.sh, which follows these steps:

- Authenticates against the SSO

- Pulls a JWT token

- With the JWT token, it assumes an IAM role

- If successful, it exports the STS credentials provided by RGW into our shell environment
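The following Python sketch approximates the last two steps. It calls AssumeRoleWithWebIdentity through any client that exposes boto3's `assume_role_with_web_identity(RoleArn=..., RoleSessionName=..., WebIdentityToken=...)` method and turns the returned temporary credentials into shell `export` lines. The role ARN `arn:aws:iam:::role/analyticrole`, the session name, and the boto3 client options in the comment are assumptions for this setup. The stub client only lets the flow run offline.

```python
import shlex
from typing import Dict


def assume_role_env(sts_client, role_arn: str, jwt: str, session_name: str) -> Dict[str, str]:
    """Assume the role with the JWT and map the temporary credentials to AWS CLI variables."""
    response = sts_client.assume_role_with_web_identity(
        RoleArn=role_arn, RoleSessionName=session_name, WebIdentityToken=jwt)
    creds = response["Credentials"]
    return {
        "AWS_ACCESS_KEY_ID": creds["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": creds["SecretAccessKey"],
        "AWS_SESSION_TOKEN": creds["SessionToken"],
    }


def export_lines(env: Dict[str, str]) -> str:
    """Shell `export` statements for the calling shell to evaluate."""
    return "\n".join(f"export {name}={shlex.quote(value)}" for name, value in env.items())


class StubSTS:
    """Stand-in for an STS client, used only to demonstrate the flow offline."""
    def assume_role_with_web_identity(self, RoleArn, RoleSessionName, WebIdentityToken):
        return {"Credentials": {"AccessKeyId": "EXAMPLEKEY",
                                "SecretAccessKey": "EXAMPLESECRET",
                                "SessionToken": "EXAMPLETOKEN"}}


# With boto3 (not imported here), the client could be created along the lines of:
#   sts = boto3.client("sts", endpoint_url="https://172.16.28.158:8443", verify=False)
env = assume_role_env(StubSTS(), "arn:aws:iam:::role/analyticrole", "<jwt>", "daexternal")
print(export_lines(env))
```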

Let's run it with the “daexternal” user, assuming the “analyticrole” we created previously.

```
# . ./test-assume-role.sh daexternal passw0rd analyticrole
Getting the JWT from SSO...
Trying to Assume Role analyticrole using provided JWT token..
```

Now that the STS credentials are exported into our shell environment, let's try accessing the anonymized bucket.

```
# aws s3 ls s3://anonymized/data_anon.parquet
2024-03-07 20:08:07       7792 data_anon.parquet
```

A quick way to check that we have permission to query our Parquet object is to run an S3 Select query that counts the number of entries in the Parquet file:

```
# aws s3api select-object-content --bucket anonymized --key data_anon.parquet --expression-type 'SQL' --input-serialization '{"Parquet": {}, "CompressionType": "NONE"}' --output-serialization '{"CSV": {}}' --expression "select count(*) from s3object;" /dev/stdout
<Payload>
8001
```

The query succeeds, and the response to “select count(*) from s3object” shows that there are 8001 entries in the file.
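The same check can be run from an application. The following Python sketch sends the query through a client with boto3's `select_object_content` method and joins the "Records" events of the response stream into the CSV result. The function name and the stub client are hypothetical, and the stub only imitates the shape of boto3's event stream. With a real boto3 client, an authorization failure would surface as a botocore ClientError instead of a count.

```python
from typing import Any, Dict


def count_rows(s3_client, bucket: str, key: str) -> int:
    """Run the same S3 Select count query as the CLI example and parse the CSV result."""
    response = s3_client.select_object_content(
        Bucket=bucket, Key=key,
        ExpressionType="SQL",
        Expression="select count(*) from s3object;",
        InputSerialization={"Parquet": {}, "CompressionType": "NONE"},
        OutputSerialization={"CSV": {}},
    )
    # The payload is a stream of events; only "Records" events carry result bytes.
    data = b"".join(event["Records"]["Payload"]
                    for event in response["Payload"] if "Records" in event)
    return int(data.decode().strip())


class StubS3:
    """Offline stand-in returning an event stream shaped like boto3's."""
    def select_object_content(self, **kwargs) -> Dict[str, Any]:
        return {"Payload": [{"Records": {"Payload": b"8001\n"}}, {"End": {}}]}


print(count_rows(StubS3(), "anonymized", "data_anon.parquet"))  # 8001
```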

If we attempt to access the confidential bucket, it should fail.

```
# aws s3 ls s3://confidential
An error occurred (AccessDenied) when calling the ListObjectsV2 operation: Unknown
```

Let's move on to our confidential bucket. Again, let’s quickly check that our ETL process is tagging the objects as expected:

```
# aws --profile abacadmin s3api get-object-tagging --bucket confidential --key data_low_confidential.parquet
{
    "TagSet": [
        {
            "Key": "Secclearance",
            "Value": "Orange"
        }
    ]
}
# aws --profile abacadmin s3api get-object-tagging --bucket confidential --key data_very_confidential.parquet
{
    "TagSet": [
        {
            "Key": "Secclearance",
            "Value": "Red"
        }
    ]
}
```

Next, let's assume the analyticrole role as the data analytics sales user “dasales”, who has Orange security clearance. This user should be able to access objects with the Orange “Secclearance” tag; in this example, that is the “data_low_confidential.parquet” file.

```
# . ./test-assume-role.sh dasales passw0rd analyticrole
Getting the JWT from SSO...
Trying to Assume Role analyticrole using provided JWT token..
Export AWS ENV variables to use the AWS CLI with the STS creds..
# aws s3api select-object-content --bucket confidential --key data_low_confidential.parquet --expression-type 'SQL' --input-serialization '{"Parquet": {}, "CompressionType": "NONE"}' --output-serialization '{"CSV": {}}' --expression "select count(*) from s3object" /dev/stdout
<Payload>
3001
```

However, the user should not be able to access data_very_confidential.parquet, because this object requires Red security clearance:

```
# aws s3 cp s3://confidential/data_very_confidential.parquet /tmp/ok2
fatal error: An error occurred (403) when calling the HeadObject operation: Forbidden
# aws s3api select-object-content --bucket confidential --key data_very_confidential.parquet --expression-type 'SQL' --input-serialization '{"Parquet": {}, "CompressionType": "NONE"}' --output-serialization '{"CSV": {}}' --expression "select count(*) from s3object" /dev/stdout
An error occurred (AccessDenied) when calling the SelectObjectContent operation: Unknown
```

Everything is working as expected. Let's check with our last user, “dabusiness”, who has Red security clearance and should be able to access the Red-tagged data_very_confidential.parquet object.

```
# . ./test-assume-role.sh dabusiness passw0rd analyticrole
Getting the JWT from SSO...
Trying to Assume Role analyticrole using the provided JWT token..
Export AWS ENV variables to use the AWS CLI with the STS creds..
# aws s3api select-object-content --bucket confidential --key data_very_confidential.parquet --expression-type 'SQL' --input-serialization '{"Parquet": {}, "CompressionType": "NONE"}' --output-serialization '{"CSV": {}}' --expression "select count(*) from s3object" /dev/stdout
<Payload>
115
```
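The permission policy uses StringEquals, so a principal's Secclearance value must exactly equal the object's tag. The policy does not encode any ordering between Green, Orange, and Red. The short Python sketch below computes what the policy implies for the three users and the three objects tagged in this example. The function name is hypothetical. Letting a Red user also read Orange or Green data would require additional policy logic beyond this example.

```python
from typing import Dict, List

users = {"daexternal": "Green", "dasales": "Orange", "dabusiness": "Red"}
objects = {
    "anonymized/data_anon.parquet": "Green",
    "confidential/data_low_confidential.parquet": "Orange",
    "confidential/data_very_confidential.parquet": "Red",
}


def readable_objects(clearance: str, object_tags: Dict[str, str]) -> List[str]:
    """Objects whose Secclearance tag exactly equals the user's clearance (StringEquals)."""
    return sorted(name for name, tag in object_tags.items() if tag == clearance)


for user, clearance in users.items():
    print(f"{user:<11} {clearance:<7} {readable_objects(clearance, objects)}")
```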

That's fantastic! Our tests have confirmed that our user attributes and policies are working correctly, and our datasets are now secured and shared among teams. This simple example highlights the enormous benefits of working with IAM roles and ABAC. With a single IAM role, we control both who can assume the role and which S3 datasets they can access. With this role policy in place, onboarding new users only requires making sure they have the correct LDAP attributes for their department and security clearance. These examples are just the tip of the iceberg; there are many more ways to define ABAC policies that share data and configure granular (even prefix-level) access to our datasets.

Summary and Next up

Through this simple hands-on example, we have demonstrated how IBM Storage Ceph Object Storage, through its implementation of the IAM and STS features, lets us leverage the benefits of an ABAC authorization model.

Here's a quick recap of the advantages of an ABAC authorization model: it provides granular access control and flexibility. Although ABAC can have a steep learning curve, it delivers a highly scalable authorization model suited to complex enterprise environments.

IBM Storage Ceph resources

Find out more about IBM Storage Ceph.
