IBM Storage Ceph Object Storage Multisite Replication Series. Part Six

In the previous episode of the series, we discussed the Multisite Sync Policy and shared some hands-on examples of configuring granular bi-directional bucket replication. In today's blog, part six, we will continue configuring different Multisite Sync Policies, such as unidirectional replication and replication from one source to many destination buckets.

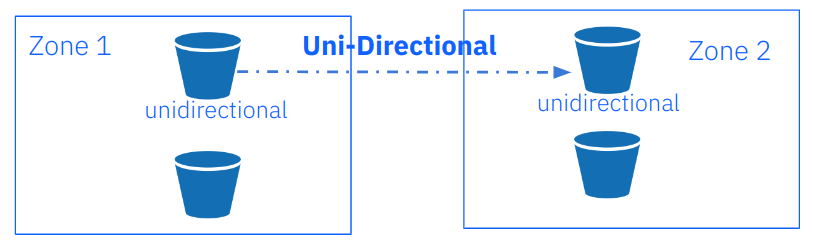

Unidirectional bucket Sync

In the previous article, we showed a bucket sync policy with a bi-directional configuration; now let’s look at how to enable unidirectional sync between two buckets. To give a bit of context, our zonegroup sync policy is currently set to “allowed”, and a bi-directional flow is configured at the zonegroup level. Because the zonegroup sync policy lets us configure replication on a per-bucket basis, we can start with our unidirectional replication configuration.

We create the `unidirectional` bucket, then create a sync group with the id `unidirectiona-1` and set its status to enabled. As you already know, when the status of the sync group policy is enabled, replication starts as soon as a pipe has been applied to the bucket.

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone1.dan.ceph.blue:443 s3 mb s3://unidirectional

make_bucket: unidirectional

[root@ceph-node-00 ~]# radosgw-admin sync group create --bucket=unidirectional --group-id=unidirectiona-1 --status=enabled

Once the sync group is in place, we need to create a pipe for our bucket. In this example, we specify the source and destination zones: the source is zone1 and the destination is zone2. This creates a unidirectional replication pipe for bucket `unidirectional`, with data replicated in one direction only: zone1 -> zone2.

[root@ceph-node-00 ~]# radosgw-admin sync group pipe create --bucket=unidirectional --group-id=unidirectiona-1 --pipe-id=test-pipe1 --source-zones='zone1' --dest-zones='zone2'

With `sync info`, we can check the flow of our bucket replication. The sources field is empty because we are running the command from a node in zone1, and zone1 is not receiving data from any external source. With unidirectional replication, zone1 only sends data to a destination: the output shows zone1 as the source and zone2 as the destination for the `unidirectional` bucket.

[root@ceph-node-00 ~]# radosgw-admin sync info --bucket unidirectional

{

"sources": [],

"dests": [

{

"id": "test-pipe1",

"source": {

"zone": "zone1",

"bucket": "unidirectional:89c43fae-cd94-4f93-b21c-76cd1a64788d.34955.1"

},

"dest": {

"zone": "zone2",

"bucket": "unidirectional:89c43fae-cd94-4f93-b21c-76cd1a64788d.34955.1"

….

}

If we run the same command from zone2, we see the same information, but this time in the sources field, because zone2 is receiving data from zone1. For the `unidirectional` bucket, zone2 is not sending out any replication data, which is why the dests field is empty in the output of the `sync info` command.

[root@ceph-node-04 ~]# radosgw-admin sync info --bucket unidirectional

{

"sources": [

{

"id": "test-pipe1",

"source": {

"zone": "zone1",

"bucket": "unidirectional:66df8c0a-c67d-4bd7-9975-bc02a549f13e.36430.1"

},

"dest": {

"zone": "zone2",

"bucket": "unidirectional:66df8c0a-c67d-4bd7-9975-bc02a549f13e.36430.1"

},

….

"dests": [],

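The empty-versus-populated `sources` and `dests` fields described above can be turned into a quick check. The following is a minimal sketch, not part of the original walkthrough: `sync_role` is a hypothetical helper name, and it assumes the JSON is pretty-printed one key per line, as in the outputs shown here, so that the first `"sources"` and `"dests"` keys it meets are the top-level ones.

```shell
# sync_role: read `radosgw-admin sync info` JSON on stdin and print the
# role of the bucket in the local zone: sender, receiver, both, or none.
# Assumption: one key per line, so the first "sources"/"dests" keys seen
# are the top-level ones (the nested "hints" block comes later).
sync_role() {
  awk '
    /"sources":/ && !seen_s { seen_s = 1; src_empty = ($0 ~ /\[\]/) }
    /"dests":/   && !seen_d { seen_d = 1; dst_empty = ($0 ~ /\[\]/) }
    END {
      if (!seen_s || !seen_d)          print "unknown"
      else if (src_empty && dst_empty) print "none"
      else if (src_empty)              print "sender"
      else if (dst_empty)              print "receiver"
      else                             print "both"
    }'
}
```

Piping `radosgw-admin sync info --bucket unidirectional` into `sync_role` should, under these assumptions, print `sender` on a zone1 node and `receiver` on a zone2 node for the configuration above.
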
Once our configuration is ready, let’s check that everything is working as expected. Let’s PUT three files to zone1:

[root@ceph-node-00 ~]# for i in {1..3}; do aws --endpoint https://object.s3.zone1.dan.ceph.blue:443 s3 cp /etc/hosts s3://unidirectional/fil${i}; done

upload: ../etc/hosts to s3://unidirectional/fil1

upload: ../etc/hosts to s3://unidirectional/fil2

upload: ../etc/hosts to s3://unidirectional/fil3

We can check that they have been synced to zone2 straight away:

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone2.dan.ceph.blue:443 s3 ls s3://unidirectional/

2024-02-02 17:56:09 233 fil1

2024-02-02 17:56:10 233 fil2

2024-02-02 17:56:11 233 fil3

Now let’s check what happens when we PUT a file in zone2; we shouldn’t see the file get replicated to zone1, as our replication configuration for the bucket is unidirectional.

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone2.dan.ceph.blue:443 s3 cp /etc/hosts s3://unidirectional/fil4

upload: ../etc/hosts to s3://unidirectional/fil4

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone2.dan.ceph.blue:443 s3 ls s3://unidirectional/

2024-02-02 17:56:09 233 fil1

2024-02-02 17:56:10 233 fil2

2024-02-02 17:56:11 233 fil3

2024-02-02 17:57:49 233 fil4

We check zone1 after a while and see that the file is not there, meaning it was not replicated from zone2, as expected.

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone1.dan.ceph.blue:443 s3 ls s3://unidirectional

2024-02-02 17:56:09 233 fil1

2024-02-02 17:56:10 233 fil2

2024-02-02 17:56:11 233 fil3
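
The manual comparison of the two listings can be sketched as a small helper. This is an illustration only: `keys_only_in_second` is a hypothetical function name, it works on saved `aws s3 ls` output rather than calling the endpoints itself, and it assumes object keys contain no whitespace, so that the key is always the fourth field.

```shell
# keys_only_in_second LISTING_A LISTING_B
# Each file holds saved `aws s3 ls s3://bucket/` output
# (date, time, size, key). Prints keys present in LISTING_B but
# missing from LISTING_A.
# Assumption: keys contain no whitespace, so the key is field 4.
keys_only_in_second() {
  awk 'FILENAME == ARGV[1] { seen[$4] = 1; next }
       !($4 in seen)       { print $4 }' "$1" "$2"
}
```

With the zone1 listing saved as the first file and the zone2 listing as the second, the helper should print `fil4`; with the arguments swapped it should print nothing, which is what a one-way zone1 -> zone2 flow looks like.
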

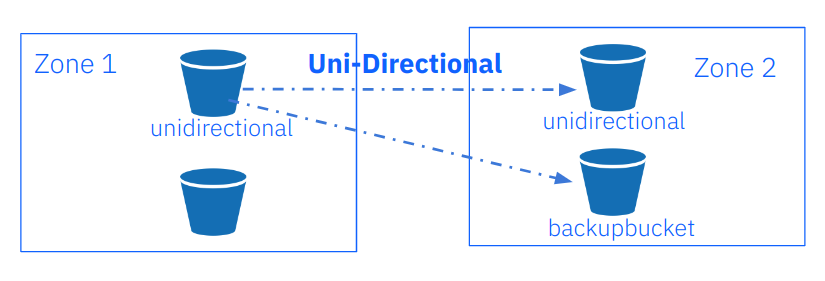

Unidirectional bucket Sync + One source to many destinations

In this example, we are going to modify the previous unidirectional sync policy by adding a new replication target, a bucket called `backupbucket`. Once the sync policy is in place, every object uploaded to bucket `unidirectional` in zone1 will be replicated to buckets `unidirectional` and `backupbucket` in zone2.

To get started, let's create the bucket `backupbucket`:

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone2.dan.ceph.blue:443 s3 mb s3://backupbucket

make_bucket: backupbucket

We will add a new pipe, `test-pipe2`, to the existing sync group policy `unidirectiona-1` that we created in our previous `unidirectional` example; this pipe targets `backupbucket`.

Again, we specify the source and destination zones, so our sync will be unidirectional. The main difference is that now we specify a destination bucket called `backupbucket` with the `--dest-bucket` parameter:

[root@ceph-node-00 ~]# radosgw-admin sync group pipe create --bucket=unidirectional --group-id=unidirectiona-1 --pipe-id=test-pipe2 --source-zones='zone1' --dest-zones='zone2' --dest-bucket=backupbucket
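
If more destination buckets are added later, the same command is repeated once per target. The sketch below is a dry run that only prints the commands and executes nothing; `print_fanout_pipes` and the `pipe-<dest bucket>` naming scheme are hypothetical choices for illustration, and the flags are the ones used in the command above.

```shell
# print_fanout_pipes BUCKET GROUP_ID SRC_ZONE DEST_ZONE DEST_BUCKET...
# Dry run: prints one `sync group pipe create` command per destination
# bucket and runs nothing. The pipe-id scheme (pipe-<dest bucket>) is a
# hypothetical naming choice, not something the article prescribes.
print_fanout_pipes() {
  local bucket=$1 group=$2 src=$3 dst=$4
  shift 4
  for dest_bucket in "$@"; do
    printf 'radosgw-admin sync group pipe create --bucket=%s --group-id=%s --pipe-id=pipe-%s --source-zones=%s --dest-zones=%s --dest-bucket=%s\n' \
      "$bucket" "$group" "$dest_bucket" "$src" "$dst" "$dest_bucket"
  done
}
```

For example, `print_fanout_pipes unidirectional unidirectiona-1 zone1 zone2 backupbucket` prints a single command equivalent to the one above, apart from the pipe id; review the output before running any of it.
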

Again, let's check the `sync info` output, which gives us a representation of the replication flow we have configured. The sources field is empty because zone1 is not receiving data from any other source. In dests, we now have two different pipes: the first, `test-pipe1`, is the one we created in our previous example; the second, `test-pipe2`, has `backupbucket` set as the replication destination in zone2.

[root@ceph-node-00 ~]# radosgw-admin sync info --bucket unidirectional

{

"sources": [],

"dests": [

{

"id": "test-pipe1",

"source": {

"zone": "zone1",

"bucket": "unidirectional:66df8c0a-c67d-4bd7-9975-bc02a549f13e.36430.1"

},

"dest": {

"zone": "zone2",

"bucket": "unidirectional:66df8c0a-c67d-4bd7-9975-bc02a549f13e.36430.1"

},

"params": {

"source": {

"filter": {

"tags": []

}

},

"dest": {},

"priority": 0,

"mode": "system",

"user": "user1"

}

},

{

"id": "test-pipe2",

"source": {

"zone": "zone1",

"bucket": "unidirectional:66df8c0a-c67d-4bd7-9975-bc02a549f13e.36430.1"

},

"dest": {

"zone": "zone2",

"bucket": "backupbucket"

},

"params": {

"source": {

"filter": {

"tags": []

}

},

"dest": {},

"priority": 0,

"mode": "system",

"user": "user1"

}

}

],

"hints": {

"sources": [],

"dests": [

"backupbucket"

]

},

Let’s check it out. From our previous example, we had zone1 with three files:

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone1.dan.ceph.blue:443 s3 ls s3://unidirectional/

2024-02-02 17:56:09 233 fil1

2024-02-02 17:56:10 233 fil2

2024-02-02 17:56:11 233 fil3

zone2 has four files; fil4 will not get replicated to zone1 because replication is unidirectional.

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone2.dan.ceph.blue:443 s3 ls s3://unidirectional/

2024-02-02 17:56:09 233 fil1

2024-02-02 17:56:10 233 fil2

2024-02-02 17:56:11 233 fil3

2024-02-02 17:57:49 233 fil4

Let's add three more files to zone1; we should see all three replicated to both the `unidirectional` bucket and `backupbucket` in zone2:

[root@ceph-node-00 ~]# for i in {5..7}; do aws --endpoint https://object.s3.zone1.dan.ceph.blue:443 s3 cp /etc/hosts s3://unidirectional/fil${i}; done

upload: ../etc/hosts to s3://unidirectional/fil5

upload: ../etc/hosts to s3://unidirectional/fil6

upload: ../etc/hosts to s3://unidirectional/fil7

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone1.dan.ceph.blue:443 s3 ls s3://unidirectional

2024-02-02 17:56:09 233 fil1

2024-02-02 17:56:10 233 fil2

2024-02-02 17:56:11 233 fil3

2024-02-02 18:03:51 233 fil5

2024-02-02 18:04:37 233 fil6

2024-02-02 18:09:08 233 fil7

[root@ceph-node-00 ~]# aws --endpoint http://object.s3.zone2.dan.ceph.blue:80 s3 ls s3://unidirectional

2024-02-02 17:56:09 233 fil1

2024-02-02 17:56:10 233 fil2

2024-02-02 17:56:11 233 fil3

2024-02-02 17:57:49 233 fil4

2024-02-02 18:03:51 233 fil5

2024-02-02 18:04:37 233 fil6

2024-02-02 18:09:08 233 fil7

[root@ceph-node-00 ~]# aws --endpoint http://object.s3.zone2.dan.ceph.blue:80 s3 ls s3://backupbucket

2024-02-02 17:56:09 233 fil1

2024-02-02 17:56:10 233 fil2

2024-02-02 17:56:11 233 fil3

2024-02-02 18:03:51 233 fil5

2024-02-02 18:04:37 233 fil6

2024-02-02 18:09:08 233 fil7

Excellent, everything is working as expected. We have all the files replicated to all buckets except fil4. This is expected as the file was uploaded to zone2, and our replication is unidirectional, so there is no sync from zone2 to zone1.

What will `sync info` tell us if we query `backupbucket`? This bucket is only referenced in another bucket's policy; `backupbucket` doesn't have a sync policy of its own:

[root@ceph-node-00 ~]# ssh ceph-node-04 radosgw-admin sync info --bucket backupbucket

{

"sources": [],

"dests": [],

"hints": {

"sources": [

"unidirectional:66df8c0a-c67d-4bd7-9975-bc02a549f13e.36430.1"

],

"dests": []

},

"resolved-hints-1": {

"sources": [

{

"id": "test-pipe2",

"source": {

"zone": "zone1",

"bucket": "unidirectional:66df8c0a-c67d-4bd7-9975-bc02a549f13e.36430.1"

},

"dest": {

"zone": "zone2",

"bucket": "backupbucket"

},

For this situation, we use hints: even though `backupbucket` has no sync policy of its own, the `unidirectional` bucket's sync policy references it, and that reference shows up as a hint.

Note that the output contains resolved hints, which means that bucket `backupbucket` found out about bucket `unidirectional` syncing to it indirectly, and not from its own policy (the policy for `backupbucket` itself is empty).

Bucket Sync Policy Considerations

One important thing to note, which can be a bit confusing, is that metadata is always synced to the other zones independently of the bucket sync policy, so every user and bucket, even if not configured for replication, will show up in all the zones that belong to the zonegroup.

As an example, I create a new bucket called `newbucket`:

[root@ceph-node-00 ~]# aws --endpoint http://object.s3.zone2.dan.ceph.blue:80 s3 mb s3://newbucket

make_bucket: newbucket

I confirm this bucket doesn’t have any replication configured:

[root@ceph-node-00 ~]# radosgw-admin bucket sync checkpoint --bucket newbucket

Sync is disabled for bucket newbucket

But all the metadata syncs to the secondary zone, so the bucket will appear in zone2; the data inside the bucket, however, won’t get replicated.

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone2.dan.ceph.blue:443 s3 ls | grep newbucket

2024-02-02 02:22:31 newbucket

Another thing to note is that objects uploaded before a sync policy was configured for a bucket won’t get synced to the other zone until we upload an object after enabling the bucket sync. In this example, the bucket syncs once we upload a new object to it:

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone1.dan.ceph.blue:443 s3 ls s3://objectest1/

2024-02-02 04:03:47 233 file1

2024-02-02 04:03:50 233 file2

2024-02-02 04:03:53 233 file3

2024-02-02 04:27:19 233 file4

[root@ceph-node-00 ~]# ssh ceph-node-04 radosgw-admin bucket sync checkpoint --bucket objectest1

2024-02-02T04:17:15.596-0500 7fc00c51f800 1 waiting to reach incremental sync..

2024-02-02T04:17:17.599-0500 7fc00c51f800 1 waiting to reach incremental sync..

2024-02-02T04:17:19.601-0500 7fc00c51f800 1 waiting to reach incremental sync..

2024-02-02T04:17:21.603-0500 7fc00c51f800 1 waiting to reach incremental sync..

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone1.dan.ceph.blue:443 s3 cp /etc/hosts s3://objectest1/file4

upload: ../etc/hosts to s3://objectest1/file4

[root@ceph-node-00 ~]# radosgw-admin bucket sync checkpoint --bucket objectest1

2024-02-02T04:27:29.975-0500 7fce4cf11800 1 bucket sync caught up with source:

local status: [00000000001.569.6, , 00000000001.47.6, , , , 00000000001.919.6, 00000000001.508.6, , , ]

remote markers: [00000000001.569.6, , 00000000001.47.6, , , , 00000000001.919.6, 00000000001.508.6, , , ]

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone2.dan.ceph.blue:443 s3 ls s3://objectest1

2024-02-02 04:03:47 233 file1

2024-02-02 04:03:50 233 file2

2024-02-02 04:03:53 233 file3

2024-02-02 04:27:19 233 file4
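
The `bucket sync checkpoint` output above can also be checked mechanically. This is a rough sketch under a stated assumption: that identical `local status` and `remote markers` lists are what "caught up" looks like, which is how the example output reads. `markers_match` is a hypothetical helper that inspects saved or piped checkpoint output and does not call `radosgw-admin` itself.

```shell
# markers_match: read `radosgw-admin bucket sync checkpoint` output on
# stdin. Exit status 0 if both the "local status" and "remote markers"
# lines are present and list identical markers, non-zero otherwise.
# Assumption: equal marker lists mean the bucket has caught up, as in
# the example output in this article.
markers_match() {
  awk '
    /local status:/   { sub(/.*local status: */, "");   local_m = $0;  have_l = 1 }
    /remote markers:/ { sub(/.*remote markers: */, ""); remote_m = $0; have_r = 1 }
    END { exit !(have_l && have_r && local_m == remote_m) }'
}
```

Output that only contains the `waiting to reach incremental sync..` lines has no marker lists, so the helper reports a non-zero status in that case.
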

Objects created, modified, or deleted when the bucket sync policy was in an allowed or forbidden state will not automatically sync when the policy is enabled again.

We need to run the `bucket sync run` command to sync these objects and get the bucket in sync across both zones. For example, I disable sync for bucket `objectest1` and PUT a couple of objects in zone1; they don’t get replicated to zone2 even after I enable replication again.

[root@ceph-node-00 ~]# radosgw-admin sync group create --bucket=objectest1 --group-id=objectest1-1 --status=forbidden

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone1.dan.ceph.blue:443 s3 cp /etc/hosts s3://objectest1/file5

upload: ../etc/hosts to s3://objectest1/file5

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone1.dan.ceph.blue:443 s3 cp /etc/hosts s3://objectest1/file6

upload: ../etc/hosts to s3://objectest1/file6

[root@ceph-node-00 ~]# radosgw-admin sync group create --bucket=objectest1 --group-id=objectest1-1 --status=enabled

[root@ceph-node-00 ~]# aws --endpoint http://object.s3.zone2.dan.ceph.blue:80 s3 ls s3://objectest1

2024-02-02 04:03:47 233 file1

2024-02-02 04:03:50 233 file2

2024-02-02 04:03:53 233 file3

2024-02-02 04:27:19 233 file4

[root@ceph-node-00 ~]# aws --endpoint https://object.s3.zone1.dan.ceph.blue:443 s3 ls s3://objectest1/

2024-02-02 04:03:47 233 file1

2024-02-02 04:03:50 233 file2

2024-02-02 04:03:53 233 file3

2024-02-02 04:27:19 233 file4

2024-02-02 04:44:45 233 file5

2024-02-02 04:45:38 233 file6

To get the buckets back in sync, I use the `radosgw-admin bucket sync run` command from the destination zone.

[root@ceph-node-00 ~]# ssh ceph-node-04 radosgw-admin bucket sync run --source-zone zone1 --bucket objectest1

[root@ceph-node-00 ~]# aws --endpoint http://object.s3.zone2.dan.ceph.blue:80 s3 ls s3://objectest1

2024-02-02 04:03:47 233 file1

2024-02-02 04:03:50 233 file2

2024-02-02 04:03:53 233 file3

2024-02-02 04:27:19 233 file4

2024-02-02 04:44:45 233 file5

2024-02-02 04:45:38 233 file6

Summary & up next

We continued discussing the Multisite Sync Policy in Part Six of this series. We shared some hands-on examples of configuring multisite sync policies, like unidirectional replication with one source to many destination buckets. In the final blog of the IBM Storage Ceph Multisite series, we will be introducing the Archive Zone feature, which provides us with an immutable copy of all versions of all the objects that we have in our production zones.

