SMC-R is a new communication protocol solution that is based on sockets over RDMA and on the Internet Engineering Task Force (IETF) Request for Comments (RFC) 7609 publication. SMC-R enables TCP socket applications to transparently use RDMA, which enables direct, high-speed, low-latency communications. It is confined to applications using Transmission Control Protocol (TCP) sockets over IPv4 or IPv6. It also provides transparent high availability and load balancing if an additional RDMA-capable adapter is detected in the same path.

SMC-R based communication provides significant performance gains and CPU resource reduction for multitier server workloads with request/response patterns or for data center server communication with streaming data patterns. AIX SMC-R is fully interoperable with the IBM z/OS® and the Linux on Z SMC-R implementations.

SMC-R communication in AIX adheres to the following characteristics:

- Beginning with IBM® AIX® 7.2 with Technology Level 2, the AIX operating system supports Shared Memory Communications over Remote Direct Memory Access (SMC-R).

- Virtual LAN (VLAN) support for SMC-R is available with IBM® AIX® 7.2 Technology Level 3 or later releases.

- AIX SMC-R supports SR-IOV (Single Root I/O Virtualization) RDMA over Converged Ethernet (RoCE) adapter logical ports. Customers with IBM POWER9 servers and ConnectX-4/ConnectX-5 100 Gb adapters should be able to take advantage of this.

- SMC-R protocol version 2 interoperability support: SMC-R protocol version 2 supports multiple IP subnets. AIX supports SMC-R protocol version 1, which interoperates with SMC-R version 2 peers that support both versions of the protocol. Such a version 2 peer can be any other host to which a TCP/SMC-R connection can be established.

- Hosts participating in SMC-R communication must provide RDMA-capable Network Interface Cards (RNICs). All RoCE adapters that are supported on IBM Power Systems servers can facilitate SMC-R protocol-based communication. RoCE adapters must be dedicated to the logical partition (LPAR) communication endpoints.

- The IP addresses of the communication endpoints must be in the same IP subnet (or prefix, if you are using IPv6).

- SMC-R can be enabled only for TCP-based workloads.

- Loopback transport is not supported. For connections that are initiated from and to the localhost, the AIX fast path loopback option is better suited to meet demanding performance requirements.

- EtherChannel and IEEE 802.3ad Link Aggregation are not supported, because the SMC-R protocol inherently provides failover and load-balancing capabilities if the required adapter resources are available.

- Kernel space TCP sockets are not supported.

- RDMA (Remote Direct Memory Access) Overview

RDMA enables a host to register memory and allow a remote host to read from and write to that memory directly. CPU cycles are involved only for the notifications (interrupts) raised after the RDMA read or write operations are performed. The AIX operating system supports RDMA over Converged Ethernet (RoCE) adapters. Open Fabrics Enterprise Distribution (OFED) verbs programming is used to exploit RDMA capabilities in the AIX operating system. SMC-R enables transparent exploitation of the RDMA capability of RoCE adapters for TCP socket-based workloads, eliminating the need to rewrite applications using OFED verbs.

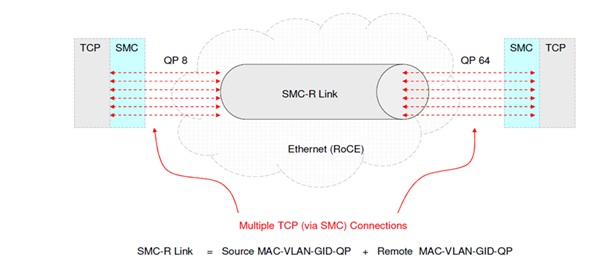

The following figure depicts the SMC-R communication flow between two hosts.

The SMC-R protocol solution is a hybrid solution that can be characterized as follows:

- Uses a TCP connection (three-way handshake) to establish the SMC-R connection.

- Each TCP endpoint exchanges a TCP option that indicates whether the endpoint supports the SMC-R protocol. The SMC-R rendezvous information about the reliable connected queue pair (RC QP) attributes is then exchanged within the TCP data stream, similar to an SSL handshake.

- Application data is exchanged using RDMA after the RC QP connection is established over the RoCE adapter.

- The TCP connection remains active along with the SMC-R (RC QP) connection. No data is exchanged over the TCP connection after the SMC-R rendezvous information exchange.

The hybrid model of using a TCP/IP connection along with SMC-R helps preserve many critical existing operational and network management features of TCP/IP.

SMC-R introduces a rendezvous protocol that is used to dynamically discover the RDMA capabilities of TCP connection partners and exchange credentials necessary to exploit that capability if present.

Negotiation of an SMC-R connection:

- Exchange credentials necessary to exploit the RDMA capability if present.

- A new TCP option, carried in the TCP three-way handshake, indicates SMC-R capability.

- Connection Layer Control (CLC) messages are used once the TCP connection is established; they flow over TCP.

- CLC messages are used to negotiate SMC-R credentials such as the QP, the remote address (of the Remote Memory Buffers), keys, and so on.

- If a failure or decline occurs at any time during this negotiation, the TCP connection falls back to using the IP fabric.

Note: Once an SMC-R connection is established successfully, data flows only over SMC-R, and the connection cannot fall back to TCP even if the SMC-R connection fails.
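The negotiation rules above reduce to a small decision function. The following is a minimal Python sketch of that decision, not the AIX implementation: the 'Peer' fields 'smcr_option' and 'accepts_clc' are hypothetical stand-ins for "advertised the SMC-R TCP option" and "completed the CLC exchange without a decline", and the CLC message formats defined in RFC 7609 are not modeled.

```python
from dataclasses import dataclass
from enum import Enum


class Transport(Enum):
    TCP = "tcp"     # negotiation failed or was declined: stay on the IP fabric
    SMCR = "smc-r"  # application data flows over RDMA from now on


@dataclass
class Peer:
    smcr_option: bool  # hypothetical: sets the SMC-R TCP option in the handshake
    accepts_clc: bool  # hypothetical: completes the CLC exchange without declining


def negotiate(client: Peer, server: Peer) -> Transport:
    """Simplified model of the SMC-R rendezvous decision (not the AIX code)."""
    # 1. Both endpoints must advertise SMC-R in the TCP three-way handshake.
    if not (client.smcr_option and server.smcr_option):
        return Transport.TCP
    # 2. CLC messages over the established TCP connection exchange the RDMA
    #    credentials (QP, RMB address, keys); any failure or decline falls back.
    if not (client.accepts_clc and server.accepts_clc):
        return Transport.TCP
    # 3. Success: data now flows only over SMC-R, with no later fallback to TCP.
    return Transport.SMCR


print(negotiate(Peer(True, True), Peer(True, True)))   # Transport.SMCR
print(negotiate(Peer(True, True), Peer(False, True)))  # Transport.TCP
```

The fallback decision is made only during negotiation; once 'negotiate' returns SMC-R, the model has no path back to TCP, which mirrors the note above.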

An SMC-R link is a logical connection between two hosts or logical partitions that enables RDMA communication between that specific pair of hosts. Links represent Reliable Connected (RC) Queue Pairs (QPs). The first TCP connection between two peers establishes a link, and subsequent TCP connections reuse it.

- Links are created over RoCE adapters; each link is simply an RDMA RC (Reliable Connected) queue pair.

- A link is bound to a specific hardware path, meaning a specific RNIC on each peer.

- Multiple TCP connections between peers are multiplexed over a single SMC-R link.

- Links are associated with a single LAN (or VLAN) segment and are not routable.
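Link reuse and multiplexing can be illustrated with a toy lookup table. This Python sketch is only a model of the rules listed above: a link is keyed by the RNIC pair it is bound to, the first TCP connection creates it, and later connections are appended to it. The RNIC names and the 'linkN' identifiers are hypothetical.

```python
from collections import defaultdict


class LinkTable:
    """Toy model: one SMC-R link (an RC queue pair) per RNIC pair, reused by
    every later TCP connection between the same peers."""

    def __init__(self) -> None:
        self.links = {}                 # (local RNIC, peer RNIC) -> link id
        self.conns = defaultdict(list)  # link id -> TCP connections multiplexed on it

    def connect(self, local_rnic: str, peer_rnic: str, conn_id: int) -> str:
        key = (local_rnic, peer_rnic)   # a link is bound to one RNIC on each peer
        if key not in self.links:       # the first TCP connection establishes the link
            self.links[key] = f"link{len(self.links)}"
        link = self.links[key]
        self.conns[link].append(conn_id)  # later connections reuse (multiplex on) it
        return link


table = LinkTable()
print(table.connect("roce0", "peer-roce0", 1))  # link0 (created)
print(table.connect("roce0", "peer-roce0", 2))  # link0 (reused)
print(table.conns["link0"])                     # [1, 2]
```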

- Link Groups - High availability and Load balancing

A link group is a logical grouping of two SMC-R links between two peers. Link groups help achieve redundancy and load balancing for SMC-R connections. Links within a link group must have the same TCP server and client roles and must be on the same network (LAN). TCP connections using the SMC-R protocol can be assigned to either of the available links, and can be moved from one link to the other if one of the links fails.

- A link group is a set of SMC-R links logically bonded together to provide redundant connectivity.

- Typically, each link runs over a unique RoCE adapter between the same two SMC-R peers.

Benefits:

- Load balancing

- Increased bandwidth

- Seamless failover
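The load-balancing and failover behavior of a link group can be sketched as follows. This is an illustrative Python model under stated assumptions, not the AIX algorithm: the "least-loaded link" placement policy is an assumption (the text only says connections can be assigned to either link), and the limit of two links comes from the AIX considerations in this document.

```python
class LinkGroup:
    """Toy model of an SMC-R link group (at most two links, as in the AIX
    implementation described here)."""

    MAX_LINKS = 2

    def __init__(self, links: list[str]) -> None:
        if not 1 <= len(links) <= self.MAX_LINKS:
            raise ValueError("a link group holds one or two links")
        self.assignment = {name: [] for name in links}  # link -> TCP connection ids

    def add_connection(self, conn_id: int) -> str:
        # Load balancing (assumed policy): pick the link carrying the fewest connections.
        link = min(self.assignment, key=lambda name: len(self.assignment[name]))
        self.assignment[link].append(conn_id)
        return link

    def fail_link(self, failed: str) -> None:
        # Failover: move every connection on the failed link to a surviving link.
        survivors = [name for name in self.assignment if name != failed]
        if not survivors:
            raise RuntimeError("no surviving link; connections cannot fail over")
        moved = self.assignment.pop(failed)
        self.assignment[survivors[0]].extend(moved)


group = LinkGroup(["linkA", "linkB"])
print([group.add_connection(i) for i in range(4)])  # ['linkA', 'linkB', 'linkA', 'linkB']
group.fail_link("linkA")
print(group.assignment)                             # {'linkB': [1, 3, 0, 2]}
```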

- Remote Memory Buffers (RMBs)

RMBs are used for receiving RDMA data written by the peer. The sending peer performs an RDMA write operation to place the TCP application's data into the RMB, and the receiving peer copies this data into the TCP application's receive buffer. RMBs are partitioned into elements of equal size called RMB Elements (RMBEs). Every TCP connection gets its own RMBE for the remote peer to write into. The size of the RMBEs is based on the receive buffer size of the TCP socket.

On the sending side, the TCP application's payload is copied into and kept in staging buffers until it has been successfully written to the remote peer by RDMA.
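The RMB layout described above (one registered region, split into equal-size RMBEs, one per connection) can be modeled with a byte array. This Python sketch is a simplification: 'rdma_write' only stands in for the peer's RDMA write, and the cursor and flow-control bookkeeping that RFC 7609 defines for RMBEs is not modeled. The sizes used are hypothetical.

```python
class RemoteMemoryBuffer:
    """Toy RMB: one region split into equal-size RMBEs, one per TCP connection."""

    def __init__(self, rmbe_size: int, count: int) -> None:
        self.rmbe_size = rmbe_size               # in SMC-R, based on the socket receive buffer size
        self.buf = bytearray(rmbe_size * count)  # the memory the peer writes into
        self.free = list(range(count))           # indexes of unassigned RMBEs
        self.owner = {}                          # connection id -> RMBE index

    def assign(self, conn_id: int) -> int:
        """Give a connection its own RMBE; return the offset the peer writes to."""
        if not self.free:
            raise MemoryError("no free RMBE in this RMB")
        idx = self.free.pop(0)
        self.owner[conn_id] = idx
        return idx * self.rmbe_size

    def rdma_write(self, conn_id: int, data: bytes) -> None:
        # Stand-in for the sending peer's RDMA write into this connection's RMBE.
        if len(data) > self.rmbe_size:
            raise ValueError("payload exceeds RMBE size")
        start = self.owner[conn_id] * self.rmbe_size
        self.buf[start:start + len(data)] = data

    def receive(self, conn_id: int, n: int) -> bytes:
        # The receiver copies data out of its RMBE into the application's buffer.
        start = self.owner[conn_id] * self.rmbe_size
        return bytes(self.buf[start:start + n])


rmb = RemoteMemoryBuffer(rmbe_size=64, count=4)
print(rmb.assign(10), rmb.assign(11))  # 0 64
rmb.rdma_write(11, b"hello")
print(rmb.receive(11, 5))              # b'hello'
```

Because every connection owns a disjoint RMBE, a write for one connection never overwrites another connection's data.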

- AIX SMC-R considerations

- The AIX implementation of SMC-R is interoperable with the z/OS implementation.

- Dedicated RoCE adapters must be assigned to the LPAR for SMC-R support. The loopback transport is not supported.

- SMC-R can be enabled only for TCP-based workloads.

- EtherChannel is not supported, because the SMC-R protocol inherently provides failover and load-balancing capabilities, depending on the availability of redundant hardware.

- The current implementation supports up to two links per link group.

- The current implementation does not support kernel space TCP sockets.

Note: The SMC-R feature is available from AIX 7.2 TL 02, but VLAN support for SMC-R is available only from AIX 7.2 TL 03.

SMC-R ships as a new fileset, ofed.smcr, which depends on the ofed.core fileset.

The following commands are useful:

To load SMC-R:

mkdev -c tcpip -t smcr

To enable SMC-R:

chdev -l smcr0 -a enabled=1

To add a list of TCP ports that should use SMC-R:

chdev -l smcr0 -a port_range="any | comma-separated list of ports"

To add a list of IP addresses that should use SMC-R:

chdev -l smcr0 -a ip_addr_list="any | comma-separated list of IP addresses"

To unload SMC-R, first disable it:

chdev -l smcr0 -a enabled=0

Then issue rmdev:

rmdev -l smcr0
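When SMC-R is set up from a script, the command sequence above can be generated as argument lists. The following Python sketch uses only the mkdev, chdev, and rmdev invocations shown in this document; the function names are hypothetical, and it defaults to a dry run that just prints the commands. Actually running them requires an AIX system with the ofed.smcr fileset installed and root authority.

```python
from __future__ import annotations

import subprocess


def smcr_setup_commands(ports: list[int] | None = None,
                        ips: list[str] | None = None) -> list[list[str]]:
    """Documented load/enable/filter commands as argv lists ("any" when no list is given)."""
    port_value = ",".join(str(p) for p in ports) if ports else "any"
    ip_value = ",".join(ips) if ips else "any"
    return [
        ["mkdev", "-c", "tcpip", "-t", "smcr"],                      # load SMC-R
        ["chdev", "-l", "smcr0", "-a", "enabled=1"],                 # enable SMC-R
        ["chdev", "-l", "smcr0", "-a", f"port_range={port_value}"],  # TCP ports
        ["chdev", "-l", "smcr0", "-a", f"ip_addr_list={ip_value}"],  # IP addresses
    ]


def smcr_unload_commands() -> list[list[str]]:
    # Order matters: disable SMC-R first, then remove the device.
    return [
        ["chdev", "-l", "smcr0", "-a", "enabled=0"],
        ["rmdev", "-l", "smcr0"],
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    for argv in commands:
        print(" ".join(argv))
        if not dry_run:
            # Needs AIX with the ofed.smcr fileset installed and root authority.
            subprocess.run(argv, check=True)


run(smcr_setup_commands(ports=[5001, 5002], ips=["192.0.2.10", "192.0.2.20"]))
```

Passing argument lists instead of a shell string avoids the quoting that the port_range and ip_addr_list values need when typed at a shell prompt. The port numbers and documentation-range addresses are placeholders.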

SMC-R supports the following attributes for tuning. Running 'lsattr -El smcr0' lists them with their current values:

| Attribute | Value | Description | User-settable |
|---|---|---|---|
| conns_per_lg | 16 | Number of connections per link group | True |
| enabled | 1 | SMCR Enabled | True |
| init_snd_pools | 2 | Number of send buffer pools to allocate quickly | True |
| ip_addr_list | any | IP Address List | True |
| max_memory | 512 | Max Memory in MB | True |
| port_range | any | TCP Port Range | True |
| rx_intr_packets | 0 | Number of packets to process in interrupt context | True |
| tx_intr_cnt | 128 | Tx Interrupt event coalesce counter | True |
| tx_intr_time | 10000 | Tx Interrupt event coalesce timer (microseconds) | True |
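To compare these attributes across systems, the 'lsattr -El smcr0' output can be parsed into a dictionary. This Python sketch assumes each line has the layout shown above (name, single-token value, description words, user-settable flag); the sample lines are copied from that listing. On an AIX system the text could instead come from subprocess.run(["lsattr", "-El", "smcr0"], capture_output=True, text=True).stdout.

```python
def parse_lsattr(output: str) -> dict[str, dict[str, str]]:
    """Parse 'lsattr -El <device>' output: name, value, description words, user-settable flag.
    Assumes each value is a single token, as in the smcr0 listing above."""
    attrs = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or incomplete lines
        name, value, *description, settable = fields
        attrs[name] = {
            "value": value,
            "description": " ".join(description),
            "user_settable": settable,
        }
    return attrs


SAMPLE = """\
conns_per_lg 16 Number of connections per link group True
enabled 1 SMCR Enabled True
max_memory 512 Max Memory in MB True
tx_intr_time 10000 Tx Interrupt event coalesce timer (microseconds) True
"""

attrs = parse_lsattr(SAMPLE)
print(attrs["conns_per_lg"]["value"])        # 16
print(attrs["tx_intr_time"]["description"])  # Tx Interrupt event coalesce timer (microseconds)
```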

- SMC-R device-specific statistics

AIX SMC-R supports the entstat command, which displays run-time statistics. Use 'entstat -d smcr0'.

The following are some important run-time statistics that it currently provides:

- Active Link Groups: Number of link groups created. If a TCP application is started from Host A to Host B, this should show 1.

- TCP Payload Bytes Sent: Amount of data sent through SMC-R

- TCP Payload Bytes Recv: Amount of data received through SMC-R

- CLC Messages Sent: Number of CLC messages sent.

- CLC Messages Recv: Number of CLC messages received.

- LLC Messages Sent: Number of LLC messages sent.

- LLC Messages Recv: Number of LLC messages received.

- TCP Failback Count: Number of connections that fell back to TCP during CLC negotiation.

- TCP connections reset count during CLC: Number of TCP connections that were reset during CLC negotiation because of error conditions.

- Active TCP connections using SMC-R: Active TCP connections that are currently using SMC-R.

SMC-R also provides the following run-time memory allocation information:

- Total amount of memory allocated on the send (TX) side

- Total number of TX pools of 128-byte mbufs allocated

- Total number of 128-byte TX mbufs created

- Total number of 128-byte TX mbufs used from the pool

- Total number of 128-byte mbufs currently in use

- Total amount of memory allocated on the receive side (RMBs)

- Identifying if traffic is flowing through SMC-R

There are several ways to check whether traffic is flowing through SMC-R:

- Run 'entstat -d smcr0' and check that "Active TCP connections using SMC-R" is not zero. Make sure that this value matches the number of TCP connections that the workload uses.

- In the same entstat output, check that "TCP Payload Bytes Sent" and "TCP Payload Bytes Recv" increase while the workload is running.

- Run tcpdump on the interface on which the workload is started. It shows the initial SMC-R handshake exchanged over TCP. (Note that the actual payload is sent over the RDMA connection and is not captured by tcpdump.)
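The first two checks can be automated by comparing two entstat samples taken while the workload runs. This Python sketch rests on an assumption that must be verified against real output: that each statistic appears on its own line as "Label: integer" with the labels used in this document. The sample strings below are hypothetical, not captured entstat output.

```python
import re

# ASSUMPTION: each statistic appears on its own line as "Label: <integer>".
# Check the real 'entstat -d smcr0' output and adjust this pattern if it differs.
STAT_LINE = re.compile(r"^[ \t]*([^:\n]+?)[ \t]*:[ \t]*(\d+)[ \t]*$", re.MULTILINE)


def parse_stats(text: str) -> dict[str, int]:
    return {m.group(1): int(m.group(2)) for m in STAT_LINE.finditer(text)}


def smcr_traffic_ok(before: str, after: str, expected_conns: int) -> bool:
    """Apply the first two checks to two samples taken while the workload runs."""
    b, a = parse_stats(before), parse_stats(after)
    active = a.get("Active TCP connections using SMC-R", 0)
    return (
        active > 0
        and active == expected_conns
        and a.get("TCP Payload Bytes Sent", 0) > b.get("TCP Payload Bytes Sent", 0)
        and a.get("TCP Payload Bytes Recv", 0) > b.get("TCP Payload Bytes Recv", 0)
    )


# Hypothetical samples, not real entstat output.
before = "Active TCP connections using SMC-R: 4\nTCP Payload Bytes Sent: 1000\nTCP Payload Bytes Recv: 900\n"
after = "Active TCP connections using SMC-R: 4\nTCP Payload Bytes Sent: 5000\nTCP Payload Bytes Recv: 4200\n"
print(smcr_traffic_ok(before, after, expected_conns=4))  # True
```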

- Identifying TCP workloads to enable SMC-R

The following are typical workloads that can benefit from SMC-R:

- Long-lived TCP connections. Short-lived connections do not benefit from SMC-R because of the cost of the initial rendezvous protocol exchange.

- Workloads with higher network packet rates. Workloads with lower packet rates typically do not benefit from SMC-R.

- For streaming workloads, SMC-R benefits are higher for large data transfers.
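Why connection lifetime matters can be seen from a simple break-even calculation: SMC-R pays a one-time rendezvous cost per connection and then saves some amount per message, so a connection must carry at least ceil(setup cost / per-message saving) messages before it comes out ahead. The Python sketch below is a back-of-envelope model only; both inputs are hypothetical values you would have to measure for your own workload, and the example numbers are not measurements.

```python
import math


def breakeven_messages(setup_cost_us: float, saving_per_msg_us: float) -> int:
    """Smallest number of messages for which the per-message saving of SMC-R
    repays its one-time rendezvous cost: ceil(setup_cost / saving_per_msg).
    Both inputs are hypothetical values measured for a specific workload."""
    if saving_per_msg_us <= 0:
        raise ValueError("without a per-message saving, SMC-R never pays off")
    return math.ceil(setup_cost_us / saving_per_msg_us)


# Illustrative numbers only: 200 us of extra setup, 5 us saved per message.
print(breakeven_messages(200.0, 5.0))  # 40
```

A connection that exchanges fewer messages than the break-even count spends more on the rendezvous than it saves, which is the situation of the short-lived and low-packet-rate workloads listed above.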

- Performance tuning considerations

The following attributes can be used to tune performance based on the amount of network traffic generated by a workload and the availability of system resources.

To change the conns_per_lg attribute, use:

chdev -l smcr0 -a conns_per_lg=<value>

The default value for this attribute is 16. This attribute determines how many TCP connections are assigned to one link group.

If this value is lower, fewer TCP connections are assigned to each link group. This reduces the load on each SMC-R link, which reduces lock contention and may improve throughput. For example, if this value is set to 16 and the workload creates 64 connections, a new link group is created after every 16th connection. After all 64 connections are created, there are 4 link groups, each serving 16 TCP connections.
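The arithmetic in this example is a ceiling division. The Python sketch below reproduces it, assuming (as the example does) that all connections go to the same peer and stay open; the function names are illustrative only.

```python
def link_groups_needed(connections: int, conns_per_lg: int = 16) -> int:
    """Number of link groups if a new group is opened after every conns_per_lg
    connections (integer ceiling division)."""
    if conns_per_lg < 1:
        raise ValueError("conns_per_lg must be at least 1")
    return -(-connections // conns_per_lg)


def link_group_index(conn_index: int, conns_per_lg: int = 16) -> int:
    """0-based link group used by the conn_index-th (0-based) connection."""
    return conn_index // conns_per_lg


print(link_groups_needed(64))     # 4, as in the example above
print(link_groups_needed(64, 8))  # 8: smaller groups, less load per link
print(link_group_index(16))       # 1: the 17th connection opens the second group
```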

To change the init_snd_pools attribute, use:

chdev -l smcr0 -a init_snd_pools=<value>

The default value for this attribute is 2. This attribute determines how many send memory pools are created initially when SMC-R is loaded. After this value is changed, SMC-R must be reloaded, or the system rebooted, for the change to take effect.

When there are more CPUs and the workload uses multiple threads, increasing this value helps reduce the bottleneck in send buffer allocation and may improve throughput. However, increasing this value also increases the memory used by SMC-R.

To change the rx_intr_packets attribute, use:

chdev -l smcr0 -a rx_intr_packets=<value>

This attribute determines how many incoming packets are processed in the interrupt path before the receive processing is offloaded to a different thread. Its default value is 0.

Increasing this value may help workloads that push data at a lower packet rate. Processing a certain number of packets in the interrupt path itself provides faster response time when the packet rate is low.
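The effect of rx_intr_packets on a single receive burst can be modeled as a simple split. This Python sketch is an interpretation of the description above, not the AIX receive path: it assumes that up to rx_intr_packets packets of a burst are handled in the interrupt path and the remainder is handed to another thread.

```python
def split_rx_work(pending: int, rx_intr_packets: int = 0) -> tuple[int, int]:
    """Split one receive burst into (packets handled in the interrupt path,
    packets handed to a separate thread) under the behavior described above."""
    in_interrupt = min(pending, max(rx_intr_packets, 0))
    return in_interrupt, pending - in_interrupt


print(split_rx_work(10))     # (0, 10): default 0, everything is offloaded
print(split_rx_work(3, 4))   # (3, 0): a low-rate burst finishes in the interrupt path
print(split_rx_work(10, 4))  # (4, 6)
```

The second case is the one the text describes: at low packet rates a small burst is fully processed in the interrupt path, so no thread handoff delays the response.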

- tx_intr_cnt and tx_intr_time:

These are interrupt coalescing attributes that are set in the RoCE adapter. They control how often the network adapter generates interrupts to inform the SMC-R layer that transmission of sent data has completed. SMC-R uses this interrupt to free the resources used for sending that chunk of data. The default values are 128 packets for tx_intr_cnt and 10000 microseconds for tx_intr_time.

When these values are set in the adapter, it generates an interrupt either when the number of packets it has sent reaches tx_intr_cnt or when the time since the last interrupt reaches tx_intr_time, whichever happens first.

If these values are reduced, more interrupts are generated, leading to increased CPU usage on the transmit side. For certain workloads, decreasing these values may also increase throughput, at the cost of higher CPU usage.
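The "whichever happens first" rule can be simulated to see how the two attributes trade interrupt count against latency. This Python sketch is a simplified model of the rule as stated, not adapter firmware: it assumes the timer is measured from the previous interrupt and only fires when completions are pending. The completion times are hypothetical.

```python
def coalesced_interrupts(completion_times_us, tx_intr_cnt=128, tx_intr_time=10_000):
    """Times (microseconds) at which a TX-completion interrupt would be raised.

    Simplified model of the rule above: an interrupt fires when tx_intr_cnt
    completions are pending or when tx_intr_time microseconds have passed
    since the previous interrupt (with work pending), whichever comes first."""
    interrupts = []
    last = 0     # time of the previous interrupt (start of observation)
    pending = 0  # completions not yet reported to SMC-R
    for t in sorted(completion_times_us):
        # The timer expired between completions: flush what is pending.
        if pending and t >= last + tx_intr_time:
            last += tx_intr_time
            interrupts.append(last)
            pending = 0
        pending += 1
        # Count threshold reached, or the timer had already expired when this
        # completion arrived: interrupt immediately.
        if pending >= tx_intr_cnt or t - last >= tx_intr_time:
            last = t
            interrupts.append(t)
            pending = 0
    if pending:  # trailing completions are flushed when the timer expires
        interrupts.append(last + tx_intr_time)
    return interrupts


sends = range(0, 1000, 10)  # 100 completions, 10 us apart (hypothetical)
print(len(coalesced_interrupts(sends, tx_intr_cnt=32)))  # 4
print(len(coalesced_interrupts(sends, tx_intr_cnt=8)))   # 13: more interrupts, more CPU
```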

Note: The default tunable settings are optimal for most workloads. However, these tunables may need changes for specific workload characteristics and system resources. We recommend trying tunable changes in a test environment before applying them to a production setup.

If traffic is not flowing through SMC-R, go through the following checklist:

- Check that both peers' RNIC adapters are in the same network/subnet.

- Check that the interfaces on both peer systems have the same netmask.

- Check that SMC-R is enabled in the 'lsattr -El smcr0' output.

- Check that connections are being established on RoCE adapters (ConnectX-2, ConnectX-3 and ConnectX-4).

- On the RoCE adapter, check that the stack type is set to 'ofed' on hba0 (lsattr -El hba0) and that the RDMA capability is set to 'enabled' or 'desired' on the ent device (lsattr -El entx).

- Check that RDMA connectivity works by running 'rping -s' on one host and 'rping -c -a <peer-ip address>' on the other host.

- In kdb, check that an smcr_device structure is created for every RoCE interface by running 'smcr dev' at the kdb prompt.

- Capture a packet trace using iptrace or tcpdump, open the file in Wireshark, change the 'Decode As' option to SMCR, and verify the traffic flow.
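The first two checklist items (same subnet, same netmask) can be verified with Python's standard ipaddress module. In this sketch the function name is illustrative and the addresses are documentation-range placeholders; the netmask may be given in dotted form for IPv4 or as a prefix length for IPv6.

```python
import ipaddress


def same_subnet(local_ip: str, local_mask: str, peer_ip: str, peer_mask: str) -> bool:
    """True when both interfaces use the same netmask (or IPv6 prefix length) and
    their addresses fall in the same subnet. Network equality compares both the
    network address and the mask, so it covers both checks at once."""
    local_net = ipaddress.ip_interface(f"{local_ip}/{local_mask}").network
    peer_net = ipaddress.ip_interface(f"{peer_ip}/{peer_mask}").network
    return local_net == peer_net


print(same_subnet("192.0.2.10", "255.255.255.0", "192.0.2.20", "255.255.255.0"))  # True
print(same_subnet("192.0.2.10", "255.255.255.0", "192.0.2.20", "255.255.0.0"))    # False: netmask differs
print(same_subnet("2001:db8::1", "64", "2001:db8::2", "64"))                      # True
```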

- SMC-R performance benefits relative to TCP for FTP workload

The following tests were performed by reading from /dev/zero and writing to the remote peer's /dev/null, to rule out disk-related latencies.

The following graph shows SMC-R achieving 45% higher throughput than TCP, while reducing CPU usage on the server and client ends of the FTP connections by 16% and 20%, respectively.

The following chart represents Request-Response workload performance of SMC-R with respect to TCP, measured using AWM (Application Workload Modeler, https://www-01.ibm.com/software/network/awm/awmzos.html) between AIX and z/OS. The blue bars show the throughput of SMC-R relative to TCP (higher is better). The green and yellow bars show the CPU utilization of transactions at the server and client ends. Across payload sizes, SMC-R uses less CPU. Finally, the purple bars show the response time (lower is better). SMC-R provides lower response time across varied payload sizes.

The following chart represents Request-Response workload performance of SMC-R with respect to TCP, measured using the 'netop' tool between a P8 client and a P8 server, both running AIX and both having dual-port ConnectX-4 adapters. The blue bars show the throughput of SMC-R relative to TCP (higher is better). The grey and yellow bars show the CPU utilization of transactions at the server and client ends. Across payload sizes, SMC-R uses less CPU. Finally, the orange bars show the response time (lower is better). SMC-R provides lower response time across varied payload sizes.

Note: The actual performance benefits that can be achieved by using SMC-R will be unique to each workload and other system environmental factors (CPU and memory utilization, network bandwidth, network path and congestion, etc.)