INTRODUCTION

As you begin to validate the performance capabilities of your Kafka cluster, it’s a good idea to have a firm understanding of your scaling, throughput, and performance requirements. The workload generator application lets you generate workloads that resemble those in your environment.

Lab Objective

The objective of this lab is to demonstrate, step by step, how to download and install the workload generation application. The workload generation application is a pre-built Java application that generates events at a configurable rate. Alternatively, you can download and modify its source to simulate your own workload.

In this lab you will configure properties for an Apache Kafka producer that allow it to connect to an IBM Event Streams instance. Once connected, the producer will send messages to a topic within that IBM Event Streams instance.

App details: https://github.com/IBM/event-streams-sample-producer

Environment used for this lab

- IBM Cloud Pak for Integration 2020.2.1 or later

- IBM Event Streams 10.0

- Apache Kafka 1.3.2

- Java version 8

- Apache Maven 10.14.6 or later

Lab Environment Pre-Requisites

- The Cloud Pak for Integration has been deployed and the access credentials are available

- An available IBM Event Streams 10.0 instance

- Java version 8 installed in your local environment

- Apache Maven installed in your local environment

Getting started with the Producer Generation Application

- Log in to your Cloud Pak for Integration instance. Once you've logged in, you'll see the IBM Cloud Pak for Integration Platform Home page, also known as the navigation console.

Downloading your Sample Producer Source Code

- Go to the Navigation Console and select Runtimes.

- Select the instance you created for this lab. In our case it's demo-env.

- Select ToolBox. Then select View in GitHub in the Workload generation application panel.

- There are two options for running this producer. In this lab we are going to use the pre-built es-producer.jar. To download it, scroll down to the Getting Started section and select download. For now, you only need the es-producer.jar file; you will run it later, after configuration is complete.

- Select es-producer.jar to download it to your local environment.

- Once your download is complete, move the es-producer.jar file to the project directory where you will run the sample producer application.

Configuring the Producer Application

- Open a terminal and navigate to your project directory. Next, let’s create the configuration file. With the pre-built producer, you have to run the es-producer.jar with the -g option to generate the configuration file. At the command prompt enter:

java -jar es-producer.jar -g

You should see the following results:

A 'producer.config' file has been successfully generated in your current working directory. Modify this file as described and provide this file to future runs via the --producer.config argument.

A producer.config file has been created in the project directory.

- Open producer.config in an editor. There are a few parameters you must configure to allow a producer application to connect to an IBM Event Streams instance:

- Bootstrap Server

- Cluster Certificate - SSL Truststore

- SCRAM SHA 512 Credentials

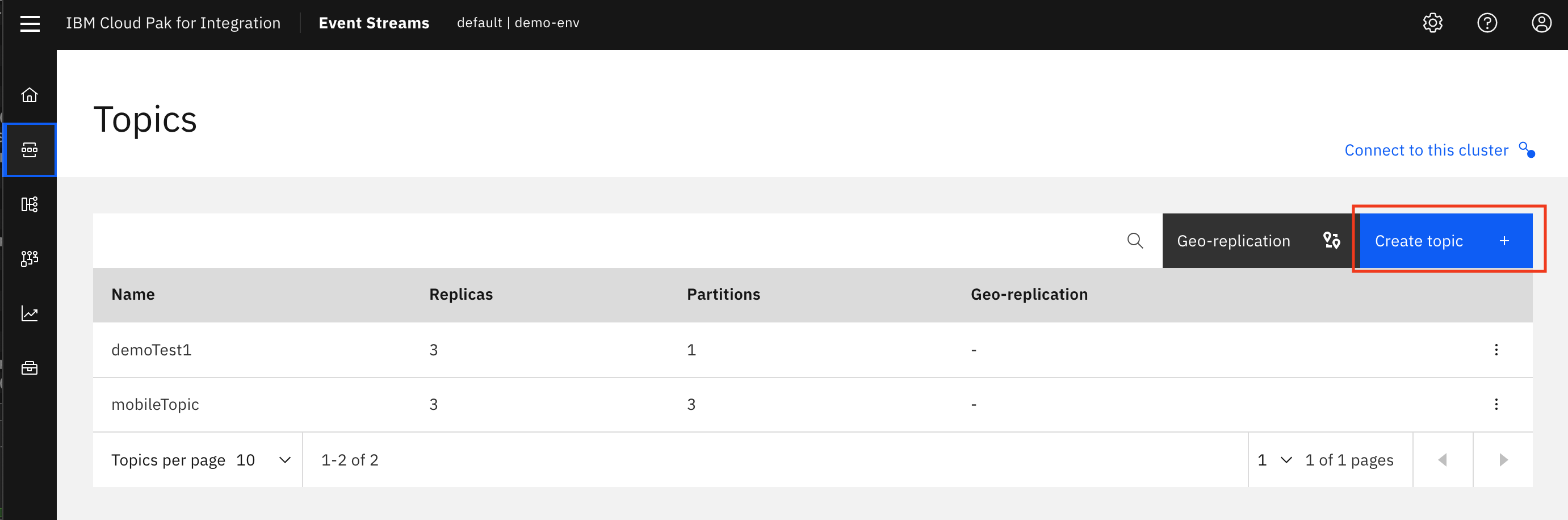

- Our first step is to create the topic where the producer application will send messages. Go back to the Event Streams console and select Topics. Then select Create topics.

- Enter the topic name wl-topic and change the Partitions value to 3. Keep the other default values and select Create topic.

- Now let's get the security parameters required to connect your producer application to your IBM Event Streams instance. Select Connect to this cluster.

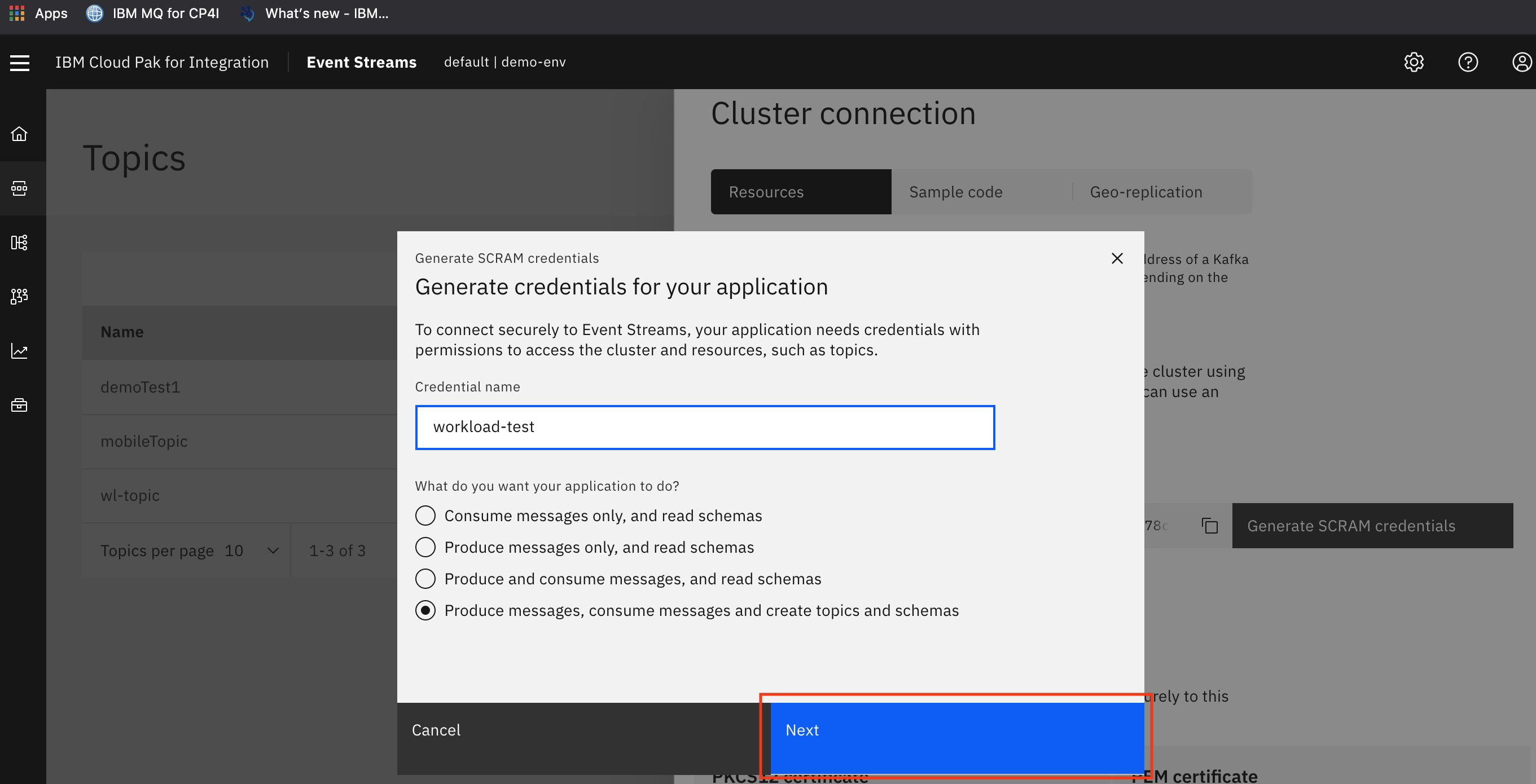

- Go to the Resources tab and scroll down to the Kafka listener and credentials section. Make sure you have selected External. Select Generate SCRAM credentials next to the listener chosen as the bootstrap.servers configuration.

- Credential name = workload-test

- Select: Produce messages, consume messages and create topics and schemas

- Select: Next

- Select All topics and then select Next.

- Select All Consumer Groups and then select Next.

- Select No Transactional IDs and then select Generate credentials.

- Copy the SCRAM username and password and store them in a safe place; you will use them later.

- Copy the Bootstrap server address and store it in a safe place; you will use it later.
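The SCRAM username and password end up in a single sasl.jaas.config value in producer.config. The following is a minimal sketch, assuming the standard Apache Kafka ScramLoginModule syntax; the JaasConfigBuilder class and scramJaasConfig method are hypothetical, and to stay simple the sketch rejects quotes and backslashes instead of escaping them.

```java
/** Hypothetical helper that formats SCRAM credentials as a Kafka sasl.jaas.config value. */
public class JaasConfigBuilder {

    static String scramJaasConfig(String username, String password) {
        for (String s : new String[] {username, password}) {
            if (s == null || s.isEmpty()) {
                throw new IllegalArgumentException("username and password must be non-empty");
            }
            // Keep the sketch simple: refuse characters that would need escaping inside quotes.
            if (s.indexOf('"') >= 0 || s.indexOf('\\') >= 0) {
                throw new IllegalArgumentException("quotes and backslashes are not supported here");
            }
        }
        return "org.apache.kafka.common.security.scram.ScramLoginModule required"
                + " username=\"" + username + "\""
                + " password=\"" + password + "\";";
    }

    public static void main(String[] args) {
        // Placeholder values; substitute the SCRAM credentials you generated.
        System.out.println("sasl.jaas.config=" + scramJaasConfig("<username>", "<password>"));
    }
}
```

The printed line can be pasted over the commented sasl.jaas.config entry once the placeholders are replaced.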

- Now, let's obtain the Event Streams PKCS12 certificate. Scroll down to the Certificate section and select Download certificate.

- Copy the downloaded es-cert.p12 file to your project directory. Then navigate to your project directory and open the producer.config file in an editor.
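Before running the producer, you may want to confirm that es-cert.p12 actually opens with the truststore password you plan to enter in producer.config. This sketch uses only the JDK's java.security.KeyStore API; the TruststoreCheck class name, the default file name, and the command-line arguments are hypothetical choices for illustration.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.security.GeneralSecurityException;
import java.security.KeyStore;
import java.util.Collections;
import java.util.List;

/** Hypothetical check that a PKCS12 truststore opens with the given password. */
public class TruststoreCheck {

    /** Loads a PKCS12 store and returns its entry aliases; throws if it cannot be read. */
    static List<String> aliases(InputStream p12, char[] password)
            throws IOException, GeneralSecurityException {
        KeyStore store = KeyStore.getInstance("PKCS12");
        store.load(p12, password); // a wrong password or corrupt file typically surfaces as IOException
        return Collections.list(store.aliases());
    }

    public static void main(String[] args) throws Exception {
        String file = args.length > 0 ? args[0] : "es-cert.p12";
        // Passing the password as an argument is convenient for a test run but may leak into shell history.
        char[] password = (args.length > 1 ? args[1] : "").toCharArray();
        try (InputStream in = Files.newInputStream(Paths.get(file))) {
            List<String> found = aliases(in, password);
            System.out.println("Opened " + file + " with " + found.size() + " entries: " + found);
        }
    }
}
```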

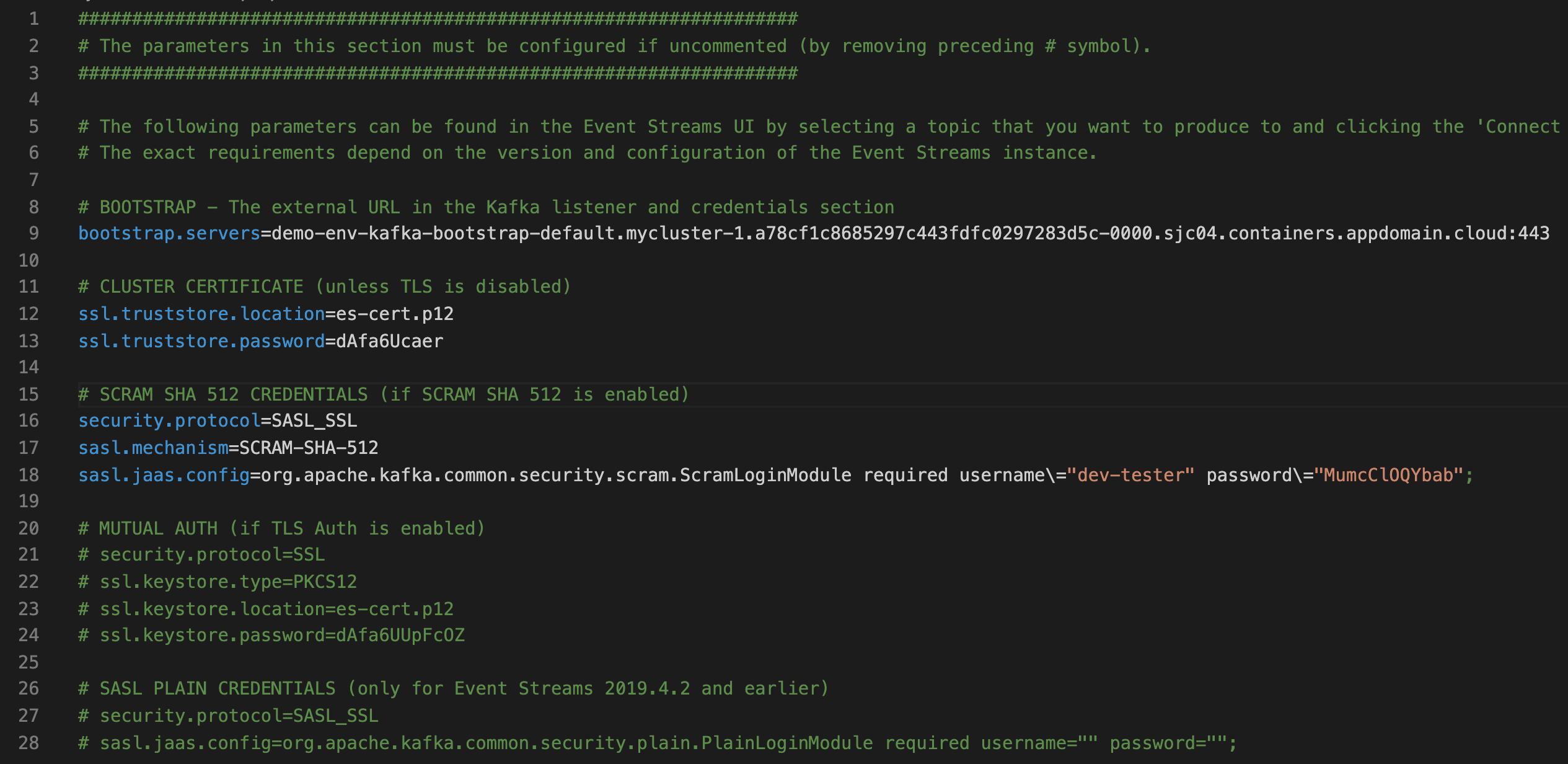

- Now let’s enter the parameters into the producer.config file. You’ll need to make at least five changes. For this lab we will accept all the other defaults.

- Enter your bootstrap server address in bootstrap.servers

- Enter the truststore location (the path to es-cert.p12) and the truststore.password

- Uncomment security.protocol=SASL_SSL

- Uncomment sasl.mechanism=SCRAM-SHA-512

- Enter the SCRAM username and password in sasl.jaas.config

- Once changes have been made, save your producer.config file.
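After saving, a quick check can catch a key that is still commented out or empty. This is a sketch under the assumption that your generated producer.config uses the standard Apache Kafka client key names (bootstrap.servers, ssl.truststore.location, ssl.truststore.password, security.protocol, sasl.mechanism, sasl.jaas.config); the ProducerConfigCheck class is hypothetical and not part of the sample producer.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

/** Hypothetical helper: reports required producer.config keys that are still missing or blank. */
public class ProducerConfigCheck {

    // Standard Kafka client keys assumed to correspond to the edits listed above.
    static final List<String> REQUIRED_KEYS = Arrays.asList(
            "bootstrap.servers",
            "ssl.truststore.location",
            "ssl.truststore.password",
            "security.protocol",
            "sasl.mechanism",
            "sasl.jaas.config");

    /** Returns required keys that are absent, still commented out, or empty. */
    static List<String> missingKeys(Reader config) {
        Properties props = new Properties();
        try {
            props.load(config); // lines starting with '#' or '!' are comments and are skipped
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> missing = new ArrayList<>();
        for (String key : REQUIRED_KEYS) {
            String value = props.getProperty(key);
            if (value == null || value.trim().isEmpty()) {
                missing.add(key);
            }
        }
        return missing;
    }

    public static void main(String[] args) throws IOException {
        Path path = Paths.get(args.length > 0 ? args[0] : "producer.config");
        try (Reader reader = Files.newBufferedReader(path)) {
            List<String> missing = missingKeys(reader);
            System.out.println(missing.isEmpty()
                    ? "All required keys are set."
                    : "Missing or blank: " + missing);
        }
    }
}
```

Because java.util.Properties treats a line starting with # as a comment, a key you forgot to uncomment is reported as missing.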

Running the Producer Application

- Open a terminal and navigate to your project directory. In the terminal run:

java -jar ./es-producer.jar -t wl-topic -s large

A full list of parameters is available at https://github.com/IBM/event-streams-sample-producer.

- Within a few seconds, you will see the producer application generating records and sending them to your topic in the IBM Event Streams cluster.
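If you want to script repeated runs, the same command can be launched from Java with ProcessBuilder. This is a minimal sketch: the WorkloadLauncher class is hypothetical, it assumes java is on your PATH and es-producer.jar is in the working directory, and it appends the --producer.config argument (mentioned in the output of the -g run) only when you pass a path.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical launcher for the pre-built workload generator. */
public class WorkloadLauncher {

    /**
     * Builds the lab's command line: java -jar ./es-producer.jar -t TOPIC -s SIZE,
     * optionally followed by --producer.config PATH.
     */
    static List<String> command(String topic, String size, String configPath) {
        List<String> cmd = new ArrayList<>(Arrays.asList(
                "java", "-jar", "./es-producer.jar", "-t", topic, "-s", size));
        if (configPath != null) {
            cmd.add("--producer.config");
            cmd.add(configPath);
        }
        return cmd;
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // Assumes "java" resolves on the PATH and es-producer.jar is in the working directory.
        ProcessBuilder pb = new ProcessBuilder(command("wl-topic", "large", null));
        pb.inheritIO(); // show the producer's output in this console
        Process process = pb.start();
        int exitCode = process.waitFor(); // blocks until the producer exits
        System.out.println("es-producer.jar exited with code " + exitCode);
    }
}
```

Keeping command construction in its own method makes it easy to loop over several topics or sizes without touching the process-handling code.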

Monitoring Message Workloads in IBM Event Streams

The IBM Event Streams monitoring UI provides information about the health of your environment. At a glance, you can gain insight into the overall health of your Kafka cluster to ensure deployments run smoothly. Additionally, IBM Event Streams monitoring provides detailed aggregated information at the topic, producer, and message levels.

Monitoring topics and messages

- Go back to the Event Streams console, select Topics, and then select the topic wl-topic.

- Here you can monitor the number of producers, the messages produced per second, and the data produced per second for a specific topic.

- To view live messages and payloads, select Messages.

Congratulations! You have successfully run your Workload generation application.

Learning summary

In summary, you have learned the following in this lab:

- How to configure and run an Apache Kafka Java producer application that generates workload simulation.

- How to retrieve the connection and security properties required for an Apache Kafka producer to connect to an IBM Event Streams instance.

- How to use the IBM Event Streams monitoring UI to track the performance and status of topics and messages.
