Hello Robert,

We replicate this tables in two subscriptions: one subscription only in LiveAudit for daily incremental load data to DWH; second sub in standard or AdaptiveApply for tables failed up to add/update rows.

And all subscriptions read the same log files and shared staging store. And SCN is the same for both subscriptions.

DDL files for source and target failed to upload:

so I post them here:

Source DDL:

-- Create table

create table T_BAL

(

val_id NUMBER(10) not null,

dep_id NUMBER(10) not null,

id NUMBER(10) not null,

flzo NUMBER(1) default 0 not null,

planfl NUMBER(1) default 0 not null,

fromdate DATE not null,

bal_in NUMBER(18,2) not null,

bal_out NUMBER(18,2) not null,

specfl NUMBER(1) default 0 not null,

natval_in NUMBER(18,2),

natval_out NUMBER(18,2)

)

tablespace COLVIR

pctfree 10

initrans 1

maxtrans 255

storage

(

initial 8M

next 1M

minextents 1

maxextents unlimited

);

-- Create/Recreate indexes

create index IE_T_BAL_DATE on T_BAL (FROMDATE, DEP_ID)

tablespace COLVIR_INDEX

pctfree 10

initrans 2

maxtrans 255

storage

(

initial 8M

next 1M

minextents 1

maxextents unlimited

)

compress;

-- Create/Recreate primary, unique and foreign key constraints

alter table T_BAL

add constraint PK_T_BAL primary key (DEP_ID, ID, VAL_ID, FLZO, PLANFL, FROMDATE)

using index

tablespace COLVIR_INDEX

pctfree 10

initrans 2

maxtrans 255

storage

(

initial 27M

next 1M

minextents 1

maxextents unlimited

);

alter table T_BAL

add constraint FK_T_BAL_T_ACC foreign key (DEP_ID, ID)

references T_ACC (DEP_ID, ID);

alter table T_BAL

add constraint FK_T_BAL_T_VAL foreign key (VAL_ID)

references T_VAL_STD (ID);

-- Create/Recreate check constraints

alter table T_BAL

add constraint CKC_T_BAL_FLZO

check (FLZO IN (0, 1));

alter table T_BAL

add constraint CKC_T_BAL_PLANFL

check (PLANFL IN (0, 1, 2));

alter table T_BAL

add constraint CKC_T_BAL_SPECFL

check (SPECFL IN (0, 1));

Target table DDL:

create table COLVIR.T_BAL

(

val_id NUMBER(10) not null,

dep_id NUMBER(10) not null,

id NUMBER(10) not null,

flzo NUMBER(1) default 0 not null,

planfl NUMBER(1) default 0 not null,

fromdate DATE not null,

bal_in NUMBER(18,2) not null,

bal_out NUMBER(18,2) not null,

specfl NUMBER(1) default 0 not null,

natval_in NUMBER(18,2),

natval_out NUMBER(18,2),

cdc_tv TIMESTAMP(6) default TO_TIMESTAMP('01.01.1980 00:00:00.000000', 'DD.MM.YYYY HH24:MI:SS.FF') not null,

cdc_scn NUMBER(22) default -1 not null

)

tablespace COLVIR_DATA

pctfree 10

initrans 1

maxtrans 255

storage

(

initial 64K

next 1M

minextents 1

maxextents unlimited

);

-- Create/Recreate indexes

create index COLVIR.IE_T_BAL_DATE on COLVIR.T_BAL (FROMDATE, DEP_ID)

tablespace COLVIR_INDEX

pctfree 10

initrans 2

maxtrans 255

storage

(

initial 64K

next 1M

minextents 1

maxextents unlimited

);

create index COLVIR.IE_T_BAL_DEP_FROMDATE on COLVIR.T_BAL (DEP_ID, ID, FROMDATE)

tablespace COLVIR_INDEX

pctfree 10

initrans 2

maxtrans 255

storage

(

initial 64K

next 1M

minextents 1

maxextents unlimited

);

-- Create/Recreate primary, unique and foreign key constraints

alter table COLVIR.T_BAL

add constraint PK_T_BAL primary key (DEP_ID, ID, VAL_ID, FLZO, PLANFL, FROMDATE)

using index

tablespace COLVIR_INDEX

pctfree 10

initrans 2

maxtrans 255

storage

(

initial 64K

next 1M

minextents 1

maxextents unlimited

);

Best wishes,

Anuar

------------------------------

Anuar Akhsambayev

------------------------------

Original Message:

Sent: Tue September 27, 2022 05:15 AM

From: Robert Philo

Subject: Failed to add/update a row to table

Hello Anuar

Please post the definitions of the source and target tables

I assume that you are using standard replication or adaptive apply as a fall-back option.

I don't see the relevance of the transaction time, or the SCN unless you are using LiveAudit mapping type.

Thanks

Robert

------------------------------

Robert Philo

Original Message:

Sent: Tue September 27, 2022 02:03 AM

From: Anuar Akhsambayev

Subject: Failed to add/update a row to table

Hi Robert,

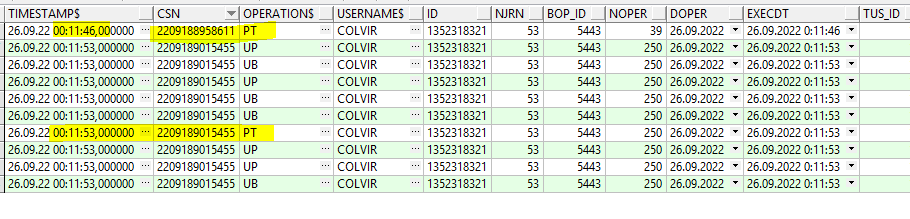

We've got this type error when records with same primary keys applied to insert (operation type - PT) within different (SCN) transactions.

For example, in screenshots below, there is two insert operations come sequently from CDC within different SCN. Time difference between them is 0.07 sec.

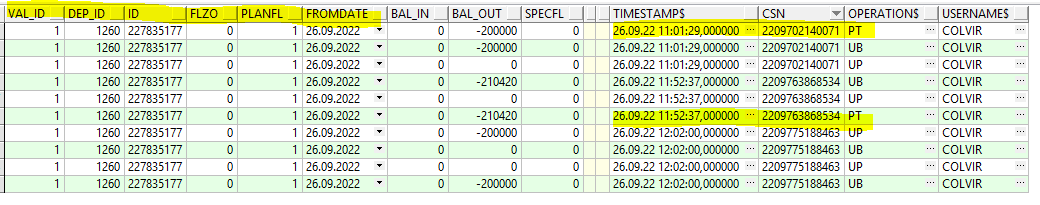

But there are cases when difference between two inserts is more than seconds, for example, in case below, 51 minute between two insert operation and I assume that they were processed in different log files.

But there are cases when difference between two inserts is more than seconds, for example, in case below, 51 minute between two insert operation and I assume that they were processed in different log files.

Is this parsing error? Technical support also couldn't suggest any decision to this.

Is this parsing error? Technical support also couldn't suggest any decision to this.

According to documentation it is recommended to reduce batch size to 1 but then we face to latency problem with log reading and parsing due to a lot number of data to be applied from source.

We have this problem for a long time.

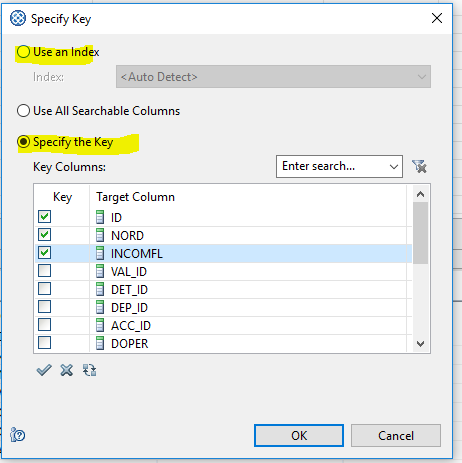

P.S. Target tables have primary keys exactly as in source, and we tried to specify key columns as using "Use an Index" as "Specify the Key" but error occurs in both cases.

Regards,

Anuar

------------------------------

Anuar Akhsambayev

Original Message:

Sent: Mon September 26, 2022 07:58 AM

From: Robert Philo

Subject: Failed to add/update a row to table

Hello

Assuming that you have not manually updated the bookmarks and processed the some log entries twice, you are getting unique constraint violations on the target apply because what is unique on the source is not unique on the target for any of the following reasons:

1) There are unique constraints on the target that are more restrictive than on the source - e.g. an additional unique constraint on the target, or a unique with say 3 columns on the source and only 2 of the columns on the target;

2)Columns in the unique constraint specification on the target are being truncated or losing precision resulting in a loss of uniqueness - e.g. varchar(40) on source and varchar(30 on target, or different encodings on source and target resulting in the data on target requiring more storage than on source, or timestamps with only precision to whole seconds on target

Suggest that you review and compare the definition of the source and target table that is failing as a first step

Using adaptive apply will not resolve the core problem as you acknowledge

If you are unable to determine the root cause, I suggest you open a case

Regards

------------------------------

Robert Philo

Original Message:

Sent: Mon September 26, 2022 07:36 AM

From: Anuar Akhsambayev

Subject: Failed to add/update a row to table

Hi everyone!

We have IIDR CDC (build 11.4.0.4-5652) for Oracle source/target databases.

And there is an error during applying to add or update record to target database:

1) CDC couldn't update records which were inserted earlier to target database: it failed with unique constraint error:

"Event ID: 315

Publisher(S)/Subscriber(T): T

System ID: CBS001

Event Text: Failed to add a row to table COLVIR.T_LIMJRN. ID: [18286567] NJRN: [4] . Database operation failed.

A SQL exception has occurred. The SQL error code is '1'. The SQL state is: 23000. The error message is:

[CDC][Oracle JDBC Driver][Oracle]ORA-00001: unique constraint (COLVIR.PK_T_LIMJRN) violated"

2) CDC couldn't insert a new record when batch of more than 1 rows comes input CDC and have PT, UP, UB or DL operations in one batch within the same ID.

"Event ID: 317

Publisher(S)/Subscriber(T): T

System ID: CBS000

Event Text: Failed to update a row in table COLVIR.TT_POINT. ID: [25925606] NORD: [88105] . IBM InfoSphere Data Replication applied a batch of 100 rows and the JDBC driver reported back that 0 rows have been updated for entry 3 from the batch. This may mean that the row from the batch could not be found in the database. Please review the documentation and turn off batching or reduce the batch size and retry the operation to narrow down to the rows that are failing to be applied.

".

Current value of "global_max_batch_size" is 100. But reducing the batch size increase log processing time and lead to mirroring latency.

All table are synchronized before starting mirroring and take full refresh. But after a few minutes it failed to add or update rows.

After error we restarted instance and machine where instance is installed and took full refresh of tables but it didn't help. Problem is still occuring time by time even with new records.

We switch mapping type to "Adaptive Apply" for that tables which have such error but it doesn't guarantee full synchronization target to source.

Does anyone face this case? If yes could you please share your experience how did you handle it?

------------------------------

Anuar Akhsambayev

------------------------------

#DataReplication

#DataIntegration