Introduction

Kernel-based Virtual Machine (KVM) is an open-source virtualization technology built into Linux®. Specifically, KVM lets you turn Linux into a hypervisor, allowing a host machine to run multiple isolated virtual environments called “guests” or “virtual machines” (VMs). In doing so, KVM turns Linux into a type-1 (bare-metal) hypervisor. Every hypervisor needs some operating-system-level components to run VMs, such as a memory manager, process scheduler, input/output (I/O) stack, device drivers, security manager, and network stack. Because KVM is part of the Linux kernel, it already has all of these components. Every VM is implemented as a regular Linux process, scheduled by the standard Linux scheduler, with dedicated virtual hardware such as a network card, graphics adapter, CPU(s), memory, and disks.

Proxmox VE is a complete, open-source server management platform for enterprise virtualization. It tightly integrates the KVM hypervisor and Linux Containers (LXC), software-defined storage, and networking functionality on a single platform. With the integrated web-based user interface, you can easily manage VMs and containers, high availability for clusters, and the integrated disaster recovery tools.

VMware Virtual Machine Disk (VMDK) is a file format specification for virtual machine (VM) disks. A file with the .vmdk extension holds the contents of a virtual machine's hard disk; the VM's configuration is stored separately (for example, in the OVF descriptor of an OVA). An OVA is a tar archive of Open Virtualization Format (OVF) files: an OVF descriptor (.ovf), a virtual machine disk image (.vmdk), and a manifest (.mf). An OVA requires a stream-optimized disk image (.vmdk) so that it can be streamed easily over a network link.
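Because an OVA is an ordinary tar archive, you can inspect it before converting anything. The Python sketch below is illustrative only: the helper names are hypothetical, and it assumes the manifest uses lines of the form SHA256(file)= digest (SHA1 and SHA512 lines are handled the same way). It groups the archive members by extension and recomputes each digest listed in the .mf file.

```python
import hashlib
import re
import tarfile
from pathlib import Path

# Assumed manifest line format, e.g. "SHA256(app-disk1.vmdk)= 3f2a..."
MANIFEST_LINE = re.compile(r"^(SHA1|SHA256|SHA512)\((.+)\)\s*=\s*([0-9a-fA-F]+)$")


def list_ova_members(ova_path):
    """Group the members of an OVA (a plain tar archive) by file extension."""
    groups = {}
    with tarfile.open(ova_path) as tar:
        for name in tar.getnames():
            groups.setdefault(Path(name).suffix.lower(), []).append(name)
    return groups


def verify_manifest(ova_path):
    """Recompute each digest listed in the OVA's .mf file; return {member: matches}."""
    results = {}
    with tarfile.open(ova_path) as tar:
        mf_names = [n for n in tar.getnames() if n.lower().endswith(".mf")]
        if not mf_names:
            raise ValueError("no .mf manifest in " + str(ova_path))
        with tar.extractfile(mf_names[0]) as fh:
            manifest = fh.read().decode("utf-8")
        for line in manifest.splitlines():
            match = MANIFEST_LINE.match(line.strip())
            if not match:
                continue  # skip blank or unrecognized lines
            algo, member, expected = match.groups()
            digest = hashlib.new(algo.lower())
            with tar.extractfile(member) as fh:
                # Stream large .vmdk files in 1 MiB chunks instead of reading them whole.
                for chunk in iter(lambda: fh.read(1 << 20), b""):
                    digest.update(chunk)
            results[member] = digest.hexdigest() == expected.lower()
    return results
```

For example, with a placeholder file name, list_ova_members("app.ova") would return the .ovf, .vmdk, and .mf members, and verify_manifest("app.ova") maps each listed file to True when its digest matches.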

QCOW2 is a storage format for virtual disks. QCOW stands for QEMU copy-on-write. The QCOW2 format decouples the physical storage layer from the virtual layer by adding a mapping between logical and physical blocks.
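To make the mapping between logical and physical blocks concrete, here is a small Python sketch based on the published QCOW2 header layout. It reads the first 32 bytes of the header and shows how a guest byte offset splits into an L1 table index, an L2 table index, and an offset within a cluster. It assumes standard 8-byte L2 entries (images created with the optional extended-L2 feature use 16-byte entries) and ignores compression and backing files; the function names are hypothetical.

```python
import struct

QCOW2_MAGIC = b"QFI\xfb"
# First 32 header bytes, big-endian: magic, version, backing_file_offset,
# backing_file_size, cluster_bits, size (virtual disk size in bytes).
HEADER = struct.Struct(">4sIQIIQ")


def read_qcow2_header(data):
    """Decode the fixed start of a QCOW2 header from raw bytes."""
    magic, version, _backing_off, _backing_size, cluster_bits, size = HEADER.unpack_from(data)
    if magic != QCOW2_MAGIC:
        raise ValueError("not a QCOW2 image")
    return {"version": version, "cluster_size": 1 << cluster_bits, "virtual_size": size}


def split_guest_offset(offset, cluster_bits):
    """Return (l1_index, l2_index, offset_in_cluster) for a guest byte offset."""
    cluster_size = 1 << cluster_bits
    l2_entries = cluster_size // 8  # each standard L2 entry is a 64-bit pointer
    cluster_index = offset // cluster_size
    return cluster_index // l2_entries, cluster_index % l2_entries, offset % cluster_size
```

On the Proxmox host, passing the first 32 bytes of a converted image (opened in binary mode) to read_qcow2_header would report its version, cluster size (64 KiB by default for qemu-img), and virtual size.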

This article showcases the migration of a VMware OVA to a KVM virtual machine. This involves converting the VMware vmdk to QCOW2 format and attaching it to a virtual machine in a KVM environment. I use Proxmox for the final stages and do most of the work from its console.

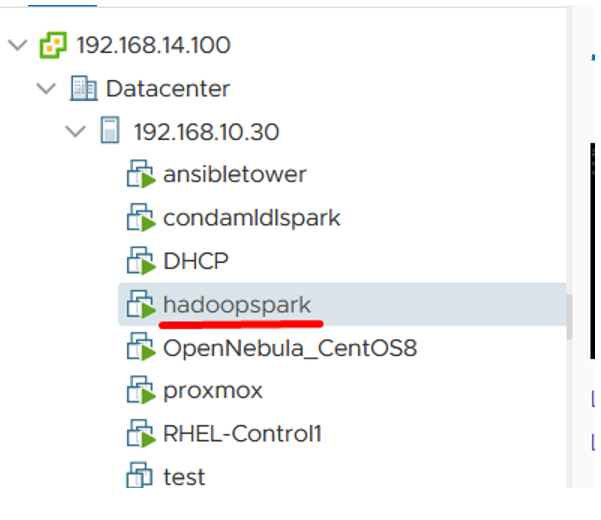

This article walks through migrating the OVA application from the Hadoop infrastructure to a KVM-based Proxmox server. The application is currently hosted on VMware infrastructure, as shown below.

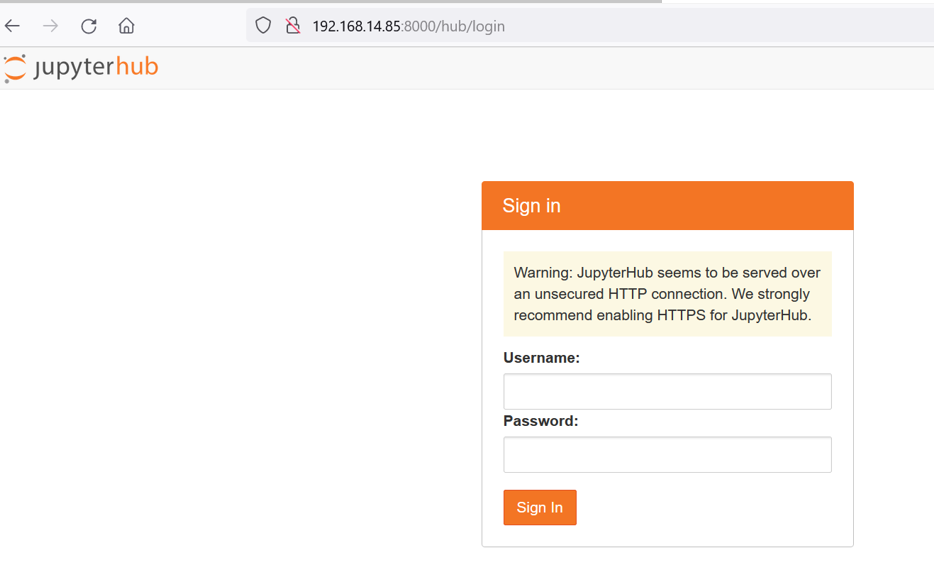

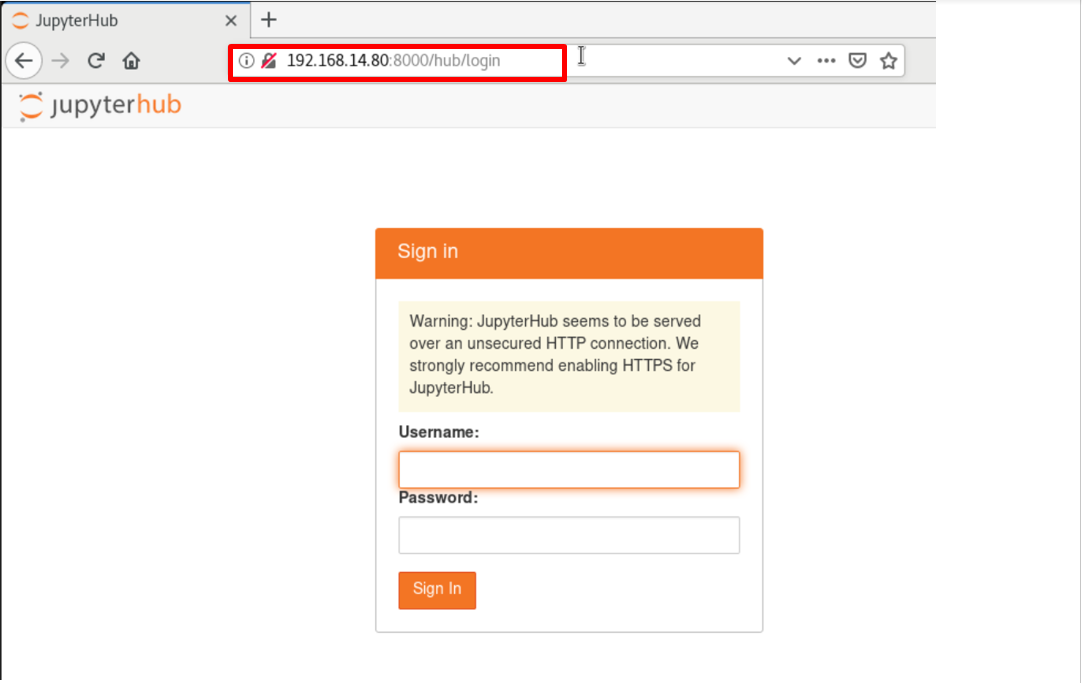

This is a JupyterHub-based application, packaged in OVA format, for running machine learning and deep learning programs. The OVA also includes a complete Hadoop infrastructure.

Let’s verify that the application runs on VMware by opening it in a browser:

It seems to work fine. Let’s now proceed to migration.

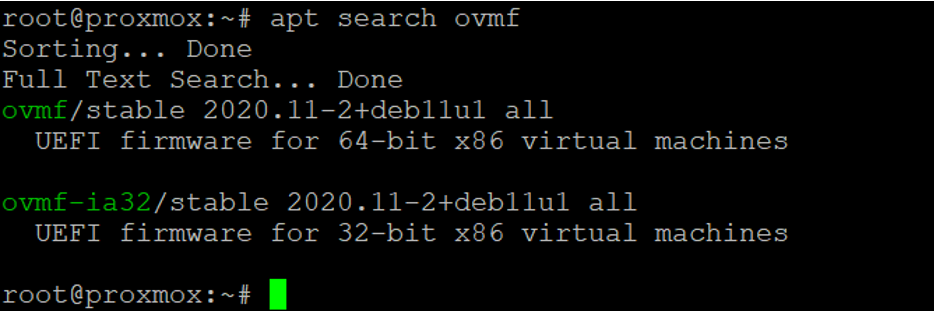

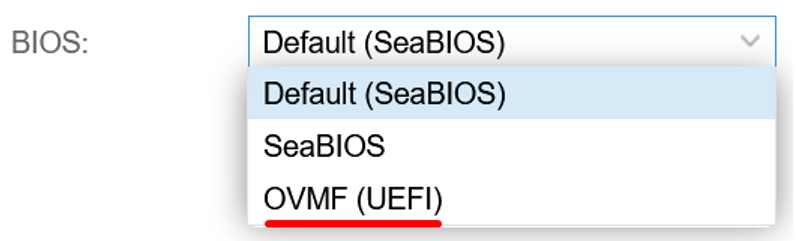

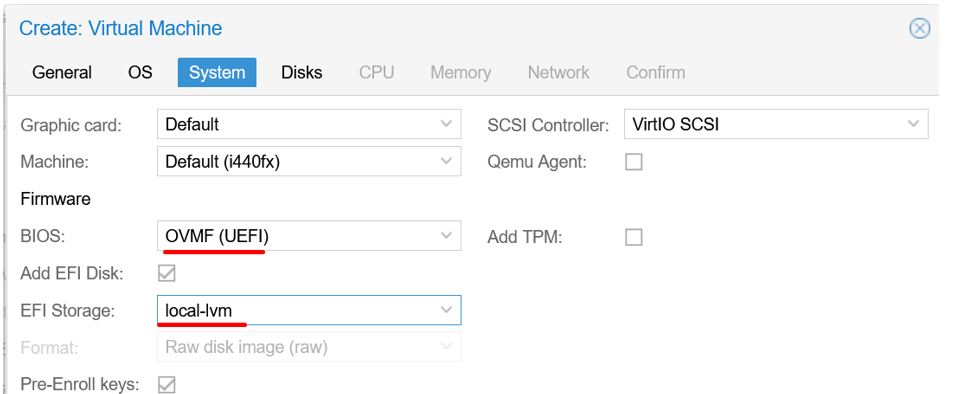

Enable UEFI Support for KVM Virtual Machines In Linux

Before creating virtual machines, we need to install the OVMF package on the KVM host system. OVMF is a port of Intel's TianoCore firmware to KVM/QEMU virtual machines. It contains sample UEFI firmware for both KVM and QEMU and allows easy debugging and experimentation with UEFI firmware, either for testing virtual machines or for using the included EFI shell. OVMF might already be installed as a dependency of KVM. If it is not, you can install it on your Proxmox server as shown below.

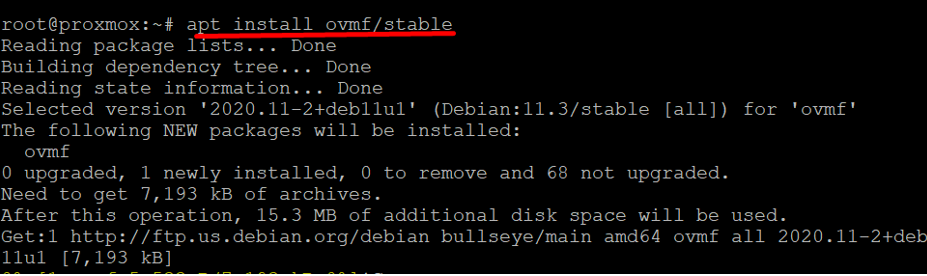

Run the command apt install ovmf/stable.

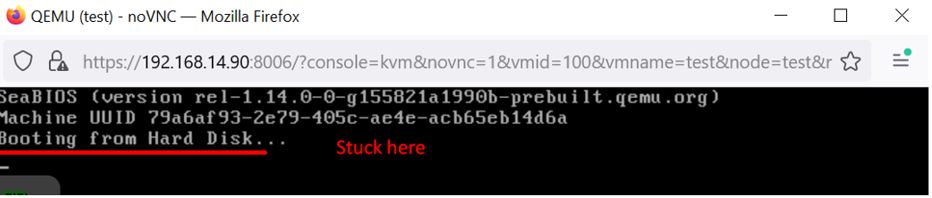

This is an essential prerequisite for migrating the .vmdk to KVM. Without it, only the BIOS option is available, and skipping this step results in a boot error.

After this package is installed, the UEFI option becomes available as well, which is essential for deploying a UEFI-based (modern) virtual machine. UEFI is often associated with “Secure Boot”, but Secure Boot is a feature of UEFI rather than another name for it.

Note: UEFI-based virtual machines fail to boot with default SeaBIOS options.
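The article selects the firmware in the Proxmox web wizard. If you prefer the host's shell, the same setting can be applied with qm. The Python sketch below only builds the command lines; the VM ID 105 and the storage name local-lvm are placeholders, and adding an EFI disk (where OVMF keeps its UEFI variables) is an assumption about how you want the VM configured.

```python
def ovmf_commands(vmid, efi_storage="local-lvm"):
    """Build qm commands that switch an existing VM to OVMF (UEFI) firmware.

    Placeholder values: adjust the VM ID and storage name to your environment.
    """
    vmid = str(int(vmid))  # Proxmox VM IDs are integers
    return [
        ["qm", "set", vmid, "--bios", "ovmf"],
        # "<storage>:1" asks Proxmox to allocate a new volume for the EFI disk on that storage.
        ["qm", "set", vmid, "--efidisk0", f"{efi_storage}:1"],
    ]
```

Each command can then be run on the Proxmox host, for example with subprocess.run(cmd, check=True).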

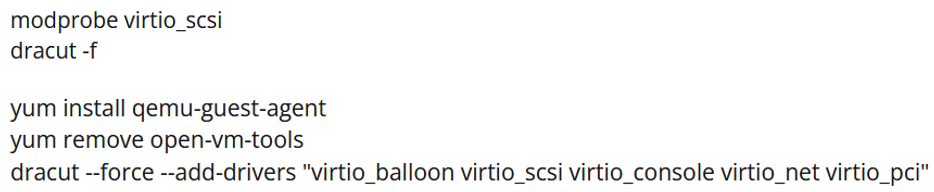

Install Necessary Packages on the Guest Virtual Machine on VMware

Before migrating to KVM, we need to complete a few prerequisites; otherwise, the migrated virtual machine will fail to boot with “Dracut Errors” and stop at a dracut prompt.

Log in as the root user to the guest virtual machine deployed from the OVA on VMware, and install or uninstall a few packages. Run the commands below:

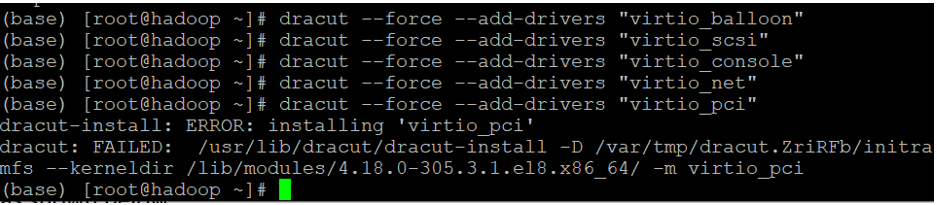

Note: The dracut commands might not run successfully on a single line. If so, run them one by one as shown below. You can ignore the “pci” error.

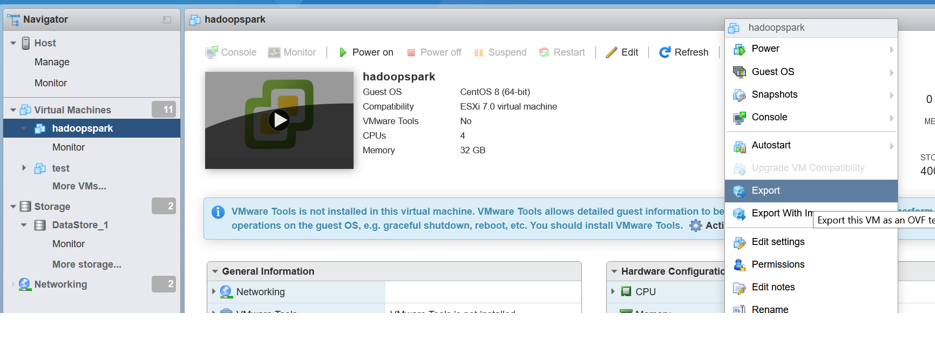
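The exact commands are in the screenshot above. A frequent cause of dracut errors after moving a guest from VMware to KVM is an initramfs that lacks the virtio drivers KVM presents to the guest. Assuming that is the problem here, the Python sketch below builds one dracut command per installed kernel that forces those drivers into the initramfs. The helper name and driver list are assumptions rather than the author's exact commands, and the image path follows the CentOS/RHEL /boot/initramfs-<version>.img naming.

```python
import os

# Assumed driver list: virtio block, SCSI, network, and PCI transport modules.
VIRTIO_DRIVERS = ("virtio_blk", "virtio_scsi", "virtio_net", "virtio_pci")


def dracut_commands(modules_root="/lib/modules", drivers=VIRTIO_DRIVERS):
    """Build one dracut command per installed kernel, adding virtio drivers to its initramfs."""
    commands = []
    for kver in sorted(os.listdir(modules_root)):
        if not os.path.isdir(os.path.join(modules_root, kver)):
            continue  # only kernel version directories are relevant
        commands.append([
            "dracut", "-f",                      # overwrite the existing image
            "--add-drivers", " ".join(drivers),  # space-separated kernel module names
            f"/boot/initramfs-{kver}.img",       # CentOS/RHEL initramfs naming
            kver,
        ])
    return commands
```

Each command would be run as root on the guest before powering it off; generating them one per kernel also fits the note above about running dracut one command at a time.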

Power off the virtual machine once the above commands run successfully, and export the corresponding vmdk file from vSphere.

Click Export.

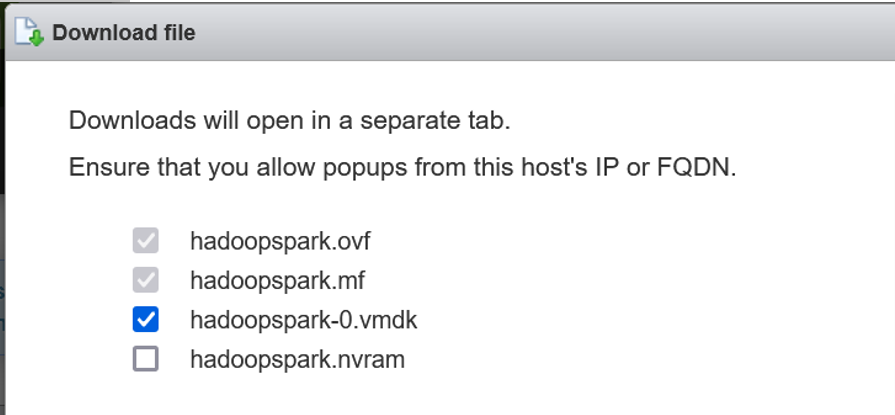

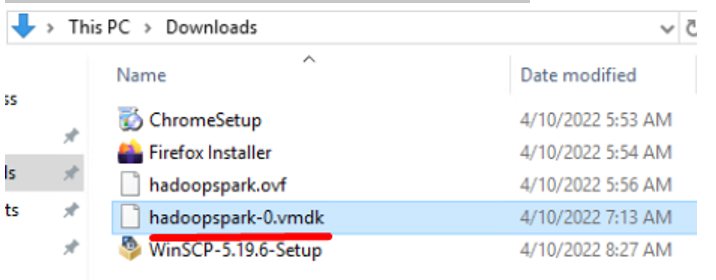

This downloads the vmdk file to your local machine.

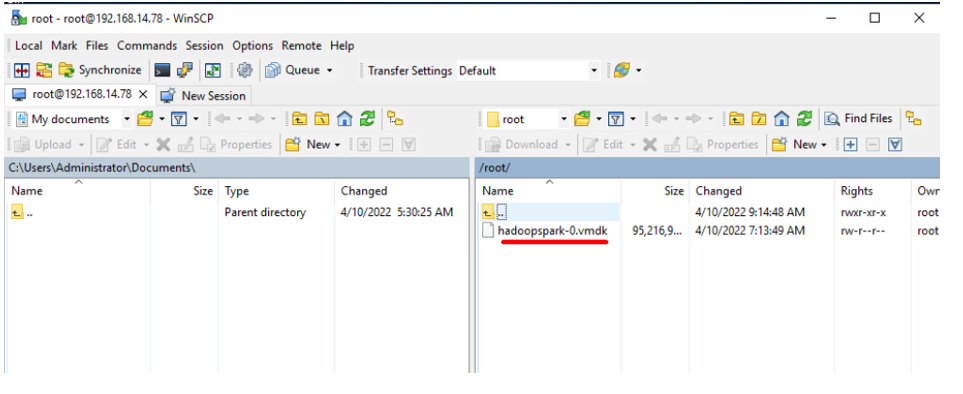

Next, upload the vmdk to the Proxmox server instance, where we will use it to create the KVM virtual machine.

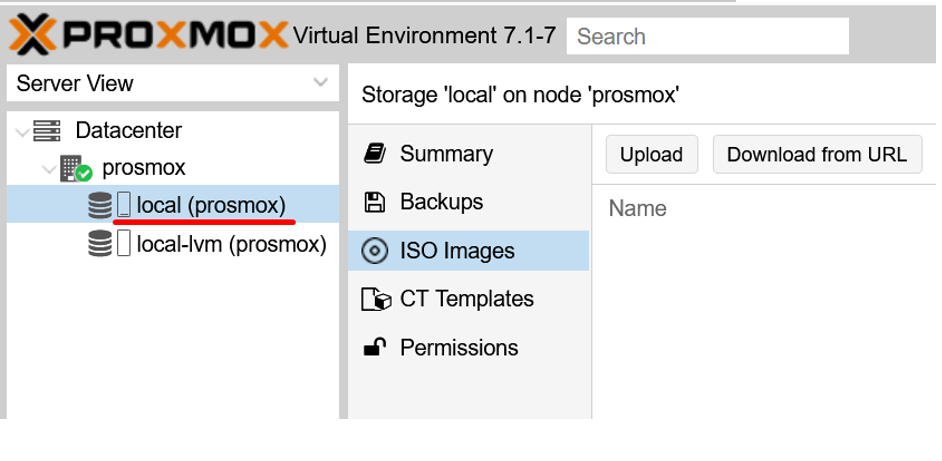

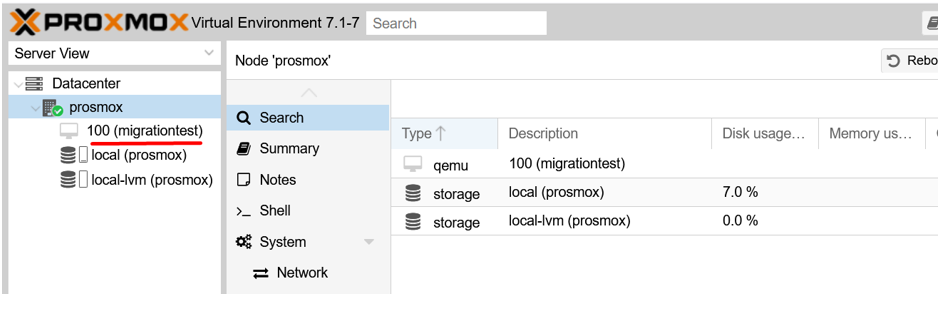

Let’s log in to the Proxmox server and prepare it for this activity.

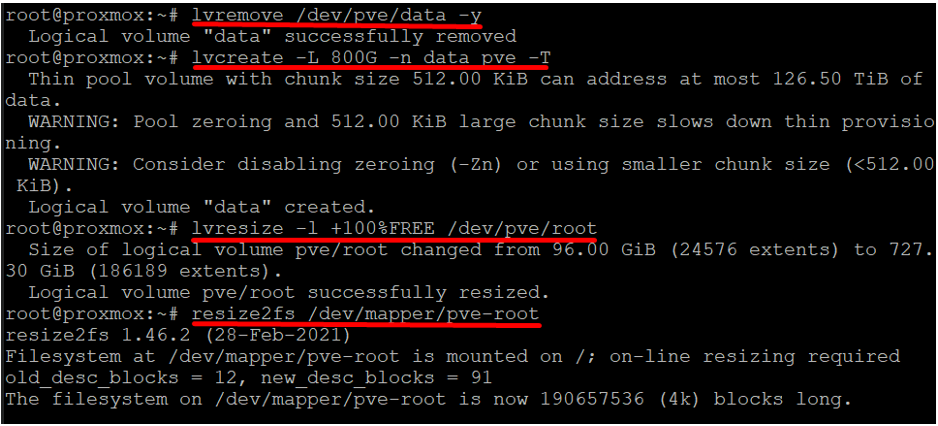

By default, the root volume has only 100G of space, which cannot accommodate a large vmdk. Hence, we need to resize the root volume of Proxmox.

I allocated 1.5TB to Proxmox during installation; however, its root volume gets only 100G. Unless you resize the root volume to add more space, you will run into space issues and will not be able to proceed.
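Before uploading, it helps to check whether the target filesystem can hold both the uploaded vmdk and the converted image. The sketch below is a rough, conservative estimate: it assumes the QCOW2 file may grow to the full virtual disk size and adds a hypothetical 10% safety margin; the function name and margin are illustrative.

```python
import shutil


def has_room_for_conversion(target_dir, vmdk_bytes, virtual_disk_bytes, margin=0.10):
    """Return True if target_dir can hold the vmdk plus a fully grown QCOW2 copy.

    Assumption: the QCOW2 output may reach the full virtual disk size; margin is illustrative.
    """
    free = shutil.disk_usage(target_dir).free
    needed = (vmdk_bytes + virtual_disk_bytes) * (1 + margin)
    return free >= needed
```

The vmdk size can be taken from os.path.getsize, and qemu-img info reports the disk's "virtual size".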

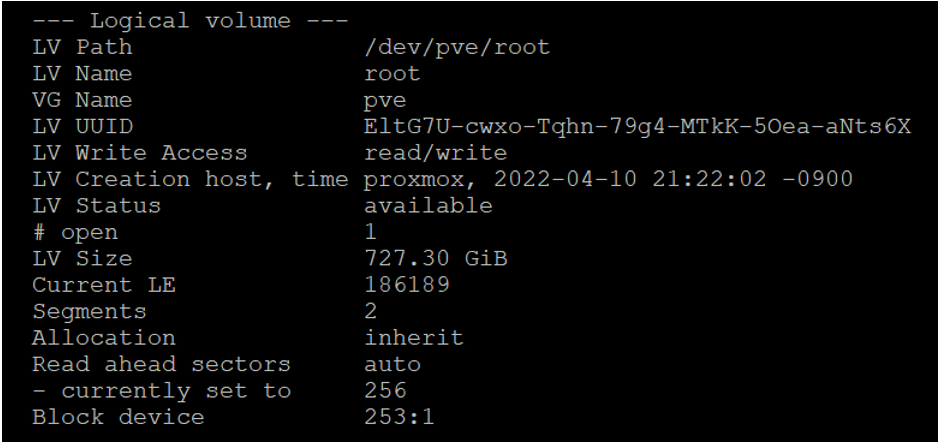

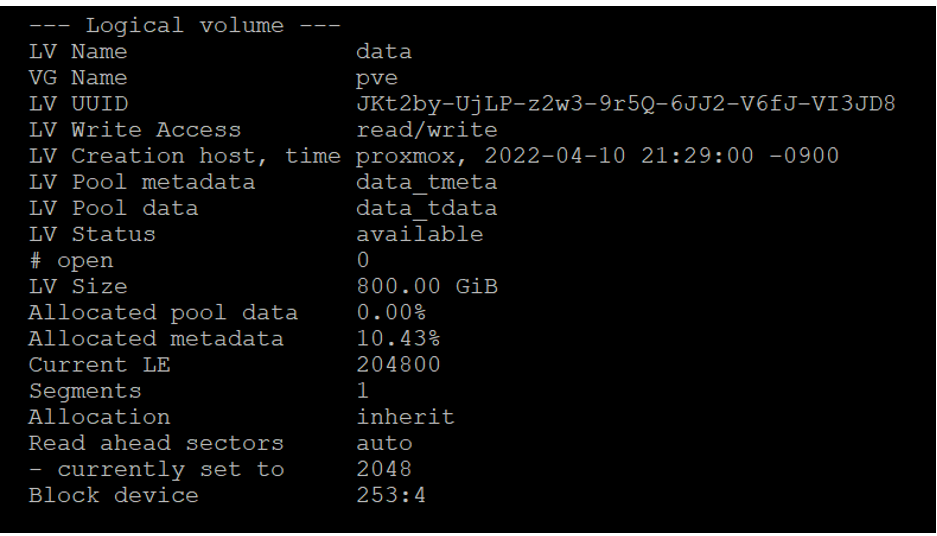

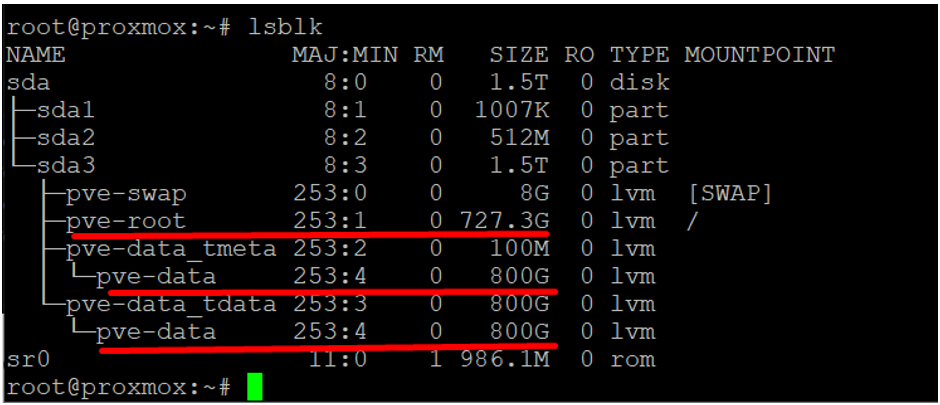

I allocated 800G to data and the rest to root, as shown above. We can verify this with the lvdisplay command.

The output shows 727.3G assigned to the root volume and 800G to data, so both root and data now have ample disk space.
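lvdisplay prints a verbose block per volume. For a quick scripted check, lvs can print just names and sizes. The sketch below assumes the default Proxmox volume group name pve and uses the lvs options --units g (binary gigabytes) and --nosuffix so that sizes parse as plain numbers; the helper names are hypothetical.

```python
import subprocess


def parse_lvs(output):
    """Turn `lvs --noheadings --nosuffix -o lv_name,lv_size` output into {name: size}."""
    sizes = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) == 2:  # skip blank or unexpected lines
            name, size = fields
            sizes[name] = float(size)
    return sizes


def lv_sizes_gib(vg="pve"):
    """Run lvs on the Proxmox host and return logical volume sizes in GiB."""
    out = subprocess.run(
        ["lvs", "--noheadings", "--nosuffix", "--units", "g",
         "-o", "lv_name,lv_size", vg],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_lvs(out)
```

On the Proxmox host, lv_sizes_gib() should list root and data alongside any other volumes in the group.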

Enable Thin LVM Automatic Extension

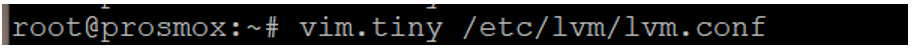

Edit /etc/lvm/lvm.conf (both settings are in its activation section) and change the default value of “thin_pool_autoextend_threshold” from 100 to 70, and set “thin_pool_autoextend_percent” to 20.

This means that whenever a pool's usage exceeds 70%, the pool is extended by another 20%. For a 1G pool, using 700M triggers a resize to 1.2G; when usage exceeds 840M, the pool is extended to 1.44G, and so on.
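The arithmetic in the example above can be checked with a few lines of Python. This is a model, not LVM code: it treats 1G as 1000M to match the example, uses integer megabytes, and counts usage at or above the threshold as a trigger so that 700M on a 1G pool extends it, as the text describes. In practice, LVM's monitoring (dmeventd) performs the extension one step at a time; the loop stands in for those repeated checks.

```python
def autoextended_size(pool_mb, used_mb, threshold=70, percent=20):
    """Model thin-pool autoextension: grow by `percent` while usage >= `threshold` percent."""
    if threshold >= 100 or percent <= 0:
        return pool_mb  # a threshold of 100 disables autoextension; percent <= 0 cannot grow
    while used_mb * 100 >= pool_mb * threshold:
        # Integer megabytes; always grow by at least 1 so the loop terminates.
        pool_mb += max(1, pool_mb * percent // 100)
    return pool_mb
```

autoextended_size(1000, 700) returns 1200 and autoextended_size(1000, 841) returns 1440, matching the 1.2G and 1.44G figures above.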

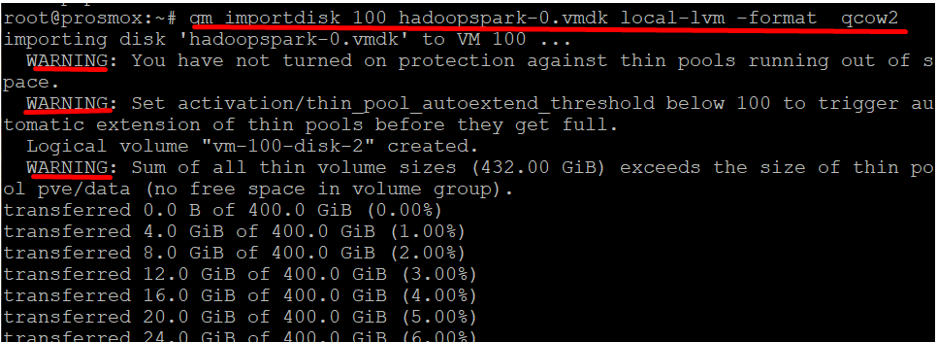

Otherwise, you will get a warning such as the one below.

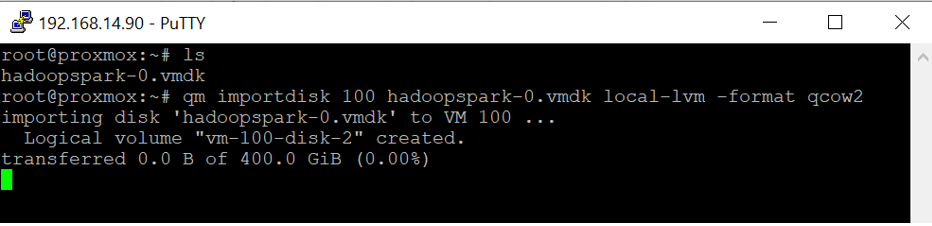

Steps to convert VMDK to QCOW2 and attach it to a virtual machine

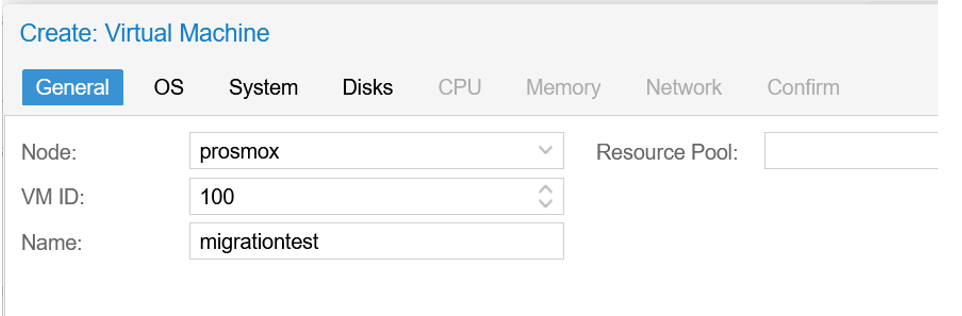

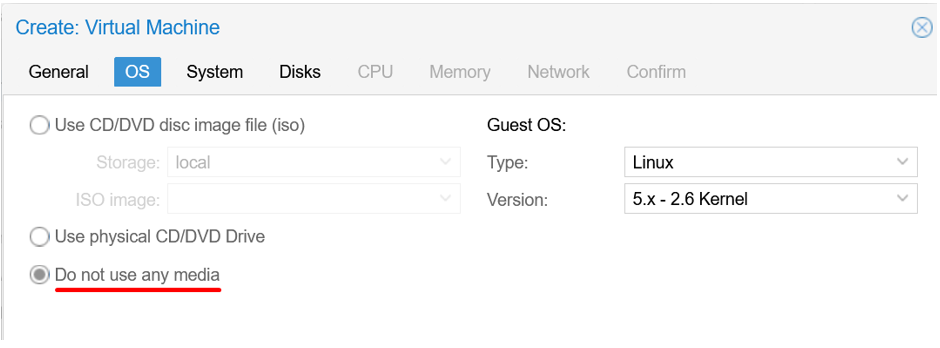

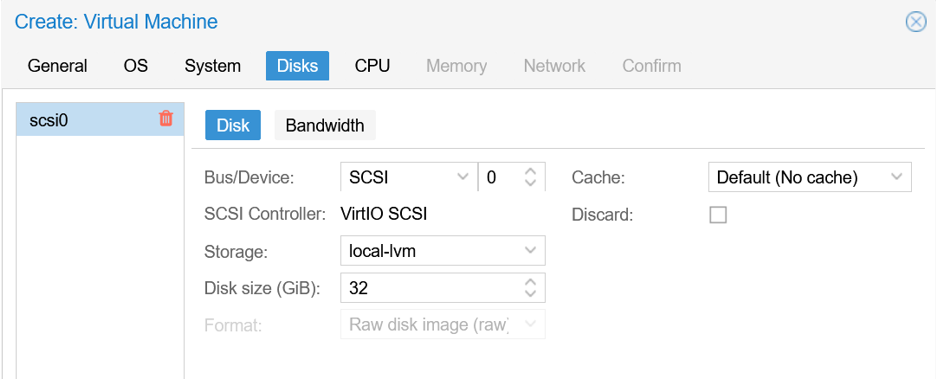
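The conversion itself is a single qemu-img convert call. As a sketch, the Python helper below builds and runs it; the file names are placeholders, and -p only adds a progress bar.

```python
import subprocess


def qemu_img_convert_cmd(src_vmdk, dst_qcow2):
    """Build a qemu-img command that converts a VMDK into a QCOW2 image."""
    return [
        "qemu-img", "convert",
        "-p",           # show a progress bar
        "-f", "vmdk",   # input format
        "-O", "qcow2",  # output format
        src_vmdk, dst_qcow2,
    ]


def convert(src_vmdk, dst_qcow2):
    """Run the conversion on the Proxmox host; raises CalledProcessError on failure."""
    subprocess.run(qemu_img_convert_cmd(src_vmdk, dst_qcow2), check=True)
```

Afterwards, qemu-img info on the output file should report file format: qcow2.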

Let’s create a blank virtual machine and assign the converted QCOW2 disk to it. Follow the steps below:

Step 1:

Step 2:

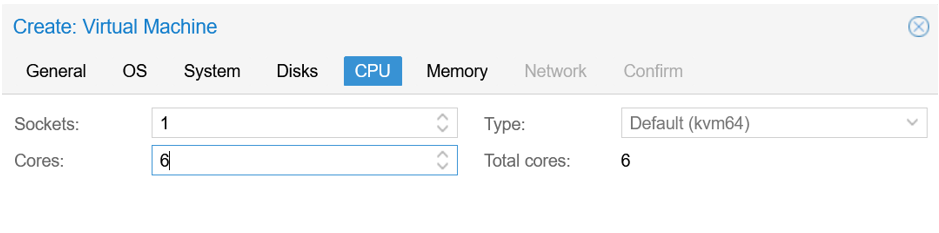

Step 3:

Step 4:

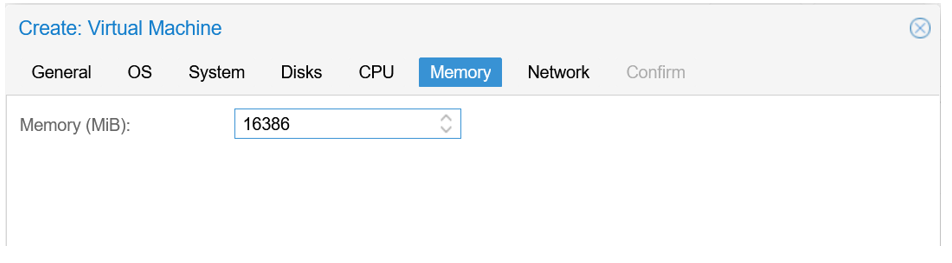

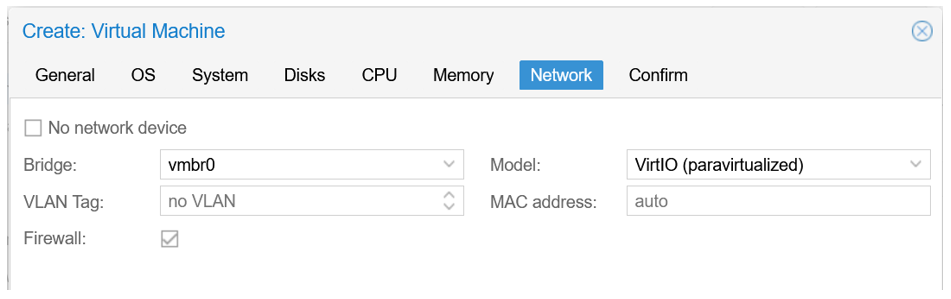

Step 5:

Step 6:

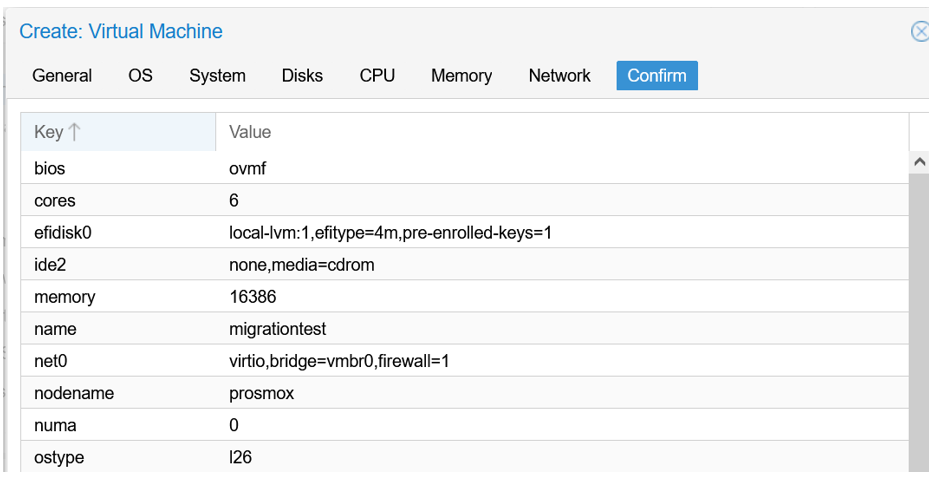

Step 7:

Step 8:

The virtual machine is deployed now.

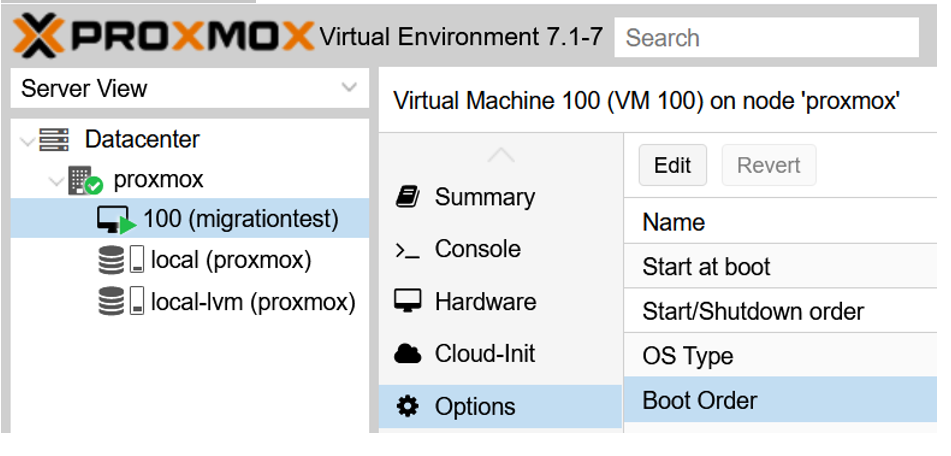

Note the VM ID in the image above; we will use it to attach the converted QCOW2 image.
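A converted image is typically attached to a VM with qm importdisk. The Python sketch below builds that command; the VM ID 105, the image path, and the storage name local are placeholders. Keeping the image in QCOW2 format requires a file-based storage, such as a directory storage with the Disk image content type enabled; on LVM-thin storage such as local-lvm, the disk is stored as a raw volume instead.

```python
def importdisk_cmd(vmid, qcow2_path, storage="local"):
    """Build the Proxmox command that imports a disk image into VM `vmid`.

    Placeholders: VM ID, image path, and storage name are illustrative.
    """
    return ["qm", "importdisk", str(int(vmid)), qcow2_path, storage,
            "--format", "qcow2"]
```

qm importdisk adds the image to the VM as an unused disk, which is why it still has to be added to the VM afterwards.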

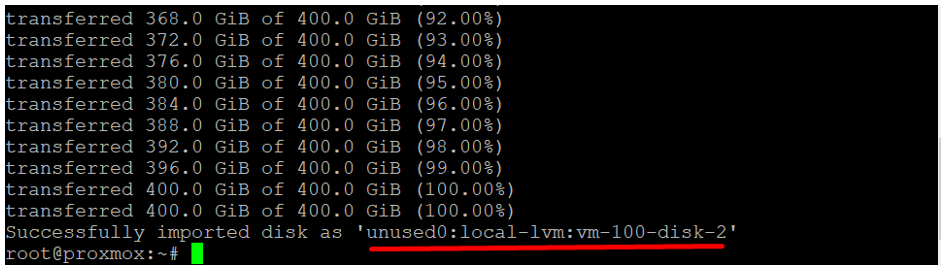

This message will appear when the command is completed.

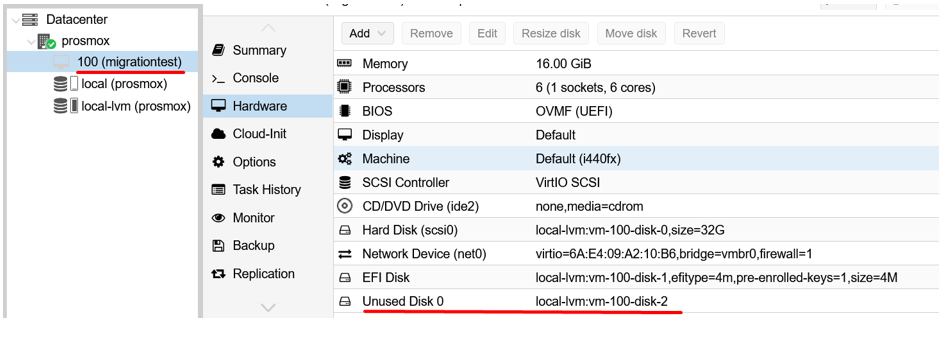

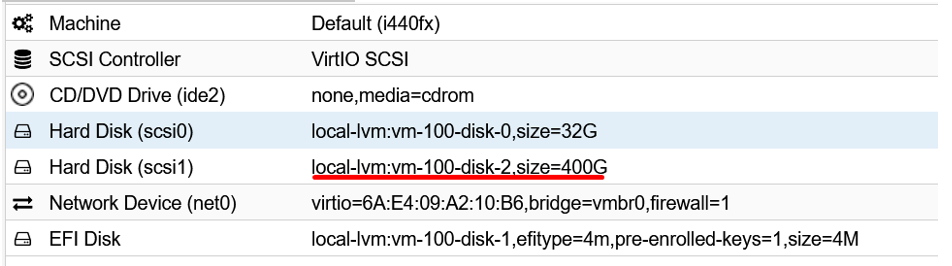

The imported disk now appears on the VM as an unused disk.

Double-click the disk and click the “Add” button to attach it to the VM. The 400G disk is now added to the VM.

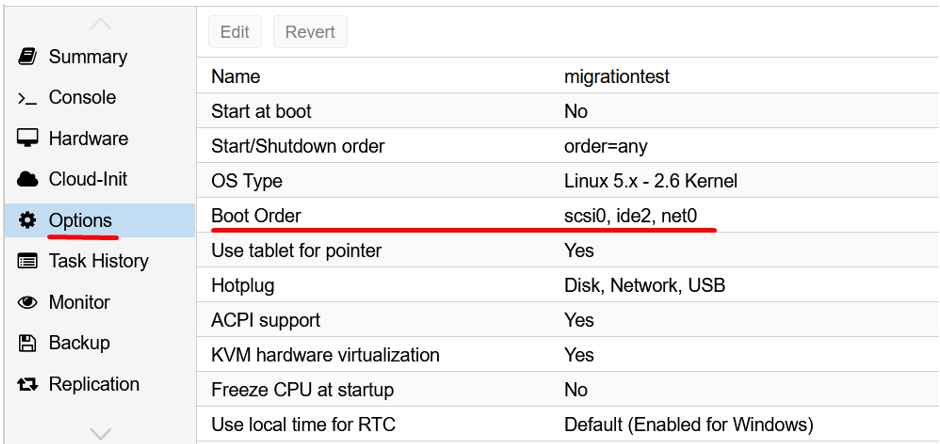

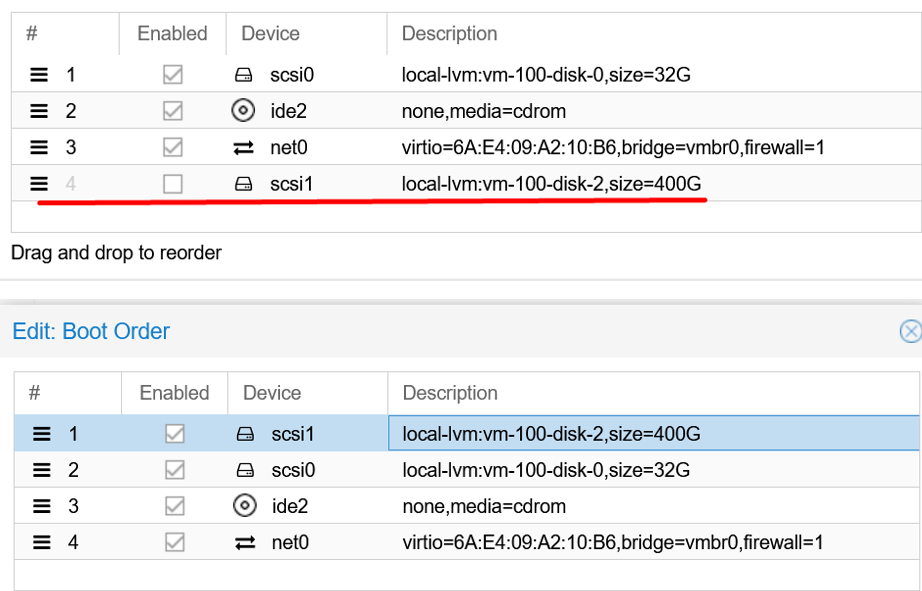

Change the boot order: go to “Options” and double-click “Boot Order”.

Drag option 4, scsi1, to the top.
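The Boot Order dialog stores its result as a boot: order=... entry in the VM configuration. The same setting can be applied from the host shell with qm set; the sketch below builds that command, with the VM ID as a placeholder. Because the device list is separated by semicolons, quote the value if you type it into a shell rather than passing a list to subprocess.

```python
def boot_order_cmd(vmid, devices):
    """Build a qm command that sets the boot order, first device first."""
    return ["qm", "set", str(int(vmid)), "--boot", "order=" + ";".join(devices)]
```

For example, boot_order_cmd(105, ["scsi1", "scsi0"]) puts the imported disk ahead of the blank one.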

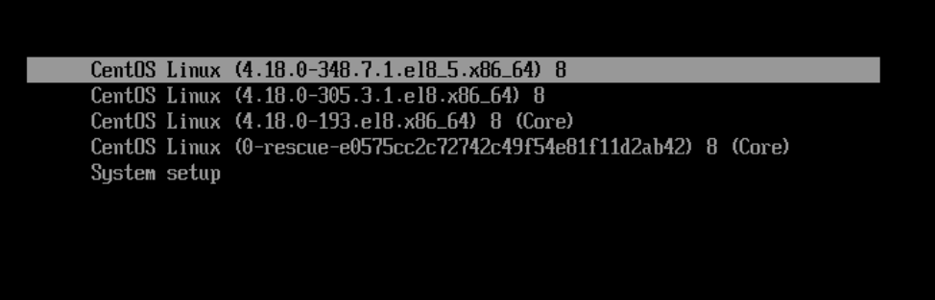

Boot the virtual machine

As shown below, the virtual machine boots CentOS 8, even though we selected the “No-OS” option in the Proxmox wizard when creating it; the operating system comes from the imported disk, not from installation media.

Setting Up the Network

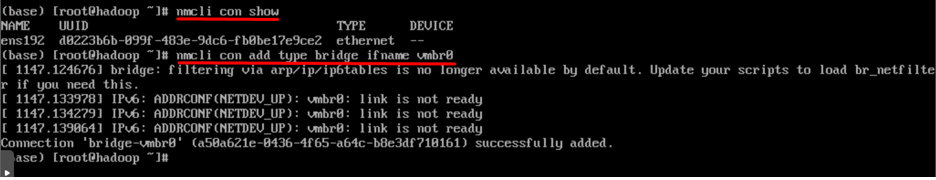

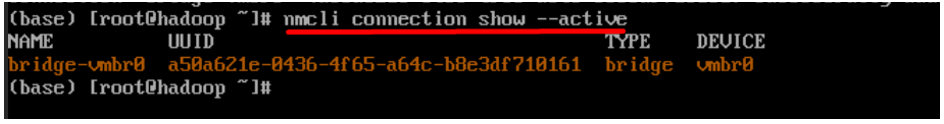

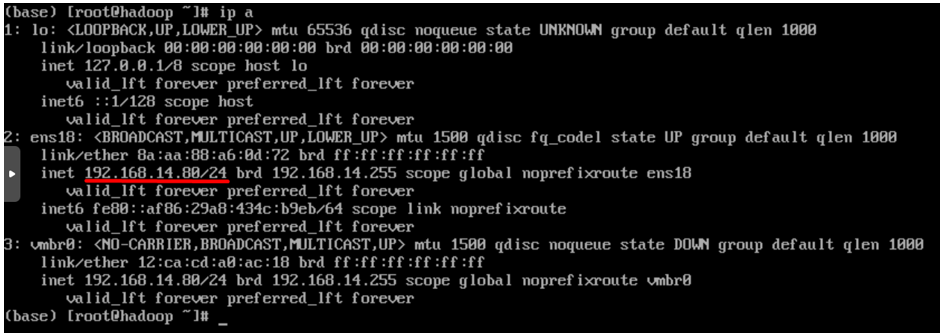

Adding a bridge to the Ethernet device

Note: If things still don’t work, run “nmtui”, then delete the interface and re-add it with the static IP address.

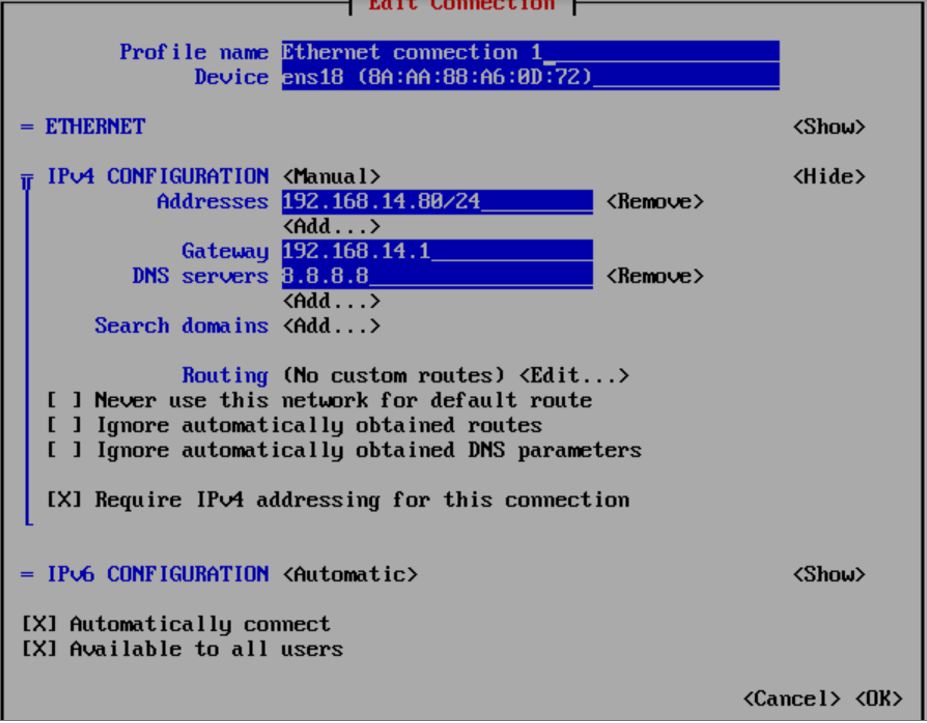

Edit the connection and add the IP address using the “nmtui” command.

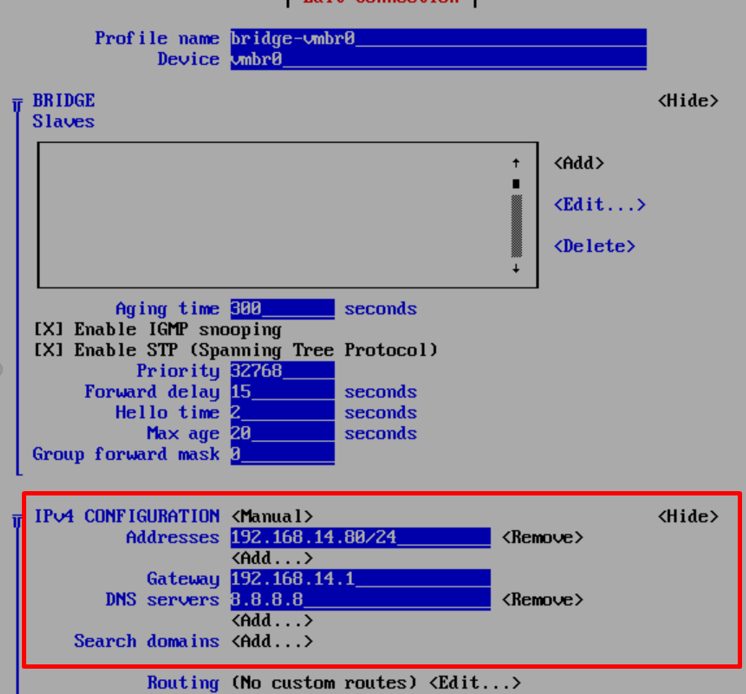

Edit the bridge.
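nmtui is interactive. On the CentOS 8 guest, the same static address can also be set non-interactively with nmcli, NetworkManager's command-line client. The sketch below only builds the commands; the connection name br0 and the 192.0.2.x addresses (a documentation range) are placeholders for your own values.

```python
def nmcli_static_ip_cmds(connection, address_cidr, gateway, dns=()):
    """Build nmcli commands that give a connection a static IPv4 address, then reactivate it."""
    modify = [
        "nmcli", "connection", "modify", connection,
        "ipv4.method", "manual",         # stop using DHCP
        "ipv4.addresses", address_cidr,  # address with prefix, e.g. "192.0.2.50/24"
        "ipv4.gateway", gateway,
    ]
    if dns:
        modify += ["ipv4.dns", " ".join(dns)]  # multiple servers, space-separated
    return [modify, ["nmcli", "connection", "up", connection]]
```

Running both commands as root applies the address and brings the connection up with it.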

We are now done with the migration. Let’s verify that the application is accessible.
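Besides opening the application in a browser, a short script can confirm that it answers over HTTP. The sketch assumes JupyterHub is listening on its default port 8000; the IP address in the usage example is a placeholder for the migrated VM's static address.

```python
import http.client
import urllib.request


def is_reachable(url, timeout=5.0):
    """Return True if `url` answers with an HTTP status below 400 (redirects are followed)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status < 400
    except (OSError, http.client.HTTPException):
        # URLError, HTTPError (4xx/5xx), refused connections, and timeouts are OSError subclasses.
        return False
```

For example, is_reachable("http://192.0.2.50:8000/") should return True once the migrated VM is up and JupyterHub is running.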

The application is now accessible on KVM, so the migration has completed successfully.