Modernizing a legacy application such as Cognos Analytics by moving it into containers can be challenging, but following a few best practices yields several benefits, including:

- Simpler deployment of multiple environments: development, test, and production.

- Easier upgrades and rollbacks, because container images are tagged with versions.

- Easier scaling up and down with demand.

- Automatic fault tolerance.

In this blog I will demonstrate how to deploy Cognos Analytics not only as containers but as Swarm services across a three-node cluster consisting of one master and two worker nodes. Once the services are deployed, I will show how to scale one of them to handle more users. I am quite familiar with IBM's implementation of Cognos on Cloud Pak for Data, which is one of IBM's approaches to containerizing Cognos Analytics; the other is their own Cognos as a Service offering. Since IBM offers Cloud Pak for Data, it is unclear whether they will provide Cognos Analytics as independent containers outside of that implementation. Be aware that IBM will not truly support any other custom container approach and may ask that any issue encountered be reproduced in an ordinary installation for troubleshooting. With that out of the way, on to the good stuff.

Content Store Container

You could certainly leverage an existing database platform instead of a container and skip this step. Cognos Analytics supports a handful of database vendors for storing its metadata, and the best option I have found so far for a lightweight database (best meaning free, for the purposes of my demonstration) is actually Microsoft SQL Server 2019, which is offered as a fully functional container under the Developer edition license. This process will create a container to host the Content Store database. Comparable options that are not free include Informix and, of course, DB2. As always, check the supported software pages to ensure that the database platform you choose is supported.

First, create a volume in your Docker environment to persist the data. There are several ways to do this, the most common being a named volume. To work in a Swarm cluster, though, the volume has to be backed by a filesystem that can be accessed from any cluster node. In this case I use an NFS share, which requires that each node in the cluster be set up with access to the share beforehand. I'll skip the details of setting up an NFS share since that information is easy to find on the internet.

$ docker volume create --driver local --opt type=nfs --opt o=nfsvers=4,addr=master,rw --opt device=:/opt/ibm/cognos_data cognos_data
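The same NFS options have to be repeated in --mount syntax when the content store service is created later, and because the o= value itself contains commas, it must be wrapped in double quotes inside the comma-separated --mount string. A small sketch that builds that string; the function name nfs_mount_arg is hypothetical, and the src=cognos_data field follows Docker's own NFS example rather than the service command used later in this post:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a --mount value for `docker service create` that
# mirrors `docker volume create --driver local --opt type=nfs ...`.
# Docker parses --mount as CSV, so the o= option (which contains commas) is
# wrapped in double quotes.
nfs_mount_arg() {
  local volume="$1" target="$2" server="$3" export_path="$4"
  printf 'type=volume,src=%s,dst=%s,volume-driver=local,volume-opt=type=nfs,"volume-opt=o=nfsvers=4,addr=%s,rw",volume-opt=device=:%s' \
    "$volume" "$target" "$server" "$export_path"
}
```

For example: $ docker service create --name cognos-db --network cognet --mount "$(nfs_mount_arg cognos_data /var/opt/mssql master /opt/ibm/cognos_data)" us.gcr.io/project-289415/cognosdb:v11.1.7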

Download the base MSSQL 2019 image and initialize the container. Note that by not specifying a license type (the MSSQL_PID environment variable), I am enabling the Developer edition by default.

$ docker pull mcr.microsoft.com/mssql/server:2019-latest

$ docker run --name mssql2019 -v cognos_data:/var/opt/mssql -e 'ACCEPT_EULA=Y' -e 'SA_PASSWORD=yourStrong(!)Password' -p 1433:1433 -d mcr.microsoft.com/mssql/server:2019-latest

$ docker logs --follow mssql2019
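Rather than watching the log by eye, a script can wait for SQL Server to report that it is ready. This is a sketch under two assumptions: that the 2019 image logs the line "SQL Server is now ready for client connections", and that polling every 5 seconds is acceptable. The function names are hypothetical, and DOCKER can be pointed at a stub for testing:

```shell
#!/usr/bin/env bash
# Succeed if the SQL Server readiness message appears in the log text on stdin.
mssql_ready() {
  grep -q 'SQL Server is now ready for client connections'
}

# Poll a container's logs until SQL Server is ready or the timeout (seconds) expires.
wait_for_mssql() {
  local container="$1" timeout="${2:-120}" waited=0
  until "${DOCKER:-docker}" logs "$container" 2>&1 | mssql_ready; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "SQL Server in $container not ready after ${timeout}s" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
}
```

For example: $ wait_for_mssql mssql2019 300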

Customize the container to create a dedicated database for the Content and Audit stores.

$ docker exec -it mssql2019 bash

mssql@14de7da50a13:/$ cd /opt/mssql-tools/bin

mssql@14de7da50a13:/opt/mssql-tools/bin$ ./sqlcmd -S localhost -U sa -P 'yourStrong(!)Password' -d master

1> create database content_store

2> create database audit_store

3> go

1> exit

mssql@14de7da50a13:/opt/mssql-tools/bin$ exit
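The interactive sqlcmd session above can also be run non-interactively with sqlcmd's -Q option, which is handy when scripting the setup. A sketch, assuming the same container name, tools path and sa password; the IF DB_ID(...) IS NULL guards make it safe to re-run, and create_cognos_databases is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the Content Store and Audit Store databases in one
# non-interactive sqlcmd call. Databases that already exist are skipped.
create_cognos_databases() {
  local container="$1" sa_password="$2"
  "${DOCKER:-docker}" exec "$container" /opt/mssql-tools/bin/sqlcmd \
    -S localhost -U sa -P "$sa_password" -d master \
    -Q "IF DB_ID('content_store') IS NULL CREATE DATABASE content_store; IF DB_ID('audit_store') IS NULL CREATE DATABASE audit_store;"
}
```

For example: $ create_cognos_databases mssql2019 'yourStrong(!)Password'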

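Now commit the modified container to an image, then stop and remove the original container. Note that docker commit does not capture data in mounted volumes, so the two databases themselves live on the cognos_data share rather than in the image.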

$ docker commit mssql2019 cognosdb:v11.1.7

$ docker stop mssql2019

$ docker rm mssql2019

I highly recommend re-tagging the image and pushing it to a registry. In my case I used Google Container Registry; after setting that up, I used the following commands:

$ docker tag cognosdb:v11.1.7 us.gcr.io/project-289415/cognosdb:v11.1.7

$ docker push us.gcr.io/project-289415/cognosdb:v11.1.7
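Since the same tag-and-push pair is repeated for every image in this post, it can be wrapped in a small helper. A sketch, assuming the us.gcr.io/project-289415 prefix used in these examples (override REGISTRY for your own project); publish_image is a hypothetical name:

```shell
#!/usr/bin/env bash
# Registry prefix used throughout the examples; override to match your project.
REGISTRY="${REGISTRY:-us.gcr.io/project-289415}"

# Hypothetical helper: re-tag a local image under the registry prefix and push it.
publish_image() {
  local local_ref="$1"               # e.g. cognosdb:v11.1.7
  local remote_ref="$REGISTRY/$local_ref"
  "${DOCKER:-docker}" tag "$local_ref" "$remote_ref" &&
    "${DOCKER:-docker}" push "$remote_ref"
}
```

For example: $ publish_image content-manager:v11.1.7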

Installations of Cognos Analytics

Many containerization approaches that I've seen perform the installation inside the container as it starts. This is not a best practice because it adds to container startup time, and Cognos Analytics already takes at least 5 minutes, and often up to 15 minutes, to start without having to install software. Instead, perform separate installations of the data, application and web tiers onto a bastion host, which in my case was the master. This does mean that the usual installation requirements must be met on that host; those can also be found in the supported software environments pages linked above. These are temporary installations that will be copied into the container images at build time rather than installed into the container at startup, saving at least 5 minutes of startup time. I performed three installations to the following paths:

- /opt/ibm/cognos/analytics/cm

- /opt/ibm/cognos/analytics/app

- /opt/ibm/cognos/analytics/web

Configuration of Cognos Analytics

Because the installations were performed outside of any container image, many configuration changes can be applied before building the images, which simplifies configuration during container startup. Settings such as the authentication source and the content store connection will not change each time the data tier service is created in a Swarm. In this example, I plan to use Google Identity Platform as an authentication source via OpenID Connect integration. Containers can reference each other by Swarm service name without worrying about individual containers. This means that to configure things like the content store connection ahead of time, I need to decide up front on the service names for the content store, content manager, application tier and web server, and use those names as the host values. For example, in my demonstration I use cognos-db as the service name for the MSSQL database container and use it as the host name in the following configuration of the data tier installation:

- Configure the Google Identity Platform namespace

- Change the content store connection type to Microsoft SQL

- Change the content store host to cognos-db, the name that will be given to the service

- Set the user name to sa and the password to yourStrong(!)Password

- Set the Database name to content_store which was created in the previous step

- Optionally configure an audit database for audit_store

- Export the configuration to cogstartup.xml.tmpl and exit

- Remove the following files to maintain as small a footprint as possible for the image and to help avoid confusion in the event that template processing fails:

  - temp/*

  - data/*

  - logs/*

  - configuration/certs/CAM*

  - configuration/cogstartup.xml

- Copy the Microsoft SQL Server JDBC driver to the drivers folder

Similarly, update the configuration of the application tier. Note that I elected to use content-manager as the service name for the data tier:

- Change the host in Content Manager URIs to content-manager

- Change the configuration group contact host to content-manager

- Optionally configure an audit database for audit_store

- Add a Mobile Store configuration of type SQL Server with the same settings as the Content Store

- Optionally configure a mail server connection to an external SMTP server

- Add a Notification Store configuration of type SQL Server with the same settings as the Content Store

- Save and export the configuration to cogstartup.xml.tmpl and exit (note: it will complain that it cannot connect to content-manager at this point, so just save it as plain text)

- Remove the following files to maintain as small a footprint as possible for the image and to help avoid confusion in the event that template processing fails:

  - temp/*

  - data/*

  - logs/*

  - configuration/certs/CAM*

  - configuration/cogstartup.xml

- Copy any required JDBC drivers to the drivers folder
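The clean-up steps in both lists above are identical, so they can be scripted and run against each installation on the bastion host before it is copied into a build context. A sketch; clean_cognos_install is a hypothetical name, and the check for a configuration directory is only a basic guard against pointing it at the wrong path:

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove temp, data, logs, CAM* certificates and the
# exported cogstartup.xml from a Cognos installation, keeping cogstartup.xml.tmpl.
clean_cognos_install() {
  local root="$1"
  if [ ! -d "$root/configuration" ]; then
    echo "not a Cognos installation: $root" >&2
    return 1
  fi
  # Unmatched globs stay literal, which rm -f silently ignores.
  rm -rf "$root"/temp/* "$root"/data/* "$root"/logs/*
  rm -rf "$root"/configuration/certs/CAM* "$root"/configuration/cogstartup.xml
}
```

For example: $ clean_cognos_install /opt/ibm/cognos/analytics/cm followed by $ clean_cognos_install /opt/ibm/cognos/analytics/app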

In this case the gateway installation doesn't require any configuration, because I will not be using Windows Authentication with IIS to perform single sign-on; instead, Google Identity Platform, configured as an OpenID Connect namespace, will handle single sign-on.

Entry-point Script

When containers are started within a Swarm cluster, they are given dynamic host names as well as IP addresses. Unfortunately, the exported configuration files have these values hard coded in them, so the containers require a script that updates the configuration and then starts the application. I've used the open source confd utility, a lightweight configuration management tool, to perform simple string replacement using environment variables. This requires modifying the exported configuration files and creating a template, both of which I will cover shortly. The script itself, which I've named docker-entrypoint.sh, is pretty simple:

#!/bin/bash
export IPADDRESS=$(ifconfig eth0 | awk '$1 == "inet" {print $2; exit}')
test -n "$IPADDRESS" || exit 1
confd -onetime -backend env || exit 1
cd /opt/ibm/cognos/analytics/cm/bin64/ || exit 1
./cogconfig.sh -s
sleep infinity

It captures the container's dynamic IP address in an environment variable, since one isn't already defined; the container's host name is already available in the standard HOSTNAME environment variable. It then runs confd to substitute the host name and IP address into the configuration template, which is covered in the next section. Finally, it starts IBM Cognos Analytics and then sleeps indefinitely, a common practice when running legacy applications in a container that do not run in the foreground. The copy of the script used for the application tier image must change into /opt/ibm/cognos/analytics/app/bin64/ instead.
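Because a failed confd run would otherwise leave Cognos starting with a missing or half-rendered configuration, the entry-point script could verify the output before calling cogconfig.sh. A sketch of such a check, assuming that a correctly rendered cogstartup.xml contains no leftover {{ template actions; check_rendered_config is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail if the rendered file is missing, empty, or still
# contains unrendered confd {{ ... }} template actions.
check_rendered_config() {
  local file="$1"
  if [ ! -s "$file" ]; then
    echo "missing or empty: $file" >&2
    return 1
  fi
  if grep -q '{{' "$file"; then
    echo "unrendered template actions remain in $file" >&2
    return 1
  fi
}
```

In docker-entrypoint.sh this would sit right after the confd line, for example: check_rendered_config /opt/ibm/cognos/analytics/cm/configuration/cogstartup.xml || exit 1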

Configuration Templates

Two files are required for confd to update the configuration dynamically when the entry-point script runs: the edited Cognos configuration template itself, and a resource file that tells confd which values to substitute and where to save the result. Update the Cognos configuration templates (cogstartup.xml.tmpl) exported from the Configuration UI by replacing the hard-coded host name and IP address with confd template values.
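One way to make those template edits is a sed pass over each exported file. With confd's env backend, the HOSTNAME and IPADDRESS environment variables are read as the keys /hostname and /ipaddress, so the template references them with getv. This sketch assumes the bastion host's name and IP address appear verbatim in the exported cogstartup.xml.tmpl and that GNU sed is available; the function names are hypothetical, and the result should be reviewed by hand:

```shell
#!/usr/bin/env bash
# Escape the dots in a host name or IP address so sed matches them literally.
escape_dots() {
  printf '%s' "$1" | sed 's/\./\\./g'
}

# Hypothetical helper: replace the build host's name and IP address in a
# template file with confd getv lookups (GNU sed, edits the file in place).
templatize_cogstartup() {
  local file="$1" host_re ip_re
  host_re="$(escape_dots "$2")"
  ip_re="$(escape_dots "$3")"
  # \b is a GNU sed word boundary, so 10.0.1.5 does not match inside 10.0.1.50.
  sed -i \
    -e "s|\b${ip_re}\b|{{getv \"/ipaddress\"}}|g" \
    -e "s|\b${host_re}\b|{{getv \"/hostname\"}}|g" \
    "$file"
}
```

For example: $ templatize_cogstartup cogstartup.xml.tmpl "$(hostname)" "$(hostname -i)"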

The second file, cogstartup.xml.toml, simply tells confd to substitute the values of the two keys into the template and save the resulting file as configuration/cogstartup.xml under the tier's installation path. Because that path differs by tier, two copies are required: one for the content manager container and another for the application tier container.

Content manager copy (data_tier/cogstartup.xml.toml):

[template]
src = "cogstartup.xml.tmpl"
dest = "/opt/ibm/cognos/analytics/cm/configuration/cogstartup.xml"
keys = [
  "HOSTNAME",
  "IPADDRESS",
]

Application tier copy (app_tier/cogstartup.xml.toml):

[template]
src = "cogstartup.xml.tmpl"
dest = "/opt/ibm/cognos/analytics/app/configuration/cogstartup.xml"
keys = [
  "HOSTNAME",
  "IPADDRESS",
]
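Because the two resource files differ only in the tier directory of dest, they can be generated rather than maintained by hand. A sketch; write_confd_toml is a hypothetical helper and accepts only the cm and app tiers used here:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the confd template resource for one tier.
write_confd_toml() {
  local tier="$1" out="$2"
  case "$tier" in
    cm|app) ;;
    *) echo "tier must be cm or app" >&2; return 1 ;;
  esac
  cat > "$out" <<EOF
[template]
src = "cogstartup.xml.tmpl"
dest = "/opt/ibm/cognos/analytics/$tier/configuration/cogstartup.xml"
keys = [
  "HOSTNAME",
  "IPADDRESS",
]
EOF
}
```

For example: $ write_confd_toml cm data_tier/cogstartup.xml.toml and $ write_confd_toml app app_tier/cogstartup.xml.toml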

Data Tier Container

Now that all the pieces are in place, the data tier container can be created. In a standard installation, additional data tier instances operate in standby mode, ready to take over when the active one fails. A data tier container running in a Docker Swarm cluster can, and should, have a health check defined in its image. The Swarm master(s) then monitor the state of the container and restart it whenever it is found to be unhealthy, which makes it unnecessary to scale the data tier beyond a single container to achieve failover. This avoids some of the complexity of configuring a distributed service architecture, which would otherwise involve updating the configuration in each container with additional host names and restarting them.

I recommend creating a directory to serve as the Docker build context. Create the data_tier directory and copy the data tier installation, /opt/ibm/cognos/analytics/cm, into it. Also copy the configuration templates and the entry-point script into it so that you have the following:

data_tier

- cm/

- cogstartup.xml.tmpl

- cogstartup.xml.toml

- docker-entrypoint.sh
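The copy steps can be scripted so that the data tier and application tier contexts are assembled the same way. A sketch that assumes the templates and entry-point script sit in the current directory; make_build_context is a hypothetical name. cp -a keeps the installation's directory name (cm or app), which is what the Dockerfile's COPY expects, but it will nest a second copy if the target already exists:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a build context containing the installation
# directory plus any extra files (templates, entry-point script).
make_build_context() {
  local context="$1" install_dir="$2"
  shift 2
  mkdir -p "$context" || return 1
  cp -a "$install_dir" "$context/" || return 1
  local f
  for f in "$@"; do
    cp -a "$f" "$context/" || return 1
  done
}
```

For example: $ make_build_context data_tier /opt/ibm/cognos/analytics/cm cogstartup.xml.tmpl cogstartup.xml.toml docker-entrypoint.sh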

The last piece needed is the Dockerfile to build the container.

FROM centos:7
RUN yum -y update && \
    yum install -y glibc glibc.i686 \
    libstdc++ libstdc++.i686 \
    libX11 libX11.i686 \
    libXext libXext.i686 \
    openmotif openmotif.i686 \
    wget curl net-tools bind-utils && \
    yum clean all
USER root
RUN mkdir -p /opt/ibm/cognos/analytics/cm
COPY cm /opt/ibm/cognos/analytics/cm
ADD https://github.com/kelseyhightower/confd/releases/download/v0.16.0/confd-0.16.0-linux-amd64 /usr/local/bin/confd
RUN chmod +x /usr/local/bin/confd && \
    mkdir -p /etc/confd/conf.d /etc/confd/templates
COPY cogstartup.xml.toml /etc/confd/conf.d/
COPY cogstartup.xml.tmpl /etc/confd/templates/
COPY docker-entrypoint.sh /opt/ibm/cognos/
RUN chmod +x /opt/ibm/cognos/docker-entrypoint.sh
CMD /opt/ibm/cognos/docker-entrypoint.sh
HEALTHCHECK --start-period=5m --interval=30s --timeout=5s CMD curl -f http://localhost:9300/p2pd/servlet | grep Running || exit 1

The required libraries are installed, the installation is copied, the confd utility is downloaded and set up, the entry-point script is copied and, lastly, a health check is defined. It may be necessary to adjust the health check parameters if your container takes longer to start. Build the image, making sure to tag it with the version:

$ docker build --tag=content-manager:v11.1.7 .

Again, I highly recommend re-tagging and pushing the image to a registry for safe keeping and convenience:

$ docker tag content-manager:v11.1.7 us.gcr.io/project-289415/content-manager:v11.1.7

$ docker push us.gcr.io/project-289415/content-manager:v11.1.7

Application Tier Container

This is the container that handles the majority of requests, such as running reports, so it is the one that makes the most sense to scale, possibly more so than the web server. Again, I recommend creating a directory to serve as the Docker build context. Create the app_tier directory and copy the application tier installation, /opt/ibm/cognos/analytics/app, into it. Also copy the configuration templates and the entry-point script into it so that you have the following:

app_tier

- app/

- cogstartup.xml.tmpl

- cogstartup.xml.toml

- docker-entrypoint.sh

The last piece needed is the Dockerfile to build the container.

FROM centos:7
RUN yum -y update && \
    yum install -y glibc glibc.i686 \
    libstdc++ libstdc++.i686 \
    libX11 libX11.i686 \
    libXext libXext.i686 \
    openmotif openmotif.i686 \
    wget curl net-tools bind-utils && \
    yum clean all
USER root
RUN mkdir -p /opt/ibm/cognos/analytics/app
COPY app /opt/ibm/cognos/analytics/app
ADD https://github.com/kelseyhightower/confd/releases/download/v0.16.0/confd-0.16.0-linux-amd64 /usr/local/bin/confd
RUN chmod +x /usr/local/bin/confd && \
    mkdir -p /etc/confd/conf.d /etc/confd/templates
COPY cogstartup.xml.toml /etc/confd/conf.d/
COPY cogstartup.xml.tmpl /etc/confd/templates/
COPY docker-entrypoint.sh /opt/ibm/cognos/
RUN chmod +x /opt/ibm/cognos/docker-entrypoint.sh
CMD /opt/ibm/cognos/docker-entrypoint.sh
HEALTHCHECK --start-period=5m --interval=30s --timeout=5s CMD curl -f http://localhost:9300/bi/ | grep "Cognos Analytics" || exit 1

The required libraries are installed, the installation is copied, the confd utility is downloaded and set up, the entry-point script is copied and, lastly, a health check is defined. It may be necessary to adjust the health check parameters if your container takes longer to start. Build the image, making sure to tag it with the version:

$ docker build --tag=application-tier:v11.1.7 .

Again, I highly recommend re-tagging and pushing the image to a registry for safe keeping and convenience:

$ docker tag application-tier:v11.1.7 us.gcr.io/project-289415/application-tier:v11.1.7

$ docker push us.gcr.io/project-289415/application-tier:v11.1.7

Web Tier Container

With IBM Cognos Analytics, the web tier is a little more than simply a web server. In fact, a web server isn't strictly required, since you could expose the application tier directly to the web. It is still common, and a best practice, to use one, because it offloads much of the static content processing from the application tier. Since Apache is open source and free, using an Apache container makes a lot of sense for my web tier.

I recommend creating a directory to serve as the Docker build context. Create the web_tier directory and copy the gateway installation, /opt/ibm/cognos/analytics/web, into it.

web_tier

- web/

Next, pull the standard httpd image and run it to capture a couple of configuration files that will need to be customized:

$ docker pull httpd:2.4

$ docker run --rm httpd:2.4 cat /usr/local/apache2/conf/httpd.conf > my-httpd.conf

$ docker run --rm httpd:2.4 cat /usr/local/apache2/conf/extra/httpd-ssl.conf > httpd-ssl.conf

Edit my-httpd.conf and uncomment the following modules along with the Include line for the SSL configuration:

LoadModule socache_shmcb_module modules/mod_socache_shmcb.so

LoadModule ssl_module modules/mod_ssl.so

LoadModule rewrite_module modules/mod_rewrite.so

LoadModule expires_module modules/mod_expires.so

LoadModule proxy_module modules/mod_proxy.so

LoadModule proxy_http_module modules/mod_proxy_http.so

LoadModule proxy_balancer_module modules/mod_proxy_balancer.so

LoadModule deflate_module modules/mod_deflate.so

LoadModule slotmem_shm_module modules/mod_slotmem_shm.so

LoadModule slotmem_plain_module modules/mod_slotmem_plain.so

LoadModule lbmethod_byrequests_module modules/mod_lbmethod_byrequests.so

Include conf/extra/httpd-ssl.conf
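Those edits can be made with sed instead of by hand. A sketch, assuming the stock httpd 2.4 configuration, where disabled modules appear as #LoadModule lines, and GNU sed; enable_httpd_modules is a hypothetical name, and each argument is a module name without the _module suffix:

```shell
#!/usr/bin/env bash
# Hypothetical helper: uncomment the LoadModule line for each named module and
# the SSL Include line in an httpd.conf (GNU sed, edits the file in place).
enable_httpd_modules() {
  local conf="$1"; shift
  local m
  for m in "$@"; do
    # The trailing space keeps "proxy" from also matching proxy_http_module.
    sed -i "s|^#\(LoadModule ${m}_module \)|\1|" "$conf"
  done
  sed -i 's|^#\(Include conf/extra/httpd-ssl.conf\)|\1|' "$conf"
}
```

For example: $ enable_httpd_modules my-httpd.conf socache_shmcb ssl rewrite expires proxy proxy_http proxy_balancer deflate slotmem_shm slotmem_plain lbmethod_byrequests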

Next, I need a valid key and certificate to run the web site over SSL. I'm just going to generate a self-signed pair and live with the browser warning, but an actual company will likely have its own procedures for obtaining certificates.

$ openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout server.key -out server.crt

Now add the Cognos Analytics configuration items from the provided template to the end of httpd-ssl.conf, just before the closing </VirtualHost> tag.

<IfModule mod_expires.c>
    <FilesMatch "\.(jpe?g|png|gif|js|css|json|html|woff2?|template)$">
        ExpiresActive On
        ExpiresDefault "access plus 1 day"
    </FilesMatch>
</IfModule>

<Directory /opt/ibm/cognos/analytics/web>
    <IfModule mod_deflate.c>
        AddOutputFilterByType DEFLATE text/html application/json text/css application/javascript
    </IfModule>
    Options Indexes MultiViews
    AllowOverride None
    Require all granted
</Directory>

<Proxy balancer://mycluster>
    BalancerMember http://application-tier:9300 route=1
</Proxy>

Alias /ibmcognos /opt/ibm/cognos/analytics/web/webcontent

RewriteEngine On

# Send default URL to service
RewriteRule ^/ibmcognos/bi/($|[^/.]+(\.jsp)(.*)?) balancer://mycluster/bi/$1$3 [P]
RewriteRule ^/ibmcognos/bi/(login(.*)?) balancer://mycluster/bi/$1 [P]

# Rewrite Event Studio static references
RewriteCond %{HTTP_REFERER} v1/disp [NC,OR]
RewriteCond %{HTTP_REFERER} (ags|cr1|prompting|ccl|common|skins|ps|cps4)/(.*)\.css [NC]
RewriteRule ^/ibmcognos/bi/(ags|cr1|prompting|ccl|common|skins|ps|cps4)/(.*) /ibmcognos/$1/$2 [PT,L]

# Rewrite Saved-Output and Viewer static references
RewriteRule ^/ibmcognos/bi/rv/(.*)$ /ibmcognos/rv/$1 [PT,L]

# Define cognos location
<Location /ibmcognos>
    RequestHeader set X-BI-PATH /ibmcognos/bi/v1
</Location>

# Route CA REST service requests through proxy with load balancing
<Location /ibmcognos/bi/v1>
    ProxyPass balancer://mycluster/bi/v1
</Location>

Note that I've used the planned service name, application-tier, as the proxy balancer host. That is the only change required unless you want to use a different virtual directory. The following Dockerfile is used to build the image:

FROM httpd:2.4
COPY ./my-httpd.conf /usr/local/apache2/conf/httpd.conf
COPY ./httpd-ssl.conf /usr/local/apache2/conf/extra/httpd-ssl.conf
COPY ./server.key /usr/local/apache2/conf/server.key
COPY ./server.crt /usr/local/apache2/conf/server.crt
USER root
RUN mkdir -p /opt/ibm/cognos/analytics/web
COPY /web /opt/ibm/cognos/analytics/web

Build the image, making sure to tag it with the version:

$ docker build -t cognos-apache2:v11.1.7 .

Again, I highly recommend re-tagging and pushing the image to a registry for safe keeping and convenience:

$ docker tag cognos-apache2:v11.1.7 us.gcr.io/project-289415/cognos-apache2:v11.1.7

$ docker push us.gcr.io/project-289415/cognos-apache2:v11.1.7

Creating the cluster

With the images created, it is fairly simple to create an IBM Cognos Analytics environment in a Swarm cluster. I would use a separate Swarm cluster for each of the development, test and production environments. First, initialize the Swarm master:

$ docker swarm init --advertise-addr $(hostname -i)

The above will output a command similar to the following, which should be copied and executed on every worker node:

$ docker swarm join --token SWMTKN-1-49nj1cmql0jkz5s954yi3oex3nedyz0fb0xx14ie39trti4wxv-8vxv8rssmk743ojnwacrr2e7c 192.168.99.100:2377

Next, a very important step is to create the overlay network, which is what allows containers on different nodes to communicate and to resolve each other by service name:

$ docker network create --driver overlay cognet

Before creating the Swarm services, I had to pull all of the images I created onto each node with the following commands:

$ docker pull us.gcr.io/project-289415/cognosdb:v11.1.7

$ docker pull us.gcr.io/project-289415/content-manager:v11.1.7

$ docker pull us.gcr.io/project-289415/application-tier:v11.1.7

$ docker pull us.gcr.io/project-289415/cognos-apache2:v11.1.7
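The pulls can be looped over on each node. A sketch using the image names from this post; pull_all_images is a hypothetical name. Alternatively, docker service create --with-registry-auth forwards the manager's registry credentials to the workers so they can pull the images themselves:

```shell
#!/usr/bin/env bash
# Images used by the four services in this post.
IMAGES=(
  us.gcr.io/project-289415/cognosdb:v11.1.7
  us.gcr.io/project-289415/content-manager:v11.1.7
  us.gcr.io/project-289415/application-tier:v11.1.7
  us.gcr.io/project-289415/cognos-apache2:v11.1.7
)

# Hypothetical helper: pull every image, continue past failures, and report
# a non-zero status if any pull failed.
pull_all_images() {
  local image failed=0
  for image in "${IMAGES[@]}"; do
    "${DOCKER:-docker}" pull "$image" || failed=1
  done
  return "$failed"
}
```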

Then create the services, starting with the content store and finishing with the web server. Note the --mount option on the content store service, which mounts the NFS share so that data persists across restarts.

$ docker service create --name cognos-db --network cognet --mount type=volume,dst=/var/opt/mssql,volume-driver=local,volume-opt=type=nfs,\"volume-opt=o=nfsvers=4,addr=master-1\",volume-opt=device=:/opt/ibm/cognos_data us.gcr.io/project-289415/cognosdb:v11.1.7

$ docker service ls

ID             NAME        MODE         REPLICAS   IMAGE              PORTS
u4q1nho6zg0d   cognos-db   replicated   1/1        cognosdb:v11.1.7

$ docker service create --name content-manager --network cognet us.gcr.io/project-289415/content-manager:v11.1.7

$ docker service ls

ID             NAME              MODE         REPLICAS   IMAGE                     PORTS
u4q1nho6zg0d   cognos-db         replicated   1/1        cognosdb:v11.1.7
w7b9505vjngf   content-manager   replicated   1/1        content-manager:v11.1.7

$ docker service create --name application-tier --network cognet us.gcr.io/project-289415/application-tier:v11.1.7

$ docker service ls

ID             NAME               MODE         REPLICAS   IMAGE                      PORTS
y3xag00nx3lk   application-tier   replicated   1/1        application-tier:v11.1.7
9gtej1kluvj6   cognos-db          replicated   1/1        cognosdb:v11.1.7
o7vrx1r8yc7j   content-manager    replicated   1/1        content-manager:v11.1.7

$ docker service create --name web-tier --network cognet --publish 443:443 us.gcr.io/project-289415/cognos-apache2:v11.1.7

$ docker service ls

ID             NAME               MODE         REPLICAS   IMAGE                      PORTS
u4q1505vyc7j   web-tier           replicated   1/1        cognos-apache2:v11.1.7
y3xag00nx3lk   application-tier   replicated   1/1        application-tier:v11.1.7
9gtej1kluvj6   cognos-db          replicated   1/1        cognosdb:v11.1.7
o7vrx1r8yc7j   content-manager    replicated   1/1        content-manager:v11.1.7
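Rather than re-running docker service ls until every service shows full replicas, the check can be scripted against its formatted output. A sketch, assuming the {{.Name}} {{.Replicas}} format fields; all_replicas_ready is a hypothetical name and treats any line whose running count differs from the desired count as not ready:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "NAME RUNNING/DESIRED" lines on stdin and succeed
# only if every service is running all of its desired replicas.
all_replicas_ready() {
  awk 'NF >= 2 { split($2, r, "/"); if (r[1] != r[2]) bad = 1 } END { exit bad + 0 }'
}
```

For example: $ docker service ls --format '{{.Name}} {{.Replicas}}' | all_replicas_ready && echo "all services running"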

This is when it really gets good. I can scale the application tier to multiple containers, and Swarm will do its best to balance them across the nodes:

$ docker service scale application-tier=2

$ docker service ps application-tier

ID             NAME                 IMAGE                      NODE       DESIRED STATE   CURRENT STATE            ERROR   PORTS
wz3m1tf9b497   application-tier.1   application-tier:v11.1.7   worker-1   Running         Running 3 minutes ago
8dp309oowwnx   application-tier.2   application-tier:v11.1.7   worker-2   Running         Running 12 seconds ago
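To confirm that the replicas actually landed on different nodes, the NODE and CURRENT STATE columns can be summarized. A sketch, assuming a | separated --format string; running_nodes is a hypothetical name that counts distinct nodes with at least one task in the Running state:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "NODE|CURRENT STATE" lines on stdin and print how
# many distinct nodes have a running task.
running_nodes() {
  awk -F'|' '$2 ~ /^Running/ && !seen[$1]++ { n++ } END { print n + 0 }'
}
```

For example: $ docker service ps application-tier --format '{{.Node}}|{{.CurrentState}}' | running_nodes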

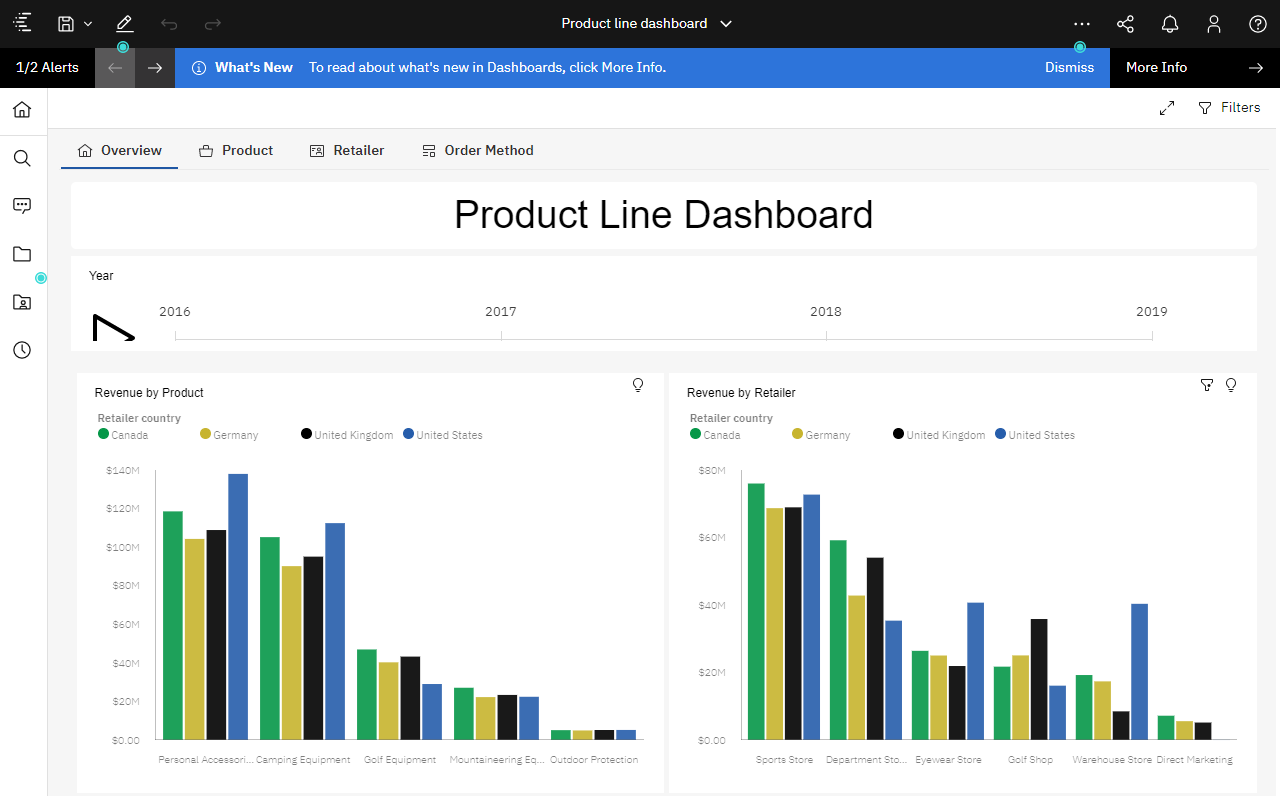

Now that all services are running, I can log in with my Google account.

I've loaded the basic samples, which rely solely on uploaded data sets, and am able to open them. If additional data source drivers are included when building the images (preferably JDBC drivers, to avoid an installation process), it should also be possible to connect to other data sources, including BigQuery.

Caveats

There are several caveats with this approach, many of which are simply the result of running within containers.

- Importing and exporting content will be challenging, since I did not connect the deployment location to a share.

- Using a share introduces a single point of failure, but then again so does the single master node in my example.

- Start times can vary widely depending on configuration, so adjust the container health check parameters accordingly.

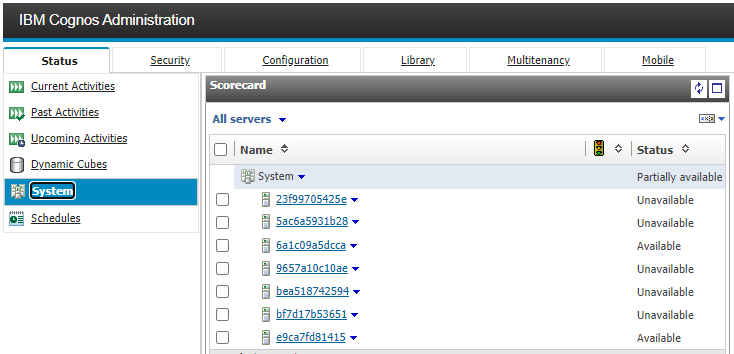

- While dynamic containers yields elasticity it also presents an unusual challenge in that Cognos Analytics automatically registers each one and will require clean up on restarts as can be seen in the following screen shot:

Notice the unavailable systems in the above listing. I have to remove those every time a container is stopped which shouldn't be too often in a production system.
