Introduction

The Planning Analytics API is a powerful tool that lets you drive Planning Analytics programmatically, with much of the same functionality as the web interface. APIs make it easier to integrate your data with other systems and to automate processes such as backups, which improves efficiency.

This blog focuses on using API calls to move files to and from Planning Analytics as a Service database storage. It provides step-by-step instructions for many common functions. You are also welcome to use these commands as a starting point for writing your own requests to suit your individual requirements.

In this blog, we use Postman, a widely used API development platform, because it lets us test each command individually before adding it to an application. However, you are welcome to use whichever API tool your company prefers.

Jump to a Section

Part 1 – Preparing to use APIs

Part 2 – Authentication

Part 3 – Single Part File Management

Part 4 – File Management Best Practices

Part 5 – Multi Part File Management

Part 1 - Preparing to use APIs

To use REST APIs to connect to a Planning Analytics as a Service instance, we first need to gather some values from the web interface. In this section, we walk through how to create an API key and gather the correct Planning Analytics URL, Tenant ID and database URL.

The first value we will generate is the API key, which is used to authenticate the user when accessing Planning Analytics via API calls. Note that the API key has the same level of access as the user who generates it, so make sure this user has all the rights and permissions your API calls will need. Otherwise, the calls will fail with errors.

Alternatively, you can create a service account that is not assigned to a particular person but has appropriate access to the environments and databases you will be calling. Bear in mind that a service account counts as an additional user on your account, so make sure you do not exceed your user allowance.

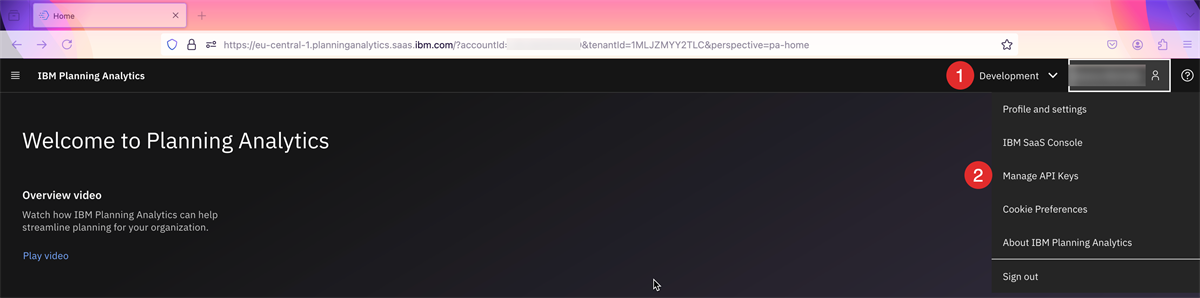

Step 1: Log in to your Planning Analytics environment using your URL. If you have multiple environments, use the dropdown to make sure you are in the correct one. In this example, we work in the ‘Development’ environment.

Step 2: To generate the API key, click your name in the top right-hand corner of the page and select ‘Manage API Key’.

Step 3: When the ‘API Keys’ window opens, click the blue ‘Generate Key’ button.

Step 4: Add a name and (optionally) a description for your API key, then click the ‘Generate Key’ button.

Step 5: A unique API key for your login is generated. The key cannot be recovered after 300 seconds, so copy it to your clipboard using the blue button or download it as a JSON file so you can access it later.

Note: If you do lose your API key, you will need to generate a new one by following the steps above again.

Step 6: We will now use the Planning Analytics URL to build the PAW URL and the Database URL.

Step 7: For the PAW URL, take the beginning of the Planning Analytics URL, from https:// up to and including .ibm.com (see box below); this is the host URL. Then copy the Tenant ID (see box below). Combine the two using the format below.

Note: If you have more than one environment, make sure you are in the correct one. To check, use the dropdown next to your name; in this example, we are using ‘Development’.

PAW URL Format: {{host URL}}/api/{{Tenant ID}}/v0

PAW URL Example: https://eu-central-1.planninganalytics.saas.ibm.com/api/1MLJZMYY2TLC/v0

Step 8: The Database URL starts with the PAW URL. In the web interface, click the burger icon in the top left corner (Point 1 below), then click ‘New’ (Point 2) and then ‘Workbench’ (Point 3). A new workbench opens with a list of databases on the left-hand side (Point 4). Find the database you want to work with (in this example ‘Dev’, Point 5) and add its name to the URL using the format below.

Database URL Format: {{host URL}}/api/{{Tenant ID}}/v0/tm1/{{database name}}/api/v1

Database URL Example: https://eu-central-1.planninganalytics.saas.ibm.com/api/1MLJZMYY2TLC/v0/tm1/Dev/api/v1
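If you later script these calls outside Postman, you can assemble the two base URLs from their parts. The following Node.js sketch simply encodes the two formats above. The function names are our own and are not part of any IBM library.

```javascript
// Builds the PAW URL: {host URL}/api/{Tenant ID}/v0
function buildPawUrl(hostUrl, tenantId) {
  // Drop any trailing slash so the path segments join cleanly.
  return `${hostUrl.replace(/\/+$/, "")}/api/${tenantId}/v0`;
}

// Builds the Database URL: {PAW URL}/tm1/{database name}/api/v1
function buildDatabaseUrl(hostUrl, tenantId, databaseName) {
  return `${buildPawUrl(hostUrl, tenantId)}/tm1/${databaseName}/api/v1`;
}

const host = "https://eu-central-1.planninganalytics.saas.ibm.com";
console.log(buildPawUrl(host, "1MLJZMYY2TLC"));
// → https://eu-central-1.planninganalytics.saas.ibm.com/api/1MLJZMYY2TLC/v0
console.log(buildDatabaseUrl(host, "1MLJZMYY2TLC", "Dev"));
// → https://eu-central-1.planninganalytics.saas.ibm.com/api/1MLJZMYY2TLC/v0/tm1/Dev/api/v1
```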

Step 9: At this point we have gathered all the information we need for a successful API request. To make your calls more efficient, we recommend that the two URLs and the API key are parameterised so that values can be reused and we can protect sensitive data. To do this in Postman, click into Collections (Point 1 below), click the name of your collection (Point 2) and click the ‘Variables’ tab (Point 3).

Step 10: Create a variable for your PAW URL (Point 4), your database URL (Point 5) and the API key (Point 6) and insert your unique values, then save (Point 7).
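Outside Postman, the equivalent of collection variables is configuration that your script reads when it starts. This approach also keeps the API key out of your source code. The minimal Node.js sketch below assumes hypothetical environment variable names (PA_HOST, PA_TENANT_ID, PA_DATABASE, PA_API_KEY) that you would define yourself.

```javascript
// Reads connection settings from environment variables (names are hypothetical).
function loadConfig(env = process.env) {
  const required = ["PA_HOST", "PA_TENANT_ID", "PA_DATABASE", "PA_API_KEY"];
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing configuration: ${missing.join(", ")}`);
  }
  const host = env.PA_HOST.replace(/\/+$/, "");
  const pawUrl = `${host}/api/${env.PA_TENANT_ID}/v0`;
  return {
    pawUrl,
    databaseUrl: `${pawUrl}/tm1/${env.PA_DATABASE}/api/v1`,
    apiKey: env.PA_API_KEY, // sensitive: never hard-code or commit this value
  };
}
```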

Part 2 - Authentication

Every API request to Planning Analytics must be authenticated. There are two options: add authentication to each individual request, or run a separate authentication request once at the beginning of each session. The second option is more efficient when making multiple calls, so we take that approach in this blog. In Postman, the GET request looks like this:

Example Request: {{pawURL}}/rolemgmt/v1/users/me

Note: this request uses the {{pawURL}} we generated in Step 7 and added as a variable in Steps 9 and 10, but this can be hard coded if preferred.

If this request succeeds, a connection to Planning Analytics has been established and you can make further calls during the session. Sessions time out after 60 minutes of inactivity.
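You can also script this connection check. The blog does not show how the API key is attached to the request, so the sketch below makes an assumption: it uses HTTP Basic authentication, with the user name `apikey` and the API key as the password. Please verify this against IBM's documentation for your environment. The sketch also assumes Node.js 18 or later for the built-in `fetch`, and the function names are hypothetical. For simplicity, it sends the header with the call itself (the first option described above) rather than reusing session cookies.

```javascript
// Assumption: Basic authentication with user name "apikey" and the API key as password.
function basicAuthHeader(apiKey) {
  return "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64");
}

// Calls {pawURL}/rolemgmt/v1/users/me; a successful response means the key was accepted.
async function checkConnection(pawUrl, apiKey, fetchImpl = globalThis.fetch) {
  const res = await fetchImpl(`${pawUrl}/rolemgmt/v1/users/me`, {
    method: "GET",
    headers: { Authorization: basicAuthHeader(apiKey), Accept: "application/json" },
  });
  if (!res.ok) {
    throw new Error(`Authentication failed: HTTP ${res.status}`);
  }
  return res.json(); // assumed to be a JSON description of the current user
}
```

For example, `await checkConnection(pawUrl, apiKey)` throws an error if the key is rejected.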

Part 3 - Single Part File Management

Now that we have connected to our Planning Analytics instance, we can start to manage files. Depending on the size of the file, we can either do this with a single request or break the file into multiple parts.

Although a single command can process files of up to 100MB, we would consider splitting files larger than 10MB to reduce the likelihood of retries (depending on the total size of the file). Part 3 focuses on smaller files that can be handled with a single command. For larger files, see Part 5, where we look at multi-part file management.

When managing files, note that files are isolated to a particular database: each request works only with the files of the database named in its URL.

To get a list of files within Planning Analytics as a Service Database Storage

Step 1: Create a GET request called GET Files (or similar) (Point 1).

Step 2: In this request, use your database URL (as created in Part 1) followed by the /Contents('Files')/Contents path (Point 2).

Example Request: {{databaseURL}}/Contents('Files')/Contents

Step 3: Send the request (Point 3). You will receive a list of the folders and files in your current database, matching what you would see in the database's file manager in Planning Analytics Workspace (Point 4). Files inside folders are not shown at this level.

Step 4: To see the files inside a folder, add ('Foldername')/Contents to the end of the request, for example {{databaseURL}}/Contents('Files')/Contents('Foldername')/Contents. For more information on folders and subfolders, see Part 4 – File Management Best Practices.
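A scripted version of this listing, including the folder navigation from Step 4, might look like the Node.js sketch below. It makes three assumptions: the API key is sent with HTTP Basic authentication under the user name `apikey`; the response uses the standard OData layout, with entries in a `value` array; and single quotes in folder names are doubled, which is how OData escapes string literals. `contentsPath` and `listContents` are hypothetical names.

```javascript
// Builds {databaseURL}/Contents('Files')/Contents('A')/Contents('B') for a folder path.
// OData escapes a single quote inside a string literal by doubling it.
function contentsPath(databaseUrl, folders = []) {
  const segments = ["Files", ...folders].map(
    (name) => `Contents('${name.replace(/'/g, "''")}')`
  );
  return `${databaseUrl}/${segments.join("/")}`;
}

// Lists the folders and files directly inside a folder (not recursive).
async function listContents(databaseUrl, apiKey, folders = [], fetchImpl = globalThis.fetch) {
  const res = await fetchImpl(`${contentsPath(databaseUrl, folders)}/Contents`, {
    headers: {
      // Assumption: Basic authentication with user name "apikey".
      Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64"),
      Accept: "application/json",
    },
  });
  if (!res.ok) throw new Error(`Listing failed: HTTP ${res.status}`);
  const body = await res.json();
  // Assumption: standard OData collection with the entries in body.value.
  return body.value.map((item) => ({ name: item.Name, type: item["@odata.type"] }));
}
```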

To download a specific file

To view the contents of a specific file, we will need to carry out the following steps:

Step 1: Create a GET request for downloading a file (Point 1 Below)

Step 2: In the request, use your database URL and the path below, with a variable holding the name of the file you want (Point 2). In this example, we are accessing the ‘tmpHier.csv’ file. To change the variable's value within the request in Postman, hover over the variable and edit the value in the textbox that appears.

Example Request: {{databaseURL}}/Contents('Files')/Contents('{{fileName}}')/Content

Step 3: Send the request (Point 3)

Step 4: The output is the contents of your file (Point 4). Note: In a real-life use case, this response would need further processing, for example writing the results to a local file and removing header rows.
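As a sketch of that further processing, the Node.js code below downloads a file's content as text, removes a header row and writes the result to a local file. Several parts of this sketch are assumptions: the authentication scheme (Basic, with user name `apikey`), the function names, and the choice to treat the file as UTF-8 text. Binary files would need `arrayBuffer()` instead of `text()`.

```javascript
const { writeFile } = require("node:fs/promises");

// Downloads {databaseURL}/Contents('Files')/Contents('<fileName>')/Content as text.
async function downloadText(databaseUrl, fileName, apiKey, fetchImpl = globalThis.fetch) {
  const url = `${databaseUrl}/Contents('Files')/Contents('${fileName}')/Content`;
  const res = await fetchImpl(url, {
    // Assumption: Basic authentication with user name "apikey".
    headers: { Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64") },
  });
  if (!res.ok) throw new Error(`Download failed: HTTP ${res.status}`);
  return res.text();
}

// Removes the first `headerRows` lines; line endings are normalised to "\n".
function stripHeaderRows(text, headerRows = 1) {
  return text.split(/\r?\n/).slice(headerRows).join("\n");
}

// Example use: save a file such as tmpHier.csv locally without its header row.
async function saveWithoutHeader(databaseUrl, fileName, apiKey, localPath) {
  const text = await downloadText(databaseUrl, fileName, apiKey);
  await writeFile(localPath, stripHeaderRows(text), "utf8");
}
```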

To create and add data to a file

To create a new file within Planning Analytics and add data to it, we need two separate commands. The first is a POST command that creates an empty file in the Planning Analytics as a Service database storage. The second is a PUT command that uploads the data to the new file.

Creating the file:

Step 1: Create a POST request to create the file (Point 1 Below).

Step 2: Within the request command, copy the file path below using the database URL we created earlier (Point 2). Within the body of the request, add the name of the new file (Point 3)

Request Example: {{databaseURL}}/Contents('Files')/Contents

Body Example:

{

"@odata.type": "#ibm.tm1.api.v1.Document",

"Name": "{{fileName}}"

}

Step 3: Make sure the ‘fileName’ variable holds your own file name, including the file extension, for example .csv (Point 4).

Step 4: Send the request (Point 5)

Step 5: If the request succeeds, the output body confirms that an empty file has been created within your instance (Point 6).
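You can also express the same POST in code. This Node.js sketch returns a plain request description that you can pass to `fetch`. The Basic authentication scheme (user name `apikey`) and the function name are assumptions; the URL and body follow the examples above.

```javascript
// Builds the POST that creates an empty file in the top-level Files folder.
function createFileRequest(databaseUrl, fileName, apiKey) {
  return {
    method: "POST",
    url: `${databaseUrl}/Contents('Files')/Contents`,
    headers: {
      // Assumption: Basic authentication with user name "apikey".
      Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64"),
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ "@odata.type": "#ibm.tm1.api.v1.Document", Name: fileName }),
  };
}

// Usage (Node.js 18+): const req = createFileRequest(dbUrl, "Sales.csv", key);
//                      const res = await fetch(req.url, req);
```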

Adding data to the file:

Step 1: Create a new PUT request for uploading file content (Point 1 Below)

Step 2: Copy the request shown below (Point 2), checking that your variables hold the correct database URL and file name. In the body of the request, add your file content (Point 3). In this example, we are using raw text data.

Request Example: {{databaseURL}}/Contents('Files')/Contents('{{fileName}}')/Content

Step 3: Send the request (Point 4). As you can see below, no data is returned to the API tool, but the status shows that the request was successful (Point 5). To check the data stored in the file, run the GET download file request we created earlier. It confirms that the file exists and that the correct data has been uploaded.

Download File Example: {{databaseURL}}/Contents('Files')/Contents('{{fileName}}')/Content
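The upload-then-check sequence above can be sketched in Node.js as follows. The Basic authentication scheme (user name `apikey`), the `application/octet-stream` content type for the raw file data and the function names are all assumptions. The URL used for both calls matches the requests shown above.

```javascript
// URL of a file's content: used by both the PUT (upload) and the GET (download).
function contentUrl(databaseUrl, fileName) {
  return `${databaseUrl}/Contents('Files')/Contents('${fileName}')/Content`;
}

// Uploads text to an existing file, then downloads it again to confirm the content.
async function uploadAndVerify(databaseUrl, fileName, content, apiKey, fetchImpl = globalThis.fetch) {
  // Assumption: Basic authentication with user name "apikey".
  const auth = "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64");
  const url = contentUrl(databaseUrl, fileName);

  const put = await fetchImpl(url, {
    method: "PUT",
    headers: { Authorization: auth, "Content-Type": "application/octet-stream" },
    body: content,
  });
  if (!put.ok) throw new Error(`Upload failed: HTTP ${put.status}`);

  const get = await fetchImpl(url, { method: "GET", headers: { Authorization: auth } });
  if (!get.ok) throw new Error(`Download failed: HTTP ${get.status}`);
  return (await get.text()) === content; // true when the stored content matches
}
```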

Delete a File

Using APIs, we can also delete files that we no longer need or that were created in error.

Step 1: Create a new DELETE request called DELETE File.

Step 2: Copy the request below, using variables for your database URL and the filename you want to delete.

Request Example: {{databaseURL}}/Contents('Files')/Contents('{{fileName}}')

Step 3: Send the request. Note: There will not be an output for this request if it is successful.
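A scripted delete needs only a single call. This Node.js sketch treats any non-2xx status as a failure, because a successful delete returns no content. The function name and the Basic authentication scheme (user name `apikey`) are assumptions.

```javascript
// Deletes {databaseURL}/Contents('Files')/Contents('<fileName>').
async function deleteFile(databaseUrl, fileName, apiKey, fetchImpl = globalThis.fetch) {
  const url = `${databaseUrl}/Contents('Files')/Contents('${fileName}')`;
  const res = await fetchImpl(url, {
    method: "DELETE",
    // Assumption: Basic authentication with user name "apikey".
    headers: { Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64") },
  });
  if (!res.ok) {
    throw new Error(`Could not delete ${fileName}: HTTP ${res.status}`);
  }
  return res.status; // no response body is expected on success
}
```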

Run Process

The final type of command covered in this part is the ‘RUN Process’ command. It runs a process, for example one that loads data from a file into Planning Analytics cubes and/or dimensions so the data can be used in the model, or one that extracts data to a file. Note that the example below is only one of the processes you can run using the Planning Analytics as a Service API. Creating processes is outside the scope of this blog.

Step 1: Create a POST request called RUN Process.

Step 2: Copy the request shown below, using your database URL and the name of your process (here ‘Load Data’), and send it.

Example Request: {{databaseURL}}/Processes('Load Data')/tm1.ExecuteWithReturn?$expand=ErrorLogFile

Step 3: Check the output to ensure that it has run successfully
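You can automate this output check. The Node.js sketch below posts to `tm1.ExecuteWithReturn` and reads the `ProcessExecuteStatusCode` and `ErrorLogFile.Filename` fields that, to our understanding, the TM1 REST API returns for this action. Please check these field names against your own responses. The Basic authentication scheme (user name `apikey`), the `Parameters` payload shape and the function names are also assumptions.

```javascript
// Runs a process and summarises the result; fetch percent-encodes spaces in the name.
async function runProcess(databaseUrl, processName, apiKey, parameters = {}, fetchImpl = globalThis.fetch) {
  const url = `${databaseUrl}/Processes('${processName}')/tm1.ExecuteWithReturn?$expand=ErrorLogFile`;
  const res = await fetchImpl(url, {
    method: "POST",
    headers: {
      // Assumption: Basic authentication with user name "apikey".
      Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64"),
      "Content-Type": "application/json",
    },
    // Assumed payload shape: one { Name, Value } entry per process parameter.
    body: JSON.stringify({
      Parameters: Object.entries(parameters).map(([Name, Value]) => ({ Name, Value })),
    }),
  });
  if (!res.ok) throw new Error(`Process request failed: HTTP ${res.status}`);
  return summariseExecution(await res.json());
}

function summariseExecution(result) {
  return {
    succeeded: result.ProcessExecuteStatusCode === "CompletedSuccessfully",
    status: result.ProcessExecuteStatusCode,
    // Name of the log in the .tmp folder, or null when no log was written.
    errorLogFile: result.ErrorLogFile ? result.ErrorLogFile.Filename : null,
  };
}
```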

If the process does not run successfully, as in the example below, you can use the API to view its error log and find out what needs fixing.

Step 1: Copy the Filename from the output above, in this example ‘TM1ProcessError_20250116100823_52144288_Load Data_df97c7d0.log’

Step 2: Any error files created by Planning Analytics as a Service are stored in the .tmp folder, so we need to navigate to that folder before requesting the specific error log. Create a GET request that opens the ‘.tmp’ folder and then requests your error file (see syntax below). Note that, compared with the earlier download request, we have added an extra /Contents('.tmp') before the file name.

Example Request: {{databaseURL}}/Contents('Files')/Contents('.tmp')/Contents('{{fileName}}')/Content

Step 3: By reviewing the results we can see that we have a circular reference within our dimension which we will need to fix before trying again
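Once you have the log file name, you can also script reading it. This Node.js sketch builds the .tmp path shown above and returns the log as text. The function names are hypothetical, and Basic authentication with user name `apikey` is assumed.

```javascript
// Error logs live in the .tmp folder of the database's file storage.
function errorLogUrl(databaseUrl, logFileName) {
  return `${databaseUrl}/Contents('Files')/Contents('.tmp')/Contents('${logFileName}')/Content`;
}

async function readErrorLog(databaseUrl, logFileName, apiKey, fetchImpl = globalThis.fetch) {
  const res = await fetchImpl(errorLogUrl(databaseUrl, logFileName), {
    // Assumption: Basic authentication with user name "apikey".
    headers: { Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64") },
  });
  if (!res.ok) throw new Error(`Could not read ${logFileName}: HTTP ${res.status}`);
  return res.text();
}
```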

Part 4 - File Management Best Practices

Once you start creating many files within Planning Analytics, we recommend creating folders and subfolders to organise your environment, for example by department or by project. In this section, we cover how to create, rename and delete folders and subfolders.

Create Folder/Subfolder

The first step is to create a folder:

Step 1: Create a POST request called CREATE Folder

Step 2: Using your database URL and the path below create the request.

Request Example: {{databaseURL}}/Contents('Files')/Contents

Step 3: Add a body to the request containing your folder name (in this example ‘Delete Me’).

Body Example:

{

"@odata.type": "#ibm.tm1.api.v1.Folder",

"Name": "Delete Me",

"ID": "Delete Me"

}

Step 4: Send the request

Step 5: You should be able to see that the new folder has been created.

To organise your files further, you can create subfolders. Simply repeat the steps above, adding the name of the parent folder followed by /Contents to the request path.

Request Example: {{databaseURL}}/Contents('Files')/Contents('New subfolder')/Contents
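For scripted folder management, you can build the request from a folder path, which also covers the nested case described above. This Node.js sketch uses hypothetical names and assumes Basic authentication with user name `apikey`. The URL and body follow the examples above.

```javascript
// Builds the POST that creates a folder. parentFolders is the path to the parent:
// [] for a top-level folder, ["Finance"] for a subfolder of Finance, and so on.
function createFolderRequest(databaseUrl, folderName, parentFolders, apiKey) {
  const parentPath = ["Files", ...parentFolders].map((name) => `Contents('${name}')`).join("/");
  return {
    method: "POST",
    url: `${databaseUrl}/${parentPath}/Contents`,
    headers: {
      // Assumption: Basic authentication with user name "apikey".
      Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64"),
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ "@odata.type": "#ibm.tm1.api.v1.Folder", Name: folderName, ID: folderName }),
  };
}

// Usage (Node.js 18+): const req = createFolderRequest(dbUrl, "Payroll", ["Finance"], key);
//                      await fetch(req.url, req);
```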

Renaming Folders/Subfolders

If you have accidentally given the folder the wrong name, or want to change it at a later stage, you can use an API request to rename the folder or subfolder.

Step 1: Create a POST request called Rename Folder

Step 2: Copy the code below replacing ‘Delete Me’ with the folder you want to rename

Request Example: {{databaseURL}}/Contents('Files')/Contents('Delete Me')/tm1.Move

Step 3: In the body of the request, set the Name property to the new folder name, in this example ‘Finance’.

Body Example:

{

"Name":"Finance"

}

Step 4: Send the request. If it is successful, the output displays the new folder name.

For subfolders, complete the same steps as above adding /Contents('Old Subfolder name') as shown below, replacing ‘Old Subfolder name’ with your own folder name. Within the body of this request, ensure that the new subfolder name has been added.

Request Example: {{databaseURL}}/Contents('Files')/Contents('Finance')/Contents('Rename Me')/tm1.Move

Body Example:

{

"Name":"Payroll"

}
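Renaming follows the same path pattern, ending in tm1.Move. This Node.js sketch returns a request description that you can pass to `fetch(req.url, req)`. The function name is hypothetical, and Basic authentication with user name `apikey` is assumed.

```javascript
// folderPath lists the folder and its parents, e.g. ["Delete Me"] or ["Finance", "Rename Me"].
function renameFolderRequest(databaseUrl, folderPath, newName, apiKey) {
  const path = ["Files", ...folderPath].map((name) => `Contents('${name}')`).join("/");
  return {
    method: "POST",
    url: `${databaseUrl}/${path}/tm1.Move`,
    headers: {
      // Assumption: Basic authentication with user name "apikey".
      Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64"),
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ Name: newName }),
  };
}
```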

Deleting Folders/Subfolder

If we need to delete a folder or a subfolder, it is very easy to do using APIs. For this example, we’ll delete a folder called ‘Delete Me’.

Step 1: Create a DELETE request called Remove Folder

Step 2: Copy the syntax below, replacing ‘Delete Me’ with your folder’s name. This can be parameterised instead of hard coded, if you prefer.

Request Example: {{databaseURL}}/Contents('Files')/Contents('Delete Me')

Step 3: Send the request. If it is successful, no content is returned.

To delete a subfolder, we use the same syntax with a minor addition. For this example, I have recreated the ‘Delete Me’ folder and added a ‘Delete Subfolder’ folder to it.

Step 1: Create a DELETE request.

Step 2: Use the syntax below as a guide for your request, replacing ‘Delete Me’ and ‘Delete Subfolder’ with your own folder names.

Request Example: {{databaseURL}}/Contents('Files')/Contents('Delete Me')/Contents('Delete Subfolder')

Step 3: Send the request. If it is successful, no content is returned.

The process is the same if you have multiple levels of nested folders (folders within folders): add an extra /Contents('Folder name') for each level. This works for creating, renaming and deleting subfolders.

Note: Take care when deleting folders and subfolders. A single request also deletes all content and subfolders stored within the folder.
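Because a folder delete removes everything beneath it, a scripted version benefits from a simple guard. This Node.js sketch builds the DELETE request for any nesting depth and refuses an empty path, which would otherwise point at the top-level Files folder. The function name is hypothetical, and Basic authentication with user name `apikey` is assumed.

```javascript
// folderPath lists the folder and its parents, e.g. ["Delete Me", "Delete Subfolder"].
function deleteFolderRequest(databaseUrl, folderPath, apiKey) {
  // Guard: an empty path would target the root Files folder itself.
  if (folderPath.length === 0) {
    throw new Error("folderPath must name at least one folder");
  }
  const path = ["Files", ...folderPath].map((name) => `Contents('${name}')`).join("/");
  return {
    method: "DELETE",
    url: `${databaseUrl}/${path}`,
    // Assumption: Basic authentication with user name "apikey".
    headers: { Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64") },
  };
}
```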

Part 5 - Multi Part File Management

The last part of this blog focuses on working with larger files. Splitting larger files into chunks reduces the chance of having to retry a transfer and saves time when errors occur. We therefore recommend considering multi-part methods for any file over 10MB, and we strongly recommend multi-part uploads for files over 100MB. In this part, we look at how to upload and download multi-part files.

Uploading multi-part files

Working with multi-part files will require a few additional steps compared to using a single part file request. To upload a file using multi-parts we will need to run separate requests to create the file, upload each individual part, check to ensure all the parts have uploaded and finalise the upload.

Step 1: Create the file

Step 1a: Create a POST request

Step 1b: Copy the syntax shown below, using the body of the request to give your file its name. Note: To store the file within a folder or subfolder, add ('My Folder name')/Contents to the path for each level of folder.

Example 1: For a new file within the Files folder:

Request Example: {{databaseURL}}/Contents('Files')/Contents

Body Example:

{

"@odata.type": "#ibm.tm1.api.v1.Document",

"Name": "{{fileName}}"

}

Example 2: For a file within a subfolder

Request Example: {{databaseURL}}/Contents('Files')/Contents('My subfolder')/Contents

Body Example:

{

"@odata.type": "#ibm.tm1.api.v1.Document",

"Name": "{{fileName}}"

}

Step 1c: Send the request. A new, empty file is created.

Step 2: Upload individual parts

This step involves two kinds of request: the first starts the multi-part upload process, and the second uploads the individual chunks of data.

Step 2a: Create a POST request.

Step 2b: Add the request below, together with a post-response script that stores the UploadID, which we will need to upload the individual parts.

Step 2c: Send the request.

Request Example: {{databaseURL}}/Contents('Files')/Contents('{{fileName}}')/Content/mpu.CreateMultipartUpload

Script Example – For Postman:

var jsonData = pm.response.json();

pm.collectionVariables.set("UploadID", jsonData["UploadID"]);
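In a script, you read the UploadID from the JSON response, just as the Postman script does. This Node.js sketch assumes Basic authentication with user name `apikey` and uses a hypothetical function name. It sends no request body, because none is shown above.

```javascript
// Starts a multipart upload for an existing (empty) file and returns its UploadID.
async function createMultipartUpload(databaseUrl, fileName, apiKey, fetchImpl = globalThis.fetch) {
  const url = `${databaseUrl}/Contents('Files')/Contents('${fileName}')/Content/mpu.CreateMultipartUpload`;
  const res = await fetchImpl(url, {
    method: "POST",
    // Assumption: Basic authentication with user name "apikey".
    headers: { Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64") },
  });
  if (!res.ok) throw new Error(`Could not start upload: HTTP ${res.status}`);
  const json = await res.json();
  if (!json.UploadID) throw new Error("Response did not contain an UploadID");
  return json.UploadID;
}
```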

Step 2d: Now we can upload the individual parts. In this example, we put raw text data in the body of the request and upload each chunk with a separate command. In a real-life scenario, every chunk would be sent with the same request inside your chosen programming logic, for example a loop over the chunks of data. Before sending the request, we used Postman to add a post-response script that stores the Part Number and the etag of each chunk in variables. This saves copying them manually for a later step. If you are using an alternative to Postman, you may need a different method.

Request Example: {{databaseURL}}/Contents('Files')/Contents('{{fileName}}')/Content/!uploads('{{UploadID}}')/Parts

Body Example: This is the first part of the file

Script Example:

var jsonData = pm.response.json();

pm.collectionVariables.set("mpuPart1", jsonData["PartNumber"]);

pm.collectionVariables.set("mpuPart1Etag", jsonData["@odata.etag"]);

Step 2e: Next, upload the second (and, in this example, final) chunk of data. The request URL stays the same. The body contains the next chunk of data, and the post-response script stores the values in new variables (for example, mpuPart1 becomes mpuPart2 and mpuPart1Etag becomes mpuPart2Etag).
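The loop mentioned in Step 2d might look like the Node.js sketch below. It splits the data into fixed-size parts and posts them one at a time, so they are numbered in order. It also records each PartNumber and @odata.etag for the finalise step. The POST method for part uploads, the `application/octet-stream` content type, Basic authentication with user name `apikey` and the function names are all assumptions. The blog does not state a minimum part size, so check IBM's documentation before choosing one.

```javascript
// Splits a Buffer into consecutive parts of at most partSize bytes.
function splitIntoParts(data, partSize) {
  if (!Number.isInteger(partSize) || partSize <= 0) {
    throw new RangeError("partSize must be a positive integer");
  }
  const parts = [];
  for (let offset = 0; offset < data.length; offset += partSize) {
    parts.push(data.subarray(offset, offset + partSize));
  }
  return parts;
}

// Uploads each part in sequence and returns [{ PartNumber, ETag }, ...] in upload order.
async function uploadParts(databaseUrl, fileName, uploadId, data, partSize, apiKey, fetchImpl = globalThis.fetch) {
  const url = `${databaseUrl}/Contents('Files')/Contents('${fileName}')/Content/!uploads('${uploadId}')/Parts`;
  // Assumption: Basic authentication with user name "apikey".
  const auth = "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64");
  const uploaded = [];
  for (const [index, part] of splitIntoParts(data, partSize).entries()) {
    const res = await fetchImpl(url, {
      method: "POST",
      headers: { Authorization: auth, "Content-Type": "application/octet-stream" },
      body: part,
    });
    if (!res.ok) throw new Error(`Part ${index + 1} failed: HTTP ${res.status}`);
    const json = await res.json();
    uploaded.push({ PartNumber: json.PartNumber, ETag: json["@odata.etag"] });
  }
  return uploaded;
}
```

Uploading the parts sequentially keeps the order simple to reason about. Parallel uploads are possible in principle, but you would then need to track the part order yourself.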

Step 3: Check that all parts have been uploaded

The next request checks whether all the parts have been sent successfully. This step is not required for the upload to work, but we recommend it to reduce the chance of errors.

Step 3a: Create a GET request

Step 3b: Copy the code below, ensuring that all the variables are correct for this file upload

Example Request: {{databaseURL}}/Contents('Files')/Contents('{{fileName}}')/Content/!uploads('{{UploadID}}')/Parts

Step 3c: Send the request and check that the upload contains the right number of parts. If you stored the part numbers in the previous step, you can check them here.

Step 3d: If the parts are not all here, or are in the wrong order, go to Step 5 where we’ll clear the contents of the file so we can try again
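You can also script this check: fetch the part list and compare it with the parts recorded during the upload. The sketch below assumes that the GET response is an OData collection whose `value` entries carry a `PartNumber` field, so please confirm this against your own output. The authentication scheme (Basic, user name `apikey`) and the function names are also assumptions.

```javascript
// Returns a list of problems; an empty array means the parts match in number and order.
function compareParts(listed, expected) {
  const problems = [];
  if (listed.length !== expected.length) {
    problems.push(`expected ${expected.length} parts but found ${listed.length}`);
  }
  expected.forEach((part, i) => {
    const found = listed[i];
    // Compare as strings so "1" (from Postman variables) and 1 are treated alike.
    if (!found || String(found.PartNumber) !== String(part.PartNumber)) {
      problems.push(`position ${i + 1}: expected part ${part.PartNumber}`);
    }
  });
  return problems;
}

async function listUploadedParts(databaseUrl, fileName, uploadId, apiKey, fetchImpl = globalThis.fetch) {
  const url = `${databaseUrl}/Contents('Files')/Contents('${fileName}')/Content/!uploads('${uploadId}')/Parts`;
  const res = await fetchImpl(url, {
    headers: {
      // Assumption: Basic authentication with user name "apikey".
      Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64"),
      Accept: "application/json",
    },
  });
  if (!res.ok) throw new Error(`Could not list parts: HTTP ${res.status}`);
  return (await res.json()).value; // assumed OData collection
}
```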

Step 4: Finalise the Upload

The last step is to finalise the upload: until this request is sent, no data has been committed to the file.

Step 4a: Create a POST request and copy the syntax below

Example Request: {{databaseURL}}/Contents('Files')/Contents('{{fileName}}')/Content/!uploads('{{UploadID}}')/mpu.Complete

Step 4b: In the body of the request, list the part number and etag of each part you uploaded, in the correct order.

Step 4c: Send the request.

Example Body:

{

"Parts": [

{

"PartNumber": "{{mpuPart1}}",

"ETag": "{{mpuPart1Etag}}"

},

{

"PartNumber": "{{mpuPart2}}",

"ETag": "{{mpuPart2Etag}}"

}

]

}

Common errors at this stage are mistyped part numbers or etags (if you are not using variables) and a mismatch between the parts uploaded in the previous step and the parts listed in the body of the request. If this happens, clear the contents of the file by following the instructions in Step 5 and try again.
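Building this body from the recorded parts avoids copying numbers and etags by hand. The Node.js sketch below also sorts the parts by PartNumber, so they are listed in the correct order. The function name is hypothetical, and Basic authentication with user name `apikey` is assumed.

```javascript
// parts: [{ PartNumber, ETag }, ...] as recorded while uploading.
function completeUploadRequest(databaseUrl, fileName, uploadId, parts, apiKey) {
  const ordered = [...parts].sort((a, b) => Number(a.PartNumber) - Number(b.PartNumber));
  return {
    method: "POST",
    url: `${databaseUrl}/Contents('Files')/Contents('${fileName}')/Content/!uploads('${uploadId}')/mpu.Complete`,
    headers: {
      // Assumption: Basic authentication with user name "apikey".
      Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64"),
      "Content-Type": "application/json",
    },
    body: JSON.stringify({
      Parts: ordered.map(({ PartNumber, ETag }) => ({ PartNumber, ETag })),
    }),
  };
}
```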

Step 5: How to clear the contents of a file

You can use this step to clear the contents of any file, whether it was uploaded through the API (single or multi-part) or through Planning Analytics Workspace (PAW). It is also useful if errors occurred during the upload process.

Step 5a: Create a DELETE request and copy the code below, ensuring that the database URL and the filename are correct

Request Example: {{databaseURL}}/Contents('Files')/Contents('{{fileName}}')/Content

Step 5b: Send the request. No content is returned for a successful request.

Step 5c: To check whether the file is clear, you can run the ‘To download a specific file’ command we explored in Part 3

Request Example: {{databaseURL}}/Contents('Files')/Contents('{{fileName}}')/Content

Step 5d: For a multi part file upload that has failed, return to step 2 of this section and try again
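Steps 5a to 5c can be combined into one scripted check, as in this Node.js sketch. It assumes Basic authentication with user name `apikey` and that a cleared file downloads as an empty body. The function name is hypothetical.

```javascript
// Clears a file's content, then downloads it to confirm that it is empty.
async function clearFileContent(databaseUrl, fileName, apiKey, fetchImpl = globalThis.fetch) {
  const url = `${databaseUrl}/Contents('Files')/Contents('${fileName}')/Content`;
  // Assumption: Basic authentication with user name "apikey".
  const headers = { Authorization: "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64") };

  const del = await fetchImpl(url, { method: "DELETE", headers });
  if (!del.ok) throw new Error(`Could not clear ${fileName}: HTTP ${del.status}`);

  const check = await fetchImpl(url, { method: "GET", headers });
  if (!check.ok) throw new Error(`Could not download ${fileName}: HTTP ${check.status}`);
  return (await check.text()).length === 0; // true when the file is now empty
}
```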

Downloading Multi-Part Files

The last process covered in this blog is how to download larger files by splitting them into multiple parts.

Step 1: Getting the file size

Step 1a: Create a GET request and copy the syntax below, making sure that the database URL and the filename are correct

Request Example: {{databaseURL}}/Contents('Files')/Contents('{{fileName}}')

Step 1b: Send the request.

Step 1c: Look at the size that has been returned below, in this example it is 43 bytes. We will split the file into two chunks, one 22 bytes and the other 21 bytes.

Note: This file is small enough to be downloaded as a single part, but you can download a file of any size in multiple parts if you prefer. Ranges include both their start and end bytes. When downloading larger files (for example, more than 10MB), the first range should be 0-9999999, the second 10000000-19999999 and so on until the end of the file, so that you download 10MB chunks each time. The last range does not need to end exactly at the end of the file. For example, for a 43-byte file, the final range could be 26-50 and the request should still succeed.

Step 2: Download the file in ranges.

Step 2a: For the first command, we specify the range 0-21, which covers the first 22 bytes. The output shows the first part of the file.

Step 2b: We then work through the file incrementally, moving the range on each time. As you can see below, we now request the range 22-44, which covers the rest of the file. Larger files may need additional requests.
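The range arithmetic and the download loop can be sketched in Node.js as shown below. The sketch makes two assumptions: the ranges are sent as a standard HTTP `Range` header (the inclusive start-end ranges used in this section match that syntax), and authentication uses Basic with user name `apikey`, as elsewhere. You pass in the file size from the Step 1 response. The function names are hypothetical.

```javascript
// Splits a file of fileSize bytes into inclusive byte ranges of at most chunkSize bytes.
function byteRanges(fileSize, chunkSize) {
  const ranges = [];
  for (let start = 0; start < fileSize; start += chunkSize) {
    const end = Math.min(start + chunkSize, fileSize) - 1; // range ends are inclusive
    ranges.push(`bytes=${start}-${end}`);
  }
  return ranges;
}

// Downloads the file range by range and joins the pieces in order.
async function downloadInRanges(databaseUrl, fileName, fileSize, chunkSize, apiKey, fetchImpl = globalThis.fetch) {
  const url = `${databaseUrl}/Contents('Files')/Contents('${fileName}')/Content`;
  // Assumption: Basic authentication with user name "apikey".
  const auth = "Basic " + Buffer.from(`apikey:${apiKey}`).toString("base64");
  const pieces = [];
  for (const range of byteRanges(fileSize, chunkSize)) {
    const res = await fetchImpl(url, { headers: { Authorization: auth, Range: range } });
    if (!res.ok) throw new Error(`Range ${range} failed: HTTP ${res.status}`);
    pieces.push(Buffer.from(await res.arrayBuffer()));
  }
  return Buffer.concat(pieces);
}

console.log(byteRanges(43, 22)); // [ 'bytes=0-21', 'bytes=22-42' ]
```

For the 43-byte example file, a chunk size of 22 gives the 22-byte and 21-byte chunks described in Step 1c. For large files, a chunk size of 10000000 gives 10MB ranges.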

Thank you for reading this blog and we hope that it has helped you use API requests to manage files within Planning Analytics as a Service.

We welcome your comments, feedback and questions. Please note that to comment, you will need to log in to the Community or create an IBM Community Profile.