In this blog, we will build a simple MFT use case using webMethods.io MFT.

webMethods.io MFT is an extension of the on-premises webMethods ActiveTransfer and brings Managed File Transfer to the cloud as part of the webMethods.io iPaaS. IBM webMethods MFT helps you quickly transfer files of any size in a secure, governed and encrypted cloud-based platform, meeting the need for secure, mission-critical exchanges.

Before starting the use case development, let me quickly go through the key capabilities of webMethods.io MFT:

- Secure and Reliable File Exchange

Ensure complete data security with support for stringent encryption standards, including AES and PGP for data at rest. Advanced security features such as DMZ protection and IP filtering further safeguard your file transfers.

- Complete Virus Scanning

Automatically terminate file transfers if a virus is detected. Virus protection can be directly configured within the MFT Gateway to enhance security.

- Centralized Management and Control

Monitor and manage all file transfer activities with centralized visibility. Quickly identify and resolve transfer issues as they arise. Incoming files can trigger automated business events, such as order processing or invoicing, to streamline workflows.

- Support for Popular Transfer Protocols

Enable file exchanges with trading partners using standard tools, including web browsers. IBM webMethods MFT supports HTTP, HTTPS, FTP, FTPS, SFTP, SCP, and WebDAV protocols.

- Integration with IBM webMethods iPaaS

Leverage the combined capabilities of IBM webMethods Integration Server and MFT for secure and reliable document exchanges. These MFT functions are complemented by integration services, enabling you to invoke messaging capabilities and additional workflows as needed.

- Guaranteed Delivery of File Transfers

Transfer files of any size with guaranteed delivery. The system automatically resumes interrupted transfers from where they stopped, eliminating the need for manual intervention.

- Event-Driven Transfers

Trigger actions based on specific events using webMethods.io MFT. Post-processing actions, such as invoking services and APIs, help ensure deadlines are met with sophisticated automation.

- User-Friendly Web Interface

Enable seamless collaboration between business and IT users with an intuitive web interface accessible from any browser.

- Cloud Storage Support

Facilitate file transfers between ActiveTransfer and cloud-based storage providers such as Amazon S3, Google Cloud, and Azure. Administrators can easily configure these storage options as file sources or destinations, similar to local or remote folders.

- Checkpoint and Restart Functionality

Resume file transfers automatically from the point of interruption, ensuring reliable delivery without manual intervention.

- Encryption for Enhanced Security

Encrypt files using robust ciphers such as AES and 3DES, with integrated PGP support for both streaming data and data at rest.

- Compression Support

Optimize file transfers with built-in support for compression formats, including Zip/Unzip, Gzip, and zLib, as well as in-stream compression capabilities.

Here is the simple use case that we will develop step by step using webMethods.io MFT.

Scenario:

A company wants to securely transfer files from its internal systems to external cloud storage providers (Amazon S3, Google Cloud Storage, and Azure Storage). IBM webMethods.io MFT acts as an SFTP server to receive files from internal systems, encrypts the files for security, and stores them in Amazon S3, Google Cloud Storage, and Azure Storage for further processing or archiving.

For this specific scenario, we are not using any iPaaS components other than MFT. However, because all these components are part of IBM Hybrid iPaaS, MFT can call integration services directly if you need to modify file content before further processing.

Now let’s build the required components one by one.

1) We can configure webMethods.io MFT listeners to expose SFTP, HTTPS, and FTPS servers.

MFT receives files through the SFTP and FTPS servers.

On the HTTPS port, we can access the IBM webMethods Managed File Transfer web client. Your MFT users can use this built-in web client to view and manage the files and folders in the ActiveTransfer Server instance to which they have been granted access privileges.

2) IBM webMethods Managed File Transfer enables you to create a Virtual File System (VFS) to provide an abstract view of resources in your remote FTP and SFTP servers. This capability enables users and client applications to access a variety of file structures in a uniform way. We can then grant users access to these virtual folders.

3) First, we will create the following virtual folders (VFS):

- Source Virtual Folder (PartnerAvfs) – A virtual folder pointing to the MFT file system only. IBM webMethods Managed File Transfer offers a default virtual folder backed by cloud storage. The location information for this folder is inaccessible, but you can create subfolders within it and configure new virtual folders pointing to these subfolders. We will create an MFT user and associate it with this virtual folder, granting the permissions required to upload files. This MFT user will be shared with the source system.

- Azure_Blob_Storage_tnk – A VFS pointing to Azure Blob Storage.

- AWS_S3_tnk – A VFS pointing to Amazon S3.

- googleCloudStorage_tnk – A VFS pointing to Google Cloud Storage.

Create VFS – PartnerAvfs

Go to Virtual folders in the left bar and click on the + icon to create a new VFS.

Give the VFS a name.

On the next screen, select Partner as No Partner and Location as “Configure with default Virtual folder location” (as this will be the local webMethods.io MFT folder).

Click on Browse.

It will show all the folders already created within webMethods.io MFT storage.

I will select tnkdemo for this.

Now, under Permission, we will create a user to associate with this VFS.

Click on the + icon on the right side under Permission.

Create the user and click on Add to User List.

Then click on Create.

Next, we can grant specific access to this user. This access applies only to this VFS. Then click on Save to create the VFS.

4) Before sharing this SFTP user and password with the source system, we can test the credentials using any SFTP client tool or the MFT web client (the HTTPS port in the listeners).

After logging in, the user can see all the virtual folders they have access to. We pointed this VFS to the local tnkdemo folder, and that folder contained four subfolders: incoming, working, completion, and error.

5) Now we will create the VFS for Azure Blob Storage, the Amazon S3 bucket, and Google Cloud Storage, following the same process. The only change is that each of these points to a remote location instead of a local folder. We do not need to add any users to these VFS, because we will use them only within MFT as target locations for the encrypted file.

Here is the configuration for AWS_S3_tnk.

For each remote VFS, we can test the connection before saving.

Here is the configuration for Azure_Blob_Storage_tnk.

Here is the configuration for googleCloudStorage_tnk.

6) Now we will create an MFT event (action). There are two types of actions: post-processing and scheduled. A post-processing action is triggered when a file is uploaded to the source folder, whereas a scheduled action runs on a schedule that we define.

For this use case, we want to transfer the file as soon as it is uploaded to the source folder, so we will create a post-processing event.

Click on Add.

This opens the MFT event editor.

Then give the post-processing event a name and description.

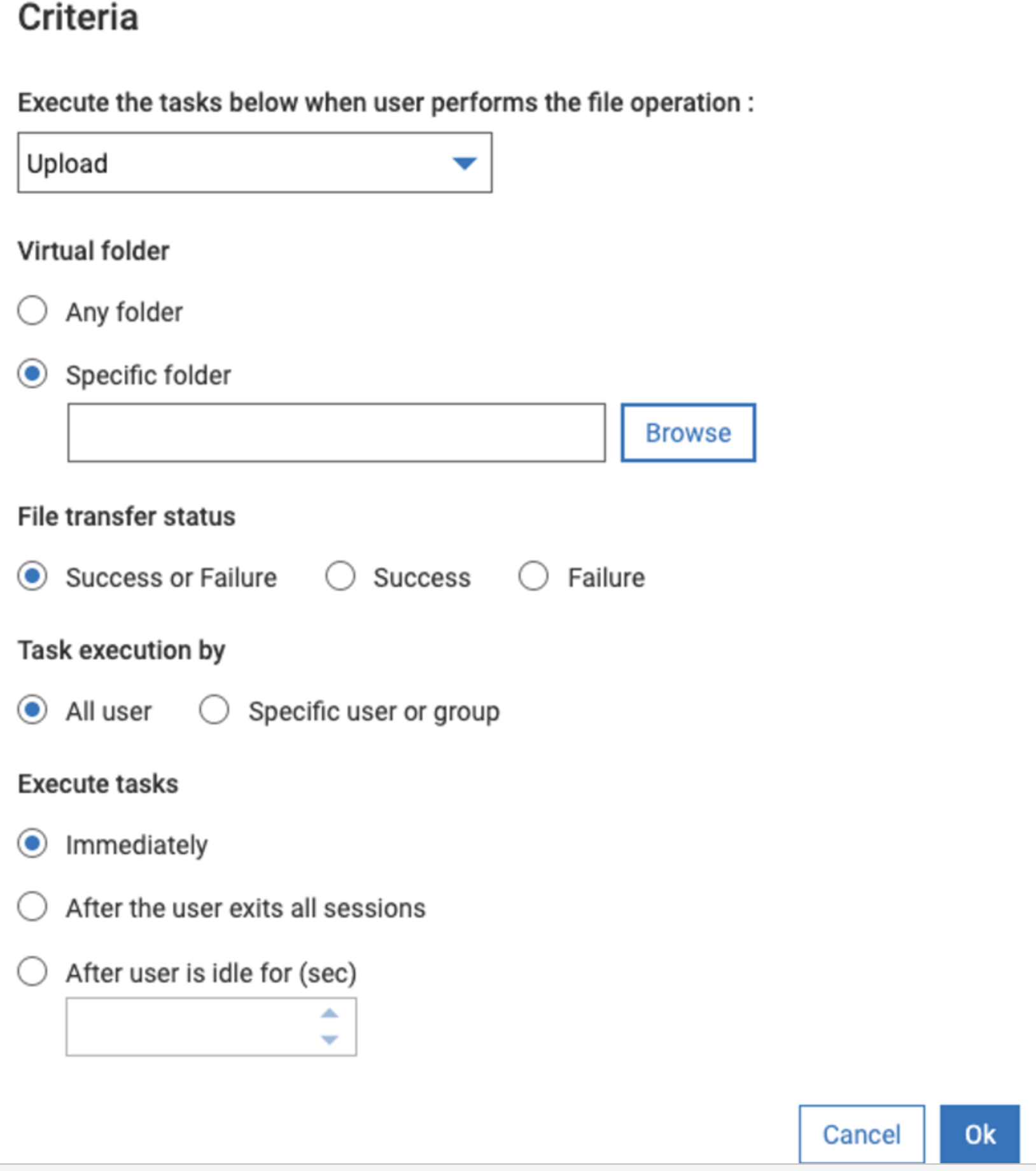

First, we need to select the criteria, which define the condition that triggers this post-processing action.

Click on Criteria on the above screen.

We want to select the incoming folder of the PartnerAvfs VFS.

Click on Browse and select the VFS for partner A.

Within that VFS, we want to monitor the incoming subfolder, so we add /incoming after the VFS name.
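To make the criteria step more concrete, here is a minimal Python sketch of the idea: an uploaded file triggers the event only if it lands in the monitored folder. This is an illustration, not the MFT implementation. The function name `matches_criteria` is hypothetical, and the sketch assumes that only files placed directly in the folder (not in nested subfolders) match, which may differ from how the product evaluates criteria.

```python
from pathlib import PurePosixPath


def matches_criteria(uploaded_path: str, monitored_folder: str) -> bool:
    """Return True when the uploaded file sits directly in the monitored folder.

    Assumption: nested subfolders do not match. The real product may behave differently.
    """
    uploaded = PurePosixPath(uploaded_path)
    monitored = PurePosixPath(monitored_folder)  # a trailing slash is normalized away
    return uploaded.parent == monitored


# A file uploaded to the incoming folder matches, one in the working folder does not.
print(matches_criteria("/PartnerAvfs/incoming/orders.csv", "/PartnerAvfs/incoming/"))
print(matches_criteria("/PartnerAvfs/working/orders.csv", "/PartnerAvfs/incoming/"))
```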

We can then add multiple tasks one by one and orchestrate this event with as many steps as needed.

Here are the available task types.

First, we will move the file from the incoming folder to the working folder, so select Move from the task list.

Because we are moving from incoming to working, the target is a virtual folder. Select Virtual folder, browse to the VFS, and add the subfolder /working after the VFS name.

For each task, the advanced section offers many other options, such as renaming the file or configuring a retry mechanism. We will not change anything under the advanced configuration for this use case.

The next task we will add encrypts the file.

To encrypt the file, we need a PGP key, which I have already uploaded under Certificates.

In the event, click on the + icon after the Move step and select Encrypt.

Then select the PGP key from the drop-down.

Next, copy the encrypted file to AWS and select Execute error task. If this step fails, the error task is called.

After that, copy the file to Azure.

Finally, copy it to Google Cloud.

In the error task, we can add a Send Email step so that an email is sent whenever an error occurs. We can also add further tasks under the error task, such as moving the file to a separate error location.

For the email, we can select variables and templates to format the email body.

Then activate and save the post-processing event.
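The event we just configured is essentially an ordered list of tasks with an error handler. The Python sketch below simulates that flow to show the orchestration logic only. Everything in it is hypothetical: the `Task` class, the `run_event` function, and the stand-in tasks are not MFT APIs, the "encrypt" stand-in only renames the file and performs no encryption, and stopping after the first failed task is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Task:
    name: str
    run: Callable[[str], str]         # takes the current file path, returns the new one
    execute_error_task: bool = False  # mirrors the "Execute error task" option


def run_event(path: str, tasks: List[Task],
              error_task: Callable[[str, str, Exception], None]) -> List[Tuple[str, str]]:
    """Run tasks in order and return a (task name, status) log."""
    log = []
    for task in tasks:
        try:
            path = task.run(path)
            log.append((task.name, "success"))
        except Exception as exc:
            log.append((task.name, "failed"))
            if task.execute_error_task:
                error_task(task.name, path, exc)
            break  # assumption: the event stops at the first failed task
    return log


def move_to_working(path: str) -> str:
    return path.replace("/incoming/", "/working/")


def fake_encrypt(path: str) -> str:
    return path + ".pgp"  # placeholder only: renames the file, no real PGP encryption


def copy_to(target: str) -> Callable[[str], str]:
    def _copy(path: str) -> str:
        if target == "googleCloudStorage_tnk":  # simulate an unavailable target
            raise ConnectionError("account expired")
        return path
    return _copy


sent_emails: List[str] = []


def send_error_email(task_name: str, path: str, exc: Exception) -> None:
    sent_emails.append(f"{task_name}: {exc}")


tasks = [
    Task("Move", move_to_working),
    Task("Encrypt", fake_encrypt),
    Task("Copy to AWS_S3_tnk", copy_to("AWS_S3_tnk"), execute_error_task=True),
    Task("Copy to Azure_Blob_Storage_tnk", copy_to("Azure_Blob_Storage_tnk"), execute_error_task=True),
    Task("Copy to googleCloudStorage_tnk", copy_to("googleCloudStorage_tnk"), execute_error_task=True),
]

log = run_event("/PartnerAvfs/incoming/orders.csv", tasks, send_error_email)
for entry in log:
    print(entry)
print(sent_emails)
```

Keeping the error handler separate from the task list mirrors the editor, where the error task is defined once and each step opts in to it.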

7) Now we can test this post-processing event.

The event is triggered whenever a new file is uploaded to the PartnerAvfs/incoming folder.

We can use any SFTP client or send the file programmatically. For now, we will use the MFT web client to upload a file.

Log in to the web client with the user we associated with PartnerAvfs.

Click on Upload.

Select the file to upload.

Click on Start upload.
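As an alternative to the web client, the file can be sent programmatically. The Python sketch below drives the OpenSSH `sftp` command in batch mode (`-b`) using only the standard library. It rests on several assumptions: the OpenSSH client is installed, the MFT user can authenticate non-interactively (for example with an SSH key, since batch mode does not prompt for a password), and the host name `mft.example.com`, the user `partnerA_user`, and the remote path `/PartnerAvfs/incoming` are placeholders rather than values from this setup.

```python
import os
import subprocess
import tempfile
from typing import List


def build_batch(local_path: str, remote_dir: str) -> str:
    """Return sftp batch commands that upload one file into remote_dir."""
    # Paths are double-quoted so that names containing spaces still work.
    return f'cd "{remote_dir}"\nput "{local_path}"\nbye\n'


def build_command(user: str, host: str, port: int, batch_file: str) -> List[str]:
    """Return the sftp command line: -P sets the port, -b the batch file."""
    return ["sftp", "-P", str(port), "-b", batch_file, f"{user}@{host}"]


def upload(local_path: str, remote_dir: str, user: str, host: str, port: int = 22) -> int:
    """Upload a file via sftp batch mode and return the sftp exit code."""
    with tempfile.NamedTemporaryFile("w", suffix=".sftp", delete=False) as handle:
        handle.write(build_batch(local_path, remote_dir))
    try:
        return subprocess.run(build_command(user, host, port, handle.name)).returncode
    finally:
        os.unlink(handle.name)  # remove the temporary batch file


# Show the command that would run (placeholder host and user).
print(build_command("partnerA_user", "mft.example.com", 22, "upload.sftp"))
```

Calling `upload("orders.csv", "/PartnerAvfs/incoming", "partnerA_user", "mft.example.com")` would then perform the transfer. A non-zero return code means `sftp` reported a failure.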

8) Monitoring logs

There are two logs: the transaction log and the action log.

In the transaction log, we can see all file transactions.

It also provides client details.

In the action log, we can see detailed logs for the post-processing event.

If we click on that event log, we can see detailed logs for each step.

In this case, the file was encrypted successfully and delivered to AWS S3 and Azure Storage. Delivery to Google Cloud Storage failed because the account had expired, which triggered the error action. The error email includes all relevant details of the error message along with the transaction ID.

The error was also sent as an error email.
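To illustrate how variables shape an error email like the one above, here is a small Python sketch using `string.Template`. It is only an analogy for the concept: the variable names (`event_name`, `task_name`, `transaction_id`, `error_message`) and the sample values are made up for this example and are not the variable names or syntax that webMethods.io MFT provides.

```python
from string import Template

# Hypothetical template; the placeholders are illustrative, not MFT variables.
ERROR_EMAIL = Template(
    "Post-processing event: $event_name\n"
    "Failed task: $task_name\n"
    "Transaction ID: $transaction_id\n"
    "Error: $error_message\n"
)


def render_error_email(**values: str) -> str:
    """Fill the template; raises KeyError if a placeholder has no value."""
    return ERROR_EMAIL.substitute(**values)


body = render_error_email(
    event_name="sample_event",          # made-up value
    task_name="Copy to googleCloudStorage_tnk",
    transaction_id="TX-0001",           # made-up value
    error_message="account expired",
)
print(body)
```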

9) Verify the PGP-encrypted file in the AWS S3 bucket.

The file was also delivered to Azure Blob Storage successfully.
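For a quick sanity check after downloading the object from the bucket, the Python sketch below tests whether the first bytes look like an OpenPGP encrypted message. It is a heuristic based on the OpenPGP packet format (RFC 4880), not a validation of the file: it accepts either the ASCII-armor header or a first packet whose tag is one of the encryption-related tags (1, 3, 9, 18). The function names are my own, and only decrypting with the private key proves the file is intact.

```python
from typing import Optional

ARMOR_HEADER = b"-----BEGIN PGP MESSAGE-----"
# OpenPGP packet tags that normally open an encrypted message:
# 1 = public-key encrypted session key, 3 = symmetric-key encrypted session key,
# 9 = symmetrically encrypted data, 18 = encrypted + integrity-protected data.
ENCRYPTED_PACKET_TAGS = {1, 3, 9, 18}


def first_packet_tag(data: bytes) -> Optional[int]:
    """Return the tag of the first OpenPGP packet, or None if the header is invalid."""
    if not data or not data[0] & 0x80:  # bit 7 must be set in every packet header
        return None
    first = data[0]
    if first & 0x40:                    # new format: tag is in bits 5-0
        return first & 0x3F
    return (first >> 2) & 0x0F          # old format: tag is in bits 5-2


def looks_pgp_encrypted(data: bytes) -> bool:
    """Heuristic check on the leading bytes of a file."""
    if data.lstrip().startswith(ARMOR_HEADER):
        return True
    return first_packet_tag(data) in ENCRYPTED_PACKET_TAGS


# Plain CSV content is rejected, an old-format tag-1 packet header is accepted.
print(looks_pgp_encrypted(b"order_id,amount\n"))
print(looks_pgp_encrypted(bytes([0x85, 0x01, 0x0C])))
```

In practice you would pass the first few bytes read from the downloaded file, for example `looks_pgp_encrypted(open(path, "rb").read(64))`.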

Thank you.