NOTE: This is the open preprint of the article.

1. Introduction

The problem of the “unknown” exists in almost every AI system. In the context of AI, the word “unknown” has several meanings: unknown data types, wrong data formats, unknown classes of data, insufficient data, and so on. Within the scope of this article, all of these meanings are covered by the term “unknown data”.

Unknown data leads to significant quality and accuracy losses for 4 of 5 main machine learning (ML) tasks: classification, clustering, regression and dimensionality reduction. The problem of unknown data is especially important for applied AI in the development of new molecules and materials. The cost of mistakes here is measured in billions of dollars and in a lack of new materials and substances, for example for healthcare. This matters all the more in COVID times.

One specific feature of AI projects in the development of new materials is a lack of data. Thus, solving the task directly with artificial neural networks (ANN) and deep machine learning is not always possible. Such systems need complicated preprocessing modules that cannot simply be fitted to unknown data types.

The problem of unknown data has been addressed in such AI/ML directions as lifelong learning (LL), generative adversarial networks (GAN) and unsupervised learning. However, these directions share several general drawbacks:

- Need significant volumes of data and computation power.

- Need significant time frames for training and for scaling to new data types and classes.

- Inability to handle new unknown data types/classes without updating.

These drawbacks make the mentioned solutions insufficient for a major part of custom AI projects for new material development (NMD).

2. Problem

APRO Software faced the “unknown data” problem during the development of an AI solution for NMD for healthcare needs. An additional challenge in this project was the requirement that the system fit itself automatically to new data, which in many cases can be considered “unknown”. The challenge had the following manifestations:

- Insufficiency of data.

- Variations in the methods of obtaining data.

- A wide range of material descriptions and characteristics.

- Ambiguity of the criteria for assessing the required characteristics.

- Closeness of the features of irrelevant materials to those of relevant ones.

The mentioned aspects and the automatic fitting requirement made the project quite complicated to implement, but a solution was found.

3. Solution

The solution is based on the concept of lifelong learning in combination with supervised learning and classical statistical methods. It is implemented as a “re-training AI Core layer” at the system (software) level.

The solution is AI-based (ANNs), with partial human control over the fitting process of the most important parts of the AI system. It has the following features for controlling the “unknown data” problem:

- AI-based quality control sub-system;

- AI-based checks for unknown data cases (for example, if data contain information about the wrong subject areas);

- AI-based recognition of unknown features and adjustment of the processing according to this information (for example, it can detect properties of unknown types and adapt the meta-data generation and the material assessment process accordingly);

- AI-based use of new data for fitting (re-training) and updating the system to ensure better quality and accuracy of predictions (NMD).

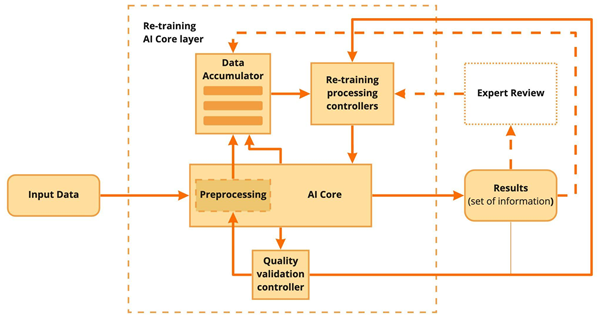

These features fully cover the requirements. The descriptions of the used approach are presented below. The re-training AI core layer is a wrapper around the AI core of the system. It automatically controls processes of fitting and unknown data cases (Image 1).

Image 1. - Re-training AI core layer

3.1 AI quality control sub-system

The suggested method includes an AI-based quality control sub-system, which is based on ANNs for image processing and classical computer vision (CV) normalization methods. The quality sub-system is part of the Quality validation controller. It controls all the processing steps inside the AI Core and assesses the quality of each sub-module using specially developed metrics. It also calculates the quality and accuracy of the entire system.

The controller operates a set of flexible rules that decide whether to continue processing the input data or to automatically adjust the system and repeat the processing. Decisions for each AI Core module are made independently.

The controller works on the principle of a negative feedback loop (NFL) and is based on convolutional neural networks (CNN). If the controller decides that an adjustment is needed, it acts on the appropriate module.
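The adjust-and-repeat behaviour of the controller can be illustrated with a minimal sketch. It is not the project's implementation: the `Module` class, its single tunable `gain` parameter, the `target`, `tolerance` and `step` values, and the toy quality function are all assumptions made for illustration, and a plain proportional correction stands in for the CNN-based assessment described in the article.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Module:
    """Hypothetical stand-in for one AI Core sub-module."""
    name: str
    gain: float  # the only tunable parameter in this toy example
    process: Callable[[float, float], float]  # (sample, gain) -> quality in [0, 1]


def control_module(module: Module, sample: float, target: float = 0.9,
                   tolerance: float = 0.01, step: float = 0.5,
                   max_rounds: int = 20) -> Tuple[float, int]:
    """Process a sample, then adjust the module and repeat until quality is acceptable.

    Returns the final quality and the number of adjustment rounds performed.
    """
    quality = module.process(sample, module.gain)
    rounds = 0
    while target - quality > tolerance and rounds < max_rounds:
        error = target - quality      # deviation of measured quality from the target
        module.gain += step * error   # negative feedback: the correction shrinks the error
        quality = module.process(sample, module.gain)
        rounds += 1
    return quality, rounds


def toy_quality(sample: float, gain: float) -> float:
    """Assumed quality metric: grows with gain, capped at 1.0."""
    return min(1.0, sample * gain)


if __name__ == "__main__":
    # Each module is controlled independently of the others.
    modules = [Module("preprocessing", 0.5, toy_quality),
               Module("assessment", 1.0, toy_quality)]
    for m in modules:
        print(m.name, control_module(m, sample=1.0))
```

The `max_rounds` limit is a design choice of this sketch: it guarantees that the loop terminates even when a module cannot reach the target quality.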

The novelty of this approach is that the adjustment process is recursive and affects not only classical ML algorithms but also the ANNs.

3.2 AI checks for unknown data cases

AI-based checks for unknown data cases are part of the Preprocessing module (see Image 1). This module handles 2 main cases within the scope of the “unknown data” problem:

- Intelligent checking and rejection of wrong data formats and data samples.

- Intelligent fitting of insufficient data samples to an acceptable state.

Examples of totally wrong data include disallowed data formats (including wrong file types) and data samples that represent a wrong subject area. Samples of this first kind should be excluded from both processing and re-training.

Examples of insufficient data samples include data provided by hardware modules that are not fully supported. Such data should be transformed into a state that can be used for processing.

Cases 1 and 2 are interrelated, but in this article they are presented as independent cases.

3.2.1 Intelligent checking and rejection

The module uses simple algorithmic checks for cases such as wrong data formats, and ANN-based ML approaches for detecting wrong subject areas. A simplified structure of the module is presented in Image 2.
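A two-stage check of this kind can be sketched as follows. This is an illustration under stated assumptions, not the module's actual code: the allowed formats (PNG and JPEG, recognised by their standard file signatures), the `threshold` value and the `in_domain_score` callable, which stands in for the ANN-based subject-area model, are all hypothetical.

```python
from typing import Callable, Optional, Tuple

# Standard file signatures ("magic bytes"). Which formats are allowed is an assumption.
ALLOWED_SIGNATURES = {
    "png": b"\x89PNG\r\n\x1a\n",
    "jpeg": b"\xff\xd8\xff",
}


def detect_format(payload: bytes) -> Optional[str]:
    """Simple algorithmic check: return the format name, or None if not allowed."""
    for name, signature in ALLOWED_SIGNATURES.items():
        if payload.startswith(signature):
            return name
    return None


def accept_sample(payload: bytes,
                  in_domain_score: Callable[[bytes], float],
                  threshold: float = 0.5) -> Tuple[bool, str]:
    """Reject wrong formats first, then samples from a wrong subject area.

    `in_domain_score` is a placeholder for a trained model that returns the
    estimated probability that the sample belongs to the expected subject area.
    """
    if detect_format(payload) is None:
        return False, "unsupported format"
    if in_domain_score(payload) < threshold:
        return False, "wrong subject area"
    return True, "accepted"


if __name__ == "__main__":
    png_sample = b"\x89PNG\r\n\x1a\n" + b"...image bytes..."
    print(accept_sample(b"%PDF-1.7", lambda p: 1.0))
    print(accept_sample(png_sample, lambda p: 0.1))
    print(accept_sample(png_sample, lambda p: 0.9))
```

Running the cheap format check before the model-based check means that files of the wrong type never reach the more expensive classifier.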
