We are going to see how to use watsonx.data to query across file formats and different sources. The files that I will use are BOOKS.parquet which will be in object storage which is managed through an Iceberg catalog; BORROWS is a table in PostgreSQL relational database. Ahana Presto engine can be used to query across the catalogs.

Note: If you are using IBM Cloud to explore watsonx.data, there is a $1500 USD free trial coupon available. To get started and explore it, use the coupon listed in your catalog listing of the service.

Here are some of the key components of IBM Watsonx.data or Data Lakehouse on IBM Cloud -

I. Infrastructure Manager

Architect Your Own Lakehouse using Watsonx.data on IBM Cloud

One unique feature and major differentiator of watsonx.data is that it supports several opensource formats in the lakehouse world. It gives flexibility to architect your own lakehouse. Instead of limiting it to IBM databases/warehouses and lakehouse engines, watsonx.data lets us leverage storage, catalog and engine of our choice. Here are the major components of lakehouse architecture and how this can be setup on IBM Cloud -

1. Provision an instance of Watsonx.data from IBM Cloud Catalog - https://cloud.ibm.com/catalog

2. Storage: Lakehouse requires an object storage where it can store your raw files. With watsonx.data, you can provision a managed one or bring your own buckets.

3. Catalog: Lakehouse requires a metadata catalog using which your lakehouse table metadata is managed. Watsonx.data on IBM Cloud has several catalog options - Apache Iceberg(widely adopted in the lakehouse space), Apache Hudi and Apache Hive.

4. Engine: Lakehouse needs a query engine which can work with your iceberg/hudi/hive tables. Watsonx.data uses Ahana Presto as engine to query tables, engines like DB2/Netezza and Spark are also supported.

5. Databases: Data from relational and big data can be connected to lakehouse. Watsonx.data supports structured, semi-structured and unstructured databases. Some of the supported databases are - DB2, Netezza, Kafka, Mongo, Postgres etc. However, to setup your lakehouse, database is not a mandatory option to start with, this can be setup later.

- The core components of watsonx.data infrastructure are engines which process the data, catalogs which manage the metadata and where to find that data/how to read that data, the actual storage/relational data sources where the data is stored. Everytime a new database/storage is added, a catalog will also get added.

- For the above mentioned data, once I add the respective data sources, here's how the infrastructure would look like.

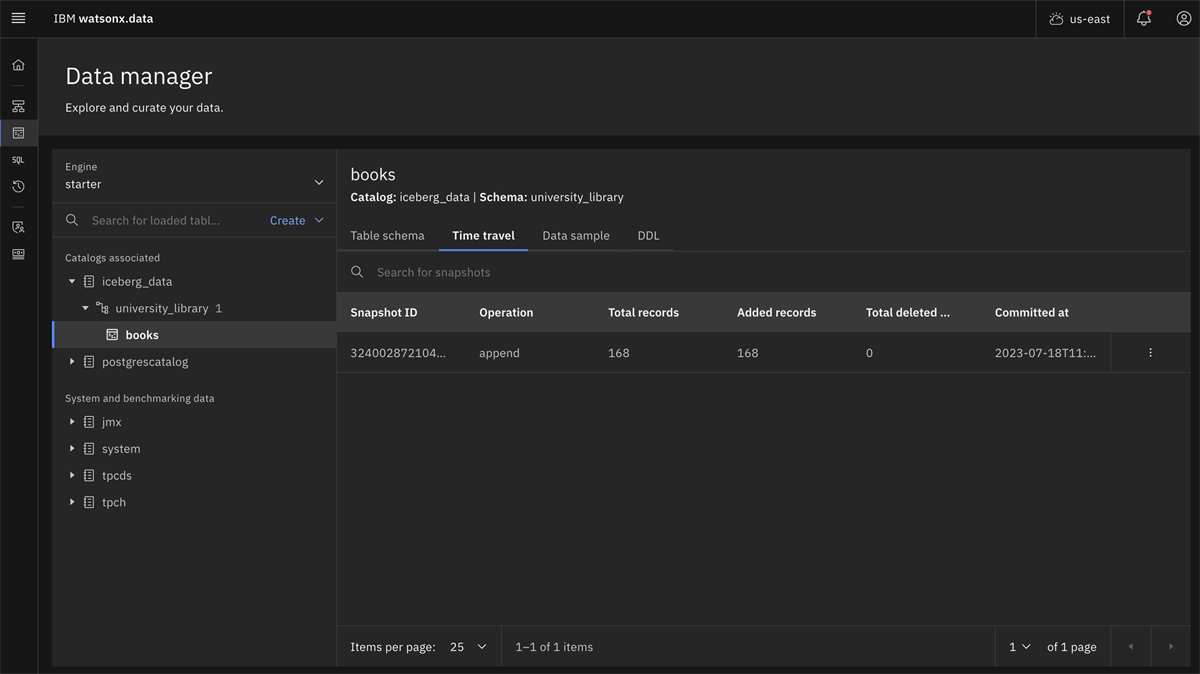

II. Data Manager

1. Catalogs: All the catalogs that are created added to the infrastructure manager will be listed in the data manager along with their respective schemas, tables and data samples.

2. Time Travel: This is available only with Iceberg catalogs, it's a feature with which the history of operations performed on the tables is captured. This feature is not available with other catalogs.

3. SQL: Once the data is added to the platform, data can be queried using the Presto engine using Presto SQL. For example, if you have to query across a Iceberg table which has parquet files and a relational table in postgreSQL, using presto engine, they can be queried. Here are some of the sample queries -

i. I have books.parquet in Iceberg table which looks something like the following when queried using Presto

ii. I have borrows table in postgresql which looks like the following when queried with Presto

iii. If these two have to be joined, a parquet file and a relational table, here's how it can be done using lakehouse.

So, that's how tables with different file formats can be joined and managed with lakehouse.