When you manage large volumes of logs in IBM Cloud, keeping costs under control while preserving visibility can quickly become challenging. IBM Cloud Logs offers a flexible tiering system and a built-in TCO (Total Cost of Ownership) Optimizer that helps you balance performance and cost by routing logs to the appropriate storage tiers.

However, many users find TCO policies confusing to configure or troubleshoot, which can lead to misrouted logs, missing alerts, or unexpected charges.

This article explains:

- How IBM Cloud Logs tiers work and how to choose the right one.

- How to create and apply TCO policies correctly.

- Common configuration mistakes that cause missing logs or alerts.

- How to verify and debug your TCO Optimizer setup using DataPrime queries.

- Best practices to ensure cost-efficient and reliable log management.

When working with IBM Cloud Logs, you can categorize your logs into different tiers based on your performance and cost requirements. Each tier offers distinct features, visibility options, and pricing, giving you full control over how your log data is stored, analyzed, and alerted on.

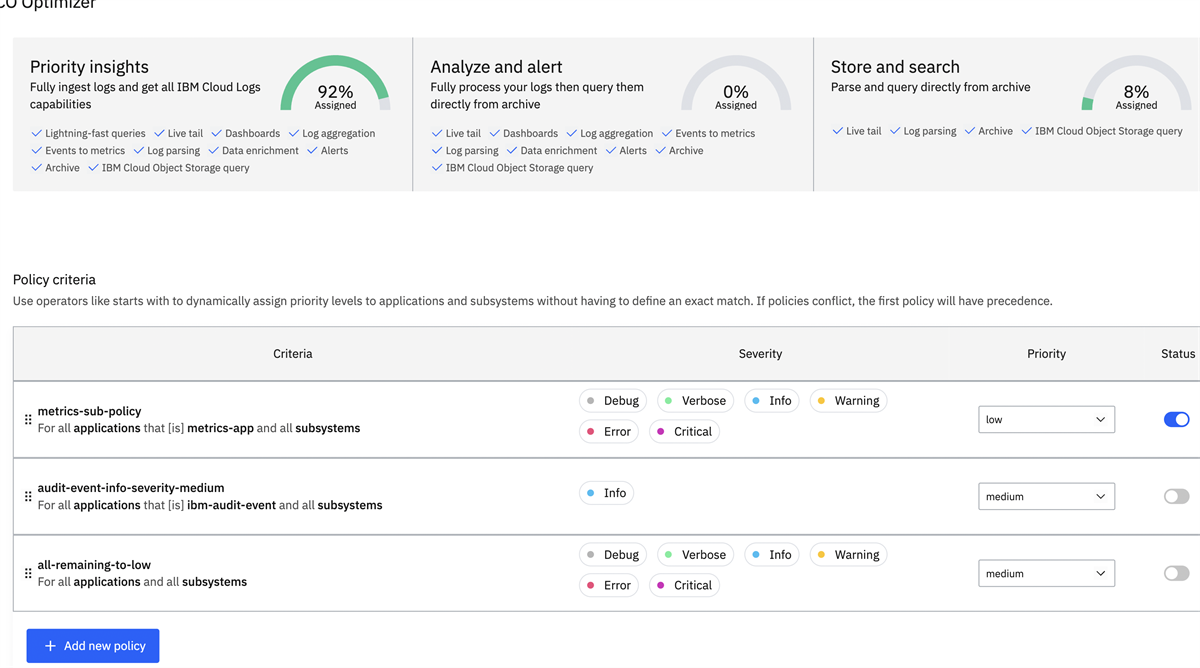

Before assigning logs to a specific tier, it's essential to understand the capabilities and limitations of each. To simplify this process, IBM Cloud provides the TCO Optimizer, a tool that helps you manage, route, and optimize logs across tiers.

Overview of Log Tiers

IBM Cloud Logs tiers are designed to help you optimize cost and performance according to your logging needs. Each tier provides its own capabilities:

The Priority Insights tier, also known as the High Tier, is designed for logs that require immediate access and the full set of IBM Cloud Logs analysis capabilities. It is best suited for high-severity or business-critical logs that need to be queried with minimal latency, monitored through alerts, visualized in dashboards, or enriched with contextual data to support rapid troubleshooting and data-driven decision-making.

Logs in this tier are accessible through the Priority Insights tab on the Logs Explorer page. Because this tier provides the complete feature set, it is also the most expensive of the three.

Note: By default, all logs are initially routed to the Priority Insights tier, ensuring that no critical information is lost during ingestion or early analysis. However, this default behavior can lead to unintended costs, because every new log source is billed at the highest tier's rate until a policy routes it elsewhere.

It is therefore important to regularly review and adjust your TCO policies whenever new logs or log sources are added. Log optimization is an ongoing process that helps maintain the right balance between visibility and cost efficiency.

The Analyze and Alert tier, also known as the Medium Tier, offers a balance between functionality and cost. Logs in this tier can be viewed in the All Logs tab of the Logs Explorer page, visualized through dashboards, monitored using alerts, and queried from archives.

To use this tier, you must have an IBM Cloud Object Storage (COS) bucket configured for your IBM Cloud Logs instance. This tier is best suited for logs that require periodic analysis or ongoing monitoring. Because logs in this tier are retrieved from COS archives, queries may be slightly slower than in the High Tier. In exchange, it is a more cost-efficient option for logs that do not demand real-time visibility.

The Store and Search tier, also known as the Low Tier, offers a cost-optimized solution for retaining less critical log data. Logs in this tier are visible in the All Logs tab; however, visualization through dashboards and monitoring through alerts are not available.

This tier is ideal for logs that need to be retained for compliance, auditing, or post-processing purposes, but do not require real-time analysis. Logs can still be queried from archives when needed, making it a reliable choice for long-term storage and cost-efficient log management.

To use this tier, you must also have an IBM Cloud Object Storage (COS) bucket configured for your IBM Cloud Logs instance. Because of its more limited feature set, the Low Tier costs slightly less than the Medium Tier, making it suitable for cost-sensitive workloads.

The three log tiers, Priority Insights (High), Analyze and Alert (Medium), and Store and Search (Low), are inclusive and cumulative: each tier includes the capabilities of the tier below it and adds more functionality, visibility, and performance, at a higher cost. This structure lets you balance cost against analytical depth by assigning each log source to the tier that best fits its criticality and usage.

Although the capabilities are cumulative, the pricing is not. Each tier has its own independent rate, and when logs are assigned to a higher tier (for example, Priority Insights), you pay only that tier's rate, not the rates of the lower tiers as well.
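The cumulative-capabilities, independent-pricing model described above can be sketched in Python. This is a minimal illustration under stated assumptions, not an IBM API: the feature labels (`search_from_archive`, `dashboards`, `alerts`, `priority_insights_search`) and the per-GB rates are hypothetical placeholders, so consult IBM Cloud pricing for real figures. The hypothetical `cheapest_tier` helper picks the lowest-cost tier that still covers the features a log source needs.

```python
from enum import Enum


class Tier(Enum):
    """The three IBM Cloud Logs tiers, from least to most capable."""
    STORE_AND_SEARCH = "low"
    ANALYZE_AND_ALERT = "medium"
    PRIORITY_INSIGHTS = "high"


# Capabilities are cumulative: each tier contains everything the tier below offers.
# Feature names are illustrative labels only, not identifiers from any IBM API.
_LOW = frozenset({"search_from_archive"})
_MEDIUM = _LOW | {"dashboards", "alerts"}
_HIGH = _MEDIUM | {"priority_insights_search"}

CAPABILITIES = {
    Tier.STORE_AND_SEARCH: _LOW,
    Tier.ANALYZE_AND_ALERT: _MEDIUM,
    Tier.PRIORITY_INSIGHTS: _HIGH,
}

# HYPOTHETICAL per-GB rates for illustration; real prices differ.
RATE_PER_GB = {
    Tier.STORE_AND_SEARCH: 1.0,
    Tier.ANALYZE_AND_ALERT: 2.0,
    Tier.PRIORITY_INSIGHTS: 5.0,
}


def cost(tier: Tier, gb: float) -> float:
    """Pricing is not cumulative: only the assigned tier's rate is charged."""
    return RATE_PER_GB[tier] * gb


def cheapest_tier(required: set[str]) -> Tier:
    """Return the lowest-cost tier whose capabilities cover every required feature."""
    candidates = [t for t in Tier if required <= CAPABILITIES[t]]
    if not candidates:
        raise ValueError(f"no tier offers: {sorted(required)}")
    return min(candidates, key=lambda t: RATE_PER_GB[t])


print(cheapest_tier({"alerts"}))         # Tier.ANALYZE_AND_ALERT
print(cost(Tier.PRIORITY_INSIGHTS, 10))  # 50.0
```

In this sketch, a log source that needs alerts but not Priority Insights search resolves to Analyze and Alert, and 10 GB in Priority Insights is billed at that tier's rate alone.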

Creating a TCO Policy

To create a TCO policy:

1. Navigate to:

Data Pipeline → TCO Optimizer

This opens the TCO Optimizer page, where you can view your current log flow and the features associated with each tier.

2. Create a New Policy:

Click Add New Policy.

For example, to send all logs from metrics-app (including all subsystems and severities) to a lower tier, set the policy's application filter to metrics-app, leave the subsystem and severity filters matching all values, and select the target tier.

3. Apply and Order the Policy:

Click Apply once you’ve configured your policy.

Remember: Policy order matters. If multiple policies apply to the same application or subsystem, the first matching policy in the list takes precedence. You can drag and drop policies to reorder them and ensure the intended one is evaluated first.

Tip: Always review your policy order after creating or updating a rule. For example, if you have a general policy that routes all logs to the Medium Tier and a more specific policy for a single application that should go to the Low Tier, make sure the specific policy appears above the general one. Otherwise, the general rule will override it, and your logs won’t be routed as expected.

To avoid confusion, it’s a good practice to:

- Group related policies logically (e.g., by application or environment).

- Add clear, descriptive names to each policy to make maintenance easier.

- Periodically review your policy list, especially after adding new log sources.

4. Enable the Policy:

Make sure to toggle the policy ON after creation.

Note: TCO policies apply only to logs ingested after the policy is enabled. Existing logs remain in their current tiers.
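The first-match ordering rule, together with the default routing to Priority Insights, can be modeled with a short Python sketch. It is a simplified, hypothetical model rather than the TCO Optimizer's actual implementation: `Policy` and `route` are illustrative names, and filters here are exact matches (or `None` for "all values"), whereas the real policy editor may offer other matching options.

```python
from dataclasses import dataclass
from typing import Optional

DEFAULT_TIER = "high"  # unmatched logs go to Priority Insights by default


@dataclass(frozen=True)
class Policy:
    """Hypothetical, simplified TCO policy. A filter set to None matches all values."""
    name: str
    tier: str                          # "high", "medium", or "low"
    application: Optional[str] = None
    subsystem: Optional[str] = None
    severity: Optional[str] = None
    enabled: bool = True               # a policy toggled OFF never matches

    def matches(self, app: str, subsystem: str, severity: str) -> bool:
        return (
            self.enabled
            and self.application in (None, app)
            and self.subsystem in (None, subsystem)
            and self.severity in (None, severity)
        )


def route(policies: list[Policy], app: str, subsystem: str, severity: str) -> str:
    """Return the tier of the FIRST matching policy; later matches are ignored."""
    for policy in policies:
        if policy.matches(app, subsystem, severity):
            return policy.tier
    return DEFAULT_TIER


# Usage: a general rule plus a more specific rule for one application.
general = Policy("all-to-medium", tier="medium")
specific = Policy("metrics-app-to-low", tier="low", application="metrics-app")

print(route([general, specific], "metrics-app", "api", "info"))  # medium: specific rule never reached
print(route([specific, general], "metrics-app", "api", "info"))  # low
print(route([specific], "billing-app", "api", "info"))           # high: no match, default tier
```

Placing `general` first reproduces the pitfall described in the tip on policy order: the general rule matches first, so the metrics-app logs end up in the Medium Tier instead of the Low Tier.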

To verify your policy, run the following DataPrime query, and make sure you select the correct application and subsystem filters in the left-hand filter panel:

```
choose $m.priorityclass
```

This query displays the priority class (tier) assigned to each log record, for example High, Medium, or Low. By applying the same filters used in your TCO policy (such as specific applications, subsystems, or severities), you can confirm whether logs are being routed to the intended tier. If the logs appear with the expected priority class, your TCO policy is working as configured. If no logs are returned even though the source has sent logs since the policy was enabled, your policy may be blocking or misrouting logs, and you should review your rules.

Note: Avoid running this query in the Priority Insights tab, as all logs displayed there will always belong to the High Tier. To validate your policy results accurately, always run the query in the All Logs tab.
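The interpretation rules above can be expressed as a small Python helper for checking query results offline. It assumes, hypothetically, that you have exported the rows returned by `choose $m.priorityclass` as dictionaries with a `priorityclass` key; that export shape and the `check_routing` name are stand-ins for illustration, not a documented IBM format or tool.

```python
from collections import Counter


def check_routing(rows: list[dict], expected_tier: str) -> str:
    """Interpret the rows returned by `choose $m.priorityclass` for one policy's filters.

    Assumes each exported row is a dict with a 'priorityclass' key (hypothetical shape).
    """
    if not rows:
        return "no logs returned: policy may be blocking or misrouting logs"
    # Normalize case so "Low" and "low" count as the same tier.
    counts = Counter(str(row.get("priorityclass", "")).lower() for row in rows)
    if set(counts) == {expected_tier.lower()}:
        return f"ok: all {len(rows)} logs are in the {expected_tier} tier"
    return f"mismatch: found tiers {dict(counts)}; check policy order and filters"
```

Because policies apply only to new logs, limit the query's time range to the period after the policy was enabled; otherwise, older logs that stayed in their previous tier will show up as mismatches.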

Refer to the screenshot below for an example of the query output showing how the active TCO policy assigns logs to their respective tiers.

Alternatively, run the following query:

```
create $d.priorityclass from $m.priorityclass
```

This query copies the `$m.priorityclass` metadata field into each log's user data as `$d.priorityclass`, so the tier assignment appears as a regular field on every log line. Check the screenshot below for a sample output illustrating how the results are displayed.
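To make the difference between the `$m` (metadata) and `$d` (data) keypaths concrete, the following Python sketch mimics what `create $d.priorityclass from $m.priorityclass` does to a single record. Representing a log as a dictionary with `metadata` and `data` keys is a simplifying assumption for illustration, not how IBM Cloud Logs stores logs internally, and `create_priorityclass` is a hypothetical name.

```python
import copy


def create_priorityclass(log: dict) -> dict:
    """Mimic `create $d.priorityclass from $m.priorityclass` on one simplified record.

    The record shape {'metadata': {...}, 'data': {...}} is an illustrative assumption.
    """
    # Work on a copy so the input is untouched, mirroring that a query
    # changes only its result set, not the stored log.
    result = copy.deepcopy(log)
    result["data"]["priorityclass"] = result["metadata"]["priorityclass"]
    return result


record = {"metadata": {"priorityclass": "low"}, "data": {"message": "disk usage ok"}}
print(create_priorityclass(record)["data"])
```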