SMB Meets Ceph: Configuring SMB Clustering for High Availability

Introduction

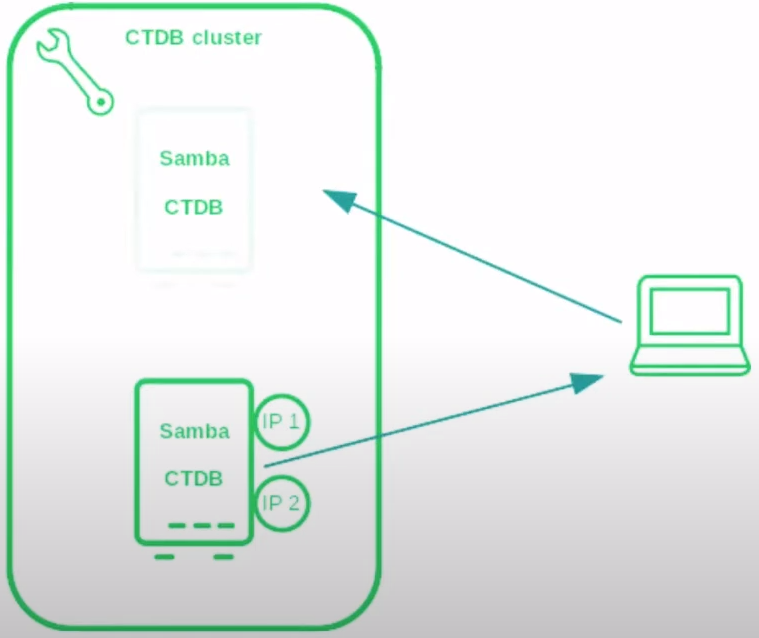

SMB (Server Message Block) is a widely used protocol for sharing files and printers across networks, primarily for Windows clients. To keep these services resilient and accessible in enterprise environments, clustering becomes essential. Samba CTDB (Cluster Trivial Database) addresses this need by managing Samba in a clustered setup, providing high availability through failover and IP address migration across nodes.

At the heart of this setup is TDB (Trivial Database) - a fast, lightweight key-value store originally developed for Samba. It efficiently handles small datasets, such as configuration and state data, making it ideal for high-performance clustered environments. CTDB uses TDB to coordinate cluster operations, ensuring that services can gracefully failover from one node to another with minimal disruption.

With the release of IBM Storage Ceph 8, the integration of SMB clustering has become even more streamlined through the introduction of the SMB MGR Module. This module allows administrators to deploy, manage, and monitor Samba services with built-in clustering support for high availability SMB access to CephFS. The SMB MGR module supports both CLI and UI-based management, as well as declarative and imperative workflows via simple YAML specs or commands.

Why Clustering?

In traditional file server deployments, a single point of failure can result in service downtime, data inaccessibility, and user disruption. This is especially problematic in enterprise environments where file services must be continuously available for business-critical applications. That’s where clustering plays a crucial role.

Clustering provides high availability (HA) by ensuring that services continue to function even when one or more components (like a server node) fail. In the context of Samba, clustering is made possible using CTDB (Cluster Trivial Database) - a mechanism that allows multiple Samba nodes to work together as a single logical unit.

Let’s consider a practical scenario:

A client is connected to a CTDB-backed SMB cluster consisting of two Samba nodes, each with a public IP address managed by CTDB. These public IP addresses are what clients use to access the SMB service.

Normal Operation: The client is connected to Node 1 using one of the CTDB-managed IP addresses. The file share is mounted, and file operations are ongoing.

Failure Event: Suddenly, Node 1 goes down (due to hardware failure, network issue, or maintenance).

Fail-over Mechanism: CTDB continuously monitors node health. Upon detecting the failure of Node 1, CTDB automatically migrates the affected IP address to Node 2.

Result: The client keeps using the same IP address, but the node serving that address is now Node 2. No manual reconnection or remounting is needed, and the share stays available with minimal interruption.
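
The failover sequence above can be pictured with a toy model. The sketch below is not CTDB's actual algorithm; it only illustrates the idea that public IP addresses hosted on a failed node move to a surviving node while clients keep using the same addresses. The function name `reassign_public_ips`, the node names, and the 192.0.2.x documentation addresses are assumptions made for illustration.

```python
from typing import Dict, List


def reassign_public_ips(
    assignments: Dict[str, str], healthy_nodes: List[str]
) -> Dict[str, str]:
    """Return a new IP -> node map in which every IP sits on a healthy node.

    IPs already on a healthy node stay where they are; IPs on a failed node
    move to the healthy node that currently hosts the fewest addresses.
    """
    if not healthy_nodes:
        raise RuntimeError("no healthy nodes left to host public IPs")

    healthy = set(healthy_nodes)
    # Count how many IPs each healthy node already hosts.
    load = {node: 0 for node in healthy_nodes}
    for node in assignments.values():
        if node in healthy:
            load[node] += 1

    result: Dict[str, str] = {}
    for ip, node in sorted(assignments.items()):
        if node in healthy:
            result[ip] = node
        else:
            # Move the orphaned IP to the least-loaded healthy node.
            target = min(healthy_nodes, key=lambda n: load[n])
            load[target] += 1
            result[ip] = target
    return result


# Normal operation: each node hosts one public IP.
before = {"192.0.2.10": "node1", "192.0.2.11": "node2"}

# Failure event: node1 goes down, so only node2 is healthy.
after = reassign_public_ips(before, healthy_nodes=["node2"])
print(after)
```

In this model the client that was using 192.0.2.10 still talks to the same address; only the node behind it has changed.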

Managing SMB Clustering

The SMB MGR module in Ceph offers multiple interfaces for managing clustered SMB services, providing flexibility to suit different operational preferences and automation needs. Users can choose from the following methods to configure, deploy, and control SMB clustering:

- Imperative Method: Use direct CLI commands via the ceph smb interface to perform actions such as creating clusters, adding nodes, configuring IPs, and managing shares.

- Declarative Method: Define SMB resources in YAML or JSON format and apply them using the ceph smb apply command. This approach is well suited to automation, version control, and infrastructure-as-code practices. It allows for consistent and repeatable SMB cluster deployments.

- Ceph Dashboard (UI): The Ceph Dashboard provides a graphical user interface for managing SMB services. Administrators can deploy and monitor clustered SMB nodes, assign virtual IPs, and manage shares, all through a web interface. This is especially useful for teams that prefer visual management over command-line tools.

Simple workflow for IBM Storage Ceph 8 SMB configuration with clustering

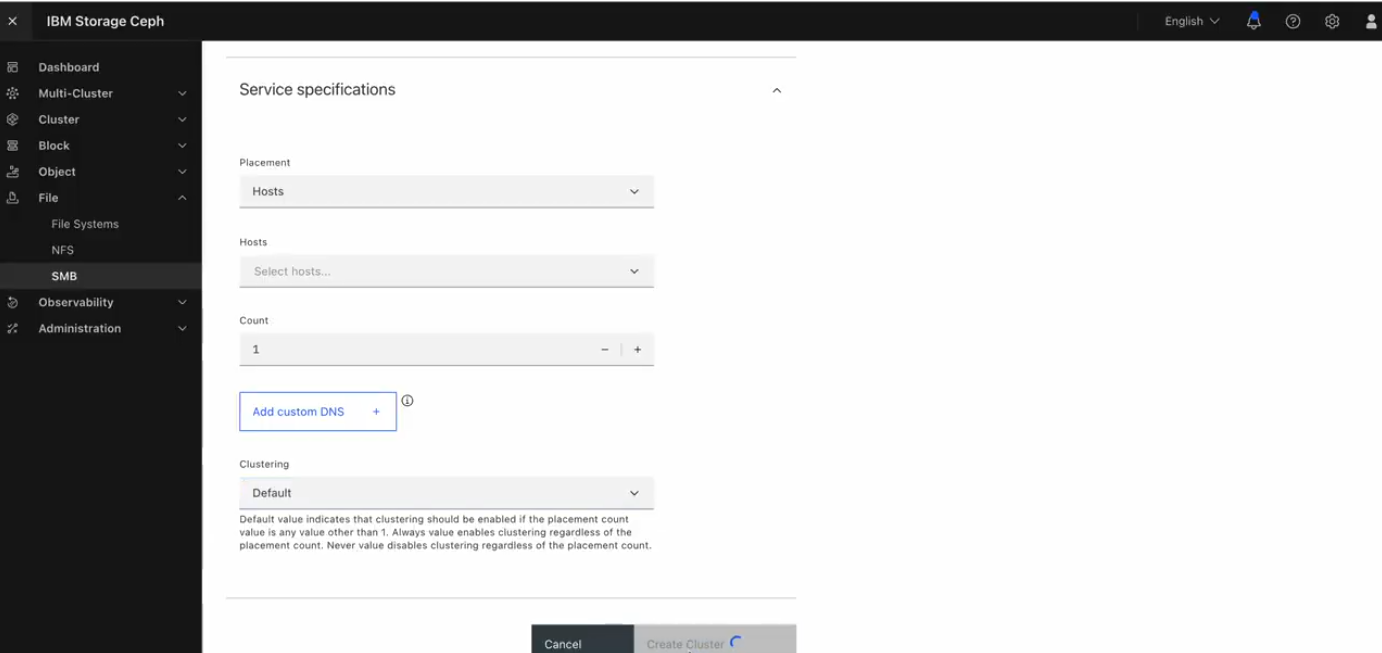

Clustering Configuration Options

The clustering parameter controls whether SMB clustering is enabled for a given service. It accepts one of the following values:

- default (default behaviour): Clustering is enabled only if the placement.count is greater than 1. This allows clustering to be dynamically determined based on the number of service instances.

- always: Clustering is explicitly enabled, regardless of the placement.count value, even if only a single instance is deployed.

- never: Clustering is explicitly disabled, even if multiple instances are defined.

If the clustering field is not specified, the value default is used.
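
The three values reduce to a small decision rule. The following is a minimal sketch of that rule as described above, not the SMB MGR module's own code; the helper name `clustering_enabled` and its parameters are hypothetical.

```python
def clustering_enabled(mode: str = "default", placement_count: int = 1) -> bool:
    """Decide whether clustering is on for a given mode and instance count.

    mode            -- one of "default", "always", "never"
    placement_count -- number of service instances (placement.count)
    """
    if mode == "always":
        # Explicitly enabled, even for a single instance.
        return True
    if mode == "never":
        # Explicitly disabled, even for multiple instances.
        return False
    if mode == "default":
        # Enabled only when more than one instance is placed.
        return placement_count > 1
    raise ValueError(f"unknown clustering mode: {mode!r}")


for mode, count in [("default", 1), ("default", 3), ("always", 1), ("never", 3)]:
    print(mode, count, clustering_enabled(mode, count))
```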

1. Imperative Method:

Create a CephFS volume and subvolumes

# ceph fs volume create cephfs

# ceph fs subvolumegroup create cephfs smb

# ceph fs subvolume create cephfs sv1 --group-name=smb --mode=0777

# ceph fs subvolume create cephfs sv2 --group-name=smb --mode=0777

Enable SMB management Module

# ceph mgr module enable smb

Assign an SMB Label to Nodes Designated for SMB Clustering

# ceph orch host label add <hostname> smb

Create an SMB cluster and share with clustering enabled

# ceph smb cluster create smb1 user --define_user_pass user1%passwd --placement label:smb --clustering default --public_addrs 10.x.x.x/21

# ceph smb share create smb1 share1 cephfs / --subvolume=smb/sv1

Check clustering status

# rados --pool=.smb -N smb1 get cluster.meta.json /dev/stdout

Map a network drive from Windows clients
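
On the Windows side, the share is reached through a UNC path built from one of the CTDB-managed public addresses and the share name. As a rough sketch, the helper below assembles that path and a matching `net use` command line. The helper names and the drive letter `Z:` are assumptions for illustration, `10.x.x.x` is the placeholder address used above, and the `*` argument asks `net use` to prompt for the password rather than placing it on the command line.

```python
def unc_path(server: str, share: str) -> str:
    """Build a Windows UNC path such as \\\\server\\share."""
    return "\\\\" + server + "\\" + share


def net_use_command(drive: str, server: str, share: str, user: str) -> str:
    """Build a `net use` command line that maps the share to a drive letter."""
    return f"net use {drive} {unc_path(server, share)} * /user:{user}"


# Values taken from the example cluster above; Z: is an arbitrary choice.
command = net_use_command("Z:", "10.x.x.x", "share1", "user1")
print(command)
```

Because clients connect to the public address rather than to a specific node, the same mapping keeps working after CTDB moves that address to another node.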

2. Declarative Method:

Create a CephFS volume and subvolumes

# ceph fs volume create cephfs

# ceph fs subvolumegroup create cephfs smb

# ceph fs subvolume create cephfs sv1 --group-name=smb --mode=0777

# ceph fs subvolume create cephfs sv2 --group-name=smb --mode=0777

Enable SMB management Module

# ceph mgr module enable smb

Assign an SMB Label to Nodes Designated for SMB Clustering

# ceph orch host label add <hostname> smb

Create an SMB cluster and share with clustering enabled

# ceph smb apply -i - <<'EOF'
# --- Begin Embedded YAML
- resource_type: ceph.smb.cluster
  cluster_id: smb1
  auth_mode: user
  user_group_settings:
    - {source_type: resource, ref: ug1}
  placement:
    label: smb
  public_addrs:
    - address: 10.x.x.x/21
- resource_type: ceph.smb.usersgroups
  users_groups_id: ug1
  values:
    users:
      - {name: user1, password: passwd}
    groups: []
- resource_type: ceph.smb.share
  cluster_id: smb1
  share_id: share1
  cephfs:
    volume: cephfs
    subvolumegroup: smb
    subvolume: sv1
    path: /
# --- End Embedded YAML
EOF
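
Since the declarative method also accepts JSON, the same three resources can be generated programmatically. The sketch below builds them as plain Python dictionaries and prints a JSON list. The field names mirror the YAML spec in this section and the `clustering` field described earlier, but the function `build_smb_resources` is hypothetical, and passing the resources as a single JSON list is an assumption that should be checked against the Ceph documentation for your release.

```python
import json
from typing import Any, Dict, List


def build_smb_resources(
    cluster_id: str,
    share_id: str,
    public_addr: str,
    user: str,
    password: str,
    clustering: str = "default",
) -> List[Dict[str, Any]]:
    """Return cluster, users/groups, and share resources as a list of dicts."""
    users_groups_id = "ug1"  # same reference name as in the YAML spec
    cluster = {
        "resource_type": "ceph.smb.cluster",
        "cluster_id": cluster_id,
        "auth_mode": "user",
        "user_group_settings": [
            {"source_type": "resource", "ref": users_groups_id}
        ],
        "placement": {"label": "smb"},
        "clustering": clustering,
        "public_addrs": [{"address": public_addr}],
    }
    users_groups = {
        "resource_type": "ceph.smb.usersgroups",
        "users_groups_id": users_groups_id,
        "values": {
            "users": [{"name": user, "password": password}],
            "groups": [],
        },
    }
    share = {
        "resource_type": "ceph.smb.share",
        "cluster_id": cluster_id,
        "share_id": share_id,
        "cephfs": {
            "volume": "cephfs",
            "subvolumegroup": "smb",
            "subvolume": "sv1",
            "path": "/",
        },
    }
    return [cluster, users_groups, share]


# 10.x.x.x/21 is the placeholder from the spec; replace it with a real address.
resources = build_smb_resources("smb1", "share1", "10.x.x.x/21", "user1", "passwd")
print(json.dumps(resources, indent=2))
```

The printed JSON could be saved to a file and passed to ceph smb apply with the -i option, in the same way as the YAML above. Keeping the generator in version control fits the infrastructure-as-code use case mentioned earlier.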

Check clustering status

# rados --pool=.smb -N smb1 get cluster.meta.json /dev/stdout

Map a network drive from Windows clients

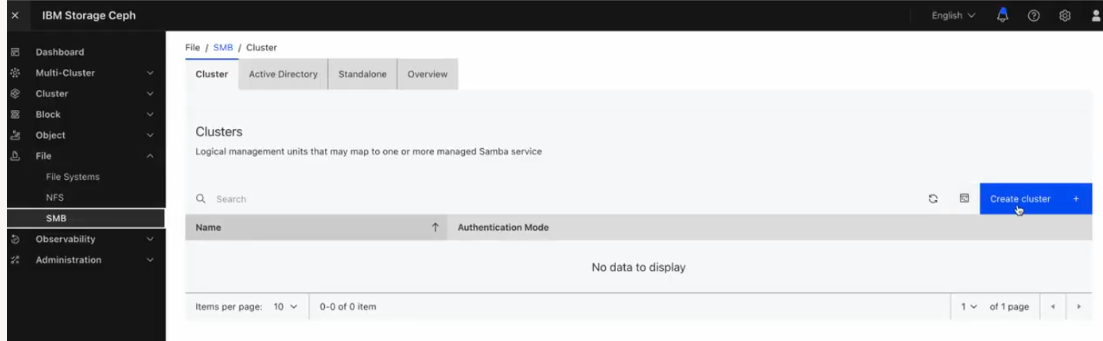

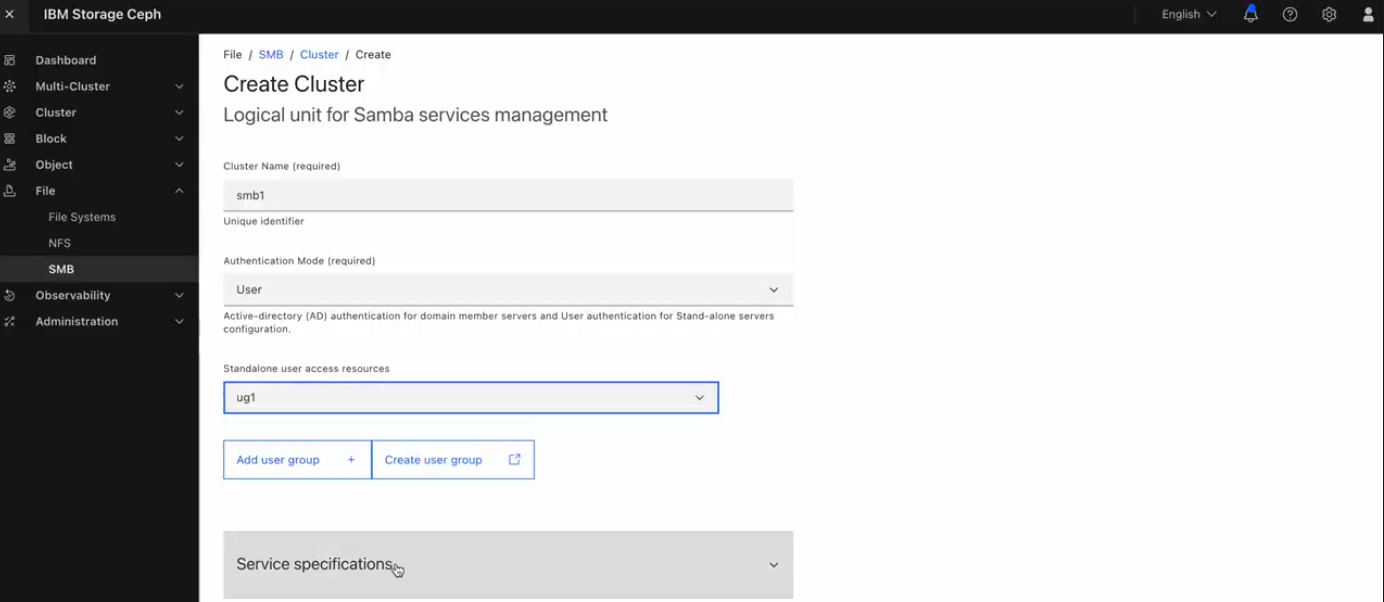

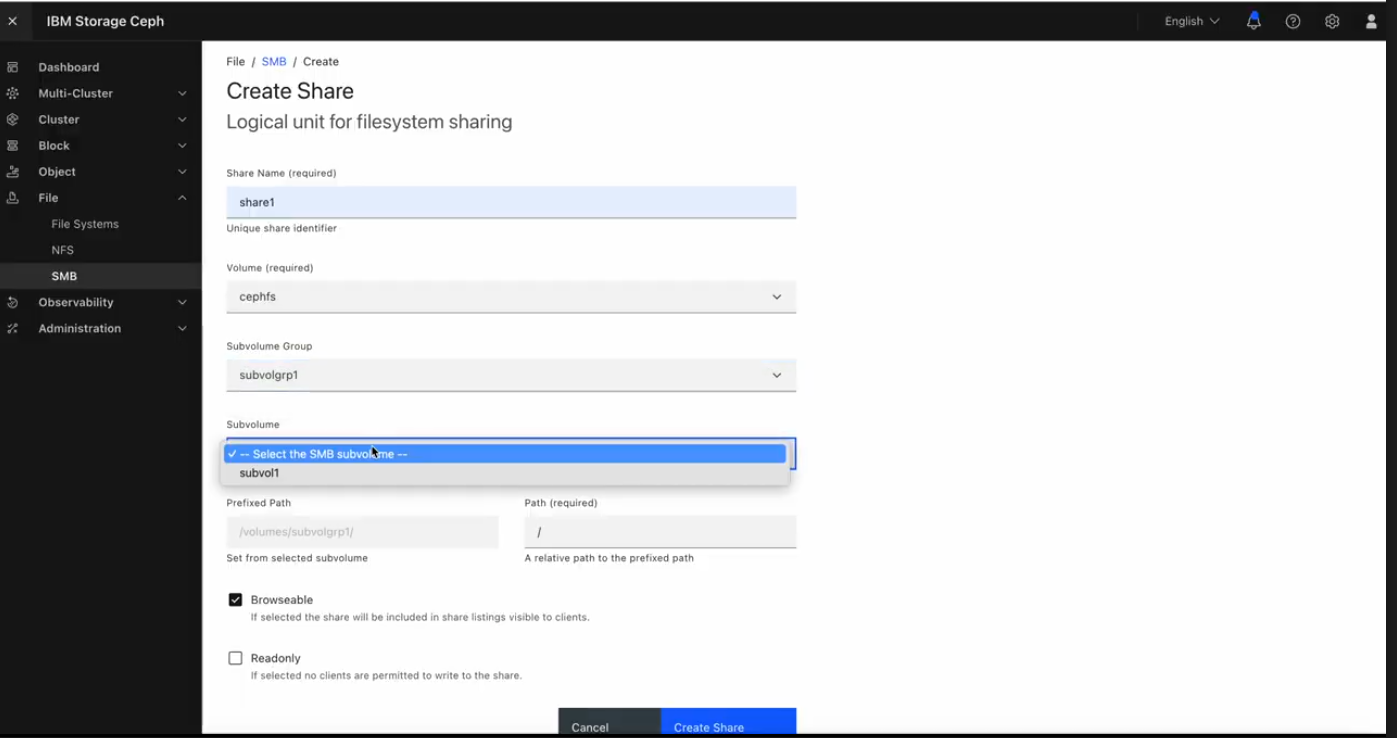

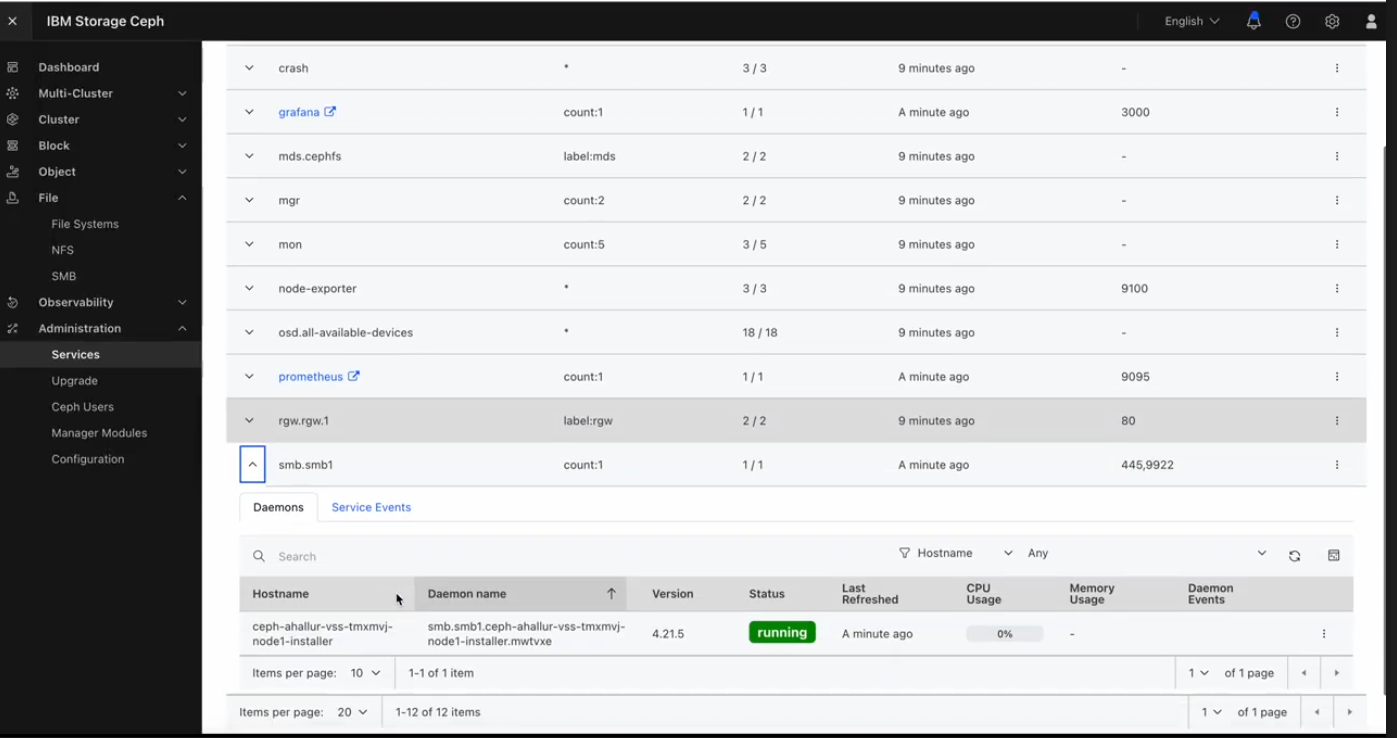

3. Dashboard (UI) Method:

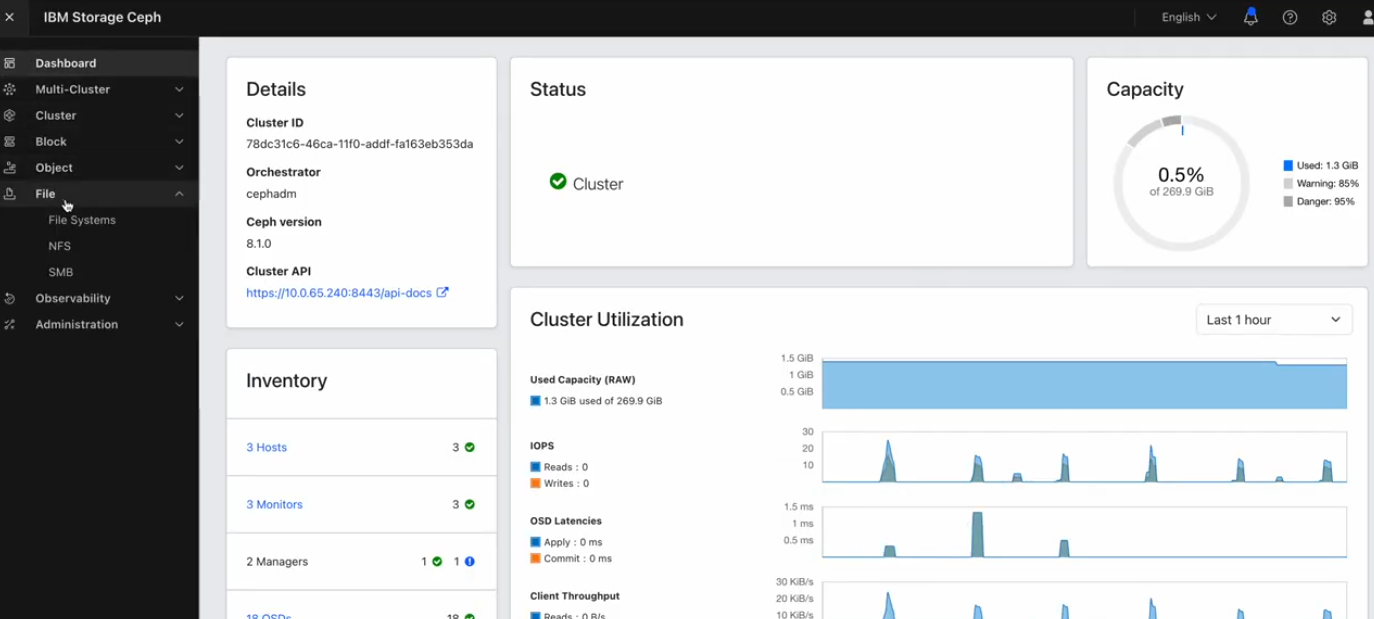

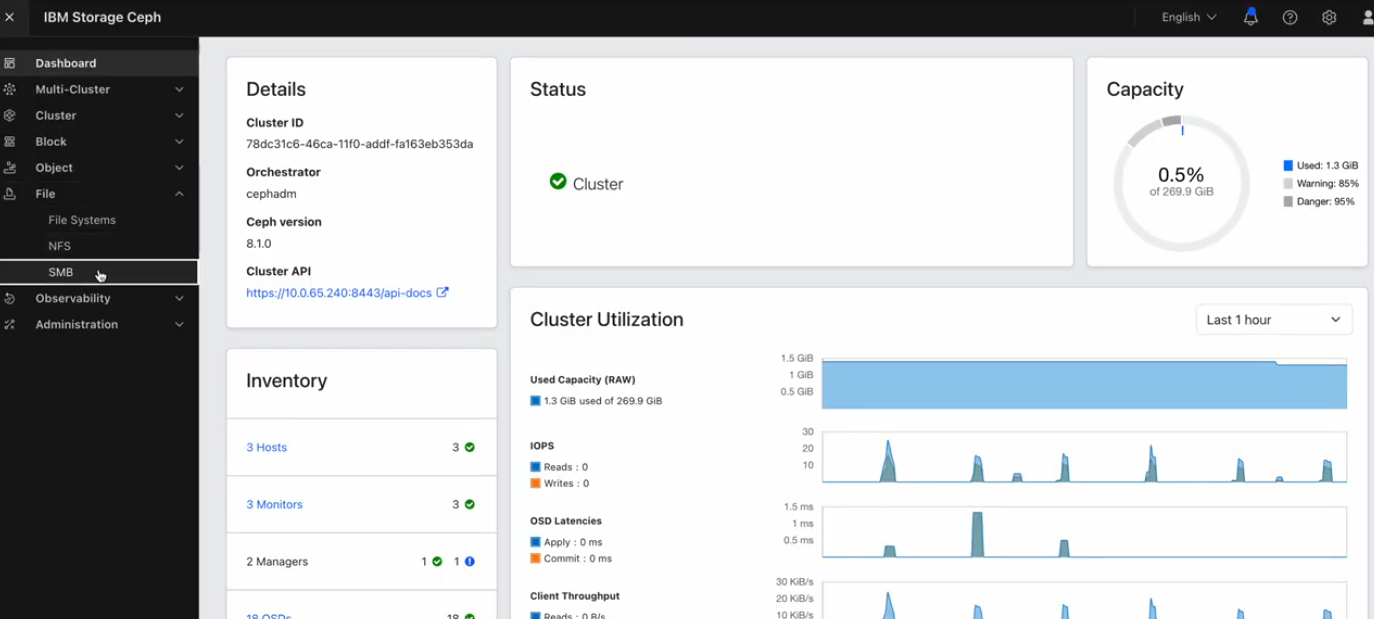

Go to the 'File' Menu

Click on SMB from the left-hand menu.

Select the 'Create Cluster' option

Enter the SMB cluster configuration details and ensure clustering is enabled.

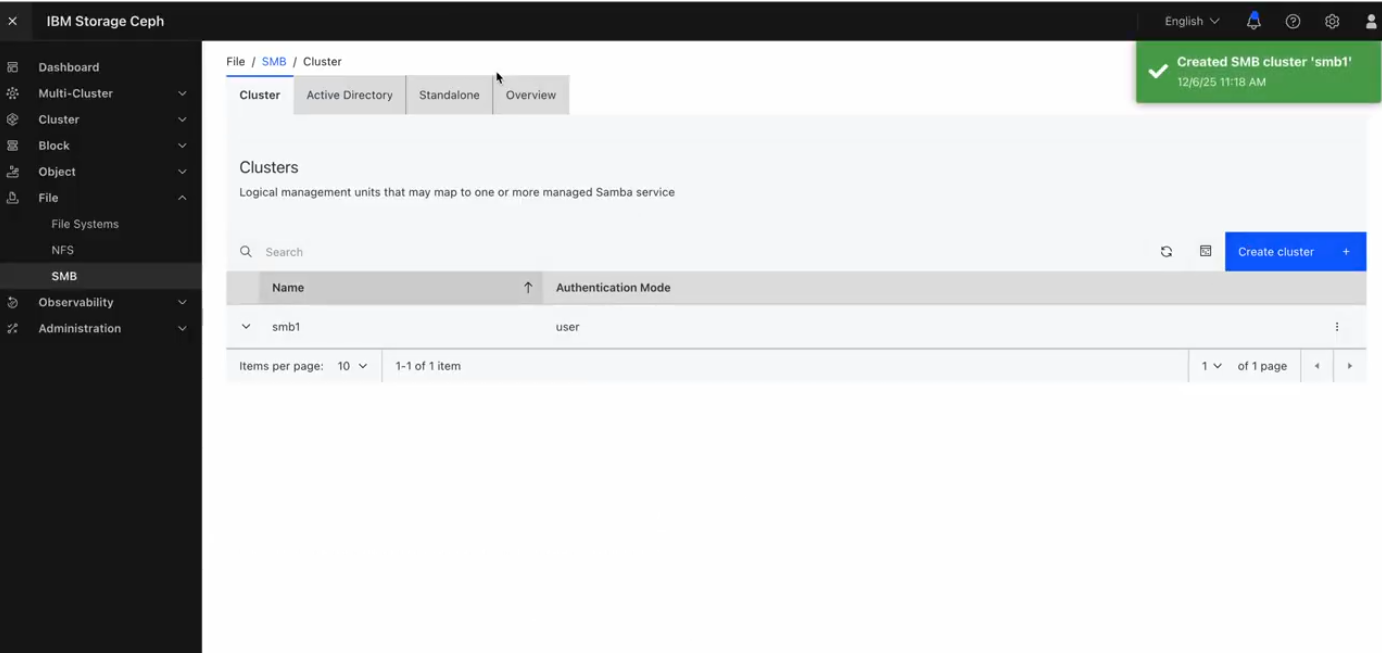

SMB cluster will be updated shortly.

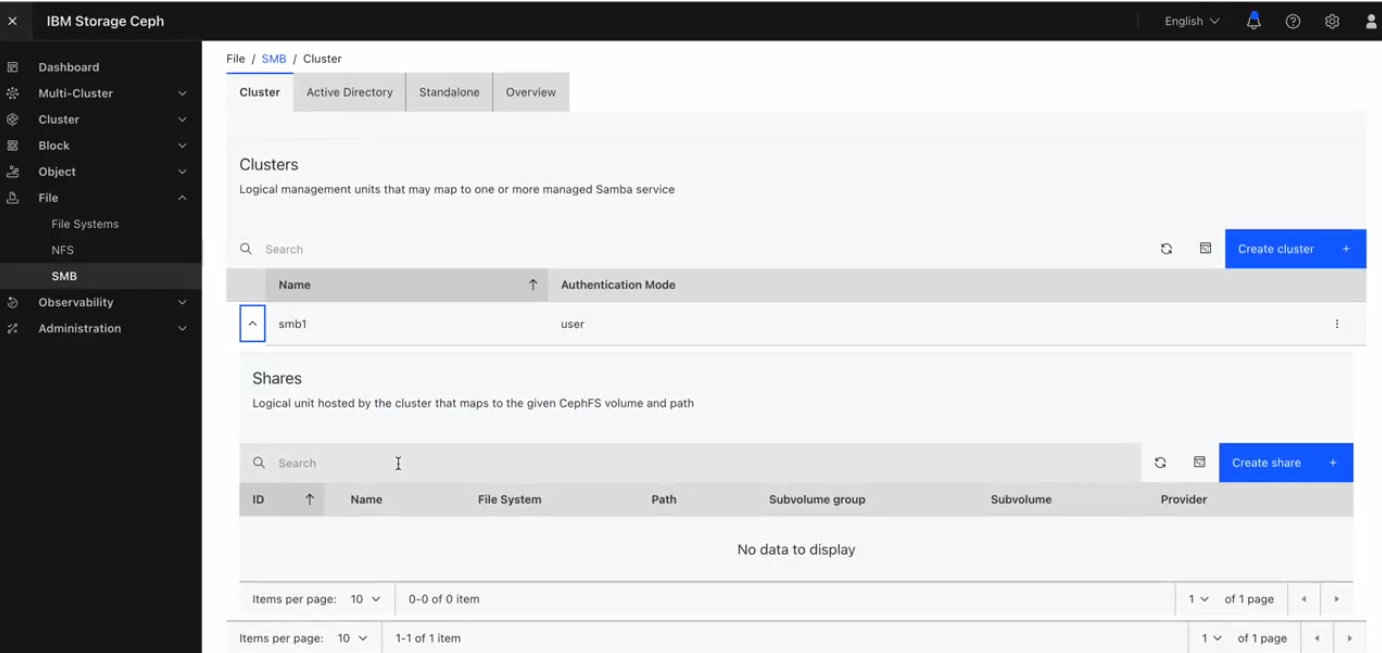

Once the cluster is created, use the 'Create Share' option to define and configure SMB shares

View SMB Service Status and Overview

Map a network drive from Windows clients

Conclusion

The introduction of the SMB MGR Module in IBM Storage Ceph 8 marks a significant advancement in how clustered SMB services are deployed and managed in enterprise environments. By integrating Samba CTDB-based clustering directly into Ceph’s orchestration layer, it provides a robust, high-availability solution for SMB access to CephFS, ensuring continuous service even in the event of node failures.

Whether you prefer the imperative method using direct CLI commands, the declarative method using structured YAML/JSON specs, or a more visual approach through the Ceph Dashboard, the SMB MGR module supports a wide range of workflows for different user profiles, from system administrators to DevOps engineers.

By simply assigning SMB labels to nodes, enabling the module, and configuring clustering options (default, always, or never), administrators can easily tailor the setup based on their availability requirements. With seamless failover handled by CTDB, the system ensures clients remain connected even when a node goes offline—delivering true high availability.