Written by Todd Havekost on November 16, 2022.

As indicated in the last article in this series, XCF (Cross-System Coupling Facility) is a reliable but often taken-for-granted workhorse in modern sysplexes, facilitating efficient and robust point-to-point communication among dozens of z/OS components and subsystems across the systems of the sysplex. That prior article highlighted CPU efficiency opportunities that may surface if you can invest a little more time in effectively monitoring XCF message volumes and performance.

In z/OS 2.4 IBM delivered Transport Class Simplification (for brevity, we will refer to this enhancement as ‘TCS’ in this article), designed to eliminate the need for customers to configure and tune XCF resources themselves on a regular basis. This article (and the next one in this series) will provide insights into understanding and interpreting the related new Path Usage Statistics fields in the RMF 74.2 SMF records, illustrated with actual data from multiple customers. We will also discuss configuration changes that might be appropriate in the new XCF-managed environment.

Readers are encouraged to reference Frank's ‘XCF Transport Class Simplification’ article (in Tuning Letter 2020 No. 2), which covers the technical details of how XCF signaling works. Frank and I also presented a recent IntelliMagic zAcademy webinar (Insights into New XCF Path Usage Metrics) that you might find helpful as an introduction to the topics covered in this article.

Because of the volume of information, we decided to focus on the receiving system’s XCF resources in this article, and the sending system’s resources (including identifying the appropriate number of signaling paths) in the next article.

A Little Background

XCF signaling can be a complex topic. And, to some extent, XCF is a victim of its own success. Because it just chugs along merrily in the background, faithfully delivering tens or even hundreds of thousands of messages per second, it is common to find that technicians are not very familiar with how it works. To help you get the maximum value from this article (and the next one in this series), the following sections provide a little insight into how XCF worked before IBM delivered TCS, and how it works now (assuming that TCS has not been disabled).

How XCF Worked Prior to Transport Class Simplification

Figure 1 on page 4 illustrates how XCF signaling resources were managed and used prior to TCS. In order to ensure smooth and timely delivery of XCF messages, every signaling path has a pool of buffers on both the sending and receiving end of the path. The initial size of each buffer is based on the CLASSLEN value of the transport class that the path is assigned to (on the PATHOUT statement in the COUPLExx Parmlib member). The maximum size of the buffer pool for each end of the path is controlled by the MAXMSG parameter.

Note: One thing to point out here, because it causes some confusion, is that the MAXMSG parameter controls the maximum size in KB of an XCF buffer pool; it is not the maximum number of messages in the buffer pool - yes, I know it is called MAXMSG, but don’t blame me, I’m only the messenger.

Note that in normal usage, the amount of memory consumed by the buffer pool is usually a lot less than the MAXMSG value because XCF only allocates memory when it needs it, and releases it when it is no longer needed.

XCF messages vary in size, but most sysplexes have a large percentage of small messages (under 1 KB), some medium-sized ones, and some large ones. Accordingly, some of the signaling paths would be assigned to a transport class for small messages, some would be assigned to a transport class for medium-sized messages, and some would be assigned to a transport class for large messages, as shown in Figure 1.

Figure 1 - XCF Signaling Resources Prior to TCS

The blue, red, and green boxes on the right side of the figure represent the size of each buffer on the PATHIN end of the path. As you can see, the buffer size is smaller for the paths that are used for small messages (the blue ones, at the top of the chart) than for the paths that are used for large messages (the green ones, at the bottom of the chart). In this example, each 1 KB message uses 6 KB of memory (4 KB for the buffer and 2 KB for associated control information). Each 61 KB message uses 66 KB of memory (64 KB for the buffer plus 2 KB for the control information). Accordingly, 6000 KB of memory would be required to hold 1000 small messages, but 66,000 KB would be required to hold 1000 large messages. This illustrates how, prior to TCS, a given number of small messages would consume a lot less storage (both virtual and real) than the same number of large messages.

When an XCF exploiter sends a message via XCF, the message will be sent to the transport class that has the smallest CLASSLEN that is larger than the message size. If the transport class buffer pool is using less than the MAXMSG amount of memory (which would nearly always be the case), the message will be accepted and sent to the target system.
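To make the pre-TCS selection rule concrete, here is a minimal Python sketch. The class names and CLASSLEN values are hypothetical, and we assume that a message exactly equal to a CLASSLEN fits in that class. What XCF does with a message larger than every CLASSLEN is outside the scope of this sketch, so the function simply returns None in that case.

```python
from typing import Optional

# Hypothetical transport classes: name -> CLASSLEN in bytes (illustrative values only).
TRANSPORT_CLASSES = {"SMALL": 956, "MEDIUM": 8124, "LARGE": 62464}


def select_transport_class(message_len: int, classes: dict[str, int]) -> Optional[str]:
    """Return the class with the smallest CLASSLEN that can hold the message.

    Assumption: a message whose length equals CLASSLEN is treated as fitting.
    Returns None when no class is large enough (not modelled in this sketch).
    """
    candidates = [
        (classlen, name)
        for name, classlen in classes.items()
        if classlen >= message_len
    ]
    if not candidates:
        return None
    # min() on (classlen, name) tuples picks the smallest qualifying CLASSLEN.
    return min(candidates)[1]


if __name__ == "__main__":
    for size in (500, 4000, 40000):
        print(size, "->", select_transport_class(size, TRANSPORT_CLASSES))
```

The point to take away is that the choice depends only on message size, not on how busy the paths in each class are.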

Note: Once a message has been assigned to a transport class, it will not be re-directed to another transport class, even if the buffer pools at the other end of the associated paths are full, or if all the paths are busy. This effectively limits the maximum amount of storage that can be used by a given message size to something less than the total MAXMSG of all the PATHINs on the target system. For example, if there are 3 transport classes defined (small, medium, and large), and each has 4 PATHOUTs assigned to it, there will be a total of 12 paths to the target system, but each transport class is limited to its 4 paths.

If there are no available buffers in the transport class buffer pool and MAXMSG has been reached, the exploiting program will receive a response indicating that the request can't be serviced because no buffers are currently available. Note that, generally speaking, XCF will not re-route a message to a different transport class as a result of running out of space in the buffer pool of that transport class - programs that wish to exploit XCF signaling services should be coded to handle such situations.

Most sites configure each transport class with enough signaling paths to handle the maximum expected message rate for that transport class. Because the mix of messages that are sent to XCF typically varies over time, it is likely that one transport class might be relatively idle when another transport class is at its maximum rate. As a result, the combined capacity of all the signaling paths is usually far greater than the overall capacity that is required at any one point in time.

One last, but very important, thing to point out is that XCF works on a point-to-point basis. Figure 1 on page 4 shows the signaling resources for a 2-way sysplex. If there were three systems in the sysplex, all the resources that SYSA has for communicating with SYSB (transport classes, PATHOUTs, PATHINs, buffers, and so on) would be replicated for communication between SYSA and SYSC. And if there were 10 systems in the sysplex, each system would replicate those resources nine times, once for each other system in the sysplex.

How XCF Works When TCS Is Enabled

To address customer challenges with optimizing the XCF signaling infrastructure, IBM delivered XCF transport class simplification in z/OS 2.4. There are many changes in XCF in support of this change, but the ones we want to call out here are:

- To eliminate the hassle of customers having to identify appropriate CLASSLEN and MAXMSG values and the ‘right’ number of signaling paths for each transport class, XCF was enhanced to logically group all transport classes and their associated PATHOUTs into one large pseudo-transport class (called _XCFMGD). As you can imagine, this has the knock-on benefit of greatly reducing the chances of an XCF exploiter being told that XCF doesn't have any available buffers.

- To ensure that the buffer on the receiving system is large enough to hold any incoming message, all PATHIN buffers are permanently set to 64 KB (plus the 2 KB for control information). This provides XCF with complete flexibility to send any message on any signaling path, and eliminates any overhead associated with adjusting the size of the PATHIN buffers as could happen prior to TCS.

The TCS changes result in a configuration like the one shown in Figure 2. You can see that the total number of signaling paths is unchanged, but now they are all grouped in that single _XCFMGD pseudo-transport class. You can also see the impact on the size of the PATHIN buffers - rather than having some small, some medium and some large buffers, they are all now sized to handle large messages. As a result, the amount of memory required to hold the same number of messages would increase. However, due to many changes in XCF, both as part of the Transport Class Simplification enhancements, and in previous z/OS releases, IBM believes that for most sysplexes, each PATHIN's buffer pool should not need space for more than about 30 messages (which equates to a MAXMSG of 2000 when TCS is enabled), meaning that many sites are carrying forward MAXMSG values that are far larger than necessary.

Figure 2 - XCF Signaling Resources When TCS is Enabled

During normal operation, target address spaces retrieve their messages from XCF very quickly, meaning that a relatively small number of buffers are in use at any given time. Given that most systems these days have tens or hundreds of GBs of memory, the extra few tens of KBs for each buffer is normally irrelevant, and certainly is a small price to pay for the improved manageability and performance delivered by the TCS changes.

However, what happens if the target address space stops or is very slow about retrieving its messages for some reason? Let's say that the exploiter in question sends small messages. Prior to TCS, each message would consume 6 KB. If the problem continued for long enough, and new messages kept arriving for the afflicted address space, eventually the MAXMSG limit on the amount of memory the buffer pool for that PATHIN can consume would be reached. And in a really bad situation, this might happen on all the PATHINs associated with that transport class. Using the configuration in Figure 1 on page 4, the transport class for small messages had two signaling paths, so if each one had a MAXMSG value of 6000, the SYSB PATHIN buffer pools could hold a total of 2000 messages from SYSA before they filled.

So that was how XCF worked prior to TCS. Let's consider a similar situation after TCS. Once again, we have a target address space that is not retrieving its messages from XCF. But now each message is consuming 66 KB of PATHIN storage, rather than 6 KB, so there is more use of virtual and real memory for the same number of messages. Assuming the same MAXMSG values, the same two PATHIN buffer pools could only hold a maximum of 180 messages.

However, that isn't the only impact. Any message can now be sent on any signaling path. Using the sample configuration in Figure 2 on page 6, there are now six PATHINs that can be used to deliver those messages, and each of them could try to allocate and use its full MAXMSG value. Using the same example, and if we assume that every PATHIN has a MAXMSG of 6000, that means that the six PATHIN buffer pools could hold a maximum of 540 messages. However, those 540 messages would now be consuming about 36,000 KB of memory, compared to just 12,000 KB for 2000 messages prior to TCS.
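The arithmetic in this example can be reproduced with a short Python sketch. It assumes, as the numbers in this article do, that each message occupies one buffer plus 2 KB of control information, and that a buffer pool only holds whole messages within its MAXMSG. The function names are ours, for illustration only.

```python
CONTROL_KB = 2  # control information per message, as used in this article's examples


def messages_per_pool(maxmsg_kb: int, buffer_kb: int) -> int:
    """Whole messages that fit in one PATHIN buffer pool of maxmsg_kb."""
    return maxmsg_kb // (buffer_kb + CONTROL_KB)


def pool_capacity(paths: int, maxmsg_kb: int, buffer_kb: int) -> tuple[int, int]:
    """Return (total messages, KB consumed by those messages) across all paths."""
    total_messages = paths * messages_per_pool(maxmsg_kb, buffer_kb)
    return total_messages, total_messages * (buffer_kb + CONTROL_KB)


if __name__ == "__main__":
    # Pre-TCS: two 'small' paths, 4 KB buffers, MAXMSG 6000 each.
    print("pre-TCS, 2 paths :", pool_capacity(2, 6000, 4))
    # TCS: the same two paths, but every buffer is 64 KB.
    print("TCS, 2 paths     :", pool_capacity(2, 6000, 64))
    # TCS: any message can use any of the six paths.
    print("TCS, 6 paths     :", pool_capacity(6, 6000, 64))
```

The six-path case works out to 35,640 KB, which is the "about 36,000 KB" quoted above.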

We want to stress that this would be a very unusual scenario - messages generally reside in the XCF buffers for a very short time. However, it is important to understand how the move to TCS could make it more likely than before that all your PATHIN buffer pool space is consumed.

You might think, validly, that you are not going to lose sleep over 36 MB of storage. However, that 36 MB is for a small configuration - just two systems, six signaling paths in total, and reasonably sized MAXMSG values. Imagine a larger configuration. Having twelve signaling paths, rather than six, would increase the total MAXMSG to 72 MB. MAXMSG values of 20,000, rather than 6000, would increase the total MAXMSG to 240 MB. And an 11-way sysplex, rather than a 2-way, would increase it again, to 2400 MB. And that is just for the PATHIN buffer pools.
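The scaling in that example is a simple product, sketched below in Python. The function name is hypothetical, and we follow this article's convention of treating 1 MB as 1000 KB. The sketch also assumes that every PATHIN has the same MAXMSG value and that each system has the same number of paths to every other system.

```python
def worst_case_pathin_kb(paths_per_peer: int, maxmsg_kb: int, systems: int) -> int:
    """Upper bound on PATHIN buffer pool storage (KB) on one system.

    Assumes identical MAXMSG on every PATHIN and the same number of
    inbound paths from each of the (systems - 1) peer systems.
    """
    return paths_per_peer * maxmsg_kb * (systems - 1)


if __name__ == "__main__":
    scenarios = [
        ("6 paths, MAXMSG 6000, 2-way", 6, 6000, 2),
        ("12 paths, MAXMSG 6000, 2-way", 12, 6000, 2),
        ("12 paths, MAXMSG 20000, 2-way", 12, 20000, 2),
        ("12 paths, MAXMSG 20000, 11-way", 12, 20000, 11),
    ]
    for label, paths, maxmsg, systems in scenarios:
        kb = worst_case_pathin_kb(paths, maxmsg, systems)
        print(f"{label}: {kb / 1000:.0f} MB")
```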

XCF messages reside in an XCF data space while they wait to be retrieved or sent. The data space is limited to 2 GB, but that must accommodate not only the PATHIN and PATHOUT buffers, but also various other XCF control information. If you have a sysplex with many systems, many signaling paths, large MAXMSG values, a large number of incoming messages, and a slow or stalled target address space, it is possible that XCF will run out of storage in its data space. If this happens, XCF currently places the system in a 0A2-040 wait state. There is currently an OPEN HIPER APAR, OA62980, that will change XCF to respond to such situations in a similar manner to how it would respond if it had run out of buffers, rather than placing the system in a wait state. However, that APAR is not yet available, and we don’t know when it will be delivered. Even if it were available today, it might be many months before it is rolled out to all your production systems, so this is a topic that should be of interest to all our readers.

A second potential issue can arise from the fact that the 2 GB data space for XCF signal buffers is backed by frames fixed for I/O. In a large sysplex with many signal paths, even modest MAXMSG values can over-commit system resources and create frame shortages. Prior to APAR OA60480 (July 2021 - also HIPER), the fixed frames backing that 2 GB data space were in below-the-bar, 31-bit real storage. The system has a total of 512K frames below the bar. A large sysplex with 24 systems, 15 signal structures, and MAXMSG=4000 could use up to 360K frames on each system. If there is a massive burst of signals, XCF could potentially consume up to 360K of the 512K frames below the bar, which, depending on other activity, could result in a system impact (due to lack of available frames) or even a complete system outage. With the PTF for OA60460 applied, and if XTCSIZE is enabled, XCF PATHIN buffers can now use frames backed above the bar, avoiding this potential system constraint.

Longer term, we expect XCF will be enhanced to place its buffers in 64-bit storage; however, we imagine that would be quite a significant undertaking. So, in the interim, Mark Brooks (Mr. XCF) offered the following recommendations at his excellent Parallel Sysplex Update session at SHARE in Columbus:

- Individual PATHINs should ideally use MAXMSG values of not more than 2000 KB.

- The sum of all PATHIN MAXMSG values in a system should not exceed 800 MB.

There is still the limitation that all XCF signaling buffers (PATHIN, PATHOUT, Transport Class, and LOCALMSG), together with other XCF control information must fit in that 2 GB XCF data space. However, in IBM’s experience, customers are more likely to encounter situations where the PATHIN buffer pools consume excessive amounts of storage, so protecting against PATHIN buffer pools overrunning the XCF data space is the focus of these recommendations.
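If you already have a list of the PATHIN MAXMSG values for a system, checking it against these two guidelines is straightforward. The following Python sketch assumes a hypothetical input (a dictionary of path name to MAXMSG in KB that you have collected yourself) and treats 800 MB as 800,000 KB, in line with the rounding used elsewhere in this article. It is not based on any IBM-provided tool.

```python
PER_PATH_LIMIT_KB = 2000   # guideline for an individual PATHIN
TOTAL_LIMIT_KB = 800_000   # guideline for the sum of all PATHINs (800 MB, 1 MB = 1000 KB)


def check_pathin_maxmsg(pathin_maxmsg_kb: dict[str, int]) -> list[str]:
    """Return a list of findings; an empty list means both guidelines are met."""
    findings = []
    for name, kb in sorted(pathin_maxmsg_kb.items()):
        if kb > PER_PATH_LIMIT_KB:
            findings.append(f"{name}: MAXMSG {kb} KB exceeds {PER_PATH_LIMIT_KB} KB")
    total = sum(pathin_maxmsg_kb.values())
    if total > TOTAL_LIMIT_KB:
        findings.append(f"total PATHIN MAXMSG {total} KB exceeds {TOTAL_LIMIT_KB} KB")
    return findings


if __name__ == "__main__":
    # Hypothetical path names and values, for illustration only.
    sample = {"IXC_STRUC7": 2000, "IXC_STRUC8": 6000}
    for finding in check_pathin_maxmsg(sample):
        print(finding)
```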

If some customers have had issues with very large PATHIN buffer pools, you are probably wondering why there are not also problems on the PATHOUT end of those paths. Remember that there are typically between 50 and 100 exploiters of XCF services on a system. In practice it is more likely that something will happen in one exploiter, causing high levels of communication between the members of that XCF group and a key member of that group (sitting in one system), than it is that all the XCF exploiters on one system will suddenly wake up and start firing off huge numbers of messages to their peers on every other system.

Putting Things in Perspective

PATHIN buffers are part of a chain. The world doesn't end if the PATHIN buffers fill (as long as that doesn't cause the XCF data space to run out of storage). If all the PATHIN buffer pools on a system do fill, messages will queue in the CF. And if the path uses more than its share of the structure, they will queue back in the PATHOUT buffers on the sending system. And if the PATHOUT buffer pools fill, XCF exploiting programs SHOULD have recovery logic that lets them handle that situation. Also, if you get to the point where ALL your buffers are full, then you have some serious problem on the target system, and running out of XCF PATHIN buffers is just a side effect of that problem.

Additionally, running out of buffers may drive the XCF Message Isolation code that will warn you about what is happening, whereas if you have huge PATHIN buffers, the XCF data space might fill before that code gets invoked.

Having said all that, it is true that, when something goes wrong and messages are not being retrieved in a timely manner, the PATHIN buffers will fill much more quickly when XTCSIZE is enabled than they would have previously. So perhaps the price you pay for all the benefits TCS delivers is that you need to be a little more proactive about monitoring XCF usage, and putting alerts in place to generate warnings if you start running out of buffers - have a look at the description of message IXC645E for a list of related messages that are issued by the Message Isolation code. For information about the Message Isolation function, refer to Mark’s SHARE in Orlando 2015 Parallel Sysplex Update presentation.

And that brings us back to the question of monitoring the performance of your XCF infrastructure. In the remainder of this article we are going to focus on understanding the performance and use of XCF resources on the receiving end of the signaling paths. In the next article, we will look at the other side of the picture (the sending end of the paths).

Concepts Behind New Path Usage Metrics

Once z/OS 2.4 is running and the XTCSIZE FUNCTION is enabled (the default value), transport classes defined purely for size segregation become ‘XCF Managed’, and signals normally sent via those traditional transport classes are instead sent via the new ‘_XCFMGD’ pseudo-transport class.

Terminology: There is (of course) some new terminology associated with the metrics that report on XCF signaling resource usage - primarily ‘bucket’ and ‘window’. To understand these terms, you really need both a drawing and accompanying text, so we recommend reading the following few paragraphs and then applying them to Figure 3 on page 11 - hopefully that will make it all clear.

While discussing terminology, there are two other terms that are often used interchangeably - ‘signal’ and ‘message’. Strictly speaking, XCF ‘signals’ include control signals sent between XCF instances, messages sent between XCF exploiters, and control information wrapped around those messages. However, in the context of this part of the article we will not differentiate between the two. Also, the Path sections of the RMF XCF records refer to signals rather than messages, and that is the part of the SMF records we will be focusing on in this part of the article, so we will aim to stick with using ‘signals’.

XCF Signaling path utilization is measured on the inbound side. Signals are read in parallel in ‘buckets’ of at most four signals. For purposes of the XCF metrics, utilizations are discrete values of 0% (no read operations active), 25% (1 read active), 50% (2 reads active), 75% (3 reads active), or 100% (4 reads active). A bucket ends when the ‘I/O’ completes for all N signals in the current bucket, at which time a new set of up to four reads may be initiated.

XCF also measures the total time spent in a path utilization ‘window’. Assuming an idle path, a utilization window begins when a bucket of N reads is started. That window continues as long as another N reads are initiated at the end of the current bucket. A given utilization window ends when the value of N changes. If N=0, the path is idle. A different N>0 starts a new utilization window at the corresponding utilization percentage.
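The relationship between buckets and windows can be sketched in a few lines of Python. This is purely our own illustration of the definitions above, not how XCF implements them. We assume a made-up input: a chronological list of (reads, elapsed microseconds) pairs, one per bucket, where an entry with zero reads stands for an idle period.

```python
from itertools import groupby


def windows_from_buckets(buckets: list[tuple[int, int]]) -> list[dict]:
    """Group consecutive buckets with the same number of reads into windows.

    buckets: chronological (reads, elapsed_us) pairs; reads == 0 marks idle time.
    Each bucket of N reads contributes N signals at a utilization of N * 25%.
    """
    windows = []
    for reads, group in groupby(buckets, key=lambda bucket: bucket[0]):
        members = list(group)
        if reads == 0:
            continue  # idle path: no utilization window
        windows.append({
            "utilization_pct": reads * 25,
            "buckets": len(members),
            "signals": reads * len(members),
            "time_us": sum(elapsed for _, elapsed in members),
        })
    return windows


if __name__ == "__main__":
    sample = [(1, 15), (1, 16), (2, 17), (0, 100), (4, 20), (4, 21), (4, 19), (1, 15)]
    for window in windows_from_buckets(sample):
        print(window)
```

In this made-up sample, the three consecutive 4-read buckets form a single 100% window, which is the 'window stays open' behavior discussed later in this article.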

Note: The “In use” values in the RMF XCF Path Statistics report are based on these ‘windows’. Neither the SMF nor the RMF manuals make this clear. Metrics for 'buckets' can be calculated from fields in the XCF records using the formulas indicated below, but you need to perform those calculations yourself - that information is currently not presented by XCF or RMF.

Figure 3, from Mark Brooks’ SHARE in Columbus 2022 z/OS Parallel Sysplex Update presentation, illustrates these concepts. The elapsed time for each bucket is marked by the vertical bars, and the number of parallel reads in that bucket is indicated by the number of horizontal yellow, purple, blue, or gray bars above the timeline. As you can see in Mark’s chart, a window spans multiple consecutive buckets if those buckets have the same number of reads.

Figure 3 - Inbound Path Utilization (© IBM Corporation)

Figure 4 (from the IBM SMF manual) shows the definitions of the newly defined Path Usage Statistics Block fields. For each path, there are 4 occurrences of the block, one for each of the defined utilizations, namely 25%, 50%, 75%, and 100%. (The fields following the block will be discussed later in the article.)

Figure 4 - Path Usage Statistics Block (© IBM Corporation)

With this background, we can now define several calculated timing metrics. (Note that the “Percent / 25” operand in the denominator of average time per bucket equates to the number of active reads for that utilization.):

- Path utilization = sum of (TimeSum fields for all 4 blocks) / interval.

- Average time per bucket = TimeSum / (SigCnt / (Percent / 25)).

- Average time per window = TimeSum / Time# (in mics, for each utilization).

- Average time per read = TimeSum / SigCnt (in mics, for each utilization).
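For readers who want to compute these metrics themselves, the four formulas can be sketched in Python as follows. The class and attribute names are our own stand-ins for the Percent, SigCnt, Time#, and TimeSum fields of one Path Usage Statistics Block, and we assume that TimeSum and the interval length are expressed in the same unit (microseconds here). Extracting the fields from the SMF record is not shown.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PathUsageBlock:
    """One utilization's statistics for a path (attribute names are illustrative)."""
    percent: int        # 25, 50, 75, or 100
    sig_cnt: int        # signals read at this utilization (SigCnt)
    time_count: int     # number of windows at this utilization (Time#)
    time_sum_us: float  # total time at this utilization (TimeSum), assumed microseconds


def path_utilization(blocks: list[PathUsageBlock], interval_us: float) -> float:
    """Fraction of the interval during which the path had at least one read active."""
    return sum(block.time_sum_us for block in blocks) / interval_us


def avg_time_per_bucket(block: PathUsageBlock) -> Optional[float]:
    """TimeSum / (SigCnt / (Percent / 25)); Percent / 25 is the reads per bucket."""
    if block.sig_cnt == 0:
        return None
    bucket_count = block.sig_cnt / (block.percent / 25)
    return block.time_sum_us / bucket_count


def avg_time_per_window(block: PathUsageBlock) -> Optional[float]:
    """TimeSum / Time#."""
    return block.time_sum_us / block.time_count if block.time_count else None


def avg_time_per_read(block: PathUsageBlock) -> Optional[float]:
    """TimeSum / SigCnt."""
    return block.time_sum_us / block.sig_cnt if block.sig_cnt else None


if __name__ == "__main__":
    # Made-up numbers: 300 signals read three at a time, in 80 windows, over 1600 mics.
    block = PathUsageBlock(percent=75, sig_cnt=300, time_count=80, time_sum_us=1600.0)
    print("per bucket:", avg_time_per_bucket(block))
    print("per window:", avg_time_per_window(block))
    print("per read  :", avg_time_per_read(block))
```

The functions return None when there was no activity at a given utilization, so that idle utilizations are not reported as zero-length buckets or windows.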

Figure 5 - Sample RMF PP XCF Report (© IBM Corporation)

For reference, Figure 5 shows a sample RMF report for a selected interval, taken from one of Mark Brooks' conference presentations and annotated with his explanatory notes. For each of the four utilizations (blue box center right, reading from right to left), RMF reports the number of signals, number of windows, and the duration at that utilization.

Now let's see what we can learn from analyzing these new Path Usage metrics, utilizing reports available through IntelliMagic Vision. In the example shown in Figure 6 on page 13 for a selected pair of high-volume systems, the average elapsed time per bucket is similar for the 25%, 50%, and 75% utilizations, reflecting that the elapsed time to execute a “bucket” of reads is relatively consistent, no matter how many reads were being executed in parallel. The increased times around 9:30 correlate to a burst of occurrences where high signal volumes resulted in buckets with 4 parallel reads (“100% utilization”), as shown in Figure 7 on page 13.

Figure 6 - Time per Bucket by Utilization (© IntelliMagic Vision)

Figure 7 - Count Path in Use at 100% Utilization (© IntelliMagic Vision)

Bucket or Window?

As indicated above, the RMF Postprocessor reports the number and total duration of windows (from which average time per window can be calculated). It is also possible to calculate the number and average duration of buckets from the data in the RMF SMF records using the formulas previously provided. In most cases the number of buckets is only slightly higher than the number of windows, because any change in the number of signals read per bucket (e.g., from 1 to 2, 2 to 3, etc.) resets the window. Thus there is typically little difference between the time per window and time per bucket values, as you can see in Figure 8. Where time per window can provide helpful insights is in the 100% utilization case. Since four is the maximum number of signals read in parallel, a large volume of queued messages can result in sustained intervals where the 4-signal window “stays open.” The elevated time per window (in blue) in the 0930-0940 interval in Figure 14 on page 19 shows such a situation.

Figure 8 - Comparing Bucket and Window Times (© IntelliMagic Vision)

XCF also counts the number of signals that were sent in a window containing 1 read, 2 reads, 3 reads, or 4 reads. As you can see in Figure 9 on page 15, most signals were read in a window with just 1 read, with reducing numbers for windows with 2 or 3 reads.

Figure 9 - Counts of Path in Use by Utilization (© IntelliMagic Vision)

Combining the time to read each bucket with the number of reads in the bucket, we see proportionately lower times per read for 75% utilization, where 3 signals are read in a single bucket, than for the 50% (2 signals), and 25% (a single signal) utilization cases, as seen in Figure 10. This illustrates one of the advantages of keeping a signaling path 'hot' - that is, XCF might get better average transfer times by keeping a subset of paths moderately busy than if it were to use a round-robin mechanism to force equal usage of all paths.

Figure 10 - Time per Read by Utilization (© IntelliMagic Vision)

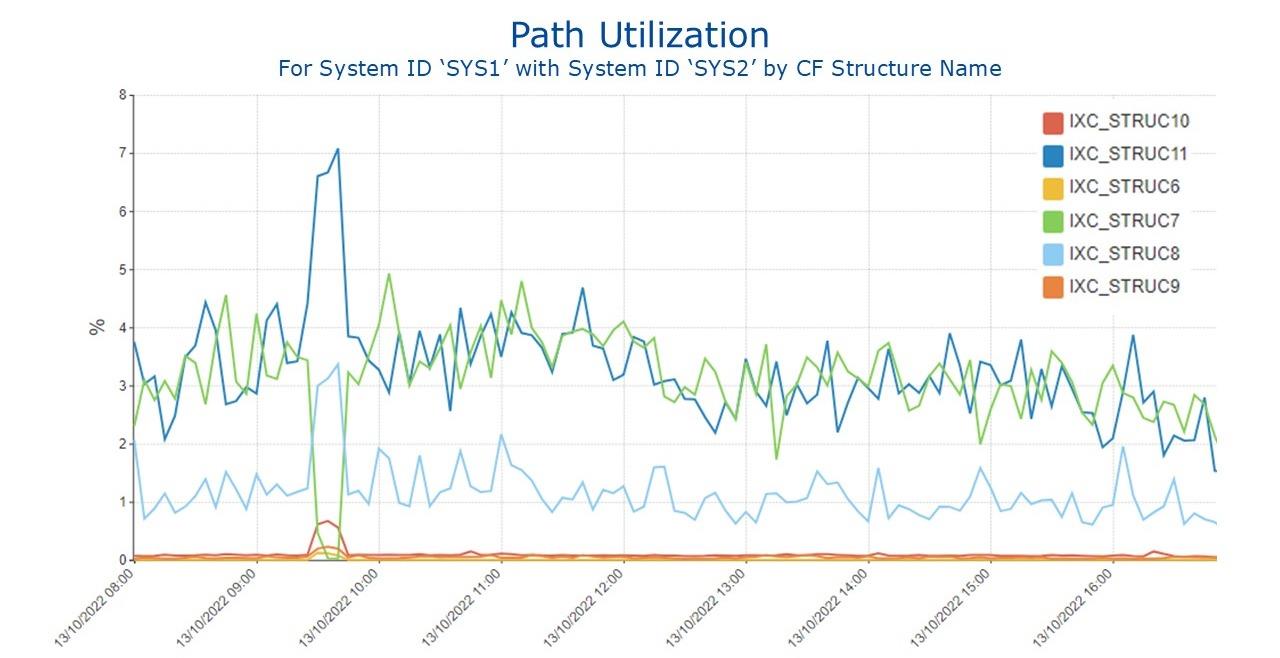

Moving on to path utilization, Figure 11 shows utilizations for the six coupling facility list structures defined to process XCF signals. Note the relatively low utilizations, with three of the six structures rarely being used. Mark points out that XCF aims to select the path that is delivering the best performance at that time. However, the path(s) that meet that description can change over time, so the distribution of signals across the available paths should not be expected to always be the same.

Figure 11 - Path Utilization (© IntelliMagic Vision)

For example, note the abrupt changes in path utilizations around the 9:30 timeframe in Figure 11, particularly the utilization of IXC_STRUC7, which decreased to near zero, while both IXC_STRUC8 and IXC_STRUC11 increased. The shift in load from IXC_STRUC7 to IXC_STRUC8 might have been related to a change in the size of the incoming signals. As you saw in Figure 10 on page 15, the time to read each bucket increased during that period, possibly indicating that larger signals were being read. Or the shift might be XCF’s response to the longer read times experienced by the IXC_STRUC7 path, causing it to select the other paths during that interval, as shown in Figure 12 on page 17.

Figure 12 - Time per Bucket at 25% Utilization (© IntelliMagic Vision)

Note also in Figure 13 on page 18 that the typical CF service times for IXC_STRUC11 and IXC_STRUC7 are around 16 mics which aligns closely with the time per bucket values seen in the prior figure. The one anomaly is that the CF Service times for IXC_STRUC7 don’t show the same increase in response time around 9:30 that we see in Figure 12. A possible explanation is that Figure 12 is only reporting on reads of signals arriving into SYS1 from SYS2. On the other hand, the CF structure response times for IXC_STRUC7 include both reads and writes, and they include the activity from all other systems in the sysplex to SYS1 and the activity from SYS1 to all other systems in the sysplex. As a result, the blip that we see in Figure 12 disappears when averaged in with all the other activity that is going through that structure.

Figure 13 - Signaling Structure Asynchronous Service Time (© IntelliMagic Vision)

There is one last metric we want to discuss before moving on. We mentioned earlier that the number of buckets in this system was generally close to the number of windows, indicating that most windows contain just one bucket. If a window contains multiple buckets, especially buckets with 4 reads, that indicates a burst of incoming messages, resulting in XCF starting a bucket of 4 reads as soon as the previous bucket ended. One way to spot this is to report on the elapsed time for both buckets and windows, as shown in Figure 14 on page 19. This information might be valuable when evaluating how many signaling paths you should have - that 9:30 time period would appear to be a peak, and you should configure sufficient paths to handle those peak times.

Figure 14 - Read Times for Buckets and Windows (© IntelliMagic Vision)

It is possible that the presence of six structures in this example is a holdover from having a pair of structures defined for availability for each of the three former transport classes. If that is the case, and considering IBM's latest guidance about limiting the maximum memory that can be consumed by XCF buffer pools, this is an example where a reduction in the number of paths might be worth considering. We will return to this topic in the next article in this series.

‘Impactful No Buffer’ Conditions

Historically, XCF on the receiving system has reported inbound ‘no buffer’ conditions, under the RMF report heading of ‘Buffers Unavail’ from field R742PIBR in the 74.2 SMF records. Intuitively, those sound ‘bad’ and seem like something to be avoided. But often there was no impact from those conditions, because even though no buffers were available, there might have been no signal awaiting transfer. Unfortunately, there was no way to determine whether there was an impact or not, and if there was one, how large it was. In the absence of that information, the natural reaction was to increase the MAXMSG value - that is part of the reason why some customers have really large MAXMSG values now.

In z/OS 2.4, XCF implemented new, more helpful, metrics to help identify no buffer conditions that actually had an impact. As shown earlier in the bottom section of Figure 4 on page 11, these are:

- Number of times a path was impacted by a no-inbound-buffer condition (R742PNIB_Time#)

- Total time (in microseconds) a path had a no-inbound-buffer impact condition (R742PNIB_TimeSum).

That timer is started when a ‘no buffer’ condition prevents XCF from getting a buffer to receive a message and there is a message pending transfer. Figure 15 illustrates these metrics for one pair of systems.

Figure 15 - Impactful No-Inbound-Buffer Conditions (© IntelliMagic Vision)

These new metrics provide insights into how frequently and for how long ‘impactful no buffer’ conditions delay retrieval of inbound signals. To put these numbers in perspective, compare the total number of inbound messages in that interval with the count of impactful no buffer events, represented by the orange line. In this example, most intervals didn’t have any impactful no buffer events, a few intervals had between 1 and 18, and one interval had about 175. In this case, there were about 2,000,000 inbound messages per interval, so the peak impactful no buffer count was about 0.009% of all inbound messages - hardly a cause for concern. Similarly, the peak total delay time, represented by the blue line, was about 50 milliseconds in a 15-minute interval.
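The same perspective check can be applied to your own data with a small Python sketch like the one below. The function and parameter names are ours. The count and time would come from the R742PNIB_Time# and R742PNIB_TimeSum fields, while the inbound signal count and interval length are values you would need to supply from elsewhere in your reporting.

```python
def impact_summary(nib_count: int, nib_time_us: float,
                   inbound_signals: int, interval_seconds: int) -> dict:
    """Express impactful no-inbound-buffer activity relative to the whole interval.

    nib_count / nib_time_us: impactful no-buffer count and total time (microseconds).
    inbound_signals: signals received on the path(s) in the same interval.
    """
    if inbound_signals <= 0 or interval_seconds <= 0:
        raise ValueError("inbound_signals and interval_seconds must be positive")
    return {
        "pct_of_signals": 100.0 * nib_count / inbound_signals,
        "pct_of_interval": 100.0 * nib_time_us / (interval_seconds * 1_000_000),
    }


if __name__ == "__main__":
    # Peak values from the example above: about 175 events, about 50 ms of delay,
    # about 2,000,000 inbound signals, 15-minute (900-second) interval.
    summary = impact_summary(175, 50_000.0, 2_000_000, 900)
    print(f"{summary['pct_of_signals']:.5f}% of signals")
    print(f"{summary['pct_of_interval']:.5f}% of the interval")
```

Expressing both values as percentages makes it easier to compare intervals with very different message volumes, which may help when you come to set your own alert thresholds.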

It may be a learning process, as a site and as an industry, to identify the thresholds for acceptable and unacceptable levels.

If you encounter numbers that are high enough to cause concern, one way to reduce these occurrences is to ensure that the address space(s) responsible for processing these messages have adequate CPU resources to perform that function in a timely manner.

While increasing the MAXMSG value in response to large No Buffer counts was the normal course of action in the past, that is no longer recommended. Starting in z/OS 2.4, a small number of No Buffer conditions is actually not a bad thing because it triggers XCF to re-evaluate which paths it is using for which messages, and whether an adjustment is called for. As an extreme example, you might have 10 paths defined, each with massive MAXMSG values, and XCF might quite happily keep sending nearly all the messages over just a subset of those paths because it never encounters the delays that would be experienced if the PATHIN ran out of buffers. However, if the MAXMSG values were smaller, the sending XCF would be aware of the delays on that path and might decide to start spreading the load over more paths.

Configuring MAXMSG Values

TCS has been generally well-received, delivering good performance and reducing issues arising from out-of-date transport class definitions. But to get the maximum value from this enhancement, installations should ensure that their XCF signaling resources are consistent with XCF's new approach and latest guidelines.

The following discussion assumes that transport class simplification is active. This gets a little complicated, so buckle up and let's go.

To get you rolling, there are two critical sets of numbers you should determine - the maximum amount of storage that could possibly be consumed by XCF signaling buffer pools, and the current 'impactful delay'. Let's look at the storage question first - that will help you determine how close you are to IBM's guideline value.

Figure 16 - MAXMSG Definitions

As shown in Figure 16, the MAXMSG parameter is specified in the COUPLExx Parmlib member and controls the maximum amount of storage that can be used for XCF buffers. MAXMSG values can be specified at the transport class (CLASSDEF), outgoing path (PATHOUT), incoming path (PATHIN), and local message (LOCALMSG) levels. You can also specify a 'default' MAXMSG value on the COUPLE statement. MAXMSG is specified in 1 KB units and defaults to 2000 (that is, about 2 MB).

You can’t explicitly define the _XCFMGD pseudo transport class using a CLASSDEF statement, which means that you can’t specify a MAXMSG value for _XCFMGD. Rather, XCF automatically sets the MAXMSG for the _XCFMGD pseudo-transport class to be the sum of the MAXMSG values of the other defined transport classes.

Note: We generally recommend that customers retain their traditional CLASSDEF statements in their COUPLExx member - this gives you the ability to back out to how you ran prior to TCS should you wish to. However, if you have a good reason for removing those CLASSDEF statements, you need to consider the impact that would have on the _XCFMGD MAXMSG value. If you have (for example) three transport classes defined, each with a MAXMSG of 10,000, _XCFMGD’s MAXMSG would drop by 30,000 KB if you delete all those CLASSDEF statements, which is probably not what you want to happen all at one time. But if you still have your heart set on deleting those CLASSDEF statements, Mark recommends this methodology:

- Remove MAXMSG from all the path definitions.

- Use the COUPLE MAXMSG to specify the default MAXMSG you want the inbound paths to use. Call this value ‘PIM’.

- Keep (or create) a CLASSDEF statement for the DEFAULT transport class and set its MAXMSG to be the value you want the outbound paths to use. Call this value ‘POM’.

Let N be the number of signal paths for a particular system. Then the total inbound space permitted is equal to the number_of_connected_systems * N * PIM KB, and _XCFMGD will have number_of_connected_systems * (N+1) * POM KB available. Adjust the POM to give you the number you're looking for.
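The two formulas above can be sketched in a few lines of Python. This is only an illustration: the function names and the sample numbers are ours, and we assume that N is the number of signal paths to each connected system.

```python
def inbound_space_kb(connected_systems: int, n_paths: int, pim_kb: int) -> int:
    """Total inbound space permitted: connected systems * N * PIM."""
    return connected_systems * n_paths * pim_kb


def xcfmgd_space_kb(connected_systems: int, n_paths: int, pom_kb: int) -> int:
    """Space available to _XCFMGD: connected systems * (N + 1) * POM."""
    return connected_systems * (n_paths + 1) * pom_kb


def pom_for_target(target_kb: int, connected_systems: int, n_paths: int) -> int:
    """Largest POM (in KB) that keeps the _XCFMGD space at or below target_kb."""
    return target_kb // (connected_systems * (n_paths + 1))


# Hypothetical sysplex: 3 connected systems, 4 paths to each of them.
print(inbound_space_kb(3, 4, 2000))    # 3 * 4 * 2000
print(xcfmgd_space_kb(3, 4, 2000))     # 3 * (4 + 1) * 2000
print(pom_for_target(30_000, 3, 4))    # POM that yields 30,000 KB for _XCFMGD
```

Working backwards from the total you want (as `pom_for_target` does) is usually easier than adjusting POM by trial and error.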

Determining Total Maximum XCF Buffer Storage

The maximum amount of storage that can be used for XCF buffers on a single system is calculated as follows:

- Add together the MAXMSG values for all transport classes, all PATHOUTs, and all PATHINs.

- Multiply that sum by (the number of systems in the sysplex - 1).

- Then add the MAXMSG for LOCALMSG. (This is used for XCF messages sent from a member of an XCF group on this system to a member of an XCF group on this system, so it is unaffected by the number of other systems in the sysplex.)
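As a rough illustration, the calculation just described could be expressed like this in Python. The function name and the sample MAXMSG values are hypothetical; the sketch simply follows the steps as listed and assumes the same definitions apply for every connected system.

```python
def max_xcf_buffer_kb(classdef_maxmsg, pathout_maxmsg, pathin_maxmsg,
                      systems_in_sysplex, localmsg_maxmsg):
    """Maximum XCF buffer storage (KB) for one system, per the steps above."""
    if systems_in_sysplex < 1:
        raise ValueError("a sysplex has at least one system")
    per_connected_system = (sum(classdef_maxmsg)
                            + sum(pathout_maxmsg)
                            + sum(pathin_maxmsg))
    # Scale by the number of OTHER systems, then add the local buffers once.
    return per_connected_system * (systems_in_sysplex - 1) + localmsg_maxmsg


# Hypothetical definitions: two transport classes, two PATHOUTs, two PATHINs,
# four systems in the sysplex.
total_kb = max_xcf_buffer_kb(
    classdef_maxmsg=[3000, 3000],
    pathout_maxmsg=[2000, 2000],
    pathin_maxmsg=[2000, 2000],
    systems_in_sysplex=4,
    localmsg_maxmsg=3000,
)
print(total_kb)
```

Note how the number of systems multiplies everything except LOCALMSG, which is why large sysplexes reach high totals so quickly.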

You can get this information from SMF or using DISPLAY XCF commands. There are many different products to process SMF data, so in this article we use XCF commands to illustrate how to determine the maximum buffer pool value.

Recommendation: The latest guidance from the XCF experts in IBM (as of August 2022) is to aim to keep the total maximum PATHIN buffer pool space to less than 800 MB. They are aware that, when you add in the total maximum PATHOUT and transport class buffer pool space, you could end up with a number greater than 2 GB. However, as indicated earlier, their experience is that it is unlikely for a system to use its entire outgoing message buffer pool space, and therefore it is OK if the sum of all the maximum buffer pool sizes exceeds 2 GB.

While we understand that it is more work to reduce ALL your MAXMSG values (sending as well as receiving), our (Watson & Walker and IntelliMagic) advice is that you try to reduce your MAXMSG values with the aim of having a total maximum XCF buffer pool size of no more than 1000 MB.

This might not be possible in very large sysplexes with many systems and many signaling structures - for those readers, we plan to provide a little more granular advice in the next article in this series that could let you exceed the 1000 MB guideline while still protecting your performance and your resilience.

But if you can achieve it, we believe that would be a worthy goal. Until the XCF buffers are moved to 64-bit storage, and at least until the fix for APAR OA62980 is applied to all your systems, there is a possibility that XCF could run out of storage for its buffers, resulting in a wait state. If you can reduce that possibility by spending a little more time in optimizing your MAXMSG values, we feel that would be a good investment of your time.

Unfortunately there is no DISPLAY XCF command that will show the sum of all the MAXMSG values or even just all PATHIN MAXMSG values. However, performing that calculation manually using the available commands is not that difficult, as long as you don’t have to do it every day. If you would like to keep an ongoing watchful eye on this, we recommend using a tool that will extract the information from your SMF data. IntelliMagic Vision will be performing this MAXMSG calculation and providing an associated health insights rating for its users in an upcoming release.

To display information about ALL the transport classes defined on this system, use the D XCF,CD,CLASS=ALL command as shown in Figure 17 on page 24.

Figure 17 - D XCF,CD Output

For the purposes of this exercise, it will be easier if you request information for only the _XCFMGD pseudo transport class - D XCF,CD,CLASS=_XCFMGD. The key fields in this output are the SUM MAXMSG field for the _XCFMGD transport class, and the ‘FOR SYSTEM’ field (FPK1 in this example).

Note: Whereas each PATHIN has its own buffer pool, XCF groups the transport class buffer pool (specified on the MAXMSG parm) together with the buffer pools for all PATHOUTs assigned to that transport class, creating a single large buffer pool.

Additionally, when TCS is enabled, the buffer pools for the traditional transport classes, and for all the PATHOUTs assigned to those classes, are included in that single large buffer pool. The sum of all those buffer pools (for each connected system) is reported in the SUM MAXMSG field, as highlighted in Figure 17.

In case you are not familiar with the D XCF,CD command, notice that each section in the output shows the transport class name and the ‘FOR SYSTEM’ name. If you scroll down in the output you will see that there are multiple _XCFMGD sections, one for each connected system. You should extract the SUM MAXMSG: value for every system and include that in your calculation.

The next piece of information you want is the sum of the PATHIN MAXMSG values, for all connected systems. Figure 18 shows a sample output from the D XCF,PATHIN,STRNM=ALL command.

Figure 18 - D XCF,PATHIN Output

The important fields in this figure are the path name, the name of the system at the other end of that path (the ‘Remote System’), and the MAXMSG values for each path. Once again, you need to sum the MAXMSG values for all PATHINs, from all connected systems. For the purposes of this discussion, you can ignore the information in the bottom half of the display.

Finally, the third class of buffer pools you should include in your calculations is the LOCALMSG buffers. These are a little different to the buffers used for communication with other systems, in that there are no separate PATHIN buffers for local messages - the one set of buffers effectively acts as both PATHIN and PATHOUT. Looking back at Figure 17 on page 24, you will see a line that says “_XCFMGD TRANSPORT CLASS USAGE FOR SYSTEM FPK0”. FPK0 is the system where the command was issued, so that section is reporting on local XCF messages. You should use the SUM MAXMSG value from that section for the LOCALMSG part of your equation.

Using this information, we can calculate that the maximum storage that can be consumed by XCF buffer pools on this system is:

_XCFMGD to FPK1      18,288 KB
PATHINs from FPK1     8,000 KB
LOCALMSG              6,096 KB
TOTAL                32,384 KB

This was a very simple example because there are only two systems in this sysplex, only two traditional transport classes, and two signaling paths per transport class. It is very likely that your system will be somewhat more complicated than this. Nevertheless, the basic methodology should work for you as well.
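For readers who prefer to script this, the following Python sketch reproduces the arithmetic for the example system and checks the results against the guideline values mentioned earlier. The function and constant names are ours, the per-system values would have to be keyed in from your own DISPLAY XCF output, and we follow the article's rounding of 1 MB to about 1000 KB.

```python
KB_PER_MB = 1000            # assumption: the article rounds 1 MB to ~1000 KB
PATHIN_GUIDELINE_MB = 800   # IBM guidance for total maximum PATHIN space
TOTAL_TARGET_MB = 1000      # Watson & Walker / IntelliMagic overall target


def summarize_buffer_maximums(xcfmgd_sum_maxmsg_kb, pathin_maxmsg_kb, localmsg_kb):
    """Sum the per-connected-system values and compare with the guidelines."""
    pathin_total = sum(pathin_maxmsg_kb.values())
    total = sum(xcfmgd_sum_maxmsg_kb.values()) + pathin_total + localmsg_kb
    return {
        "pathin_total_kb": pathin_total,
        "total_kb": total,
        "pathin_within_guideline": pathin_total < PATHIN_GUIDELINE_MB * KB_PER_MB,
        "total_within_target": total <= TOTAL_TARGET_MB * KB_PER_MB,
    }


# Values from the example: one connected system (FPK1) plus local messages.
summary = summarize_buffer_maximums(
    xcfmgd_sum_maxmsg_kb={"FPK1": 18_288},   # SUM MAXMSG for _XCFMGD to FPK1
    pathin_maxmsg_kb={"FPK1": 8_000},        # sum of all PATHINs from FPK1
    localmsg_kb=6_096,                       # SUM MAXMSG for the local system
)
print(summary)
```

In a larger sysplex you would add one dictionary entry per connected system; the logic stays the same.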

In this example, the maximum total PATHIN buffer pool space, about 8 MB, was well below the IBM recommended maximum of 800 MB, and even the total of all maximum buffer pool storage was only 32 MB. But what if your number was more than 800 MB (we have seen sysplexes where the total in each system was nearly 8000 MB!)? In that case, you have a little more work to do. This is where those new XCF metrics come into play.

Adjusting MAXMSG values

If your maximum XCF buffer pool space significantly exceeds the IBM guidelines, it would be prudent to start adjusting your MAXMSGs downwards. As discussed at the start of this article, we recommend that you start by decreasing the PATHIN values. If you get those down to a point where the ‘impactful no buffer delay time’ is borderline acceptable, but your total MAXMSGs still exceed our 1000 MB target, we recommend looking at the transport class and PATHOUT MAXMSGs, and reviewing whether your number of signaling paths is still appropriate - but we will cover that side of things in the next article.

While the PATHIN MAXMSG values are defined in the COUPLExx member of Parmlib, they can be adjusted dynamically using the following command:

SETXCF MODIFY,PI,STRNM=structure_name,MAXMSG=nnnn

The PATHIN MAXMSG can be adjusted separately on each system (the scope of that MODIFY command is a single system), and there is no definitive relationship between the MAXMSG values for the two ends of the path. In fact, we recommend getting your PATHIN MAXMSG values to your targets before you start adjusting the MAXMSG values for the PATHOUT end of those paths.

Recommendation: If you have a complex environment, with many systems and many signaling paths, we recommend creating an automation script that will apply your MAXMSG adjustments consistently across all your systems and paths.
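As a starting point for such a script, here is a minimal Python sketch that generates the command text from a single table of target values, so that every system and path is handled the same way. It only builds strings in the SETXCF MODIFY form quoted above (written without embedded blanks); how the commands are actually issued on each system is left to your own automation product. The function name and the structure names are hypothetical.

```python
def build_pathin_commands(targets):
    """Build SETXCF MODIFY command text per system.

    targets maps system name -> {signaling structure name -> new PATHIN MAXMSG}.
    """
    commands = {}
    for system, paths in sorted(targets.items()):
        system_cmds = []
        for strname, maxmsg in sorted(paths.items()):
            if not isinstance(maxmsg, int) or maxmsg <= 0:
                raise ValueError(f"bad MAXMSG for {system}/{strname}: {maxmsg!r}")
            system_cmds.append(f"SETXCF MODIFY,PI,STRNM={strname},MAXMSG={maxmsg}")
        commands[system] = system_cmds
    return commands


# Hypothetical targets: structure names here are placeholders only.
targets = {
    "FPK0": {"IXC_SIG1": 2000, "IXC_SIG2": 2000},
    "FPK1": {"IXC_SIG1": 2000, "IXC_SIG2": 2000},
}
for system, cmds in build_pathin_commands(targets).items():
    for cmd in cmds:
        print(f"{system}: {cmd}")
```

Keeping the targets in one table also gives you a record of what was changed, which is useful when you later make the matching updates to COUPLExx.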

When you start adjusting your PATHIN MAXMSG values you will obviously want to keep an eye on your XCF performance to ensure that you haven’t reduced your MAXMSG values too much. We recommend focusing on the impactful delay times, as shown in Figure 15 on page 20, while also keeping an eye on the number of No Buffer conditions. Both of these values are reported in the RMF Postprocessor XCF Path Statistics report - an example is shown in Figure 19. Note that the BUFFERS UNAVAIL column contains the traditional count of the number of no buffer conditions, while the NO BUF column contains the new count of no buffer events that actually had an impact. In either case, remember what we said about non-zero No Buffer counts not necessarily being a bad thing if TCS is enabled.

Figure 19 - RMF XCF Path Usage Report

An additional piece of data that might be helpful is the R742PUSE field in the type 74.2 SMF record. This information is provided for each path, and reports the number of KBs of storage in use for that path’s buffers at the end of the RMF interval. Note that that value is not an average, nor is it the peak during the interval. It is certainly possible that the amount of storage in use at some point during the interval is a lot higher than the value shown in the R742PUSE field.

However, if you look at data for a sufficiently long period, the values should be a reasonable representation of how much storage is being used during normal processing. An example is shown in Figure 20 on page 28. This chart shows the number of KB of allocated PATHIN buffer space for each of the PATHINs on a particular system. You can see that, apart from a few spikes, the usage is reasonably consistent across the day. And if you look at the corresponding chart for a number of representative peak days, that should give you a ballpark feel for buffer usage at the moment.
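If you have extracted the R742PUSE values into a simple per-path list of interval samples, a short Python sketch like the following can summarize them. The input layout, the function name, and the sample numbers are all assumptions made for illustration. Remember that each sample is an end-of-interval snapshot, so even the highest sample may understate the true peak.

```python
import statistics


def summarize_pathin_usage(samples_kb_by_path):
    """Summarize end-of-interval buffer usage samples (KB) for each PATHIN."""
    summary = {}
    for path, samples in samples_kb_by_path.items():
        if not samples:
            continue  # no intervals recorded for this path
        summary[path] = {
            "intervals": len(samples),
            "median_kb": statistics.median(samples),
            # Highest end-of-interval snapshot, NOT the true in-interval peak.
            "highest_sample_kb": max(samples),
        }
    return summary


# Hypothetical samples for one path: steady usage with a single spike.
usage = summarize_pathin_usage({
    "PATH_A": [400, 420, 410, 390, 1800, 405, 415],
})
print(usage)
```

Comparing the median with the highest sample gives a quick indication of whether a path's usage is steady or spiky before you decide how far to lower its MAXMSG.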

Figure 20 - Displaying XCF Storage Use for PATHIN Buffers (© IntelliMagic Vision)

How quickly to adjust the MAXMSG values?

If your PATHIN MAXMSG is currently set to 20,000, how quickly should you move to 2,000? For example, should you go directly from 20,000 to 2,000? Or should you go to 10,000 first, followed by smaller reductions?

Sadly, the right answer is “it depends”. We don’t think there is one correct answer that applies to every site or every sysplex. It will depend on your history with XCF, on the nature of your workload, on your ability to make dynamic changes versus each change requiring an IPL, and on how far you are from your target value. I’m afraid that we need to leave this question to your best judgment and knowledge of how things work best in your site.

Once you get your total maximum XCF buffer space down to your target, it is time to shift into business-as-usual monitoring mode. The metrics that you used to monitor XCF performance while you adjusted the MAXMSG values are likely the same ones you should be monitoring on an ongoing basis. But these are all focused on signaling infrastructure performance - don’t forget the XCF group and member-related metrics we discussed in the previous article in this series.

References

The following documents contain additional information about XCF and its enhancements:

- IBM Manual, Setting Up a Sysplex, SA23-1399.

- IBM TechU 2021 ‘z/OS Parallel Sysplex update: Eliminating XCF transport classes’ presentation by Mark Brooks.

- IntelliMagic zAcademy webinar Insights into New XCF Path Usage Metrics, by Todd Havekost and Frank Kyne.

- SHARE in Columbus 2022, session Parallel Sysplex Update, by Mark Brooks. While Mark’s slides are always comprehensive, we highly recommend attending one of Mark’s sessions in person if you get the opportunity to do so.

- ‘XCF Transport Class Simplification’ article in Tuning Letter 2020 No. 2.

- ‘XCF – A Reliable (But Often Overlooked) Component of Sysplex’ article in Tuning Letter 2022 No. 2.

Summary

I (Frank) would like to start by thanking Todd and Mark for their fantastic work on this article. You would not believe how much time they put into it. However, we all felt that this is an important topic that impacts most of our readers, and therefore was worth the effort.

The Transport Class Simplification changes in z/OS 2.4 make your job easier, and for nearly all customers, they deliver better performance and more resiliency. For a very small percentage of customers (those with huge MAXMSG values), in situations with abnormally high message volumes, TCS increases the possibility of XCF running out of storage in the XCF data space. If that were to happen, XCF currently (October 2022) puts the system in a wait state. Hopefully APAR OA62980 will be available in the near term, and that will change XCF so that it no longer puts the system in a wait state. In the interim, following the recommendations in this article will both protect you from that situation and give you a more optimized XCF infrastructure for the long haul.

We hope you found this article helpful, and that it answered some questions you have about how XCF signaling works, and why it works as it does. Our next article will focus on the resources used for outgoing messages - transport classes, PATHOUTs, and how many paths you really need.

If you have any questions or suggestions, please email us. We always love to hear from our readers.