Cluster deployments and endurance tests - IBM Business Automation Manager Open Editions

The focus of this post is on how to deploy KieServer runtime-based applications and to demonstrate the solidity and reliability of the product.

1. Introduction

After IBM took over Red Hat's PAM/DM products, I started following this relatively new topic closely.

I've also started seeing customers who have expiring contracts for RH PAM/DM support licenses, contracts previously signed with Red Hat and due to be signed with IBM starting this year. All customers legitimately asked questions about the future of these products and about how IBM intends to continue developing new features and supporting versions already in use.

The answer was easy: IBM will continue support and development in line with what Red Hat offered in the past, and will raise the level of support to match the already high level offered for all IBM products.

IBM's involvement in the OSS sector has been public knowledge for decades and needs no further justification.

So I decided to write a post in which to present how easy it is to deploy applications in Production and Production-like environments.

For the sake of completeness I wanted to deploy the same application both in a 'traditional' environment (a pair of virtual machines) and in an OpenShift containerized environment using a specific Operator.

In both scenarios I used a Postgres database instance.

To create a concrete example that would enable both the development of the application and the use of the infrastructure in a simple way, I chose to use BPMN processes with Timer elements to stress the system in a high workload scenario.

To be completely honest, I chose to use the Timers because some customers had complained of problems using them and this prompted me to check for the real presence of bugs.

2. Goals

As briefly anticipated, the goal of this post is to demonstrate how simple it is to deploy and configure KieServer runtime clustered solutions.

The secondary objective is to offer customers who already use KieServer deployments in traditional environments a possible migration of their solutions to the OpenShift environment.

Functionally, migrating applications to the OpenShift environment is little more than a lift-and-shift (the applications remain intact), while the infrastructure is reworked, with productivity gains and cost savings for IT operations.

All topped off with a notable improvement in the flexibility of the entire solution.

3. Scenario

The scenario that we are going to see now consists of an application with two very simple BPMN processes.

As anticipated, two deployment methods are defined and described below.

The software versions I used are as follows:

- Local cluster: IBM BAM OE 8.0.1 / JBoss EAP 7.4.8

- OpenShift cluster: IBM Business Automation 8.0.1-2 provided by IBM

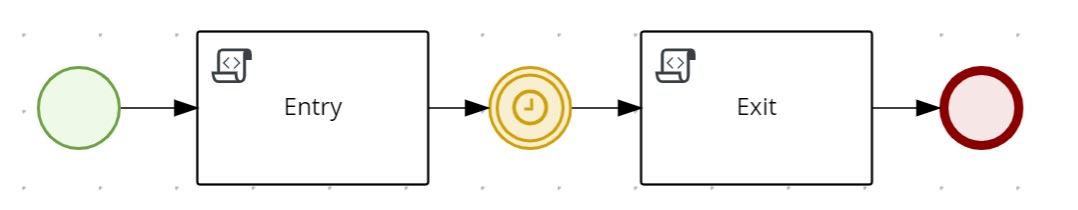

3.1 The application

The first process consists of a timer which suspends navigation of the flow for a number of seconds defined by a process input variable; once the timer has expired, the process ends automatically.

In this scenario, the ratio of process-instances to active-timers is 1:1.

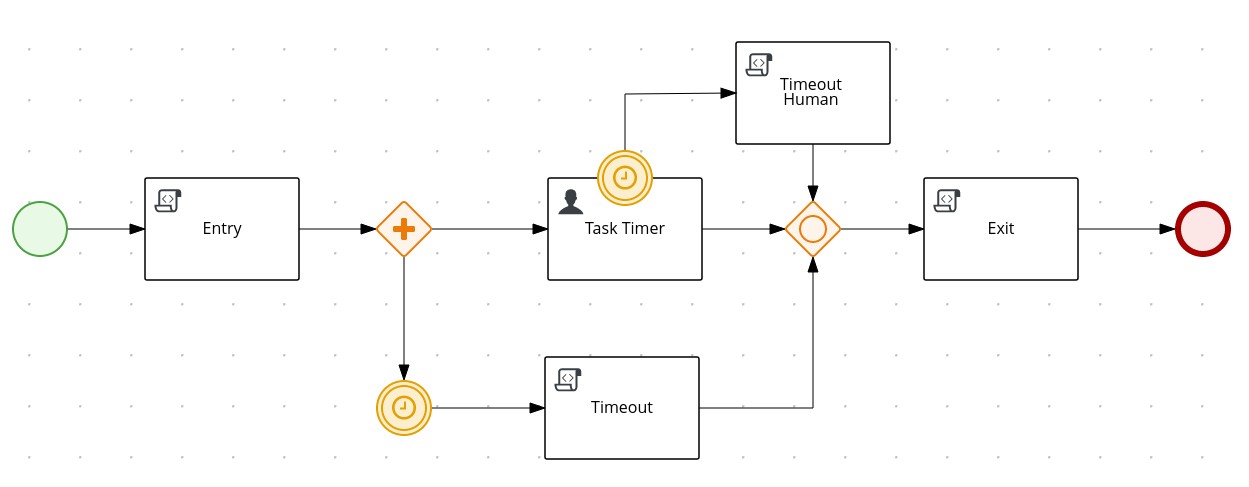

The second process consists of two parallel streams.

In the first parallel flow there is a HumanTask associated with a timer that forcibly completes the human task after a number of seconds defined by a process input variable; once the timer expires, the flow reaches the join and waits for the other flow to complete.

In the second parallel flow there is a timer which suspends navigation for a number of seconds defined by a second process input variable; once the timer expires, the flow reaches the join and waits for the other flow to complete.

Once the two flows have been completed, the process ends automatically.

The presence of the HumanTask is only useful for stressing the KieServer/Database infrastructure in a high load scenario; no Form/REST interaction with Human Tasks is expected during load testing.

In this scenario, the ratio of process-instances to active-timers is 1:2.

The application is available at the link https://github.com/marcoantonioni/BAMOE-KieServer-TestTimer1

A point of attention to keep in mind is the configuration, in the Kie deployment descriptor, of the <runtime-strategy> in 'PER_PROCESS_INSTANCE' mode.
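Before building the KJAR, this setting can be checked from the shell. What follows is only a minimal sketch: it assumes the descriptor lives at the conventional KJAR path `src/main/resources/META-INF/kie-deployment-descriptor.xml` and that the element is written on a single line; the function name is hypothetical.

```shell
# Hypothetical helper: succeeds only if the deployment descriptor declares
# the PER_PROCESS_INSTANCE runtime strategy.
# Assumption: the element is on one line, with no whitespace inside the tags.
has_per_process_instance() {
  descriptor="$1"
  grep -q '<runtime-strategy>PER_PROCESS_INSTANCE</runtime-strategy>' "$descriptor"
}

# Usage (path assumed to be the standard KJAR location):
# has_per_process_instance src/main/resources/META-INF/kie-deployment-descriptor.xml \
#   && echo "strategy OK" || echo "strategy missing or different"
```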

3.2 The deployments

I have defined two deployment methods, the first 'traditional' and the second 'containerized'; both use a remote database instance.

The 'traditional' mode runs on a pair of RHEL9 virtual machines, each with a KieServer instance, plus a third RHEL9 virtual machine with a PostgreSQL database instance.

The 'containerized' mode runs in an OpenShift environment, deployed via the Operator with a PostgreSQL database.

For both deployments I used a development environment with Business Central for the sole purpose of simplifying the deployment of the application.

3.2.1 Database

In a 'traditional' environment I installed and configured the PostgreSQL database.

For the basic installation only, you can take a look at https://github.com/marcoantonioni/BAMOE-KieServer-PostgreSQL

The correct configurations of datasources for a clustered environment are shown below.

Remember to add the PostgreSQL driver using the following commands:

```shell
# add postgresql driver (set your file fullpath) using jboss-cli.sh

# run cli
./jboss-cli.sh

# run command
module add --name=com.postgresql --resources=/home/marco/Downloads/postgresql-42.5.1.jar --dependencies=javaee.api,sun.jdk,ibm.jdk,javax.api,javax.transaction.api

# quit cli
exit
```

In a 'containerized' environment I only had to indicate to the Operator the type of database desired... zero effort !!!

3.2.2 Local VMs cluster

3.2.2.1 Database, schema and tables creation

To create the database to support the KieServer cluster I extracted the 'postgresql-jbpm-schema.sql' file from the addons package and ran the following commands:

```shell
JBPM_SCHEMA_FILE=/tmp/postgresql-jbpm-schema.sql
PGPASSWORD=kie01server psql -U kieserver -h remote.postgres.net -d postgres -c "\l+"
PGPASSWORD=kie01server psql -U kieserver -h remote.postgres.net -d postgres -c "DROP DATABASE kieserver01;"
PGPASSWORD=kie01server psql -U kieserver -h remote.postgres.net -d postgres -c "\l+"
PGPASSWORD=kie01server psql -U kieserver -h remote.postgres.net -d postgres -c "CREATE DATABASE kieserver01;"
PGPASSWORD=kie01server psql -U kieserver -h remote.postgres.net -d postgres -c "\l+"
PGPASSWORD=kie01server psql -U kieserver -h remote.postgres.net -d kieserver01 -a -f ${JBPM_SCHEMA_FILE}
PGPASSWORD=kie01server psql -U kieserver -h remote.postgres.net -d kieserver01 -c "\dt+"
```

3.2.2.2 Datasource and Timer-Service configuration

These are the configurations that require the most attention in a clustered KieServer scenario.

As indicated in the manuals, I used and customized the 'standalone-full.xml' file.

Obviously the configurations for the two KieServer instances are identical.

The only difference between the two virtual machines is the host name which, as we will see later, is used as a marker for the timers taken over by a KieServer instance (in my personal opinion this is not the right approach when multiple KieServer instances run inside the same virtual machine).

I made the following configurations

3.2.2.2.1 Section <system-properties>

```xml
<!-- Update begin -->
<property name="org.kie.server.location" value="http://localhost:8080/kie-server/services/rest/server"/>
<property name="org.kie.server.controller" value="http://localhost:8080/business-central/rest/controller"/>
<property name="org.kie.server.controller.user" value="controllerUser"/>
<property name="org.kie.server.controller.pwd" value="newPassw0rd"/>
<property name="org.kie.server.user" value="controllerUser"/>
<property name="org.kie.server.pwd" value="newPassw0rd"/>
<property name="org.kie.server.id" value="default-kieserver"/>
<!-- Data source properties. -->
<property name="org.kie.server.persistence.ds" value="java:jboss/datasources/PostgresDS"/>
<property name="org.kie.server.persistence.dialect" value="org.hibernate.dialect.PostgreSQLDialect"/>
<!-- EJB properties. -->
<property name="org.jbpm.ejb.timer.tx" value="true"/>
<property name="org.jbpm.ejb.timer.local.cache" value="false"/>
<!-- Update end -->
```

In this section, besides the KieServer access credentials and the datasource type, the two EJB settings are important. The first enables transaction management (tx). The second (not to be used in production) disables the cache and therefore generates greater load and access contention on the database; one of the objectives of this test is to put the infrastructure under stress.

3.2.2.2.2 Section <subsystem xmlns="urn:jboss:domain:datasources:6.0"> / <datasources>

```xml
<xa-datasource jndi-name="java:/jboss/datasources/EJBTimerDS" pool-name="poolPostgresqlEJB_TIMER" enabled="true" use-java-context="true">
    <xa-datasource-property name="DatabaseName">kieserver01</xa-datasource-property>
    <xa-datasource-property name="PortNumber">5432</xa-datasource-property>
    <xa-datasource-property name="ServerName">remote.postgres.net</xa-datasource-property>
    <driver>postgresql</driver>
    <transaction-isolation>TRANSACTION_READ_COMMITTED</transaction-isolation>
    <xa-pool>
        <min-pool-size>30</min-pool-size>
        <max-pool-size>50</max-pool-size>
    </xa-pool>
    <security>
        <user-name>kieserver</user-name>
        <password>kie01server</password>
    </security>
    <validation>
        <validate-on-match>true</validate-on-match>
        <background-validation>false</background-validation>
        <valid-connection-checker class-name="org.jboss.jca.adapters.jdbc.extensions.postgres.PostgreSQLValidConnectionChecker"/>
        <exception-sorter class-name="org.jboss.jca.adapters.jdbc.extensions.postgres.PostgreSQLExceptionSorter"/>
    </validation>
</xa-datasource>
<xa-datasource jndi-name="java:/jboss/datasources/PostgresDS" pool-name="poolPostgresDS" enabled="true" use-java-context="true">
    <xa-datasource-property name="DatabaseName">kieserver01</xa-datasource-property>
    <xa-datasource-property name="PortNumber">5432</xa-datasource-property>
    <xa-datasource-property name="ServerName">remote.postgres.net</xa-datasource-property>
    <driver>postgresql</driver>
    <xa-pool>
        <min-pool-size>30</min-pool-size>
        <max-pool-size>50</max-pool-size>
    </xa-pool>
    <security>
        <user-name>kieserver</user-name>
        <password>kie01server</password>
    </security>
    <validation>
        <validate-on-match>true</validate-on-match>
        <background-validation>false</background-validation>
        <valid-connection-checker class-name="org.jboss.jca.adapters.jdbc.extensions.postgres.PostgreSQLValidConnectionChecker"/>
        <exception-sorter class-name="org.jboss.jca.adapters.jdbc.extensions.postgres.PostgreSQLExceptionSorter"/>
    </validation>
</xa-datasource>
<drivers>
    <driver name="postgresql" module="com.postgresql">
        <xa-datasource-class>org.postgresql.xa.PGXADataSource</xa-datasource-class>
    </driver>
</drivers>
```

As indicated in the manuals, I configured two different XA datasources, one generic and one dedicated to timer management via EJB; the latter, as you can see, has a specific 'transaction-isolation'.

Both datasources use the PostgreSQL driver definition.

3.2.2.2.3 Section <subsystem xmlns="urn:jboss:domain:ejb3:9.0">

```xml
<timer-service thread-pool-name="default" default-data-store="poolPostgresqlEJB_TIMER_ds">
    <data-stores>
        <database-data-store name="poolPostgresqlEJB_TIMER_ds" datasource-jndi-name="java:/jboss/datasources/EJBTimerDS" database="postgresql" partition="ejb_timer_part" refresh-interval="30000"/>
    </data-stores>
</timer-service>
```

In this section the important configuration points are the reference to the datasource dedicated to the EJB timer (with its isolation level), the refresh interval for reading data from the database, and above all the definition of the 'partition': all KieServer instances share the management of the timers allocated in this partition, so it acts as a true workload balancer.
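Since the balancing only works if every node uses the same partition, it can be useful to compare the value on the two VMs. The following is a sketch, not part of the original setup: the function name and the file paths in the usage note are hypothetical, and it assumes the `<database-data-store>` element is written on a single line.

```shell
# Hypothetical helper: print the 'partition' attribute of the first
# <database-data-store> element found in a JBoss EAP configuration file.
# Assumption: the whole element sits on one line of the file.
timer_partition() {
  sed -n 's/.*<database-data-store[^>]* partition="\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Usage (paths are placeholders for copies of the two nodes' files):
# p1=$(timer_partition ./node1-standalone-full.xml)
# p2=$(timer_partition ./node2-standalone-full.xml)
# [ "$p1" = "$p2" ] && echo "same partition: $p1" || echo "partition mismatch"
```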

3.2.3 OpenShift cluster

For deployment in the OpenShift environment I used the following commands via the OCP CLI.

3.2.3.1 Operator installation

```shell
# namespace creation
TNS=bamoe-cluster
oc new-project ${TNS}

# BAM OE operator deployment
cat << EOF | oc create -f -
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  generateName: my-ibamoe-operator-
  name: my-ibamoe-operator-marco
spec:
  targetNamespaces:
  - ${TNS}
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: bamoe-businessautomation-operator
  namespace: ${TNS}
spec:
  channel: 8.x-stable
  installPlanApproval: Automatic
  name: bamoe-businessautomation-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
  startingCSV: bamoe-businessautomation-operator.8.0.1-2
EOF
```

3.2.3.2 KieApp resource deployment

```shell
# KieApp deployment
cat << EOF | oc create -f -
apiVersion: app.kiegroup.org/v2
kind: KieApp
metadata:
  name: rhpam-authoring
  namespace: ${TNS}
spec:
  commonConfig:
    adminPassword: passw0rd
    adminUser: admin
    amqClusterPassword: passw0rd
    amqPassword: passw0rd
    applicationName: my-app-timers
    dbPassword: passw0rd
    disableSsl: true
    keyStorePassword: passw0rd
  environment: rhpam-authoring
  objects:
    servers:
    - database:
        type: postgresql
      deployments: 1
      env:
      - name: DATASOURCES
      - name: DB_SERVICE_PREFIX_MAPPING
        value: my-app-timers-postgresql=RHPAM
      id: my-app-timers-srv-id
      jbpmCluster: true
      replicas: 3
EOF
```

The 'cluster' type KieApp configuration creates 5 new pods: one for the PostgreSQL database, three for KieServer and one for the BusinessCentral application, through which you import the application from https://github.com/marcoantonioni/BAMOE-KieServer-TestTimer1 and then deploy it to the 3 pods that contain the KieServer runtime.

Pay attention to 'jbpmCluster: true' and to the environment variables: DATASOURCES has an empty value and DB_SERVICE_PREFIX_MAPPING has the value 'my-app-timers-postgresql=RHPAM'. These two variables guide the operator in creating the 'timer-service' configuration, which enables load balancing of the timers across all three KieServer pods in which our application runs.

To access the BusinessCentral application and import and deploy the application, use the link obtained through the commands

```shell
BC_SERVER_URL=http://$(oc get route my-app-timers-rhpamcentr -o jsonpath='{.spec.host}')
echo ${BC_SERVER_URL}
```

After the deployment of the application, 3 new KieServer pods will be created to replace the previous ones.

If you are curious, you can inspect the KieServer configuration file in one of the three pods; the file is at the path /opt/eap/standalone/configuration/standalone-openshift.xml

```shell
# application name set in the KieApp resource (commonConfig.applicationName)
KIE_APP_NAME=my-app-timers

# select one of the 3 KieServer pods
ONE_OF_KIE_SRV=$(oc get pods -n ${TNS} | grep ${KIE_APP_NAME}-kieserver | grep -v postgres | grep Running | head -1 | awk '{print $1}')

# copy the configuration file used in the KieServer pod to your disk
oc cp ${ONE_OF_KIE_SRV}:/opt/eap/standalone/configuration/standalone-openshift.xml ./${ONE_OF_KIE_SRV}-standalone-openshift.xml
```

The partition used to balance the EJB timer load is named 'my_app_timers_postgresql-EJB_TIMER_part'; it is formed by your app name 'my_app_timers' plus a standard suffix.
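To check the copied file for the expected partition, the name can be derived from the application name. This is a sketch based only on the single value observed above: the rule (hyphens turned into underscores, then the suffix `_postgresql-EJB_TIMER_part`) is my inference, not a documented Operator contract, and the function name is hypothetical.

```shell
# Hypothetical helper: build the partition name that was observed for
# applicationName 'my-app-timers'.
# Assumption: hyphens become underscores and a fixed suffix is appended.
expected_partition() {
  app_name="$1"
  printf '%s_postgresql-EJB_TIMER_part\n' "$(printf '%s' "$app_name" | tr '-' '_')"
}

# Usage against the file copied from the pod:
# grep -c "partition=\"$(expected_partition my-app-timers)\"" ./*-standalone-openshift.xml
```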

Obviously, for a deployment through the Operator in an OpenShift environment, most of the configuration is done automatically, with less effort from the IT team and a lower risk of human error.

4. Tests

To run the tests and then the stress tests I used some simple scripts that you can find at the link https://github.com/marcoantonioni/BAMOE-KieServer-TestTimer1Load

To use them, remember to change the SERVER_URL variable to use your own servers.

Before starting the stress test, I started individual process instances to verify the deployment and the balancing configuration; to validate the balancing, repeat a few runs until a process instance is started on one server and terminated on the other.
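This check can also be done on saved log files instead of by eye. The sketch below assumes the processes print `===> <ProcessName> ENTRY pid[N]` and `===> <ProcessName> EXIT pid[N]` lines, as in the traces shown later in this post (I have only seen the EXIT line for TestTimer1Human, so its presence for TestTimer1 is an assumption); the function names and file names are hypothetical.

```shell
# Print the process instance ids logged for one event (ENTRY or EXIT).
pids_for_event() {
  event="$1"; logfile="$2"
  grep -o "===> [A-Za-z0-9]* $event pid\[[0-9]*]" "$logfile" \
    | sed 's/.*pid\[\([0-9]*\)].*/\1/' | sort -u
}

# Print the ids of instances started on one node and completed on the other.
cross_node_pids() {
  entry_log="$1"; exit_log="$2"
  entries=$(mktemp); exits=$(mktemp)
  pids_for_event ENTRY "$entry_log" > "$entries"
  pids_for_event EXIT "$exit_log" > "$exits"
  comm -12 "$entries" "$exits"   # ids present in both lists
  rm -f "$entries" "$exits"
}

# Usage (file names are placeholders for the two servers' logs):
# cross_node_pids ./node1-server.log ./node2-server.log
```

Any id printed by `cross_node_pids` belongs to an instance whose timer fired on the other node, which is the behavior the balancing configuration is meant to produce.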

4.1 Local cluster

4.1.1 Smoke test

To run one or more smoke tests use the following commands from one of the two servers:

```shell
USER_PASSWORD=admin:passw0rd
SERVER_URL=http://localhost:8080/kie-server
CTR_ID=TestTimer1_1.0.0-SNAPSHOT
PROCESS_TEMPL_ID="TestTimer1.TestTimer1"
MIN_SECS=10
RANGE_SECS=50
DELAY=$(( $RANDOM % $RANGE_SECS + $MIN_SECS ))
curl -s -k -u ${USER_PASSWORD} -H 'content-type: application/json' -H 'accept: application/json' -X POST ${SERVER_URL}/services/rest/server/containers/${CTR_ID}/processes/${PROCESS_TEMPL_ID}/instances -d "{\"delay\":\"PT${DELAY}S\"}"
```

Check the log files to verify correctness of execution and balancing.
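The delay in the request above comes from `$RANDOM % RANGE_SECS + MIN_SECS`, so with MIN_SECS=10 and RANGE_SECS=50 it always falls between 10 and 59 seconds, and it is sent as an ISO-8601 duration such as `PT33S`. The sketch below isolates that arithmetic so it can be checked without a server; the function names are hypothetical.

```shell
# Map a non-negative integer to a delay in [min, min + range - 1] seconds.
delay_seconds() {
  rand="$1"; min="$2"; range="$3"
  echo $(( rand % range + min ))
}

# Build the JSON body with the delay expressed as an ISO-8601 duration.
delay_payload() {
  printf '{"delay":"PT%sS"}\n' "$1"
}

# Usage (bash provides RANDOM; other shells fall back to 0 here):
# delay_payload "$(delay_seconds "${RANDOM:-0}" 10 50)"
```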

Once correctness has been verified, proceed to the endurance test.

4.1.2 Endurance tests

The scripts startProcessTimer.sh and startProcessTimerHuman.sh loop (set the values to your liking), generating random values for the wait timers in the processes.

To start multiple virtual users you can use the scripts spawnCommands.sh and spawnCommandsHumans.sh if you have a graphical Linux terminal equipped with gnome-terminal.

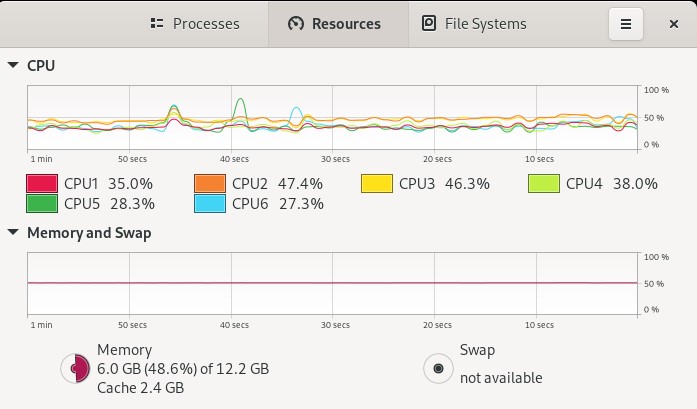

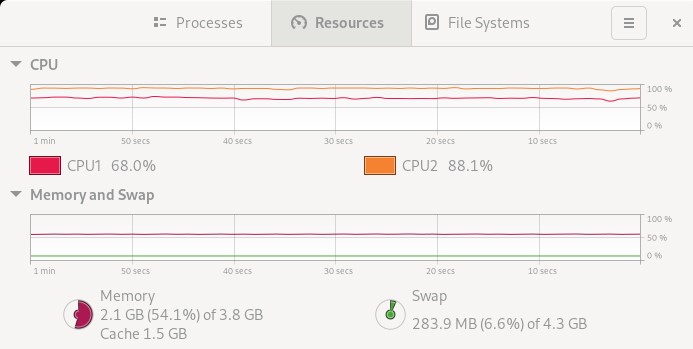

In my tests I used MAX_P=1000 in the startProcess... scripts and MAX_SHELL=10 in the spawnCommands... scripts; I ran them in parallel for a total of 20000 process instances.
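The totals follow directly from these settings and from the instance-to-timer ratios given earlier (1:1 for the first process, 1:2 for the second). A small worked calculation, assuming the load is split evenly between the two script families and that every instance schedules all of its timers; the function names are hypothetical.

```shell
# Instances started = iterations per shell * shells * script families.
total_instances() {
  max_p="$1"; max_shell="$2"; families="$3"
  echo $(( max_p * max_shell * families ))
}

# Timers scheduled over the whole run: one per TestTimer1 instance,
# two per TestTimer1Human instance.
timers_scheduled() {
  timer_instances="$1"; human_instances="$2"
  echo $(( timer_instances * 1 + human_instances * 2 ))
}

# Usage with the values of this test:
# total_instances 1000 10 2        # 20000 process instances
# timers_scheduled 10000 10000     # 30000 timers
```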

Average CPU consumption for a KieServer VM

Average CPU consumption for the PostgreSQL VM

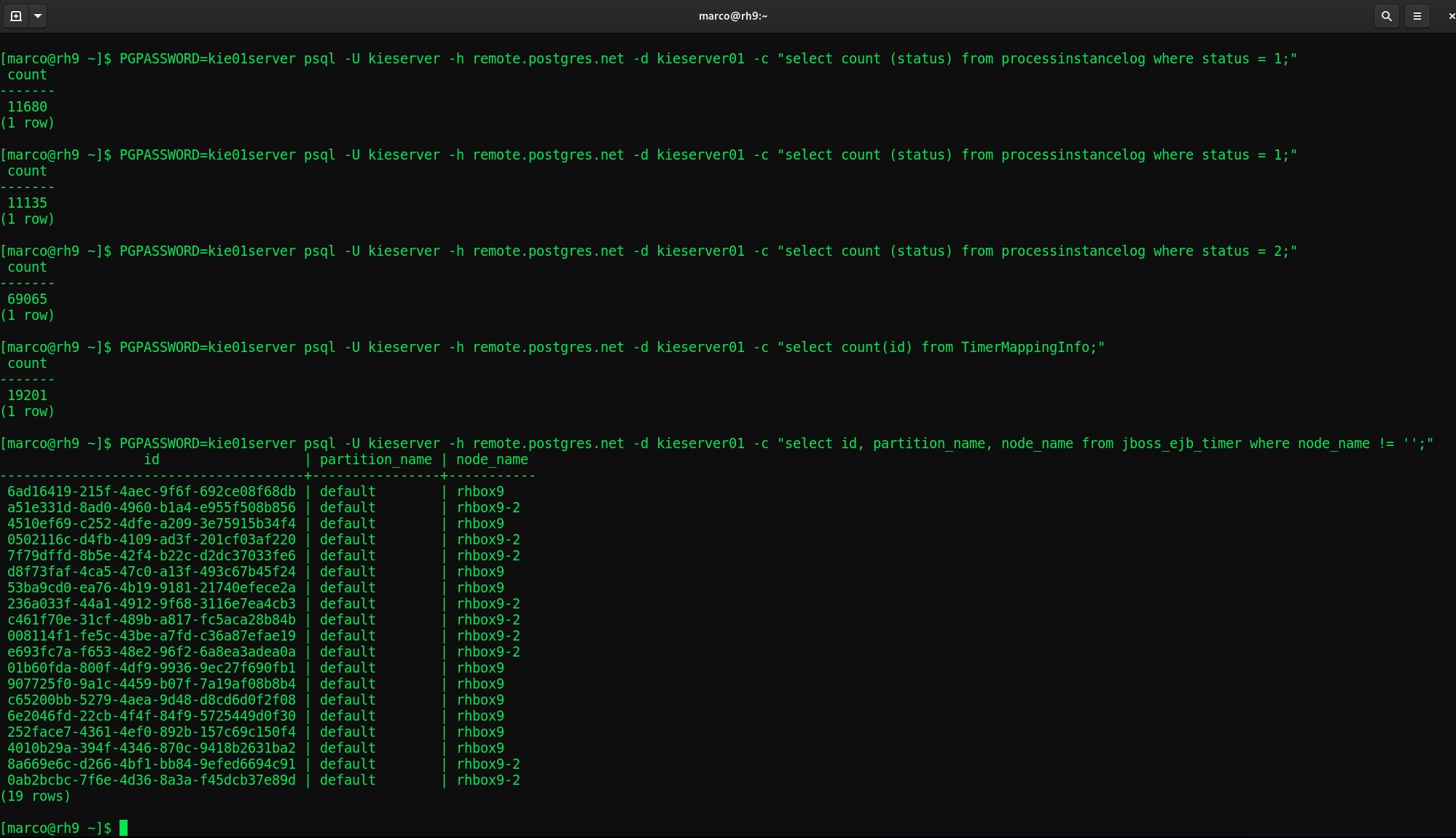

Some queries to the PostgreSQL db during the endurance test; number of process instances in states (1 running, 2 completed) and the number of active timers
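The exact queries are not reproduced in this post. The following is a sketch of queries of this kind, assuming the standard jBPM audit table `processinstancelog` and the default JBoss EAP timer table `jboss_ejb_timer` with its `partition_name` and `timer_state` columns; please check the table and column names against your own schema. The function names are hypothetical.

```shell
# SQL: count process instances per status in the jBPM audit log
# (as noted above: 1 = running, 2 = completed).
status_counts_sql() {
  printf '%s\n' "SELECT status, COUNT(*) FROM processinstancelog GROUP BY status ORDER BY status;"
}

# SQL: count EJB timers per state for one timer-service partition.
# Assumption: timers are persisted in the table jboss_ejb_timer.
timer_counts_sql() {
  partition="$1"
  printf "SELECT timer_state, COUNT(*) FROM jboss_ejb_timer WHERE partition_name = '%s' GROUP BY timer_state;\n" "$partition"
}

# Usage with the connection settings of the local cluster:
# PGPASSWORD=kie01server psql -U kieserver -h remote.postgres.net -d kieserver01 -c "$(status_counts_sql)"
# PGPASSWORD=kie01server psql -U kieserver -h remote.postgres.net -d kieserver01 -c "$(timer_counts_sql ejb_timer_part)"
```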

4.1.3 Results

At the end of the test I found 6 process instances not completed because their XA transactions ended in rollback and were no longer manageable by the server.

I have not investigated the causes of the rollbacks and, not having done any kind of tuning on the servers, I consider the number of errors (only 6 out of 20000 !!!) absolutely acceptable for a solution based entirely on OSS stacks.

4.2 OpenShift cluster

4.2.1 Smoke test

To run the smoke tests and the endurance test, follow the steps described for the local deployment and change the value of the SERVER_URL env var according to your OCP route.

```shell
SERVER_URL=http://$(oc get route my-app-timers-kieserver -o jsonpath='{.spec.host}')
echo ${SERVER_URL}

# open a new tab to follow the log of each KieServer pod
oc get pods -n ${TNS} | grep my-app-timers-kieserver | grep -v postgres | grep Running | awk '{print $1}' | xargs -n1 gnome-terminal --tab -- oc logs -f
```

4.2.2 Endurance tests

I used the same commands as in section 4.1.2 Endurance tests to start the scripts, with the modified URL.

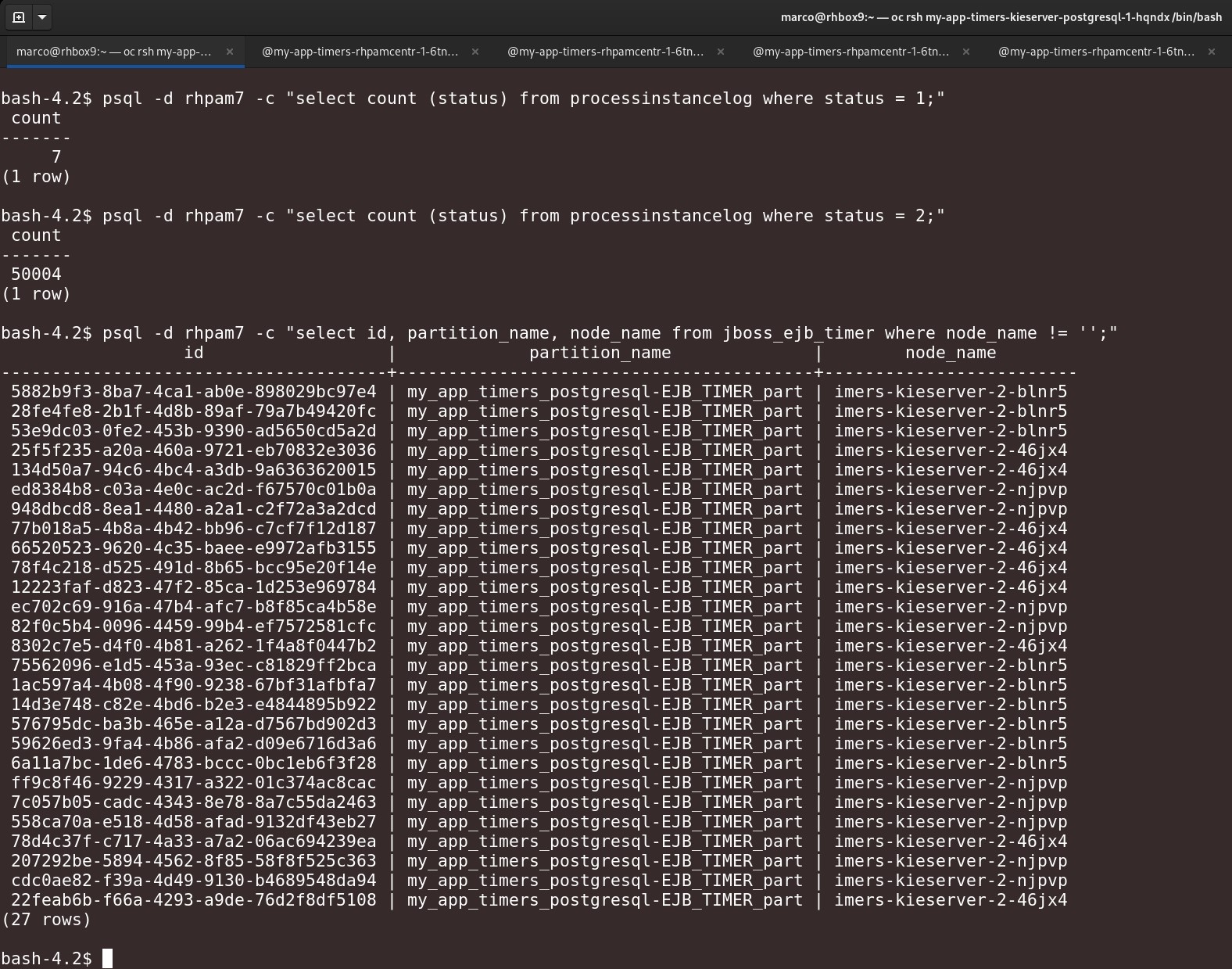

In this test I created many more process instances than in the local cluster test. After it finished, I ran the following database queries, with the following results.

4.2.3 Results

In this case too, very good results were obtained in my opinion: about 50k process instances were started and only 7 ended in error and therefore did not terminate.

In summary, the percentage of errors is infinitesimal and I think it is in line with the real-life average of a production system... hard to believe!
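To put numbers on it, the failure rate can be computed from the counts reported in the two scenarios (6 out of 20000 locally, 7 out of about 50k on OpenShift; the second total is approximate, so its rate is too). A small worked calculation with a hypothetical helper name:

```shell
# Print the failure rate as a percentage with three decimals.
error_rate_percent() {
  awk -v failed="$1" -v total="$2" 'BEGIN { printf "%.3f\n", failed / total * 100 }'
}

# Usage with the figures of this post:
# error_rate_percent 6 20000    # 0.030 (local cluster)
# error_rate_percent 7 50000    # 0.014 (OpenShift, total is approximate)
```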

In this case too I have not investigated the reason for the errors; I will open a support case in the next few days, as well as one for the XA transaction errors in the 'traditional' deployment scenario.

I report below some error traces found in the KieServer pod logs.
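To see how often such traces occur in a saved pod log, a few markers can be counted with grep. This is a sketch: the three markers are taken from the traces in this post, the helper name and the log file name are hypothetical, and other failure modes would need their own markers.

```shell
# Print, for each marker, the number of log lines that contain it.
log_summary() {
  logfile="$1"
  for marker in 'ARJUNA012117' 'OptimisticLockException' 'Transaction was rolled back'; do
    printf '%s: %s\n' "$marker" "$(grep -c -- "$marker" "$logfile")"
  done
}

# Usage (save a pod log first, then summarize it):
# oc logs <kieserver-pod-name> > ./kieserver.log
# log_summary ./kieserver.log
```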

4.2.3.1 Warning and Error traces

```
#-------------------------------------------------------------------
# WARNING
#-------------------------------------------------------------------
06:41:15,159 INFO [stdout] (default task-5) ===> TestTimer1 ENTRY pid[1941] delay[PT33S]
06:41:15,535 INFO [stdout] (default task-5) ===> TestTimer1Human ENTRY pid[1942] delay[PT12S] delayHuman[PT36S]
06:41:15,818 INFO [stdout] (default task-5) ===> TestTimer1Human ENTRY pid[1948] delay[PT31S] delayHuman[PT19S]
06:41:15,896 WARN [com.arjuna.ats.arjuna] (Transaction Reaper) ARJUNA012117: TransactionReaper::check processing TX 0:ffffac1e2a79:620c58f2:63b27991:2965 in state RUN
06:41:15,898 WARN [com.arjuna.ats.arjuna] (Transaction Reaper Worker 0) ARJUNA012095: Abort of action id 0:ffffac1e2a79:620c58f2:63b27991:2965 invoked while multiple threads active within it.
06:41:15,901 WARN [com.arjuna.ats.arjuna] (Transaction Reaper Worker 0) ARJUNA012381: Action id 0:ffffac1e2a79:620c58f2:63b27991:2965 completed with multiple threads - thread EJB default - 2 was in progress with java.base@11.0.17/jdk.internal.misc.Unsafe.park(Native Method)
java.base@11.0.17/java.util.concurrent.locks.LockSupport.park(LockSupport.java:194)
java.base@11.0.17/java.util.concurrent.locks.AbstractQueuedSynchronizer.parkAndCheckInterrupt(AbstractQueuedSynchronizer.java:885)
java.base@11.0.17/java.util.concurrent.locks.AbstractQueuedSynchronizer.acquireQueued(AbstractQueuedSynchronizer.java:917)
java.base@11.0.17/java.util.concurrent.locks.AbstractQueuedSynchronizer.acquire(AbstractQueuedSynchronizer.java:1240)
java.base@11.0.17/java.util.concurrent.locks.ReentrantLock.lock(ReentrantLock.java:267)
deployment.ROOT.war//org.jbpm.runtime.manager.impl.lock.DefaultRuntimeManagerLock.lock(DefaultRuntimeManagerLock.java:30)
deployment.ROOT.war//org.jbpm.runtime.manager.impl.lock.LegacyRuntimeManagerLockStrategy.lock(LegacyRuntimeManagerLockStrategy.java:60)
deployment.ROOT.war//org.jbpm.runtime.manager.impl.AbstractRuntimeManager.createLockOnGetEngine(AbstractRuntimeManager.java:622)
deployment.ROOT.war//org.jbpm.runtime.manager.impl.AbstractRuntimeManager.createLockOnGetEngine(AbstractRuntimeManager.java:606)
deployment.ROOT.war//org.jbpm.runtime.manager.impl.PerProcessInstanceRuntimeManager.getRuntimeEngine(PerProcessInstanceRuntimeManager.java:155)
deployment.ROOT.war//org.jbpm.process.core.timer.impl.GlobalTimerService.getRunner(GlobalTimerService.java:301)
deployment.ROOT.war//org.jbpm.process.core.timer.impl.GlobalTimerService.getRunner(GlobalTimerService.java:281)
deployment.ROOT.war//org.jbpm.persistence.timer.GlobalJpaTimerJobInstance.call(GlobalJpaTimerJobInstance.java:83)
deployment.ROOT.war//org.jbpm.persistence.timer.GlobalJpaTimerJobInstance.call(GlobalJpaTimerJobInstance.java:50)
deployment.ROOT.war//org.jbpm.services.ejb.timer.EJBTimerScheduler.executeTimerJobInstance(EJBTimerScheduler.java:131)
deployment.ROOT.war//org.jbpm.services.ejb.timer.EJBTimerScheduler$$Lambda$2602/0x00000008420e2c40.doWork(Unknown Source)
deployment.ROOT.war//org.jbpm.services.ejb.timer.EJBTimerScheduler.transaction(EJBTimerScheduler.java:228)
deployment.ROOT.war//org.jbpm.services.ejb.timer.EJBTimerScheduler.executeTimerJob(EJBTimerScheduler.java:120)
java.base@11.0.17/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
java.base@11.0.17/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
java.base@11.0.17/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
java.base@11.0.17/java.lang.reflect.Method.invoke(Method.java:566)
org.jboss.as.ee@7.4.6.GA-redhat-00002//org.jboss.as.ee.component.ManagedReferenceMethodInterceptor.processInvocation(ManagedReferenceMethodInterceptor.java:52)
org.jboss.invocation@1.6.3.Final-redhat-00001//org.jboss.invocation.InterceptorContext.proceed(InterceptorContext.java:422)
org.jboss.invocation@1.6.3.Final-redhat-00001//org.jboss.invocation.WeavedInterceptor.processInvocation(WeavedInterceptor.java:50)
org.jboss.as.ee@7.4.6.GA-redhat-00002//org.jboss.as.ee.component.interceptors.UserInterceptorFactory$1.processInvocation(UserInterceptorFactory.java:61)
org.jboss.invocation@1.6.3.Final-redhat-00001//org.jboss.invocation.InterceptorContext.proceed(InterceptorContext.java:422)
.......
org.jboss.invocation@1.6.3.Final-redhat-00001//org.jboss.invocation.InterceptorContext.proceed(InterceptorContext.java:422)
org.jboss.invocation@1.6.3.Final-redhat-00001//org.jboss.invocation.ChainedInterceptor.processInvocation(ChainedInterceptor.java:53)
org.jboss.as.ejb3@7.4.6.GA-redhat-00002//org.jboss.as.ejb3.timerservice.TimedObjectInvokerImpl.callTimeout(TimedObjectInvokerImpl.java:99)
org.jboss.as.ejb3@7.4.6.GA-redhat-00002//org.jboss.as.ejb3.timerservice.TimedObjectInvokerImpl.callTimeout(TimedObjectInvokerImpl.java:109)
org.jboss.as.ejb3@7.4.6.GA-redhat-00002//org.jboss.as.ejb3.timerservice.TimerTask.invokeBeanMethod(TimerTask.java:211)
org.jboss.as.ejb3@7.4.6.GA-redhat-00002//org.jboss.as.ejb3.timerservice.TimerTask.callTimeout(TimerTask.java:207)
org.jboss.as.ejb3@7.4.6.GA-redhat-00002//org.jboss.as.ejb3.timerservice.TimerTask.run(TimerTask.java:181)
org.jboss.as.ejb3@7.4.6.GA-redhat-00002//org.jboss.as.ejb3.timerservice.TimerServiceImpl$Task$1.run(TimerServiceImpl.java:1365)
org.wildfly.extension.request-controller@15.0.15.Final-redhat-00001//org.wildfly.extension.requestcontroller.ControlPointTask.run(ControlPointTask.java:46)
org.jboss.threads@2.4.0.Final-redhat-00001//org.jboss.threads.ContextClassLoaderSavingRunnable.run(ContextClassLoaderSavingRunnable.java:35)
org.jboss.threads@2.4.0.Final-redhat-00001//org.jboss.threads.EnhancedQueueExecutor.safeRun(EnhancedQueueExecutor.java:1990)
org.jboss.threads@2.4.0.Final-redhat-00001//org.jboss.threads.EnhancedQueueExecutor$ThreadBody.doRunTask(EnhancedQueueExecutor.java:1486)
org.jboss.threads@2.4.0.Final-redhat-00001//org.jboss.threads.EnhancedQueueExecutor$ThreadBody.run(EnhancedQueueExecutor.java:1377)
java.base@11.0.17/java.lang.Thread.run(Thread.java:829)
org.jboss.threads@2.4.0.Final-redhat-00001//org.jboss.threads.JBossThread.run(JBossThread.java:513)
06:41:15,901 WARN [com.arjuna.ats.arjuna] (Transaction Reaper Worker 0) ARJUNA012108: CheckedAction::check - atomic action 0:ffffac1e2a79:620c58f2:63b27991:2965 aborting with 1 threads active!
06:41:15,904 INFO [stdout] (default task-6) ===> TestTimer1 ENTRY pid[1949] delay[PT44S]
06:41:15,909 WARN [com.arjuna.ats.arjuna] (Transaction Reaper Worker 0) ARJUNA012121: TransactionReaper::doCancellations worker Thread[Transaction Reaper Worker 0,5,main] successfully canceled TX 0:ffffac1e2a79:620c58f2:63b27991:2965

#-------------------------------------------------------------------
# ERROR
#-------------------------------------------------------------------
09:22:33,268 INFO [stdout] (EJB default - 7) ===> TestTimer1Human EXIT pid[48284] delay[PT5S] delayHuman[PT1S]
09:22:33,350 INFO [stdout] (EJB default - 7) ===> TestTimer1Human Timeout task pid[48269] delay[PT8S] delayHuman[PT8S]
09:22:33,353 ERROR [org.hibernate.internal.ExceptionMapperStandardImpl] (EJB default - 7) HHH000346: Error during managed flush [Row was updated or deleted by another transaction (or unsaved-value mapping was incorrect) : [org.drools.persistence.info.SessionInfo#48270]]
09:22:33,353 WARN [com.arjuna.ats.arjuna] (EJB default - 7) ARJUNA012125: TwoPhaseCoordinator.beforeCompletion - failed for SynchronizationImple< 0:ffffac1e2f1c:365f2e1e:63b2920d:f699b, org.wildfly.transaction.client.AbstractTransaction$AssociatingSynchronization@3a18157d >: javax.persistence.OptimisticLockException: Row was updated or deleted by another transaction (or unsaved-value mapping was incorrect) : [org.drools.persistence.info.SessionInfo#48270]
    at deployment.ROOT.war//org.hibernate.internal.ExceptionConverterImpl.wrapStaleStateException(ExceptionConverterImpl.java:226)
    at deployment.ROOT.war//org.hibernate.internal.ExceptionConverterImpl.convert(ExceptionConverterImpl.java:93)
    at deployment.ROOT.war//org.hibernate.internal.ExceptionConverterImpl.convert(ExceptionConverterImpl.java:181)
    at deployment.ROOT.war//org.hibernate.internal.ExceptionConverterImpl.convert(ExceptionConverterImpl.java:188)
    at deployment.ROOT.war//org.hibernate.internal.SessionImpl.doFlush(SessionImpl.java:1478)
    at deployment.ROOT.war//org.hibernate.internal.SessionImpl.managedFlush(SessionImpl.java:512)
    at deployment.ROOT.war//org.hibernate.internal.SessionImpl.flushBeforeTransactionCompletion(SessionImpl.java:3310)
    at deployment.ROOT.war//org.hibernate.internal.SessionImpl.beforeTransactionCompletion(SessionImpl.java:2506)
    at deployment.ROOT.war//org.hibernate.engine.jdbc.internal.JdbcCoordinatorImpl.beforeTransactionCompletion(JdbcCoordinatorImpl.java:447)
    at deployment.ROOT.war//org.hibernate.resource.transaction.backend.jta.internal.JtaTransactionCoordinatorImpl.beforeCompletion(JtaTransactionCoordinatorImpl.java:352)
    at deployment.ROOT.war//org.hibernate.resource.transaction.backend.jta.internal.synchronization.SynchronizationCallbackCoordinatorNonTrackingImpl.beforeCompletion(SynchronizationCallbackCoordinatorNonTrackingImpl.java:47)
    at deployment.ROOT.war//org.hibernate.resource.transaction.backend.jta.internal.synchronization.RegisteredSynchronization.beforeCompletion(RegisteredSynchronization.java:37)
    at org.wildfly.transaction.client@1.1.15.Final-redhat-00001//org.wildfly.transaction.client.AbstractTransaction.performConsumer(AbstractTransaction.java:236)
    at org.wildfly.transaction.client@1.1.15.Final-redhat-00001//org.wildfly.transaction.client.AbstractTransaction.performConsumer(AbstractTransaction.java:247)
    at org.wildfly.transaction.client@1.1.15.Final-redhat-00001//org.wildfly.transaction.client.AbstractTransaction$AssociatingSynchronization.beforeCompletion(AbstractTransaction.java:292)
    at org.jboss.jts//com.arjuna.ats.internal.jta.resources.arjunacore.SynchronizationImple.beforeCompletion(SynchronizationImple.java:76)
    at org.jboss.jts//com.arjuna.ats.arjuna.coordinator.TwoPhaseCoordinator.beforeCompletion(TwoPhaseCoordinator.java:360)
    at org.jboss.jts//com.arjuna.ats.arjuna.coordinator.TwoPhaseCoordinator.end(TwoPhaseCoordinator.java:91)
    at org.jboss.jts//com.arjuna.ats.arjuna.AtomicAction.commit(AtomicAction.java:162)
    at org.jboss.jts//com.arjuna.ats.internal.jta.transaction.arjunacore.TransactionImple.commitAndDisassociate(TransactionImple.java:1295)
    at org.jboss.jts//com.arjuna.ats.internal.jta.transaction.arjunacore.BaseTransaction.commit(BaseTransaction.java:128)
    at org.jboss.jts.integration//com.arjuna.ats.jbossatx.BaseTransactionManagerDelegate.commit(BaseTransactionManagerDelegate.java:94)
    at org.wildfly.transaction.client@1.1.15.Final-redhat-00001//org.wildfly.transaction.client.LocalTransaction.commitAndDissociate(LocalTransaction.java:78)
    at org.wildfly.transaction.client@1.1.15.Final-redhat-00001//org.wildfly.transaction.client.ContextTransactionManager.commit(ContextTransactionManager.java:71)
    at org.wildfly.transaction.client@1.1.15.Final-redhat-00001//org.wildfly.transaction.client.LocalUserTransaction.commit(LocalUserTransaction.java:53)
    at deployment.ROOT.war//org.jbpm.services.ejb.timer.EJBTimerScheduler.transaction(EJBTimerScheduler.java:229)
    at deployment.ROOT.war//org.jbpm.services.ejb.timer.EJBTimerScheduler.executeTimerJob(EJBTimerScheduler.java:120)
    at jdk.internal.reflect.GeneratedMethodAccessor197.invoke(Unknown Source)
    at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.base/java.lang.reflect.Method.invoke(Method.java:566)
    at org.jboss.as.ee@7.4.6.GA-redhat-00002//org.jboss.as.ee.component.ManagedReferenceMethodInterceptor.processInvocation(ManagedReferenceMethodInterceptor.java:52)
    at org.jboss.invocation@1.6.3.Final-redhat-00001//org.jboss.invocation.InterceptorContext.proceed(InterceptorContext.java:422)
    .......
    at org.jboss.as.ejb3@7.4.6.GA-redhat-00002//org.jboss.as.ejb3.timerservice.TimedObjectInvokerImpl.callTimeout(TimedObjectInvokerImpl.java:99)
    at org.jboss.as.ejb3@7.4.6.GA-redhat-00002//org.jboss.as.ejb3.timerservice.TimedObjectInvokerImpl.callTimeout(TimedObjectInvokerImpl.java:109)
    at org.jboss.as.ejb3@7.4.6.GA-redhat-00002//org.jboss.as.ejb3.timerservice.TimerTask.invokeBeanMethod(TimerTask.java:211)
    at org.jboss.as.ejb3@7.4.6.GA-redhat-00002//org.jboss.as.ejb3.timerservice.TimerTask.callTimeout(TimerTask.java:207)
    at org.jboss.as.ejb3@7.4.6.GA-redhat-00002//org.jboss.as.ejb3.timerservice.TimerTask.run(TimerTask.java:181)
    at org.jboss.as.ejb3@7.4.6.GA-redhat-00002//org.jboss.as.ejb3.timerservice.TimerServiceImpl$Task$1.run(TimerServiceImpl.java:1365)
    at org.wildfly.extension.request-controller@15.0.15.Final-redhat-00001//org.wildfly.extension.requestcontroller.ControlPointTask.run(ControlPointTask.java:46)
    at org.jboss.threads@2.4.0.Final-redhat-00001//org.jboss.threads.ContextClassLoaderSavingRunnable.run(ContextClassLoaderSavingRunnable.java:35)
    at org.jboss.threads@2.4.0.Final-redhat-00001//org.jboss.threads.EnhancedQueueExecutor.safeRun(EnhancedQueueExecutor.java:1990)
    at org.jboss.threads@2.4.0.Final-redhat-00001//org.jboss.threads.EnhancedQueueExecutor$ThreadBody.doRunTask(EnhancedQueueExecutor.java:1486)
    at org.jboss.threads@2.4.0.Final-redhat-00001//org.jboss.threads.EnhancedQueueExecutor$ThreadBody.run(EnhancedQueueExecutor.java:1363)
    at java.base/java.lang.Thread.run(Thread.java:829)
    at org.jboss.threads@2.4.0.Final-redhat-00001//org.jboss.threads.JBossThread.run(JBossThread.java:513)
Caused by: org.hibernate.StaleObjectStateException: Row was updated or deleted by another transaction (or unsaved-value mapping was incorrect) : [org.drools.persistence.info.SessionInfo#48270]
    at deployment.ROOT.war//org.hibernate.persister.entity.AbstractEntityPersister.check(AbstractEntityPersister.java:2540)
    at deployment.ROOT.war//org.hibernate.persister.entity.AbstractEntityPersister.update(AbstractEntityPersister.java:3375)
    at deployment.ROOT.war//org.hibernate.persister.entity.AbstractEntityPersister.updateOrInsert(AbstractEntityPersister.java:3249)
    at deployment.ROOT.war//org.hibernate.persister.entity.AbstractEntityPersister.update(AbstractEntityPersister.java:3650)
    at deployment.ROOT.war//org.hibernate.action.internal.EntityUpdateAction.execute(EntityUpdateAction.java:146)
    at deployment.ROOT.war//org.hibernate.engine.spi.ActionQueue.executeActions(ActionQueue.java:604)
    at deployment.ROOT.war//org.hibernate.engine.spi.ActionQueue.executeActions(ActionQueue.java:478)
    at deployment.ROOT.war//org.hibernate.event.internal.AbstractFlushingEventListener.performExecutions(AbstractFlushingEventListener.java:356)
    at deployment.ROOT.war//org.hibernate.event.internal.DefaultFlushEventListener.onFlush(DefaultFlushEventListener.java:39)
    at deployment.ROOT.war//org.hibernate.internal.SessionImpl.doFlush(SessionImpl.java:1472)
    ... 92 more
09:22:33,354 WARN [org.jbpm.services.ejb.timer.EJBTimerScheduler] (EJB default - 7) Transaction was rolled back for GlobalJpaTimerJobInstance [timerServiceId=TestTimer1_1.0.0-SNAPSHOT-timerServiceId, getJobHandle()=EjbGlobalJobHandle [uuid=48270-48269-Task Timer-2]] with status 6
09:22:33,354 WARN [org.jbpm.services.ejb.timer.EJBTimerScheduler] (EJB default - 7) Execution of time failed. The timer will be retried GlobalJpaTimerJobInstance [timerServiceId=TestTimer1_1.0.0-SNAPSHOT-timerServiceId, getJobHandle()=EjbGlobalJobHandle [uuid=48270-48269-Task Timer-2]]
09:22:33,375 INFO [stdout] (EJB default - 7) ===> TestTimer1Human Timeout timer pid[48270] delay[PT8S] delayHuman[PT2S]
09:22:33,376 INFO [stdout] (EJB default - 7) ===> TestTimer1Human EXIT pid[48270] delay[PT8S] delayHuman[PT2S]
```

5. Conclusions

The 'tech toy' seems to work well beyond my initial expectations.

Setting up and configuring a cluster in a traditional environment is relatively simple, although this type of deployment is poorly suited to business solutions that face variable load and frequently changing business requirements.

Deploying in an OpenShift environment offers considerable cost-effectiveness and flexibility for the same functionality.

IBM Business Automation Manager Open Editions is certainly a good choice for companies that have a limited budget but still want to get the most out of the technology and support offered by IBM.

6. References

IBM Process Automation Manager Open Editions Documentation
https://www.ibm.com/docs/en/ibamoe

IBM Business Automation Manager Open Editions Software Support Lifecycle Addendum
https://www.ibm.com/support/pages/node/6596913

Setup IBM Process Automation Manager Open Edition (PAM/DM) in OpenShift
https://community.ibm.com/community/user/automation/blogs/marco-antonioni/2022/09/28/setup-ibm-process-automation-manager-open-edition

Use OpenShift CLI to setup IBM PAM Open Editions
https://community.ibm.com/community/user/automation/blogs/marco-antonioni/2022/10/09/use-openshift-cli-to-setup-ibm-pam-open-editions

My other posts related to IBM Business Automation Manager Open Editions
https://community.ibm.com/community/user/automation/search?executeSearch=true&SearchTerm=antonioni