RAG (Retrieval Augmented Generation) has been revolutionizing conversational AI. This technique lets chatbots access and leverage external knowledge bases, i.e., your domain or real-time data, tailoring them to your needs. And that’s led to a boom in RAG chatbots!

In this article, we’ll compare two popular options for governing the LLMs (large language models) used in RAG chatbots: IBM’s watsonx.governance and Dataiku, both of which can run on-premises or in the cloud. We’ll explore their strengths and see how they can be applied in real-world scenarios.

Firstly, why use an LLM governance solution for RAG chatbots?

Imagine you’ve built a customer service RAG chatbot powered by an LLM. You want it to answer questions accurately, avoid offensive language, and protect customer privacy. A GenAI governance solution can help on all these fronts.

Here are some key features to consider:

- Performance Metrics: These measure how well your LLM is performing. For instance, a tool might use BLEU scores, which measure word-sequence overlap between a generated response and a reference text, to gauge how close your RAG chatbot’s responses are to human-written ones, such as clear and concise summaries of complex topics.

- Safety and Compliance: These features help you stay on the right side of regulations. The tool might detect Personally Identifiable Information (PII) like social security numbers in your chatbot conversations to ensure it’s handled securely.

- Explainability and Transparency: This lets you understand how your LLM makes decisions. This is crucial in areas like finance, where you might need to explain why your chatbot recommends a particular product.
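To make the performance-metric idea concrete, here is a minimal sketch of a simplified, single-reference BLEU-style score in plain Python. It is an illustration only, not how watsonx.governance or Dataiku compute their metrics: the function names `ngrams` and `simple_bleu` are made up for this example, it only looks at unigrams and bigrams, and it applies no smoothing. For real evaluations you would normally rely on an established library.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_bleu(candidate, reference, max_n=2):
    """Simplified single-reference BLEU (hypothetical helper for illustration).

    Combines clipped n-gram precisions (n = 1..max_n) with a geometric mean
    and multiplies by a brevity penalty. No smoothing is applied.
    """
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, any zero precision gives a zero score
        log_precisions.append(math.log(overlap / total))
    # Penalize candidates that are shorter than the reference.
    bp = 1.0 if len(cand) >= len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)


reference = "your order ships in two days"
candidate = "your order ships in three days"
print(round(simple_bleu(candidate, reference), 3))  # prints 0.707
```

A score of 1.0 means the response matches the reference exactly, and lower scores mean less overlap. Keep in mind that overlap metrics reward wording similarity, not factual correctness, which is one reason governance tools pair them with other checks.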

AI Governance Landscape — IBM and Dataiku

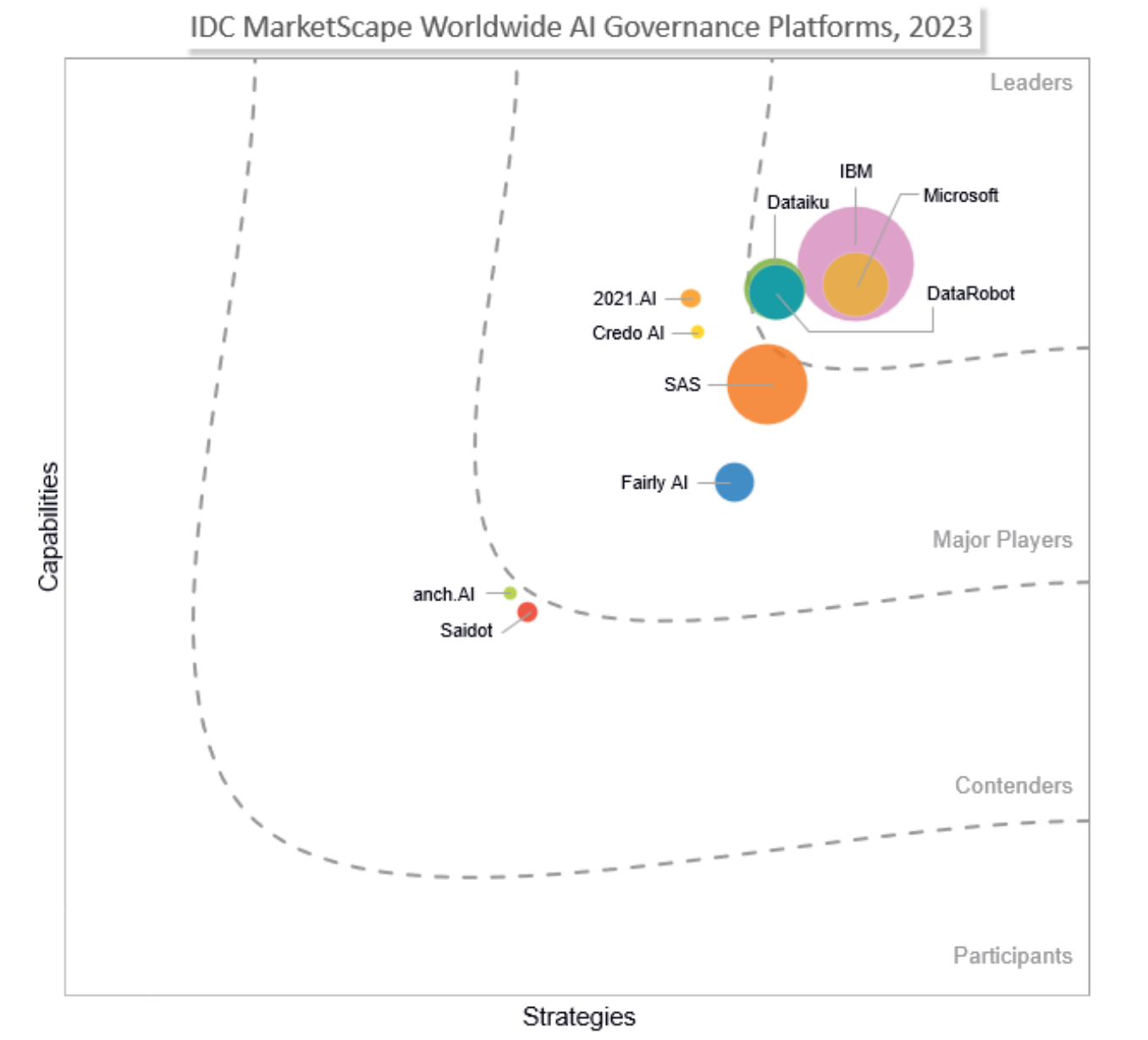

The IDC MarketScape Worldwide AI Governance Platforms for 2023 highlights the leaders in AI governance. According to this report, IBM is positioned as a first-tier leader, while Dataiku is positioned as a second-tier leader, as shown in the accompanying figure.

Source: IDC 2023


watsonx.governance vs. Dataiku: A Closer Look

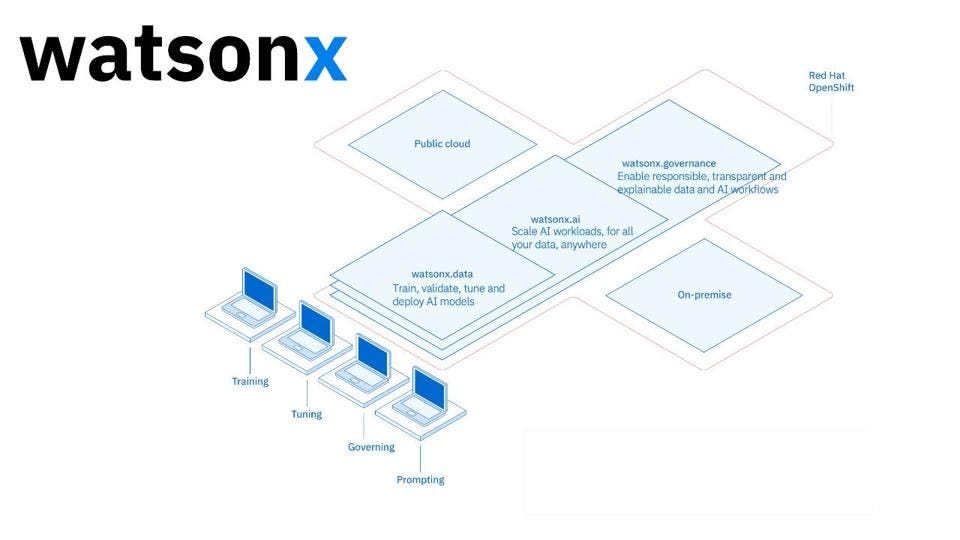

Source: IBM watsonx (on-prem or SaaS)


watsonx.governance helps clients govern AI in three key areas:

- Lifecycle governance: Accelerate model building at scale. Automate and consolidate multiple tools, applications, and platforms while documenting the origin of datasets, models, associated metadata, and pipelines.

- Manage risk and protect reputation: Drive responsible, explainable, high-quality AI models, and automatically document model lineage and metadata. Monitor for fairness, bias, and drift, and set alert tolerances for timely risk mitigation.

- Compliance with regulations, standards, and policies: Support compliance using protections and validation to build and deploy models that are fair, transparent, and compliant. Use factsheets to automatically capture and document model metadata and facts in support of audits.

Here are some watsonx.governance highlights for LLMs:

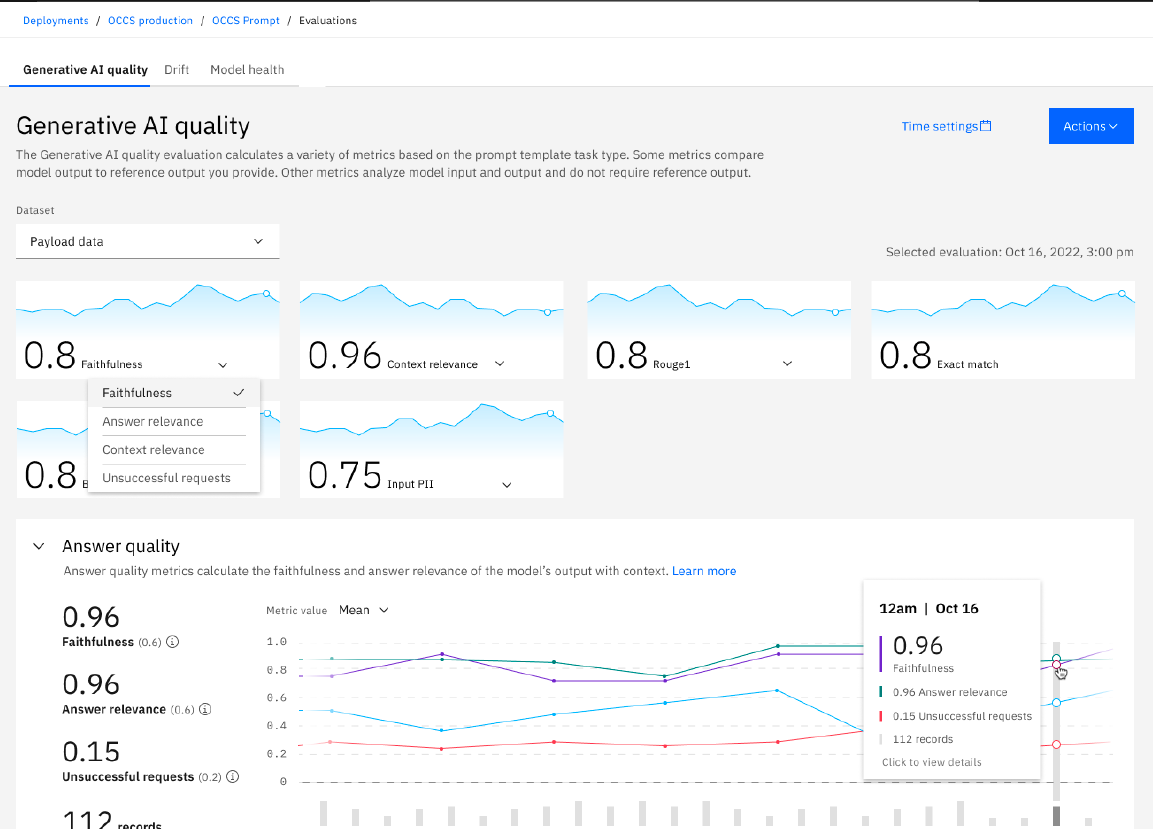

- Evaluate and monitor hallucination and answer quality for RAG: You can evaluate and monitor RAG use cases with out-of-the-box metrics such as faithfulness, answer relevance, unsuccessful requests, keyword inclusion, content analysis, and question robustness, which help you detect and mitigate hallucination. The platform also visualizes trends over time.

In watsonx.governance, you can assess metrics for individual responses, with visualizations that make each response easy to understand and evaluate.


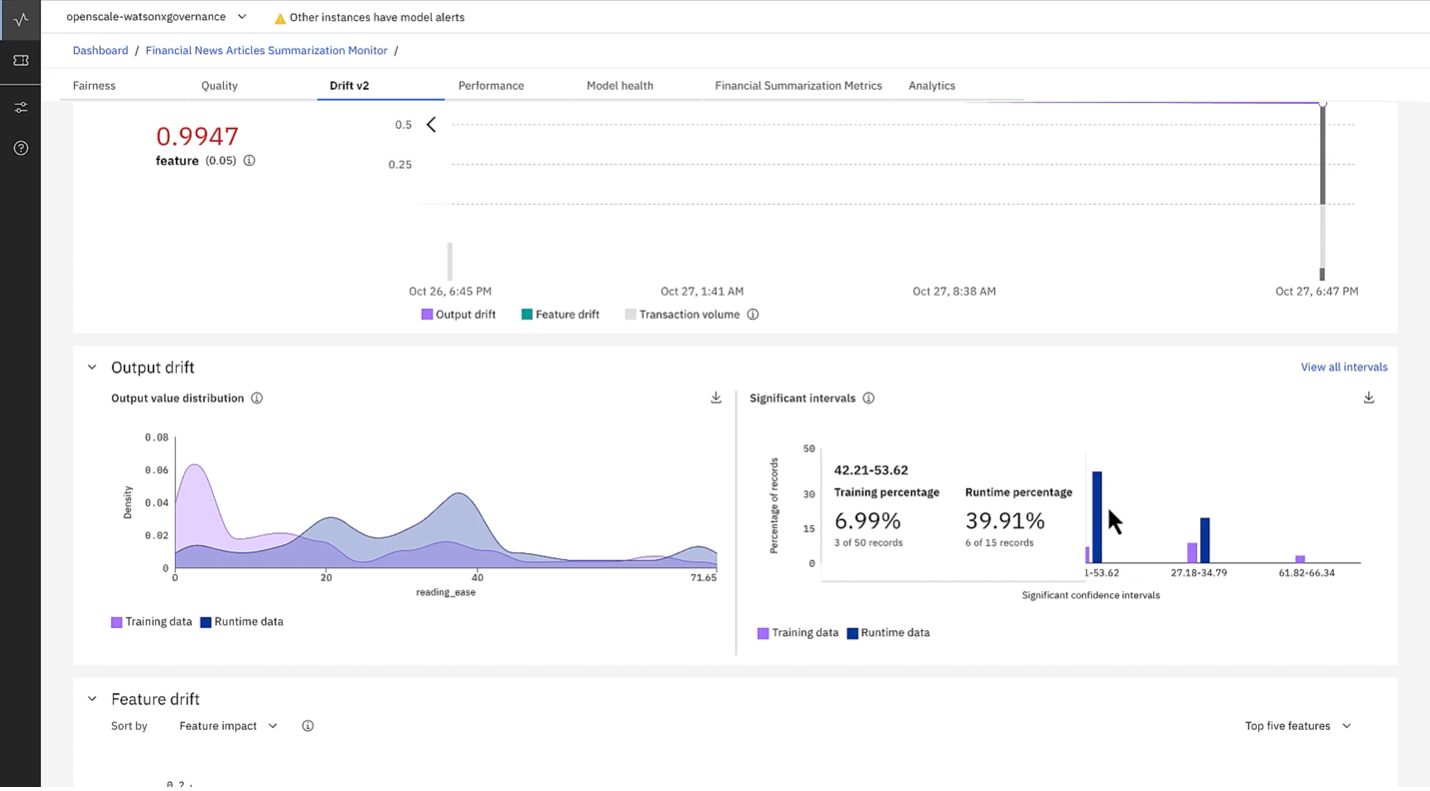

- Monitor drift at runtime: Many users want to monitor model drift, the gradual decrease in model output quality over time. watsonx.governance monitors how far LLM and predictive model outputs drift from ideal ones and alerts users when drift metrics fall below specified thresholds. Users can then take appropriate action, such as retraining models or changing prompts, to mitigate the impact of the drift, as shown in the following figure.

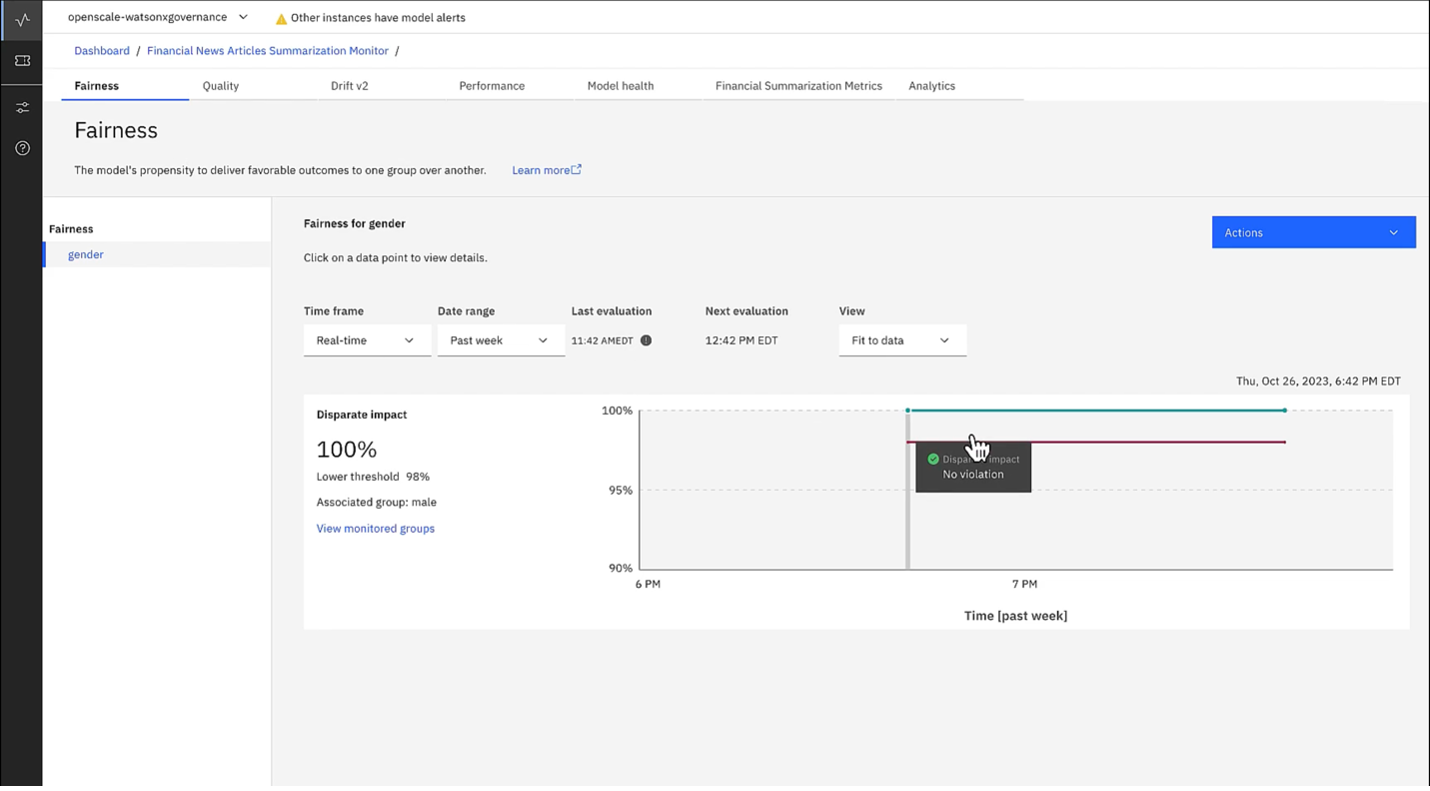
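The alerting logic behind drift monitoring can be sketched in a few lines. This is a simplified illustration under stated assumptions, not watsonx.governance’s actual algorithm: `check_drift`, the `max_drop` tolerance, and the sample scores are all hypothetical, and the sketch just compares the average of recent quality scores against a baseline average.

```python
from statistics import mean


def check_drift(baseline_scores, recent_scores, max_drop=0.10):
    """Flag drift when the mean quality score drops by more than max_drop.

    baseline_scores: quality scores collected when the model was validated
    recent_scores:   quality scores from the latest monitoring window
    max_drop:        hypothetical alert tolerance (absolute drop in the mean)
    """
    baseline = mean(baseline_scores)
    recent = mean(recent_scores)
    drop = baseline - recent
    return {
        "baseline": baseline,
        "recent": recent,
        "drop": drop,
        "alert": drop > max_drop,
    }


# Made-up scores for illustration only.
report = check_drift([0.9, 0.9], [0.7, 0.7])
if report["alert"]:
    print("Drift alert: consider retraining the model or revising prompts")
```

In practice, the tolerance is something you tune per metric and per use case: a customer-facing chatbot may need a much tighter threshold than an internal summarization tool.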

- Fairness tracking: This solution provides detailed reports on feature importance and impact scores. This helps you understand what factors most influence your LLM’s responses, allowing you to fine-tune its behavior for specific tasks.

- Platform-Agnostic Approach: You can integrate watsonx.governance’s tools directly with each AI model’s environment, regardless of vendor. This flexibility ensures consistent governance practices are applied across all your AI models, maintaining control and security, even in a multi-vendor environment.

- Customized insights for all levels: You can create centralized dashboards within the platform or export reports. Tailored for different audiences, from data scientists to C-level leaders, these dashboards ensure everyone has the information they need to govern and enable responsible, explainable, and high-quality LLMs.

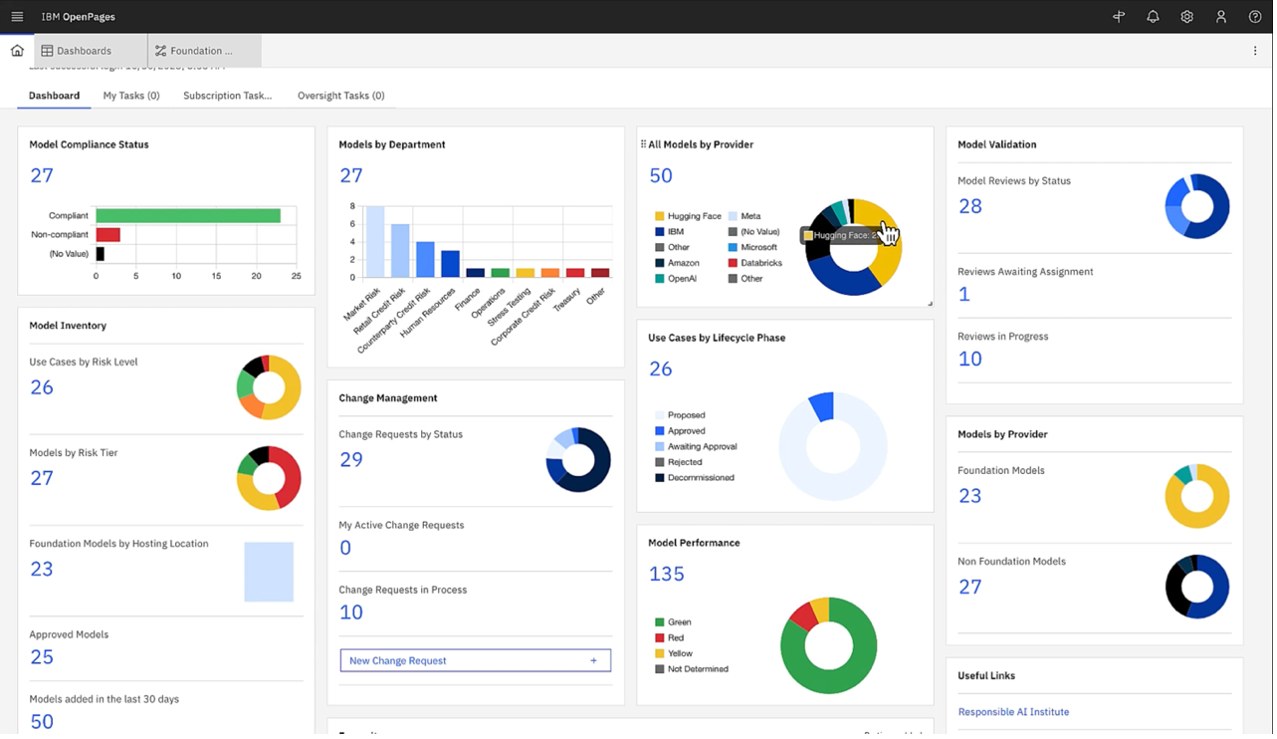

A single, customizable dashboard overview of all kinds of AI/ML model metrics, including risk levels.


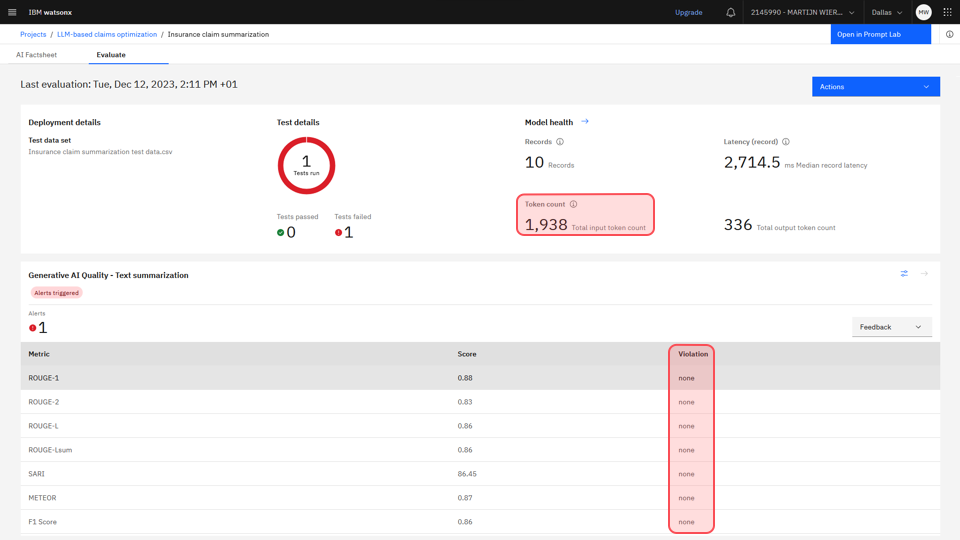

Monitoring dashboard for prompt template deployment: The Model health section shows performance metrics, including a token count. The metrics under the Generative AI Quality header measure the model’s accuracy. The Violation columns show no warnings, meaning all metrics are within acceptable ranges.


- Assess regulation applicability: It offers an out-of-the-box questionnaire, which you can tailor to your needs, for assessing whether the EU AI Act applies to your AI use cases.

Dataiku, on the other hand, empowers IT to take control and help teams build safe and secure GenAI applications with the backbone of LLM Mesh. Here’s what it offers:

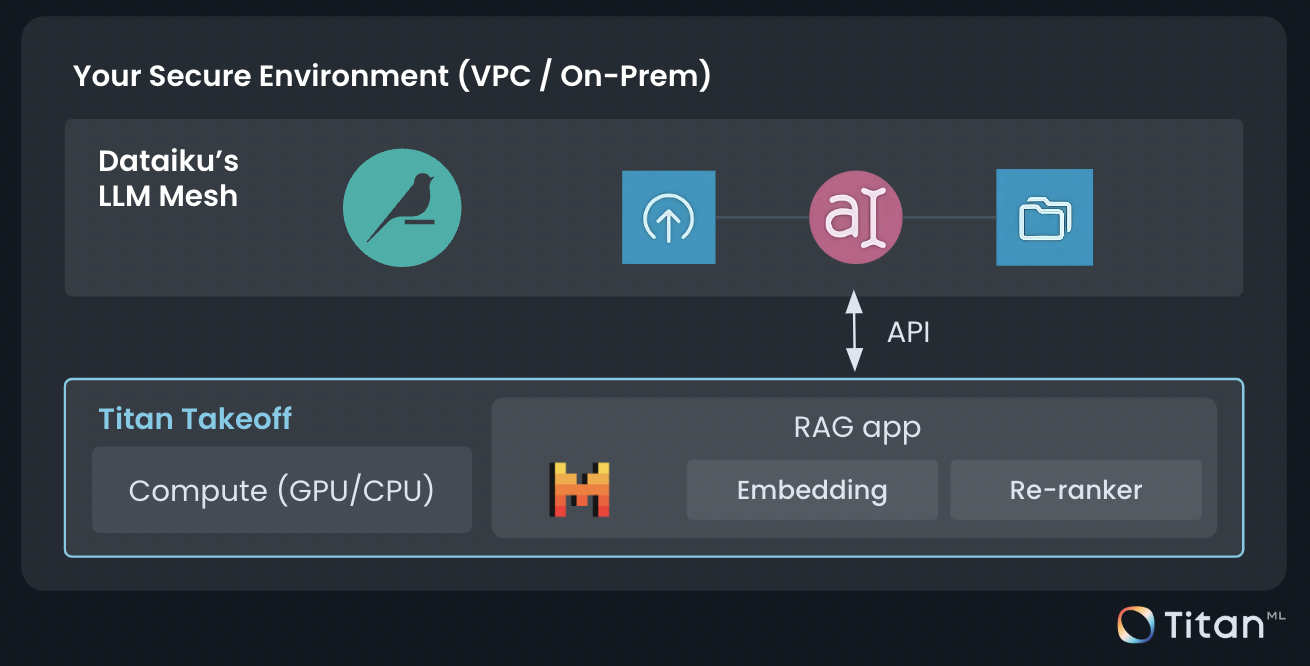

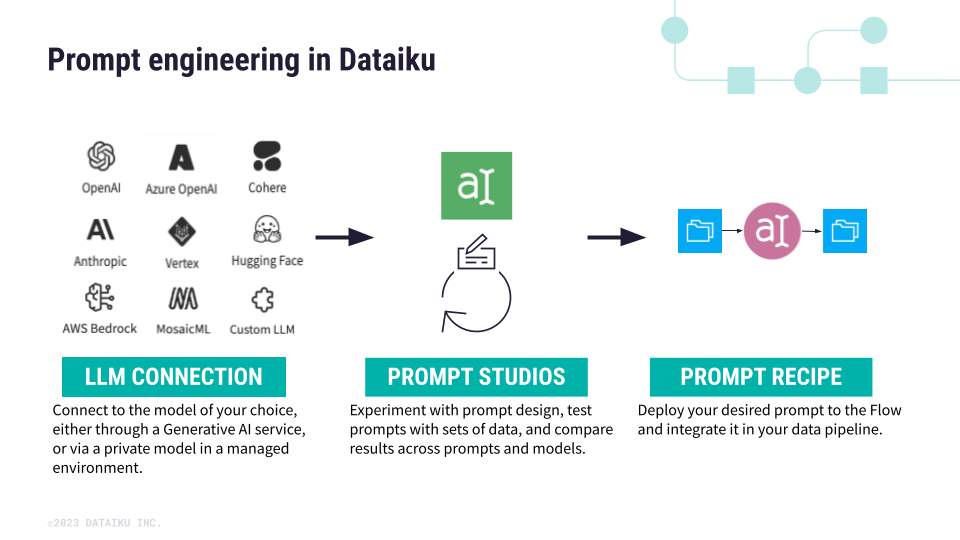

- Directly control your prompts and LLMs: LLM Mesh takes the opposite approach to watsonx.governance. Dataiku wants you to run everything through the Dataiku platform. LLM Mesh is a routing and orchestration layer between the applications and the LLMs, which makes it part of the “FMOps” (Foundation Model Operations) landscape.

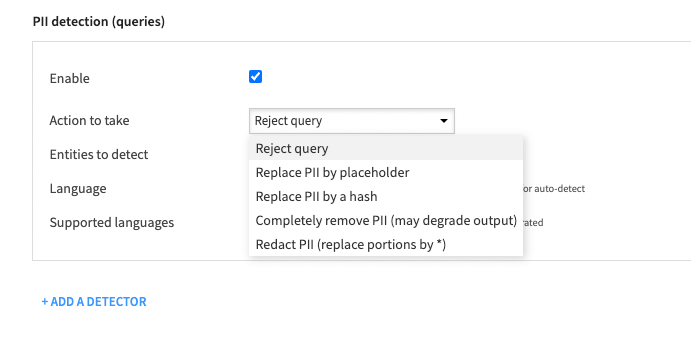
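To picture what a routing and orchestration layer does, here is a minimal sketch of the general pattern. It does not use Dataiku’s API: the `LLMGateway` class, the `demo-echo` connection name, and the stand-in backend are all hypothetical. The point is simply that applications call the gateway rather than a provider, so every request passes through one place where it can be logged and controlled.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class LLMGateway:
    """Hypothetical gateway: applications call route(), never a provider directly."""
    backends: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    audit_log: List[dict] = field(default_factory=list)

    def register(self, name: str, backend: Callable[[str], str]) -> None:
        """Register a named connection to an LLM backend."""
        self.backends[name] = backend

    def route(self, connection: str, prompt: str) -> str:
        """Send a prompt to the named connection and record the call."""
        if connection not in self.backends:
            raise KeyError(f"unknown connection: {connection}")
        response = self.backends[connection](prompt)
        # One central place to record every call for auditing and cost tracking.
        self.audit_log.append({
            "connection": connection,
            "prompt_chars": len(prompt),
            "response_chars": len(response),
        })
        return response


gateway = LLMGateway()
# A stand-in backend; a real one would call an actual LLM client.
gateway.register("demo-echo", lambda prompt: prompt.upper())
answer = gateway.route("demo-echo", "hello")
```

Because all traffic flows through `route`, swapping one model for another means changing a registration rather than touching every application.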

- Detect PII and take action: Leveraging the Presidio analyzer, PII detection can be added at the connection level to filter every query and define an action to take. This can range from preventing the query outright to simply replacing the sensitive data.

- Prompt engineering: Dataiku provides tools to design and manage the prompts you give your LLM, with a cost estimate for 1,000 records. This can be helpful for tasks like crafting different writing styles or adapting the LLM’s tone for various audiences, using A/B testing to compare prompts while staying within budget.

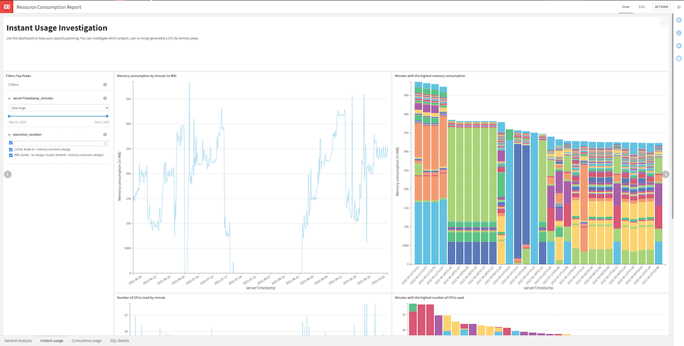

- Cost monitoring: Dataiku can automatically extract LLM cost and Compute Resource Usage (CRU) information from audit logs and present insights in a ready-to-use dashboard.
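The PII options described above (block the query, or replace the sensitive data) boil down to a policy applied before a query reaches the LLM. Here is a minimal sketch of that idea. It uses a plain regular expression as a stand-in rather than the Presidio analyzer or any Dataiku configuration, and the `filter_query` function, the `SSN_PATTERN` name, and the `<SSN>` placeholder are assumptions made for this example. It only recognizes US social security numbers written as 123-45-6789.

```python
import re

# Illustrative pattern for US social security numbers in the form 123-45-6789.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def filter_query(query, action="replace"):
    """Apply a PII policy to a query before it reaches the LLM.

    action="block"   -> reject the query if PII is found (returns None)
    action="replace" -> substitute each match with a placeholder
    """
    if not SSN_PATTERN.search(query):
        return query  # nothing sensitive found, pass the query through
    if action == "block":
        return None
    if action == "replace":
        return SSN_PATTERN.sub("<SSN>", query)
    raise ValueError(f"unsupported action: {action}")


print(filter_query("My SSN is 123-45-6789, can you update my account?"))
```

A production detector covers many more entity types (names, emails, phone numbers, and so on) and handles formatting variations that a single regex will miss, which is why dedicated tools exist for this job.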

Choosing the Right Tool for Your LLM

The best GenAI governance solution depends on your specific needs. Here’s a breakdown to help you decide:

Choose watsonx.governance if you:

- Need a comprehensive solution across ML and LLM — across design time and run time, for all technical and non-technical stakeholders.

- Want an open solution — the ability to govern all AI no matter if it’s built in-house, by third parties, or bought as part of business applications.

- Need out-of-the-box regulatory assessments.

- Require advanced safety and compliance features to ensure data privacy.

Choose Dataiku if you:

- Need centralized control and management of LLMs within a single platform. Dataiku’s LLM Mesh provides this by acting as a routing and orchestration layer between applications and LLMs, making it convenient for organizations looking for a streamlined, integrated approach.

- Have no plan to use other LLM platforms or services in the future.
