Event Processing made easy with IBM Event Streams and webMethods Integration

In this article, I will present the messaging tools introduced in the preview version of Service Designer 11.0, released in December 2023, and show how they can be combined with IBM Event Streams.

Why?

The new tools complement our existing messaging services and reflect the changing landscape that IoT and the growing number of messaging providers bring to integration. The sheer number of event sources and the volume of data make event processing difficult to implement in a way that is both scalable and responsive.

That's why our new messaging tools are designed to work natively with many different platforms. They offer both traditional transactional event processing and a new event streaming model that makes it easier to process large volumes of events and make sense of them.

Message processing versus Event Streaming

Traditional message processing assumed that individual messages represent critical events, such as an Order or Invoice, that must be processed as part of a safe, reliable transaction, and that the clients are enterprise-level applications.

However, with the rise of IoT and event-driven architectures, the volume of events, the types of events and the range of producers have all grown considerably. Individual events may also hold little value on their own; the value often lies only in the overall trends or patterns that they reveal. This new pattern requires a very different messaging platform and a very different processing model to handle the required volumes.

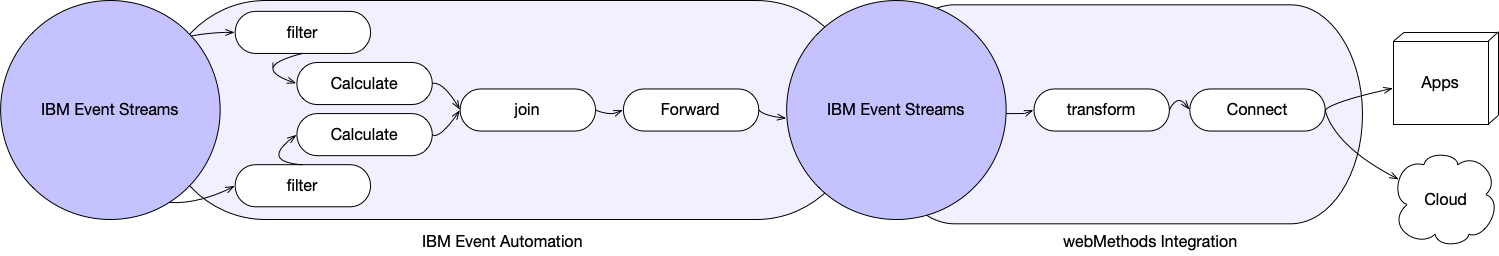

This involves capturing and joining streams of events from different sources, then filtering, aggregating or calculating data from the stream before forwarding or processing the results. This would normally require advanced development skills to avoid performance issues or onerous overhead in network or memory use.
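To make the join, filter and aggregate steps more concrete, here is a minimal in-memory sketch in Python. It is an illustration only, not webMethods code, and the Reading type and function names are hypothetical. It merges two time-ordered streams, keeps only readings above a threshold and then aggregates the maximum temperature per device.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class Reading(NamedTuple):
    """A single temperature event (hypothetical shape, for illustration)."""
    timestamp: float   # seconds since epoch
    device_id: str
    temperature: float


def merge_streams(*streams: Iterable[Reading]) -> Iterator[Reading]:
    """Join step: combine several timestamp-ordered streams into one ordered stream."""
    return heapq.merge(*streams, key=lambda r: r.timestamp)


def above_threshold(stream: Iterable[Reading], threshold: float) -> Iterator[Reading]:
    """Filter step: keep only readings hotter than the threshold."""
    return (r for r in stream if r.temperature > threshold)


def max_per_device(stream: Iterable[Reading]) -> dict[str, float]:
    """Aggregate step: highest temperature seen for each device."""
    result: dict[str, float] = {}
    for r in stream:
        result[r.device_id] = max(result.get(r.device_id, r.temperature), r.temperature)
    return result
```

With site_a = [Reading(1.0, "dev-1", 21.5), Reading(3.0, "dev-1", 30.2)] and site_b = [Reading(2.0, "dev-2", 28.0), Reading(4.0, "dev-2", 19.0)], calling max_per_device(above_threshold(merge_streams(site_a, site_b), 25.0)) returns {'dev-2': 28.0, 'dev-1': 30.2}. A real streaming platform has to do the same work incrementally over unbounded streams, and that is where the performance and memory pitfalls come from.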

Messaging reboot

Previously, webMethods offered either native messaging with webMethods Universal Messaging or JMS messaging, which lets you use any JMS messaging platform of your choice. Neither solution was designed with event streaming in mind, and JMS often lacks access to the features that distinguish modern messaging solutions.

With this in mind, we decided to introduce a whole new messaging framework for our webMethods customers. It provides a platform-agnostic way to send and receive events with the messaging platform of your choice, plus a new processing mode that makes it easier to bring event streams into your app integrations. The new implementation is provided as a webMethods package called WmStreaming via our webMethods Package Registry.

The new webMethods messaging framework stays agnostic about the messaging platform by using a plugin for each provider. The current version is bundled with a plugin for Kafka, and we will be providing plugins for other solutions as well as documenting the plugin SDK so that developers can integrate natively with any messaging platform of their choice.
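The plugin approach is essentially a provider interface with one implementation per platform. The sketch below illustrates that design idea in Python. It is not the WmStreaming plugin SDK, which is still to be documented, and StreamingProvider, InMemoryProvider and publish_all are hypothetical names.

```python
from abc import ABC, abstractmethod
from collections import defaultdict, deque


class StreamingProvider(ABC):
    """Hypothetical plugin contract: one implementation per messaging platform."""

    @abstractmethod
    def send(self, topic: str, key: str, value: str) -> None:
        """Publish one event to a topic."""

    @abstractmethod
    def poll(self, topic: str, max_events: int) -> list[tuple[str, str]]:
        """Return up to max_events (key, value) pairs from a topic."""


class InMemoryProvider(StreamingProvider):
    """Stand-in plugin that keeps events in local queues, e.g. for unit tests."""

    def __init__(self) -> None:
        self._topics = defaultdict(deque)  # topic name -> queue of (key, value)

    def send(self, topic: str, key: str, value: str) -> None:
        self._topics[topic].append((key, value))

    def poll(self, topic: str, max_events: int) -> list[tuple[str, str]]:
        queue = self._topics[topic]
        return [queue.popleft() for _ in range(min(max_events, len(queue)))]


def publish_all(provider: StreamingProvider, topic: str,
                events: list[tuple[str, str]]) -> int:
    """Integration logic written against the contract, not against a platform SDK."""
    for key, value in events:
        provider.send(topic, key, value)
    return len(events)
```

Moving to another platform then means passing in a different provider object. In webMethods terms, that corresponds to swapping the plugin, libraries and connection, while the integration logic in publish_all stays unchanged.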

Why Use IBM Event Streams?

There are many messaging solutions to choose from, and many hyperscalers provide their own messaging services, not all of which will scale or offer the full range of functionality that you need. With IBM Event Streams you get a fully fledged messaging provider that can scale to your business needs and provides full functionality for both transactional and event stream processing.

The other advantage is that it is built on Kafka and so can easily be integrated with webMethods Integration. Using webMethods Integration with Event Streams means that you don't need highly advanced developer skills to process different messaging streams, and with IBM Event Streams you can leverage the power of Kafka without the headache of managing and securing it yourself.

IBM Event Streams is part of a broader portfolio known as IBM Event Automation, which contains an event processing capability based on Apache Flink. This provides powerful UI-based tools that take advantage of Flink's distributed, stateful stream processing, which you can use to process event streams before forwarding the results into webMethods.

Remember that the goal of webMethods is app integration: the new event trigger can work either transactionally or, in cases where you don't have IBM Event Processing, as a stream processor.

We will continue to work on making both products fully fledged citizens within a common architecture, ensuring that discoverability, event management and control can be assured end to end.

Prerequisites for building your own integration

You can of course read this blog without doing any of the tasks and still get a good idea of how webMethods and Event Streams can help solve your event processing requirements. However, if you do want to follow along, you will need the following:

Sign up and connect to IBM Event Streams

Signing up is easy: go to IBM Cloud, register and then sign up for a free tenant for IBM Event Streams.

Download connection properties

After registering, download the Kafka properties file via the Event Streams home page -> Connect to this service.

You will also need to generate an API key, which you do via the "Service credentials" menu. Be aware that you will need to open the secrets manager itself in order to copy the secret.

WxIBMEventStreamsDemo package

I have published an example webMethods package to a git repository (see below) that you can install into your locally installed webMethods Service Designer. Alternatively, you can skip ahead to the "Doing it yourself" section if you just want to create your own integration.

https://github.com/johnpcarter/WxIBMEventStreamsDemo

Download & restart your local development runtime

Clone the package directly into your packages directory and then restart your embedded runtime from Service Designer -> Server menu -> Restart or Start.

$ cd /Applications/WmServiceDesigner/IntegrationServer/packages

$ git clone https://github.com/johnpcarter/WxIBMEventStreamsDemo.git

The path above reflects the default location if you have installed Service Designer on a Mac; on Windows the path is C:\Program Files\...

Configuring the connection

Once your embedded Integration Server has restarted, you can reconfigure the connection with the properties that you downloaded from Event Streams earlier. Open the admin page for your local webMethods runtime at http://localhost:5555 and enter the default credentials 'Administrator/manage'. Navigate to the connection view via Streaming -> Provider Settings, click on the connection 'WxIBMEventStreamsDemo_IBMEventStream_SaaS', which is provided by the demo package, and then click "Edit Connection Alias Details" to see the following page.

Replace the configuration parameters with the contents of the Kafka properties file that you downloaded earlier, then replace the password placeholder <API_KEY> with the api_key value from the API key file. The placeholder appears in the sasl.jaas.config line of the Kafka properties.
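If you would rather prepare the text before pasting it into the admin page, the substitution is a plain string replacement. The following Python sketch assumes the downloaded file uses simple key=value lines and contains the <API_KEY> placeholder described above. The sample content and host names are illustrative placeholders, not real values, and the minimal parser ignores Java properties features such as line continuations.

```python
# Illustrative sample only: host names are placeholders, not real brokers.
SAMPLE_PROPERTIES = """\
bootstrap.servers=broker-0.example.eventstreams.cloud.ibm.com:9093,broker-1.example.eventstreams.cloud.ibm.com:9093
security.protocol=SASL_SSL
sasl.mechanism=PLAIN
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="token" password="<API_KEY>";
"""


def apply_api_key(properties_text: str, api_key: str) -> str:
    """Return the properties text with the <API_KEY> placeholder replaced."""
    if "<API_KEY>" not in properties_text:
        raise ValueError("no <API_KEY> placeholder found")
    return properties_text.replace("<API_KEY>", api_key)


def parse_properties(text: str) -> dict[str, str]:
    """Minimal parser for simple key=value lines; skips blanks and comments."""
    props: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")):
            continue
        # Split on the first '=' only: the sasl.jaas.config value contains '=' too.
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props
```

Parsing the result is a quick way to confirm that the sasl.jaas.config line now ends with your key and that no placeholder is left behind.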

For the provider URI, copy one of the bootstrap servers into the field, prefix it with 'https://' and make sure it ends with the port 9093.
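The provider URI rule can be expressed as a small helper. This is a hypothetical Python sketch. It takes the first entry of bootstrap.servers and strips any port already present, so the result never carries two ports (an assumption on my part). It then adds the https:// prefix and port 9093.

```python
def provider_uri(bootstrap_servers: str, port: int = 9093) -> str:
    """Build the provider URI from the first host in a bootstrap.servers value."""
    first = bootstrap_servers.split(",")[0].strip()
    if not first:
        raise ValueError("bootstrap.servers is empty")
    # Drop any existing ':port' so the result never ends up with two ports.
    host = first.rsplit(":", 1)[0] if ":" in first else first
    return f"https://{host}:{port}"
```

For example, provider_uri("broker-0.example.cloud.ibm.com:9093,broker-1.example.cloud.ibm.com:9093") gives "https://broker-0.example.cloud.ibm.com:9093".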

Lastly, select SASL_SSL from the security profile list before saving your changes. Afterwards, you will notice that the pasted API key has been replaced with [***]. Don't worry, this is completely normal: any detected passwords are moved to our password manager and encrypted on disk. You can still edit the connection and the reference will be maintained, so you won't have to set the password again. You will only need to re-enter passwords if you rename the connection or the package.

You can now go back to the Streaming -> Provider Settings page and test the connection. If the test succeeds, you can enable the connection and congratulate yourself on having successfully connected webMethods to Event Streams.

FYI, you can replace the URL or any IDs and passwords with a global variable using the syntax ${...}. The advantage is that you can then reconfigure them more easily via environment variables, Kubernetes secrets or an external vault without having to rebuild or redeploy.
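webMethods resolves these global variables for you. The following Python sketch only illustrates the mechanics of ${name} substitution and why an unknown name is better treated as an error than silently left in a URL. The resolve function and the variable names used with it are hypothetical.

```python
import re

# Matches ${name}; the name is everything up to the closing brace.
_PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def resolve(value: str, variables: dict[str, str]) -> str:
    """Replace each ${name} with its value; fail loudly on undefined names."""
    def lookup(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"undefined variable: {name}")
        return variables[name]
    return _PLACEHOLDER.sub(lookup, value)
```

For example, resolve("https://${EVENTSTREAMS_HOST}:9093", dict(os.environ)) would fill the placeholder from an environment variable. That is the same idea as backing a global variable with an environment variable or a Kubernetes secret.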

Kafka Libraries

I have bundled the Kafka libraries in the package itself; you can find them at

WmServiceDesigner/IntegrationServer/packages/WxIBMEventStreamsDemo/code/jars/static

The bundled version is 3.1.0.

Try it out

The package comes with its own home page from which you can try out the connectivity, similar to the Java sample app that you can download from the IBM Event Streams home page. Simply open the page http://localhost:5555/WxIBMEventStreamsDemo

The left-hand side represents the producer, which simulates a number of devices emitting temperature measurements that need to be collected and acted on. You can set the minimum and maximum temperatures and the number of devices, and then click on the button to start producing events that will be sent to your Event Streams platform.
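The following Python sketch shows the kind of data the producer side generates. It is an illustration, not the package's sendTemperatures implementation. simulate_readings and the device-N naming are hypothetical, while the field names deviceId, timestamp and temperature match the Temperature document type described later in this article.

```python
import random
from datetime import datetime, timezone
from typing import Optional


def simulate_readings(num_devices: int, min_temp: float, max_temp: float,
                      rng: Optional[random.Random] = None) -> list[dict]:
    """Produce one round of readings: one Temperature-shaped event per device."""
    if num_devices < 1 or min_temp > max_temp:
        raise ValueError("need at least one device and min_temp <= max_temp")
    rng = rng or random.Random()
    now = datetime.now(timezone.utc).isoformat()
    return [
        {
            "deviceId": f"device-{i}",
            "timestamp": now,
            # uniform() stays within [min_temp, max_temp]; round for readability.
            "temperature": round(rng.uniform(min_temp, max_temp), 2),
        }
        for i in range(1, num_devices + 1)
    ]
```

Passing a seeded random.Random makes a run reproducible, which is handy when comparing the consumer's averages against the produced values.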

The right-hand side shows our consumer, which comprises an event trigger that collects the events every 20 seconds and averages them for each device before passing the results on to a service. The service uses the webMethods WebSocket server capability to display the results in your browser without having to resort to polling.
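The event trigger does this batching for you, but the arithmetic behind it is easy to see in isolation. The Python sketch below groups (timestamp, deviceId, temperature) events into fixed, non-overlapping 20-second windows and averages each device's readings per window. It does not show how the trigger actually aligns its 20-second batches (to clock time or to when polling started), so treat the windowing rule as an assumption. window_averages is a hypothetical name.

```python
from collections import defaultdict
from typing import Iterable


def window_averages(events: Iterable[tuple[float, str, float]],
                    window_seconds: float = 20.0) -> dict[int, dict[str, float]]:
    """Average temperatures per device within fixed, non-overlapping windows.

    Each event is (timestamp_seconds, device_id, temperature). The window index
    is timestamp // window_seconds, so 0-19.99 s is window 0, 20-39.99 s window 1.
    """
    totals = defaultdict(lambda: [0.0, 0])  # (window, device) -> [sum, count]
    for ts, device_id, temp in events:
        acc = totals[(int(ts // window_seconds), device_id)]
        acc[0] += temp
        acc[1] += 1
    result = defaultdict(dict)
    for (window, device_id), (total, count) in sorted(totals.items()):
        result[window][device_id] = total / count
    return dict(result)
```

For events at 0 s, 5 s and 12 s followed by one at 21 s, the first three fall into window 0 and the last into window 1, so each device gets one average per window it reported in.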

Digging into the package

If you want to look behind the curtain to see how it works, open Service Designer and expand the package WxIBMEventStreamsDemo in the right-hand package navigator.

The key assets are in the folders

- wx.eventstreams.consumer

- wx.eventstreams.producer

The service 'wx.eventstreams.producer:sendTemperatures' transforms the test data into Temperature events and then sends them via the built-in service 'pub.streaming:send'. Consumption is implemented via the trigger 'wx.eventstreams.consumer:consumeTemperature', which is shown in the screenshot above.

You can also enable the consumer trigger directly via the admin UI or in Service Designer. However, in that case there is no link to your web page and the WebSocket is not available to the processing service, so it will write the results to the server log instead.

If you want to know more, we will drill down into how you can build your own producer and consumer in the sections below.

Doing it yourself

Now we will walk through, step by step, how you can use the new streaming package bundled with Service Designer to integrate natively with Kafka and process large volumes of events with our new event trigger.

Create a webMethods package to manage your producer and consumer

You cannot develop anything in webMethods without first creating a package, as packages are our solution for shipping, sharing and reusing code. We will create a single package for both our producer and consumer. In reality this would probably be a bad idea, as the two may need to be deployed into completely different environments, but we will do it here to keep things simple.

Call your package whatever you want; the screenshots below reference a package named JcKafkaExample. 'Jc' is my initials ;-) In webMethods we generally prefix packages with a common code so that packages from one organisation can easily be distinguished from those of another, which is why all webMethods packages are prefixed with Wm. You also need to create a folder structure inside your package, which defines a unique namespace for its assets. This is important both for legibility and for ensuring uniqueness when installing packages from different sources. Lay out the following structure in your package, replacing the 'jc' root with the prefix that you used for your package.

The folders are

- jc.kafka.example - root folder

- jc.kafka.example.common - Assets common to both the producer and consumer such as the event structure

- jc.kafka.example.producer - This is where we will emit events and send them to Kafka

- jc.kafka.example.consumer - We will create our trigger here to consume the events emitted by the producer
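These folders become the first part of each asset's fully qualified name: the folder path, a colon, then the asset name, as in 'wx.eventstreams.producer:sendTemperatures' or 'pub.streaming:send'. The hypothetical Python helpers below split such names and check that an asset sits under your root folder. They could serve as a simple sanity check in a build script.

```python
def split_ns_name(ns_name: str) -> tuple[str, str]:
    """Split 'folder.subfolder:asset' into (folder path, asset name)."""
    folder, sep, asset = ns_name.partition(":")
    if not sep or not folder or not asset:
        raise ValueError(f"expected 'folder:asset', got {ns_name!r}")
    return folder, asset


def in_namespace(ns_name: str, root: str) -> bool:
    """True if the asset lives in the root folder or one of its subfolders."""
    folder, _ = split_ns_name(ns_name)
    # Compare whole folder segments so 'jc.kafka.examples' is not under 'jc.kafka.example'.
    return folder == root or folder.startswith(root + ".")
```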

Note that in a real use case it would not be recommended to use the word kafka in your folder names, as the whole point of the new framework is to be provider agnostic, i.e. our solution should be easily usable with any event streaming/messaging platform by simply swapping in the correct plugin and libraries and updating the connection.

Creating the Document type

Most events will have some kind of structure, so it makes sense to model that structure with a Document Type in webMethods (document types are cool because they allow you to manipulate structured data independently of external format requirements). The transformation between documents and events is managed implicitly for events formatted as either JSON or XML. Create a document type called Temperature in the common folder (jc.kafka.example.common) with the following three attributes:

- deviceId - String, represents a unique identifier for the source device

- timestamp - Object with java wrapper attribute set to java.util.Date

- temperature - Object with java wrapper attribute set to java.lang.Double
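To illustrate what the document type captures, here is a Python dataclass that mirrors it. This is only an analogy, because webMethods performs the JSON conversion itself. The assumption that the java.util.Date field travels as an ISO 8601 string is mine, not something the runtime is documented here to guarantee.

```python
import json
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Temperature:
    """Mirror of the Temperature document type (field names kept as in webMethods)."""
    deviceId: str
    timestamp: datetime   # java.util.Date in the document type
    temperature: float    # java.lang.Double in the document type

    def to_json(self) -> str:
        # Assumption: the date is serialized as an ISO 8601 string.
        return json.dumps({
            "deviceId": self.deviceId,
            "timestamp": self.timestamp.isoformat(),
            "temperature": self.temperature,
        })

    @classmethod
    def from_json(cls, text: str) -> "Temperature":
        data = json.loads(text)
        return cls(
            deviceId=str(data["deviceId"]),
            timestamp=datetime.fromisoformat(data["timestamp"]),
            temperature=float(data["temperature"]),
        )
```

The point of the analogy is that code works with typed fields, while the wire format (JSON here) is handled in one place.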

Bundling the Kafka libraries

You will need to download the Kafka libraries and add them to your package before you can create your connection. Kafka libraries can be downloaded from the Kafka download site and should then be placed into the package e.g.

WmServiceDesigner/IntegrationServer/packages/JcKafkaExample/code/jars/static

The libraries are in the libs directory of the Kafka download, and you will need to copy the following to the above directory:

- kafka-clients-*.jar

- lz4-java-*.jar (required for compression)

- snappy-java-*.jar (required for compression)

The Kafka client also uses "slf4j-api-*.jar", but there is no need to copy it because it is already included in the common JAR distribution of the webMethods Integration runtime.
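Copying the jars by hand is quick, but the step can also be scripted. Here is a minimal Python sketch, assuming you have unpacked a Kafka distribution locally. copy_kafka_jars is a hypothetical helper, and the example paths are placeholders for your own installation.

```python
import shutil
from pathlib import Path

# Patterns from the list above; slf4j is deliberately not copied.
REQUIRED_JARS = ("kafka-clients-*.jar", "lz4-java-*.jar", "snappy-java-*.jar")


def copy_kafka_jars(kafka_libs: Path, package_static: Path) -> list[Path]:
    """Copy the required client jars from Kafka's libs/ into the package."""
    package_static.mkdir(parents=True, exist_ok=True)
    copied: list[Path] = []
    for pattern in REQUIRED_JARS:
        matches = sorted(kafka_libs.glob(pattern))
        if not matches:
            raise FileNotFoundError(f"no {pattern} in {kafka_libs}")
        for jar in matches:
            # copy2 keeps file timestamps and returns the destination path.
            copied.append(Path(shutil.copy2(jar, package_static)))
    return copied
```

For example, copy_kafka_jars(Path("kafka_2.13-3.1.0/libs"), Path("/Applications/WmServiceDesigner/IntegrationServer/packages/JcKafkaExample/code/jars/static")). The helper fails if a required jar is missing, so an incomplete copy is caught before you restart the runtime.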

You will then need to restart your local runtime; again, you can do this from Service Designer via the menu Server -> Restart.

We now need to establish a connection between our integration runtime and Event Streams. Open the admin UI of the local Integration Server at localhost:5555 and go to Streaming → Provider settings → Create Connection Alias, as shown below:

Configure it as described above for the WxIBMEventStreamsDemo package: copy the Kafka properties into the configuration parameters, update the API key and set the provider URI. Remember to test the connection before enabling it.

Create an event specification for Temperature

The event specification is a means of formally describing the event structure. As already mentioned, events can involve complex JSON or XML structures or, in the case of very simple events, a single typed value. Creating event specifications allows developers to share the details of the event structure and makes collaboration easier. Open the admin UI of the local Integration runtime at localhost:5555 → Streaming → Event specifications → Create event specification, and name it temperature as below:

The key will be a simple string and the value a JSON string, as specified by the Document Type we created earlier. The translation between the document and JSON or XML is done automatically when sending or receiving events. Make sure that the namespace of the document type is correct; the best way is to copy the document type from Service Designer and paste it into the field.
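Conceptually, each event is a key/value pair: a string key and a JSON value shaped like the Temperature document. The Python sketch below makes that concrete. The article does not specify what goes in the key, so using deviceId as the key is an assumption. It is a common Kafka choice because records with the same key land on the same partition, which keeps each device's events in order. to_record and from_record are hypothetical helpers.

```python
import json

# Field names of the Temperature document type created earlier.
TEMPERATURE_FIELDS = {"deviceId", "timestamp", "temperature"}


def to_record(doc: dict) -> tuple[str, str]:
    """Key: plain string (assumed to be the deviceId). Value: JSON of the document."""
    missing = TEMPERATURE_FIELDS - doc.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return str(doc["deviceId"]), json.dumps(doc, sort_keys=True)


def from_record(key: str, value: str) -> dict:
    """Decode the JSON value back into a document and check it matches the key."""
    doc = json.loads(value)
    if doc.get("deviceId") != key:
        raise ValueError("record key does not match deviceId")
    return doc
```

Validating the fields before sending is the same goal the event specification serves: producer and consumer agree on one structure.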