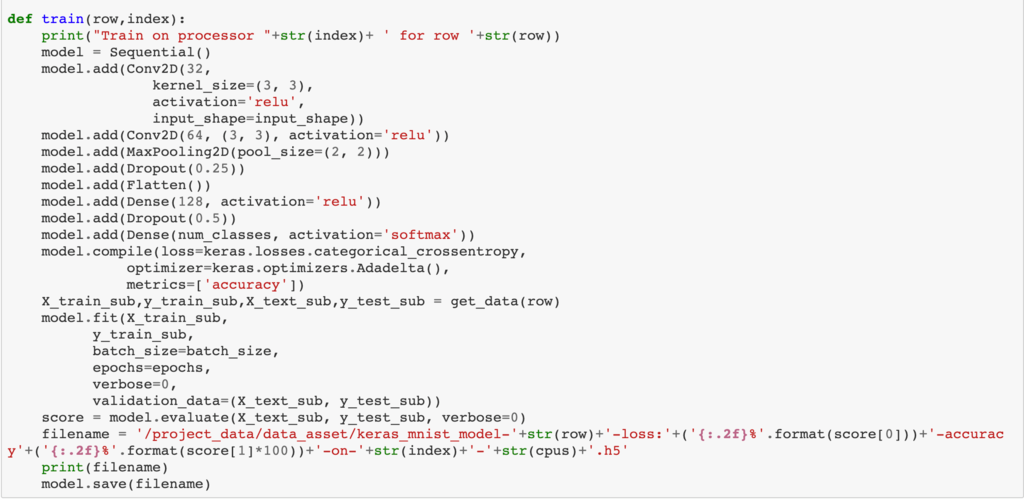

For example, in the following example, we have used Tensorflow to build a CNN neural network model for MNIST . The most important training codes are as follows.

4.3 Integration into the 'worker' training notebook

The worker training template is a template to help simplify training efforts.

The major works done by the template

1. Access to slice metadata

2.Provide slicing data to the model

The training codes gets the required data through the get_data(row) method. The row parameter defines the index of the corresponding slice of the data.

3. Train models in sequence in one process or mult-process (used in the next series: Series 3)

In the above codes, first create a queue and put all the indexes of the data to be trained into the queue. Then is_multiple_processors will be set to False and the path the codes takes will be else path. process(workQueue,0) method will retrieve the slicing index from the queue in order and pass it to the training method train(row,index). The training method reads the corresponding slicing data and trains it.

If is_multiple_processors is set to True in the next series Mult-process training in single notebook, then the path the codes takes will create a pool and start multiple processes to do training in parallel.

The works that users need to customize include:

1. Read the corresponding data from the database or storage according to the index of the provided.

Users can build their own get_data() method based on the model. get_data() method is to read the corresponding slicingdata through the passed index.

2.Provide the training codes

Migrate the training codes to the train() method, and accept the incoming slicing index. Call the get_data() method defined above to read the corresponding sliced data, at the end save the trained model as a file.

4.4 Configure the parameter and start training

The 'worker' framework supports two modes of operation.

- single-process multi-loop

- multi-process multi-loop

In this solution, we use the first approach. The difference between the two ways is achieved by a configuration parameter is_multiple_processors. In the solution, we need to set is_multiple_processors to False.

You can then run the entire Notebook to start sequential training.