Evolution is change over successive generations, a slow process that takes time. Computing, too, has evolved, from distributed computing to today's cloud computing. Along the way, technologies such as virtualization, a concept popularized by VMware, emerged as the next step beyond bare-metal systems. The era of virtual machines has now entered its next phase of evolution in the form of containers and microservices.

Since Docker popularized containerization in 2013, the world has seen a steady shift from monolithic applications to microservices. Microservices became immensely popular after Google open-sourced Kubernetes. As Kubernetes took over the world, everyone felt the pressure to adopt it. Tanzu Kubernetes Grid (TKG) was the next phase of that evolution for virtual machines.

OK, so that covers the application side, but what about the gold mine we still have? You guessed right: I'm talking about data. It still sat on remote storage systems, out of reach of the pods and containers running the application logic.

Kubernetes storage itself went through a metamorphosis, from hostPath volumes to today's Container Storage Interface (CSI). CSI helped bridge the gap between compute and storage.

Storage vendors, too, readily wrote CSI drivers that expose almost all of their storage features.

IBM, a trusted technology leader, also created CSI drivers that let containers consume either block storage or file system storage.

Today we'll look at IBM's block CSI driver for IBM FlashSystem, which lets microservices running under VMware TKG seamlessly consume application data.

About IBM Block CSI driver

The IBM block storage CSI driver lets Kubernetes dynamically provision persistent volumes (PVs) on block storage for use by stateful containers.

The IBM block storage CSI driver is based on an open-source IBM project (CSI driver) and is included as part of IBM storage orchestration for containers. IBM storage orchestration for containers enables enterprises to implement a modern, container-driven hybrid multicloud environment that can reduce IT costs and enhance business agility, while continuing to derive value from existing systems.

By using CSI (Container Storage Interface) drivers for IBM storage systems, Kubernetes persistent volumes (PVs) can be dynamically provisioned on block or file storage for stateful containers, such as database applications (IBM Db2, MongoDB, PostgreSQL, etc.) running in Red Hat OpenShift Container Platform and/or Kubernetes clusters. Storage provisioning can be fully automated with the additional support of cluster orchestration systems that automatically deploy, scale, and manage containerized applications.

About Tanzu

VMware Tanzu is a portfolio of products and solutions that lets customers build, run, and manage Kubernetes-orchestrated, container-based applications.

Tanzu Kubernetes Grid, informally known as TKG, is a multi-cloud Kubernetes footprint that you can run both on-premises in vSphere and in the public cloud.

vSphere Container Storage Plug-in, also called the upstream vSphere CSI driver, is a volume plug-in that runs in a native Kubernetes cluster deployed in vSphere and is responsible for provisioning persistent volumes on vSphere storage.

Installing IBM Block CSI driver for VMware Tanzu

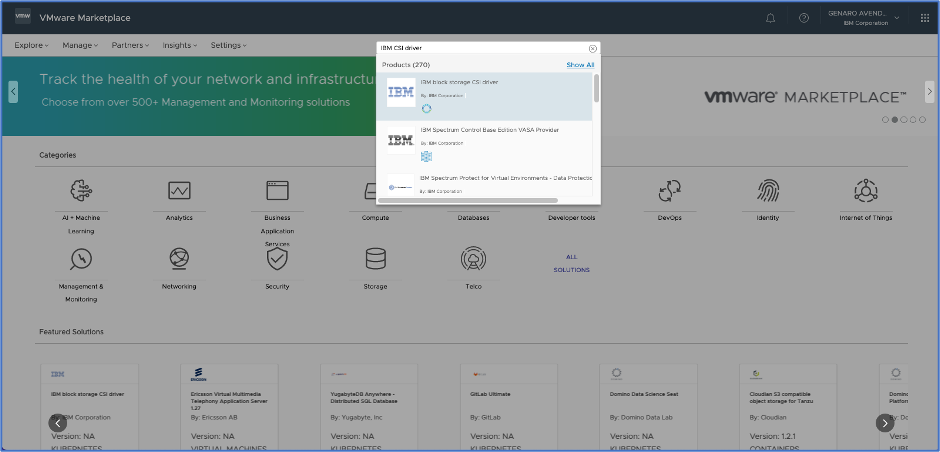

To access the driver installation instructions, log in to VMware Cloud Services, search for IBM Block CSI driver, and select it from the search results (see Figure 1).

Selecting it opens the certification page for the IBM Block CSI driver. At the time of writing, IBM Block CSI driver version 1.9.0 is certified with VMware Tanzu Kubernetes Grid version 1.5.0.

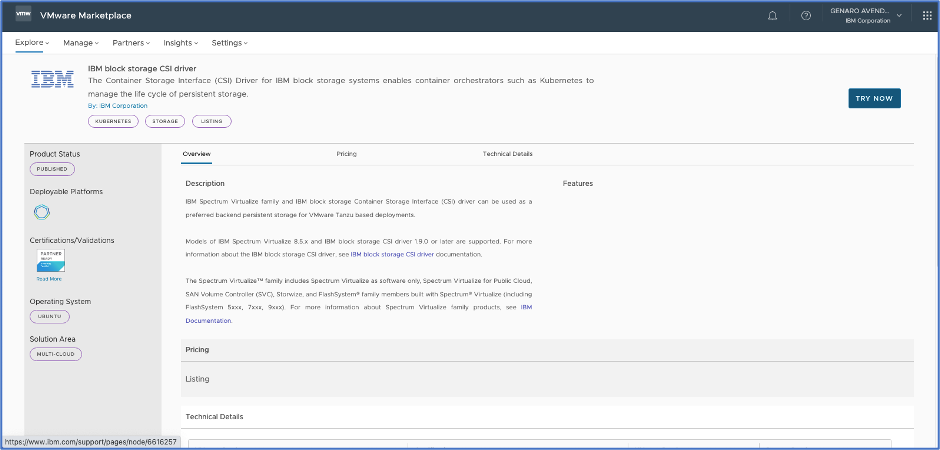

Clicking the TRY NOW button opens the official installation instructions on IBM's support site (see Figure 2).

Figure 2 Click “TRY NOW” to be redirected to the IBM support website

From driver configuration to persistent volume provisioning, it's a simple four-step process, described below.

Step 1. DirectPath I/O setup for worker nodes

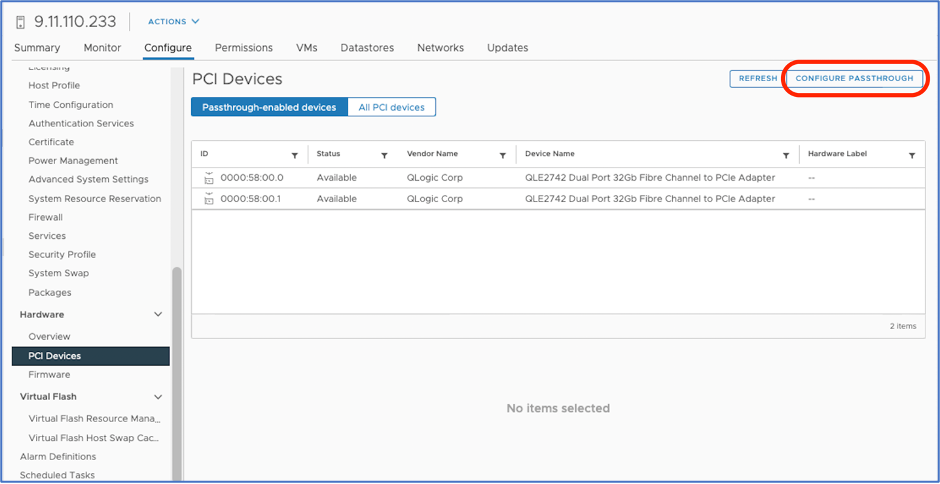

To configure DirectPath I/O, we first enable passthrough for the FC devices in the ESXi host's passthrough configuration (see Figure 3). The host must be rebooted after this change.

Figure 3 Enable Passthrough for a Network Device on a host

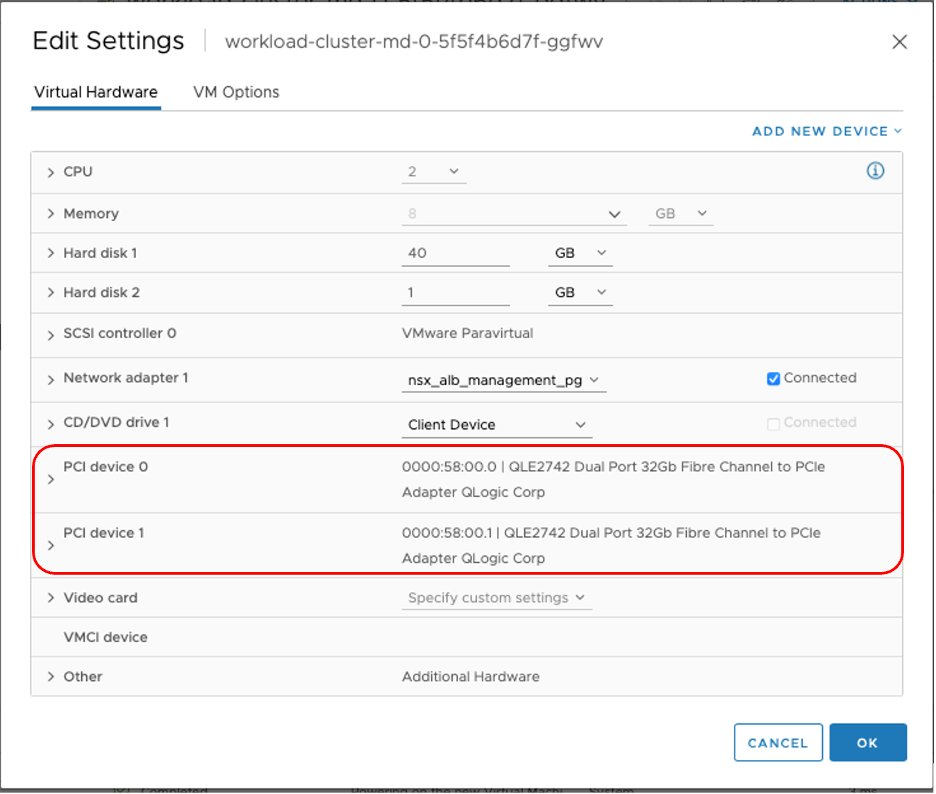

After the reboot, we migrate the workload cluster VM to the ESXi host we just configured and edit the VM to add the FC devices (see Figure 4).

Figure 4 Configure a PCI Device on the Worker node(s)

Step 2. Environment preparation for installing the block storage CSI driver

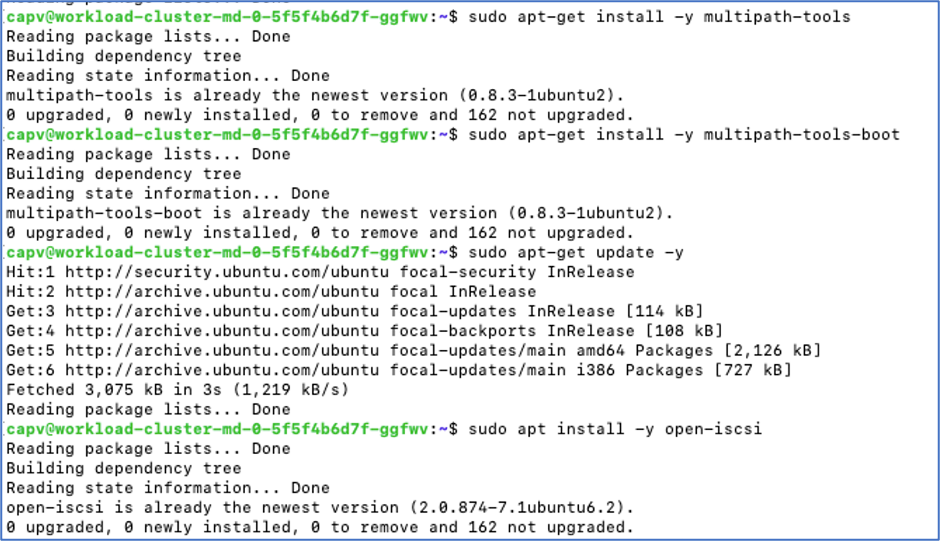

As part of the environment preparation, we install the following packages on the worker nodes:

- multipath-tools

- multipath-tools-boot

- open-iscsi (only when using iSCSI-based connectivity)

Figure 5 shows these packages being installed on Ubuntu Linux.

Figure 5 Installation of packages on Worker nodes
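As a rough illustration, the package choice above can be expressed as a small Python helper that builds the apt-get command for an Ubuntu worker node. This is a sketch rather than part of IBM's instructions: the function names are hypothetical, and the helper only assembles the command without running it, so the argument list can be reviewed before it is executed with sudo.

```python
from __future__ import annotations

import shlex


def required_packages(use_iscsi: bool) -> list[str]:
    """Packages listed above; open-iscsi is needed only for iSCSI connectivity."""
    packages = ["multipath-tools", "multipath-tools-boot"]
    if use_iscsi:
        packages.append("open-iscsi")
    return packages


def apt_install_command(use_iscsi: bool) -> list[str]:
    """Build (but do not run) the apt-get argv for an Ubuntu worker node."""
    return ["sudo", "apt-get", "install", "-y", *required_packages(use_iscsi)]


if __name__ == "__main__":
    # FC connectivity, as used in this walkthrough.
    # prints: sudo apt-get install -y multipath-tools multipath-tools-boot
    print(shlex.join(apt_install_command(use_iscsi=False)))
```

Keeping the command as an argv list means it can later be passed straight to `subprocess.run` without shell quoting issues.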

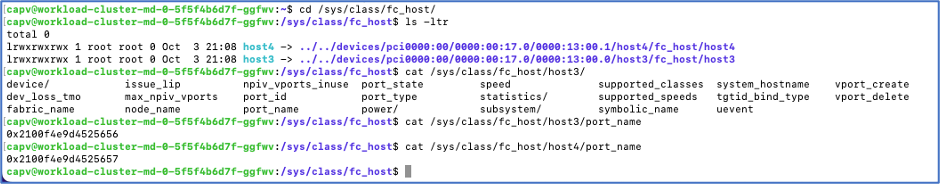

The next step is to identify the FC devices known to the operating system. We assume that FC zoning between the host and the FlashSystem storage is already in place. Figure 6 shows the WWPN of each FC device.

Figure 6 Find the WWPN of the HBA assigned to the Worker node
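On Linux, the FC transport class exposes each HBA port's WWPN in sysfs at /sys/class/fc_host/hostN/port_name, which is one common way to gather these values (not necessarily the method shown in Figure 6). The hypothetical helper below reads those files and normalizes each WWPN to 16 uppercase hex digits. It is a sketch that assumes the passthrough HBAs from Step 1 are visible to the guest OS.

```python
from __future__ import annotations

from pathlib import Path


def read_fc_wwpns(sysfs_root: str = "/sys/class/fc_host") -> dict[str, str]:
    """Map each FC host (e.g. "host1") to its WWPN as 16 uppercase hex digits."""
    wwpns: dict[str, str] = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        return wwpns  # no FC HBAs visible, e.g. passthrough not configured yet
    for host_dir in sorted(root.iterdir()):
        port_name = host_dir / "port_name"
        if port_name.is_file():
            raw = port_name.read_text().strip()  # sysfs format: "0x" + 16 hex digits
            if raw.lower().startswith("0x"):
                raw = raw[2:]
            wwpns[host_dir.name] = raw.upper()
    return wwpns


if __name__ == "__main__":
    for host, wwpn in read_fc_wwpns().items():
        print(host, wwpn)
```

Passing a different `sysfs_root` makes the function easy to exercise against a fake directory tree on a machine without FC hardware.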

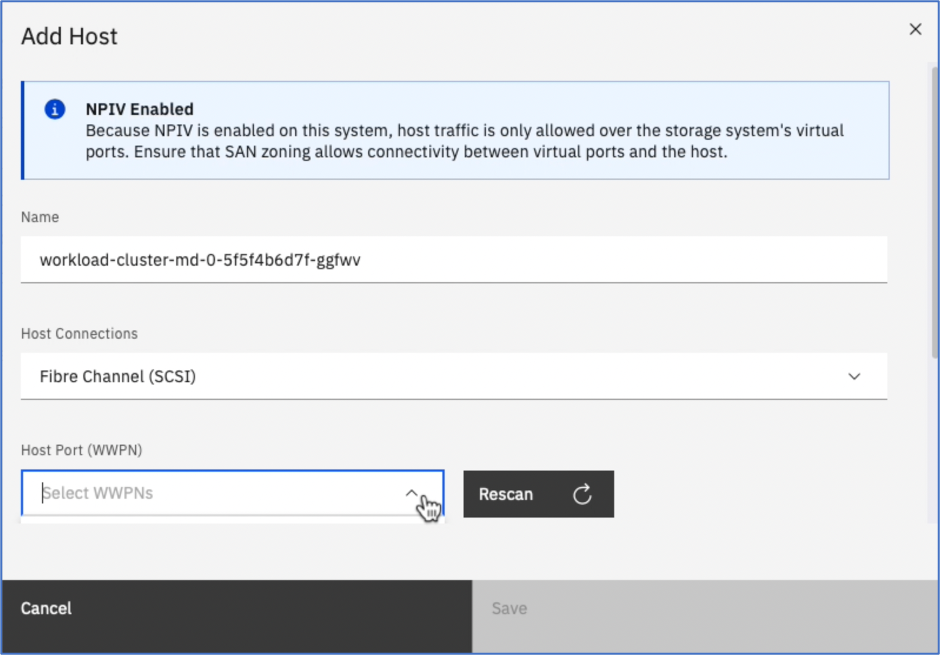

The final step of environment preparation is to define the host on the FlashSystem storage, using the WWPNs identified on the virtual machine (see Figure 7).

Figure 7: Host definition on FlashSystem storage


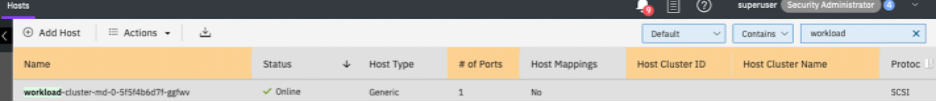

Figure 8 shows the worker VM defined as an FC host.

Figure 8 FC Host on Spectrum Virtualize Storage
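The host definition can also be scripted instead of done in the GUI. The sketch below assumes the Spectrum Virtualize CLI form `mkhost -name <host> -fcwwpn <wwpn1>:<wwpn2>` (colon-separated WWPNs). Check the exact syntax in the CLI reference for your FlashSystem code level before using it. The helper only builds the command string, and the host name and WWPNs in the example are hypothetical.

```python
from __future__ import annotations

import re

_WWPN = re.compile(r"[0-9A-F]{16}")


def normalize_wwpn(raw: str) -> str:
    """Accept "0x10000090fa1b2c3d" or "10:00:00:90:fa:1b:2c:3d"; return 16 uppercase hex digits."""
    value = raw.strip().lower()
    if value.startswith("0x"):
        value = value[2:]
    value = value.replace(":", "").upper()
    if not _WWPN.fullmatch(value):
        raise ValueError(f"not a 16-digit hex WWPN: {raw!r}")
    return value


def mkhost_command(host_name: str, wwpns: list[str]) -> str:
    """Build an assumed Spectrum Virtualize mkhost command for one FC host."""
    if not wwpns:
        raise ValueError("at least one WWPN is required")
    joined = ":".join(normalize_wwpn(w) for w in wwpns)
    return f"mkhost -name {host_name} -fcwwpn {joined}"


if __name__ == "__main__":
    # Hypothetical worker VM with two passthrough HBA ports.
    print(mkhost_command("tkg-worker-1", ["0x10000090fa1b2c3d", "10:00:00:90:fa:1b:2c:3e"]))
```

Normalizing first means WWPNs copied from sysfs (0x-prefixed) or from a switch (colon-separated) end up in the same format.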

Step 3. Install the IBM block storage CSI operator and driver

Now that the environment is prepared, we'll proceed to install the IBM block storage CSI operator and driver.

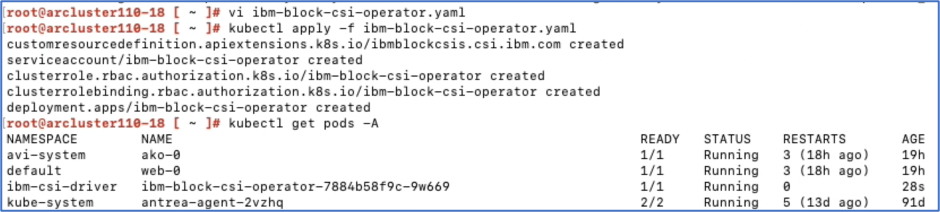

Create a namespace, ibm-csi-driver, to host all the required objects, such as the pods and the FlashSystem array secret. Modify ibm-block-csi-operator.yaml to use the correct namespace (ibm-csi-driver), and apply it to the cluster with kubectl (see Figure 9).

Figure 9 Install IBM CSI operator

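Editing namespaces by hand is error-prone when a manifest contains many objects. Assuming the documents in ibm-block-csi-operator.yaml have already been parsed into Python dicts (for example with a YAML library, not shown here), the sketch below points every namespaced object, and every ServiceAccount subject of a role binding, at ibm-csi-driver. The set of cluster-scoped kinds is an assumption about what the operator manifest contains, and the trimmed sample objects are hypothetical.

```python
from __future__ import annotations

import copy
import json

# Assumed cluster-scoped kinds in the operator manifest; these take no metadata.namespace.
CLUSTER_SCOPED_KINDS = {
    "Namespace", "ClusterRole", "ClusterRoleBinding", "CustomResourceDefinition",
}


def namespace_manifest(name: str) -> dict:
    """A Namespace object, comparable to `kubectl create namespace <name>`."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


def set_namespace(objects: list[dict], namespace: str) -> list[dict]:
    """Return copies of `objects` with namespace references pointed at `namespace`."""
    patched = []
    for original in objects:
        obj = copy.deepcopy(original)  # leave the caller's objects untouched
        if obj.get("kind") not in CLUSTER_SCOPED_KINDS:
            obj.setdefault("metadata", {})["namespace"] = namespace
        # Role bindings name ServiceAccounts together with their namespace.
        for subject in obj.get("subjects", []):
            if subject.get("kind") == "ServiceAccount":
                subject["namespace"] = namespace
        patched.append(obj)
    return patched


if __name__ == "__main__":
    # Hypothetical, heavily trimmed objects standing in for the real manifest.
    manifest = [
        {"apiVersion": "v1", "kind": "ServiceAccount",
         "metadata": {"name": "demo-operator", "namespace": "default"}},
        {"apiVersion": "rbac.authorization.k8s.io/v1", "kind": "ClusterRoleBinding",
         "metadata": {"name": "demo-operator"},
         "roleRef": {"apiGroup": "rbac.authorization.k8s.io",
                     "kind": "ClusterRole", "name": "demo-operator"},
         "subjects": [{"kind": "ServiceAccount", "name": "demo-operator",
                       "namespace": "default"}]},
    ]
    for obj in [namespace_manifest("ibm-csi-driver"), *set_namespace(manifest, "ibm-csi-driver")]:
        print(json.dumps(obj))
```

Forgetting the binding subjects is a common mistake: the operator's ServiceAccount moves to the new namespace, but its ClusterRoleBinding still grants permissions to the old one.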

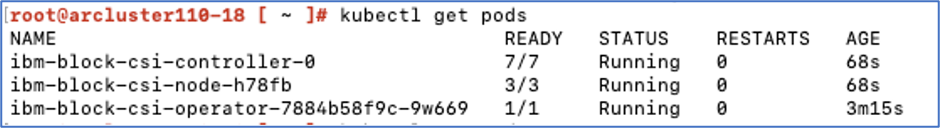

We're now ready to install the CSI driver. Update the csi.ibm.com_v1_ibmblockcsi_cr.yaml file with the namespace ibm-csi-driver and healthPort: 9810, then apply it to the cluster with kubectl. Once the CSI driver has deployed successfully, the following pods are in the Running state.

Figure 10 All the CSI driver pods in running state

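Rather than checking the pod list by eye, the deployment can also be verified from a script. The sketch below runs `kubectl get pods -n ibm-csi-driver -o json` and reports any pod whose `status.phase` is not Running. The function names are hypothetical, and a Running phase does not guarantee that every container is ready; a stricter check would also inspect `status.containerStatuses`.

```python
from __future__ import annotations

import json
import subprocess


def pods_not_running(pod_list: dict) -> list[str]:
    """Names of pods whose status.phase is not "Running".

    `pod_list` is the parsed output of `kubectl get pods -o json`,
    a v1 List whose "items" are Pod objects.
    """
    return [
        pod["metadata"]["name"]
        for pod in pod_list.get("items", [])
        if pod.get("status", {}).get("phase") != "Running"
    ]


def check_csi_pods(namespace: str = "ibm-csi-driver") -> list[str]:
    """Query the cluster; kubectl must be on PATH and pointed at the workload cluster."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return pods_not_running(json.loads(result.stdout))
```

An empty result from `check_csi_pods()` means no pod in the namespace reports a phase other than Running. An empty namespace also returns an empty list, so it may be worth checking the pod count as well.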

Create a secret containing the user credentials and the FlashSystem management address so the driver can access the FlashSystem. Finally, create a StorageClass with block.csi.ibm.com as the provisioner, along with other parameters such as the storage pool, I/O group, space efficiency, and secret. Refer to IBM's official documentation for sample YAML definitions.
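For orientation only, the secret and StorageClass described above can be sketched as Python dicts and serialized to JSON, which kubectl accepts as well as YAML. The provisioner name block.csi.ibm.com comes from the text. The secret keys (management_address, username, password) and the parameter names pool, io_group, and SpaceEfficiency are assumptions modeled on IBM's sample definitions, so verify them against the official documentation. The csi.storage.k8s.io/*-secret-name and *-secret-namespace keys are the standard Kubernetes CSI secret-reference parameters. All values in the example are placeholders.

```python
from __future__ import annotations

import json

PROVISIONER = "block.csi.ibm.com"  # provisioner named in the text above


def array_secret(name: str, namespace: str, management_address: str,
                 username: str, password: str) -> dict:
    """Secret holding FlashSystem access details (key names are assumptions)."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        # stringData lets the API server do the base64 encoding.
        "stringData": {
            "management_address": management_address,
            "username": username,
            "password": password,
        },
    }


def storage_class(name: str, pool: str, io_group: str, space_efficiency: str,
                  secret_name: str, secret_namespace: str) -> dict:
    """StorageClass for the IBM block CSI driver (driver parameter names assumed)."""
    params = {
        "pool": pool,
        "io_group": io_group,
        "SpaceEfficiency": space_efficiency,
    }
    # Standard CSI secret references so each driver operation can reach the array.
    for op in ("provisioner", "controller-publish", "controller-expand"):
        params[f"csi.storage.k8s.io/{op}-secret-name"] = secret_name
        params[f"csi.storage.k8s.io/{op}-secret-namespace"] = secret_namespace
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": PROVISIONER,
        "parameters": params,
    }


if __name__ == "__main__":
    # Placeholder values only.
    secret = array_secret("demo-secret", "ibm-csi-driver",
                          "flashsystem.example.com", "csi-user", "change-me")
    sc = storage_class("demo-storageclass", "demo-pool", "io_grp0", "thin",
                       "demo-secret", "ibm-csi-driver")
    for obj in (secret, sc):
        print(json.dumps(obj, indent=2))
```

Each printed object can be saved to its own file and applied with kubectl apply -f. Keeping credentials out of source control is left to the reader.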

Step 4. Application deployment and persistent volume provisioning

We're now all set to deploy our first application and provision a persistent volume from the FlashSystem. In the YAML definition, set the storage class created for the IBM block CSI driver, specify the volume capacity, and apply the YAML.
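A minimal application like the nginx pod in Figure 11 needs a PersistentVolumeClaim that names the storage class and a capacity, plus a pod that mounts the claim. The sketch below builds both as dicts. The claim, pod, and storage class names and the 1Gi size are hypothetical, and ReadWriteOnce is assumed as the access mode for a block-backed volume used by a single pod.

```python
from __future__ import annotations

import json


def pvc(name: str, storage_class: str, size: str) -> dict:
    """PersistentVolumeClaim that asks the given storage class for `size`."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def nginx_pod(name: str, claim_name: str) -> dict:
    """nginx pod serving its web root from the claimed volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": "nginx",
                "image": "nginx",
                "volumeMounts": [{"name": "data", "mountPath": "/usr/share/nginx/html"}],
            }],
            # The volume name links the mount above to the claim below.
            "volumes": [{"name": "data",
                         "persistentVolumeClaim": {"claimName": claim_name}}],
        },
    }


if __name__ == "__main__":
    for obj in (pvc("demo-pvc", "demo-storageclass", "1Gi"),
                nginx_pod("demo-nginx", "demo-pvc")):
        print(json.dumps(obj, indent=2))
```

Applying the claim first lets the CSI driver provision the FlashSystem volume, and the pod then waits for the claim to be bound before the volume is attached.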

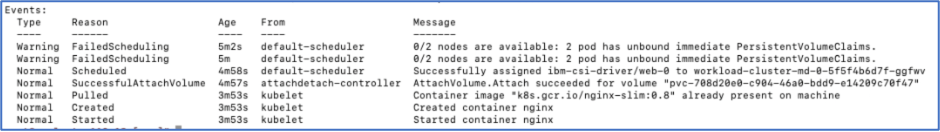

Running kubectl describe on the application pod shows the volume attach events (see Figure 11).

Figure 11: Running nginx pod with persistent volume

To see all these steps in action, watch the video here.
