In two of our previous blogs, we gave an overview of Watson Machine Learning for z/OS (WMLz) and presented a use case of SMF real-time scoring through WMLz. In that use case, we called the scoring service for inference through a REST API described in the WMLz service REST APIs documentation. REST APIs are the most commonly used interface for calling the WMLz scoring service. Besides the REST API, there are two other interfaces, designed specifically for COBOL applications and Java applications. In this blog, we introduce all three WMLz scoring interfaces and walk through some use case examples.

WMLz scoring service REST API

First, let us recall the WMLz scoring REST API from our previous blog on the use case of SMF real-time scoring through WMLz. In that use case, after the model was deployed, we built an application that scored SMF 74 records in real time. For more information on how to fetch SMF records in real time, refer to that previous blog. Once the application receives a real-time SMF record, it sends the record to the WMLz scoring service endpoint URL with the HTTP POST method, and the response contains the scoring result. The scoring process is described below:

1. Get token: Use the HTTP POST method to connect to the WMLz authorization service and get a token for the subsequent scoring request.

The authorization URL used to get the token is formed by appending "/auth/generateToken" to your WMLz web UI address. See the example code below for the full getauthtoken() function definition.

import json

import requests

authurl = 'https://your.mlz.host/auth/generateToken'
authdata = {
    "username": "user",
    "password": "password"
}
authheaders = {'Content-Type': 'application/json'}

# Get the token used for authorization.
# verify=False disables TLS certificate verification; use it only for testing.
def getauthtoken():
    authresponse = requests.post(url=authurl, headers=authheaders,
                                 data=json.dumps(authdata), verify=False)
    authtoken = authresponse.json()['token']
    return authtoken

authtoken = getauthtoken()

The default expiration time for the token is 24 hours. To set a custom expiration time, use the following authdata instead:

authdata={

"username": "user",

"password": "password",

"expiration": time_in_seconds

}
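
Because a token stays valid for some time (24 hours by default), an application that scores many records does not need to request a new token for every call. The following is a minimal sketch of caching the token and refreshing it shortly before it expires. The TokenCache class, the fetch_token callable, and the 60-second safety margin are illustrative assumptions and not part of WMLz; in practice fetch_token could be the getauthtoken() function shown above.

```python
import time


class TokenCache:
    """Caches an auth token and refreshes it shortly before it expires.

    Hypothetical helper: fetch_token is any callable that returns a token
    string, for example the getauthtoken() function from this article.
    """

    def __init__(self, fetch_token, lifetime_seconds=24 * 60 * 60,
                 margin_seconds=60, clock=time.monotonic):
        self._fetch_token = fetch_token
        self._lifetime = lifetime_seconds
        self._margin = margin_seconds
        self._clock = clock
        self._token = None
        self._expires_at = 0.0

    def get(self):
        """Return the cached token, fetching a new one if missing or stale."""
        now = self._clock()
        if self._token is None or now >= self._expires_at - self._margin:
            self._token = self._fetch_token()
            self._expires_at = now + self._lifetime
        return self._token
```

If you request a custom expiration time, pass the same value as lifetime_seconds so that the cache and the service agree on when the token becomes stale.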

2. Get scoring result: Use the HTTP POST method to send the input data to the WMLz scoring service, with the token obtained above included in the request header. The response from the WMLz scoring service is in JSON format, and you can extract the prediction result from it. See the code below for an example.

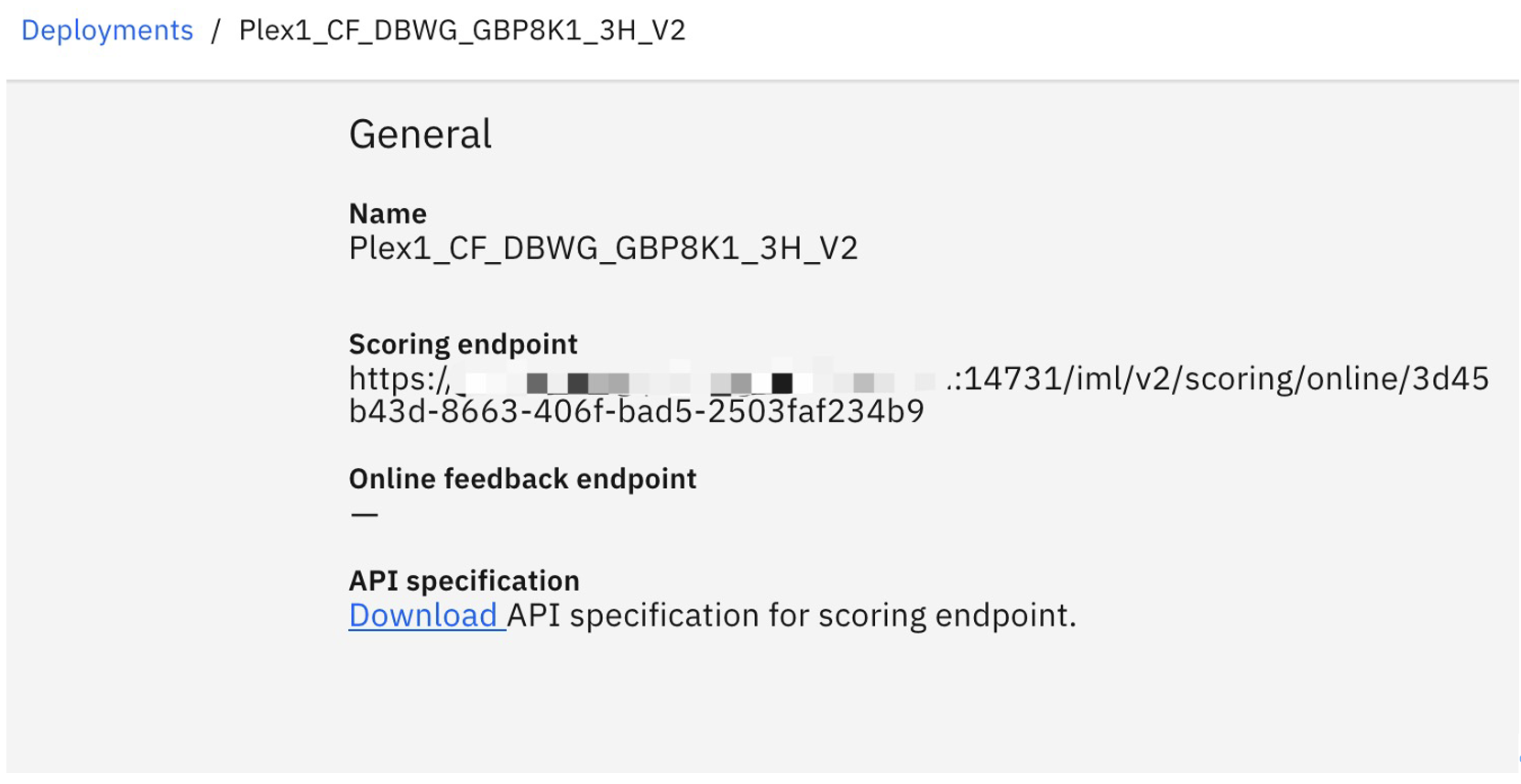

To find the scoring endpoint, go to Model Management --> Deployments --> model_name in the WMLz dashboard and click the model name to open the model overview page, which shows the scoring endpoint. The last segment of the scoring endpoint is the model deployment ID. This ID is also used by the other two interfaces described below.

scoringurl = 'https://your.mlz.host:14731/iml/v2/scoring/online/3d45b43d-8663-406f-bad5-2503faf234b9'
scoringheaders = {
    'Content-Type': 'application/json',
    'authorization': authtoken
}

# inputdata holds the model-specific input record to score
scoringresponse = requests.post(url=scoringurl, headers=scoringheaders,
                                data=json.dumps(inputdata), verify=False)
prediction_result = scoringresponse.json()[0]['prediction']
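
Since the deployment ID is the last segment of the scoring endpoint, it can be extracted programmatically rather than copied by hand. The following is a small sketch using only the Python standard library; the function name deployment_id_from_endpoint is an illustrative assumption.

```python
from urllib.parse import urlparse


def deployment_id_from_endpoint(scoring_url):
    """Return the last path segment of a scoring endpoint URL."""
    path = urlparse(scoring_url).path.rstrip('/')
    deployment_id = path.rsplit('/', 1)[-1]
    if not deployment_id:
        raise ValueError('no deployment ID found in: ' + scoring_url)
    return deployment_id


scoringurl = ('https://your.mlz.host:14731/iml/v2/scoring/online/'
              '3d45b43d-8663-406f-bad5-2503faf234b9')
print(deployment_id_from_endpoint(scoringurl))
# prints 3d45b43d-8663-406f-bad5-2503faf234b9
```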

Use the CICS LINK command in your CICS COBOL application to call WMLz scoring service

The WML for z/OS base scoring service integrated in a CICS region is available as a program called ALNSCORE. You can use the CICS LINK command in your CICS COBOL application to call ALNSCORE for online scoring for SparkML, PMML, and ONNX models. The call uses special CICS containers to transfer the scoring input and output between the COBOL application and the ALNSCORE program. Because the input and output schemas are model-specific, you must carefully prepare each model to ensure appropriate mapping and interpretation of the input and output data structures for deployment. For more details, you can refer to the following link https://www.ibm.com/docs/en/wml-for-zos/2.4.0?topic=base-preparing-models-online-scoring-cics-alnscore.

Here we use two use cases, one with a SparkML model and one with an ONNX model, to show how a COBOL application can call the WMLz scoring service through this interface.

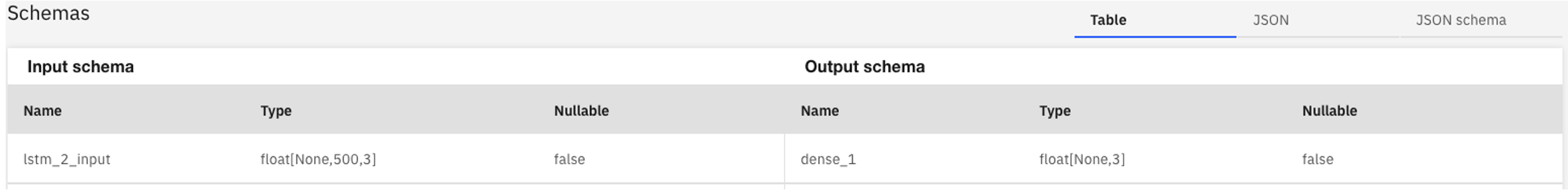

1. MLz_Contention_Model_CICS is an online SparkML model and its schema is as below:

a) Extract the deployment ID, input schema, and output schema of the deployed model. Copy the input and output schemas into two separate JSON files, for example modelInput.json and modelOutput.json, and store them in the z/OS UNIX System Services (USS) file system. Take modelOutput.json as an example:

!:/omeg/MLz $ cat modelOutput.json

{

"$schema": "http://json-schema.org/schema#",

"properties": {

"prediction": {

"additionalProperties": false,

"description": "field prediction, type double",

"pattern": "",

"type": "number"

},

"probability": {

"description": "field probability, type array[double]",

"items": {

"type": "number"

},

"maxItems": 3,

"minItems": 3,

"type": "array"

}

},

"required": [

"prediction",

"probability"

],

"type": "object"

}
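
Before generating copybooks from a schema file, it can help to check which fields the schema declares. The following is a minimal sketch that lists each property with its JSON type, array bounds, and whether it is required. The function name summarize_schema is an illustrative assumption, and the embedded schema is an abbreviated copy of the modelOutput.json example above.

```python
import json


def summarize_schema(schema):
    """Return (name, type, min_items, max_items, required) per property."""
    required = set(schema.get('required', []))
    rows = []
    for name, spec in sorted(schema.get('properties', {}).items()):
        rows.append((name, spec.get('type'), spec.get('minItems'),
                     spec.get('maxItems'), name in required))
    return rows


# Abbreviated copy of the modelOutput.json example.
model_output = json.loads('''
{
  "properties": {
    "prediction": {"type": "number"},
    "probability": {"type": "array", "items": {"type": "number"},
                    "minItems": 3, "maxItems": 3}
  },
  "required": ["prediction", "probability"],
  "type": "object"
}
''')

for row in summarize_schema(model_output):
    print(row)
```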

b) Generate COBOL copybooks for the input and output schemas of the model by using the CICS DFHJS2LS utility. First, locate the sample JCL ALNJS2LS job file in the $IML_INSTALL_DIR/cics-scoring/extra/jcllib directory. Then customize the ALNJS2LS job by following the instructions in the JCL file to generate two COBOL copybooks.

c) Use the new COBOL copybooks to create Java helper classes for the input and output of the model. The ALNSCSER scoring server in the CICS region uses the Java helper classes to interpret the input data structure transmitted from the COBOL application and then passes the scoring result back to the COBOL application. First, copy the copybook to a PDS member, then add the 01 level to the data structure and the required sections to create a COBOL program, as shown in the following example:

IDENTIFICATION DIVISION.

PROGRAM-ID. MODELPGM.

DATA DIVISION.

WORKING-STORAGE SECTION.

01 MODELIN.

06 SMF77DO1-length PIC S9999 COMP-5 SYNC.

06 SMF77DO1 PIC X(255).

06 SMF77DW1-length PIC S9999 COMP-5 SYNC.

06 SMF77DW1 PIC X(255).

06 SMF77DW2-length PIC S9999 COMP-5 SYNC.

06 SMF77DW2 PIC X(255).

06 SMF77QNM-length PIC S9999 COMP-5 SYNC.

06 SMF77QNM PIC X(255).

06 SMF77RNM-length PIC S9999 COMP-5 SYNC.

06 SMF77RNM PIC X(255).

PROCEDURE DIVISION.

STOP RUN.

Then locate the sample JCL ALNJCGEN job file in the $IML_INSTALL_DIR/cics-scoring/extra/jcllib directory, customize the JCL job and submit the job to create the Java helper classes.

d) Use special CICS containers and channels in your COBOL application to call the scoring program ALNSCORE. ALNSCORE uses the following containers to pass the input parameters and data structure from the COBOL application.

Each user COBOL application that calls ALNSCORE must create its own channel with a unique name for passing the input parameters. In our use case, we call ALNSCORE to pass one input record and then get the scoring result back from ALNSCORE. The code used for this is the same as the example in https://www.ibm.com/docs/en/wml-for-zos/2.4.0?topic=base-preparing-models-online-scoring-cics-alnscore.

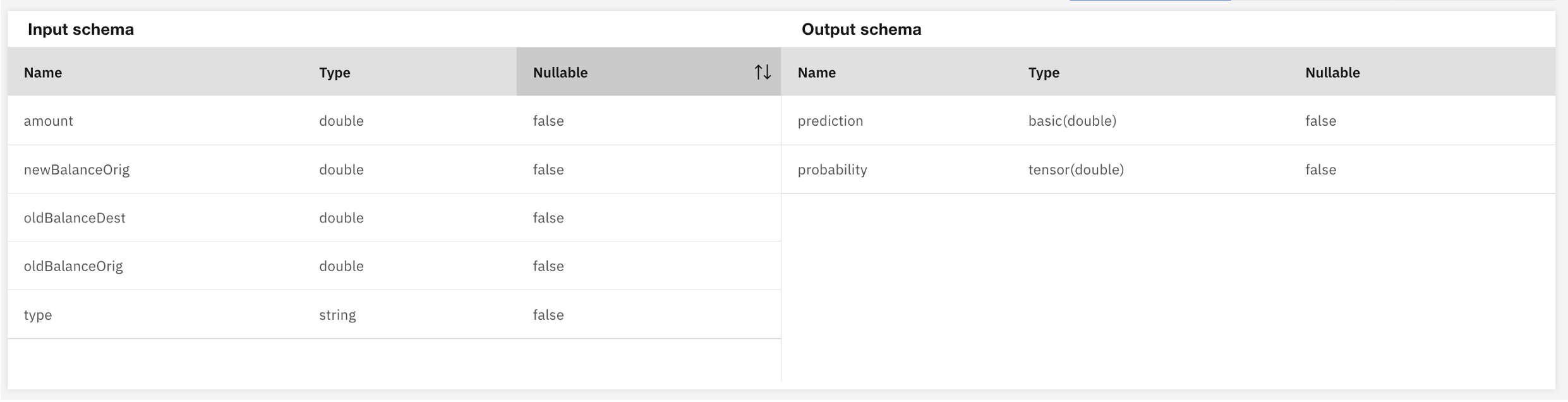

2. Cpu_Usage_prediction model is an online ONNX model and its schema is described below:

a) Extract the deployment ID, input schema, and output schema of the deployed model. If the input and output of the ONNX model are multi-dimensional arrays, flatten each one into a one-dimensional array. If the input and output of the ONNX model are dynamic, generate a Java helper class and copybook for your COBOL application. In our use case, the ONNX model input shape is [None,500,3], so the input can have the shape n*500*3, and the JSON schema generated for the model contains the minItems attribute instead of the maxItems attribute. Take modelInput.json as an example:

!:/omeg/MLz/D3LSTMCPU $ cat moduleInput.json

{

"$schema": "http://json-schema.org/schema#",

"additionalProperties": false,

"description": "Input JSON schema",

"properties": {

"lstm_2_input": {

"description": "field lstm_2_input, type array[number]",

"items": {

"format": "float",

"type": "number"

},

"minItems": 1500,

"type": "array"

}

},

"required": [

"lstm_2_input"

],

"type": "object"

}
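
The minItems value of 1500 in the schema above matches the number of values in one sample of shape [None,500,3], that is 500*3. The following sketch computes that number from a shape and checks that a flattened input holds a whole number of samples. The function names are illustrative assumptions.

```python
def items_per_sample(shape):
    """Multiply the fixed dimensions of a shape, skipping the dynamic one."""
    count = 1
    for dim in shape:
        if dim is not None:
            count *= dim
    return count


def sample_count(flat_input, shape):
    """Return n for a flat input of length n * items_per_sample(shape)."""
    per_sample = items_per_sample(shape)
    n, remainder = divmod(len(flat_input), per_sample)
    if n == 0 or remainder != 0:
        raise ValueError('input length %d is not a positive multiple of %d'
                         % (len(flat_input), per_sample))
    return n


print(items_per_sample([None, 500, 3]))            # prints 1500
print(sample_count([0.6] * 3000, [None, 500, 3]))  # prints 2
```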

b) Generate COBOL copybooks for the input and output schemas of the model by using the CICS DFHJS2LS utility.

The utility generates the following COBOL copybook for the input JSON schema above:

06 lstm-2-input-num PIC S9(9) COMP-5 SYNC.

06 lstm-2-input-cont PIC X(16).

01 lstm-2-input.

03 lstm-2-input COMP-1 SYNC.

CICS DFHJS2LS has a limitation: an array cannot contain more than 65,535 items. Take the following model input schema as an example. Because its minItems/maxItems value exceeds 65,535, the utility ends with the error message: com.ibm.cics.wsdl.CICSWSDLException: DFHPI9040E Array "data" occurs "150,528" times. The largest supported value is "65,535".

{

"$schema": "http://json-schema.org/schema#",

"additionalProperties": false,

"description": "Input JSON schema",

"properties": {

"data": {

"description": "field data, type array[number]",

"items": {

"format": "float",

"type": "number"

},

"maxItems": 150528,

"minItems": 150528,

"type": "array"

}

},

"required": [

"data"

],

"type": "object"

}
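
To catch this limit before running the utility, you could scan the schema for oversized arrays first. The following is a minimal sketch, assuming the schema is already loaded as a Python dictionary; it inspects only top-level array properties, and the function name oversized_arrays is an illustrative assumption.

```python
MAX_ARRAY_ITEMS = 65535  # largest value quoted in the DFHPI9040E message


def oversized_arrays(schema, limit=MAX_ARRAY_ITEMS):
    """Return (field, size) pairs for array properties that exceed limit."""
    found = []
    for name, spec in schema.get('properties', {}).items():
        if spec.get('type') != 'array':
            continue
        size = max(spec.get('minItems', 0), spec.get('maxItems', 0))
        if size > limit:
            found.append((name, size))
    return found


too_large = {'properties': {'data': {'type': 'array',
                                     'minItems': 150528,
                                     'maxItems': 150528}}}
print(oversized_arrays(too_large))  # prints [('data', 150528)]
```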

c) Use the new COBOL copybooks to create Java helper classes for the input and output of the model.

d) Use special CICS containers and channels in your COBOL application to call the scoring program ALNSCORE. ALNSCORE uses the following containers to pass the input parameters and data structure from the COBOL application. See the COBOL program below:

IDENTIFICATION DIVISION.

PROGRAM-ID. LSTMCOB.

DATA DIVISION.

WORKING-STORAGE SECTION.

01 lstm-2-input.

06 lstm-2-input-num PIC S9(9) COMP-5 SYNC.

06 lstm-2-input-cont PIC X(16).

01 lstm-2-input-record.

03 lstm-2-input-array OCCURS 1500 COMP-1 SYNC.

01 MODELOUT.

06 dense-1.

09 Xdata-num PIC S9(9) COMP-5 SYNC.

09 Xdata-cont PIC X(16).

09 dims OCCURS 2 PIC S9(18) COMP-5 SYNC.

01 D3MODO01-Xdata.

03 Xdata OCCURS 3 COMP-1 SYNC.

01 I PIC 9(4) VALUE 1.

01 J PIC 9(8) VALUE 1.

PROCEDURE DIVISION.

MOVE 1500 TO lstm-2-input-num.

MOVE 'INPUT-CONT' TO lstm-2-input-cont.

** Fill lstm-2-input-array with the 1500 input elements for scoring

** e.g.

** PERFORM UNTIL J=1501

** MOVE 0.6 TO lstm-2-input-array(J)

** ADD 1 TO J

** END-PERFORM.

** Set the deployment ID

EXEC CICS PUT CONTAINER('ALN_DEPLOY_ID') CHANNEL('CHAN') CHAR

FROM('df147073-edd2-4640-972c-b1a719f8ce46')

END-EXEC.

** Set the input helper class for lstm-2-input

EXEC CICS PUT CONTAINER('ALN_INPUT_CLASS') CHANNEL('CHAN')

CHAR FROM(D3MODInWrapper)

END-EXEC.

** Set the input data for lstm-2-input

EXEC CICS PUT CONTAINER('ALN_INPUT_DATA') CHANNEL('CHAN')

FROM(lstm-2-input) BIT

END-EXEC.

** Set the input record for scoring

EXEC CICS PUT CONTAINER(lstm-2-input-cont) CHANNEL('CHAN')

FROM(lstm-2-input-record)

END-EXEC.

** Set the output class for MODELOUT

EXEC CICS PUT CONTAINER('ALN_OUTPUT_CLASS') CHANNEL('CHAN')

CHAR FROM(D3MODOutWrapper)

END-EXEC.

** Call ALNSCORE for scoring

EXEC CICS LINK PROGRAM('ALNSCORE') CHANNEL('CHAN')

END-EXEC.

** Get the output data from MODELOUT

EXEC CICS GET CONTAINER('ALN_OUTPUT_DATA') CHANNEL('CHAN')

INTO(MODELOUT) END-EXEC.

** Get the output record from Xdata-cont

EXEC CICS GET CONTAINER(Xdata-cont) CHANNEL('CHAN')

INTO(D3MODO01-Xdata) END-EXEC.

PERFORM UNTIL I=4

DISPLAY 'Xdata :' I

DISPLAY Xdata(I)

ADD 1 TO I

END-PERFORM.

EXEC CICS RETURN END-EXEC.

STOP RUN.

Calling the Machine learning scoring engine with Java

WMLz provides a calling interface through a known Java class, com.ibm.ml.scoring.online.service.api.NativeAPIs, which offers a native method that Java applications running in Liberty servers can use to call the WMLz scoring service directly.

This interface applies only to a Liberty server in which the WMLz scoring service is deployed using Option 2: Configure a scoring service to run in an external WLP server.

Here we followed the guide to deploy a scoring service in an existing CICS Liberty server and deployed the models to this scoring service. We use a Java application running in this CICS Liberty server to call scoring through the native Java API. For general guidance on using this interface, refer to the corresponding WMLz documentation.

Here we use two use cases, one with a SparkML model and one with an ONNX model, to show how a Java application can call the WMLz scoring service through this interface.

1. paysim_model_decision_tree_CICS is an online SparkML model and its schema is as below:

The following is a Java code example for this model. You can refer to the code comments for details of what each step does:

ClassLoader originalClassLoader = Thread.currentThread().getContextClassLoader();

//the following steps aim to get the class loader and get the instance of the class

Class cl = Class.forName("com.ibm.ml.scoring.online.service.api.NativeAPIs");

Thread.currentThread().setContextClassLoader(cl.getClassLoader());

Object obj = cl.newInstance();

MlResult2 resultFinal = new MlResult2();

//set the input

setInput("amount", 123);

setInput("newBalanceOrig", 21);

setInput("oldBalanceDest", 2);

setInput("oldBalanceOrig", 23);

setInput("type", "CASH_IN");

//Run the prediction using the deployed model

Method method = cl.getMethod("doScore", String.class, Map.class);

//obj is the new instance created in the steps above, "50baf317-39fe-47bf-b0b1-ae0e8bfde8e3" is the deployment ID of the model

//input depends on the model, different models need different inputs

Map<String, Object> result = (Map<String, Object>) method.invoke(obj, "50baf317-39fe-47bf-b0b1-ae0e8bfde8e3", input);

resultFinal.setPredictionResult(result.get("prediction"));

resultFinal.setProbabilityResult(result.get("probability"));

return resultFinal;

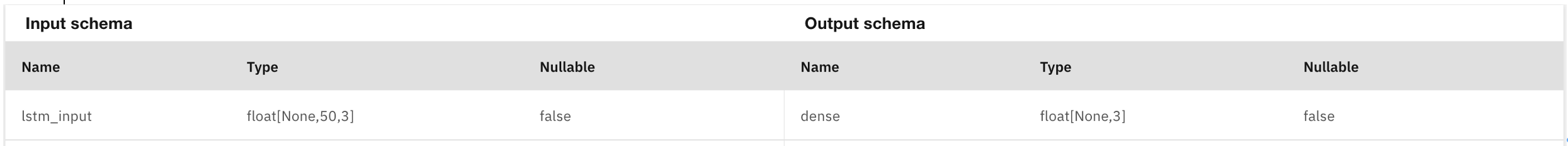

2. Cpu_Usage_prediction model is an online ONNX model and its schema is as below:

Calling a dynamic-input model in ONNX format is similar to the example above, but pay attention to the input format when you prepare the input. As shown in the figure, the model requires input of shape [None,50,3]. Therefore, the value for lstm_input in the input map should have three dimensions. Alternatively, you can flatten the input into a one-dimensional array.

Here is an example of calling a model that takes a three-dimensional input:

ClassLoader originalClassLoader = Thread.currentThread().getContextClassLoader();

//the following steps aim to get the class loader and get the instance of the class

Class cl = Class.forName("com.ibm.ml.scoring.online.service.api.NativeAPIs");

Thread.currentThread().setContextClassLoader(cl.getClassLoader());

Object obj = cl.newInstance();

MlResult resultFinal = new MlResult();

//Set up a three-dimensional input as the model requires; threeDimensionalArray should be a [none, 50, 3] array here

setInput("lstm_input", threeDimensionalArray);

//Run the prediction using the deployed model

Method method = cl.getMethod("doScore", String.class, Map.class);

//obj is the new instance created in the steps above, "ebd0533e-44db-4224-b2e2-47011f73b2d4" is the deployment ID of the model

//input depends on the model, different models need different inputs

Map<String, Object> result = (Map<String, Object>) method.invoke(obj, "ebd0533e-44db-4224-b2e2-47011f73b2d4", input);

resultFinal.setDenseResult(result.get("dense"));

return resultFinal;

The input in the code above is three-dimensional. You can also use a one-dimensional input; you only need to adjust the input format. The following example is very similar to the previous code snippet. The key difference is that it uses a one-dimensional array (flattened from the three-dimensional array) instead of the [none][50][3] three-dimensional array.

ClassLoader originalClassLoader = Thread.currentThread().getContextClassLoader();

Class cl = Class.forName("com.ibm.ml.scoring.online.service.api.NativeAPIs");

Thread.currentThread().setContextClassLoader(cl.getClassLoader());

Object obj = cl.newInstance();

MlResult resultFinal = new MlResult();

//The oneDimensionalArray here is a one-dimensional array flattened from a three-dimensional array

setInput("lstm_input", oneDimensionalArray);

Method method = cl.getMethod("doScore", String.class, Map.class);

Map<String, Object> result = (Map<String, Object>) method.invoke(obj, "ebd0533e-44db-4224-b2e2-47011f73b2d4", input);

resultFinal.setDenseResult(result.get("dense"));

return resultFinal;
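
The flattening step itself is language independent. The following sketch, written in Python for brevity, flattens a nested [n][50][3] input into a one-dimensional list and shows where each element ends up. It assumes row-major order (the last dimension varies fastest), which is an assumption here and should be verified against your model; the flatten helper is illustrative and not part of WMLz.

```python
def flatten(nested):
    """Flatten arbitrarily nested lists into one list, in row-major order."""
    flat = []
    for item in nested:
        if isinstance(item, list):
            flat.extend(flatten(item))
        else:
            flat.append(item)
    return flat


# A small [n=2][50][3] input whose values encode their own flat position.
n, steps, features = 2, 50, 3
three_d = [[[(i * steps + j) * features + k for k in range(features)]
            for j in range(steps)] for i in range(n)]
one_d = flatten(three_d)

# Element [i][j][k] lands at index (i * 50 + j) * 3 + k.
print(len(one_d), one_d[(1 * steps + 7) * features + 2] == three_d[1][7][2])
# prints 300 True
```

The same index arithmetic applies when you fill a one-dimensional Java array from a three-dimensional one.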

You can make the input one-dimensional or multidimensional according to your needs; this does not affect the prediction results. The input in the code above only demonstrates the input format. However, it is important to think carefully about the input when you build your own use case. For example, you may have data that is initially plain text and describes bank accounts, such as { name : "John Doe", accountNum : "12345", balance : "67.89" }. Depending on your use case and model, the model may require the input data in a particular form, such as [0.0, 0.1, 0.0, 0.1, 0.237869, 0.147862]. In this case, you need extra steps to transform your original data into the format that the model requires. You would perform those steps just before you send the input to the model for scoring, as in the examples above.
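
As a purely hypothetical illustration of such a transformation step, the sketch below turns the bank account record into a short list of floats. The encoding (a scaled balance and a flag for the account number), the max_balance value, and the function name to_features are all invented for this example; a real model defines its own required features and scaling.

```python
def to_features(record, max_balance=1000.0):
    """Turn a raw account record into a list of floats (made-up encoding)."""
    balance = float(record['balance'])
    # Clamp the balance to [0, max_balance] and scale it into [0, 1].
    scaled = min(max(balance, 0.0), max_balance) / max_balance
    # Encode whether the record carries a non-empty account number.
    has_account = 1.0 if record.get('accountNum') else 0.0
    return [scaled, has_account]


record = {'name': 'John Doe', 'accountNum': '12345', 'balance': '67.89'}
features = to_features(record)
print(features)
```

The resulting list would then be placed in the input map under the field name that the model schema expects.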

In summary, you can choose the interface that best fits your application. The CICS LINK and Java API interfaces require, respectively, the CICS-integrated scoring service and a scoring service configured to run in an external WLP server. However, this requirement does not limit which models are accessible: applications can access models deployed on other scoring services for inference by providing the deployment ID.

Authors:

Hui Wang(cdlwhui@cn.ibm.com)

Xin Xin Dong(xxdongbj@cn.ibm.com)

Xi Zhuo Zhang(Xi.Zhuo.Zhang@ibm.com)